The YCB-Slide dataset comprises DIGIT sliding interactions on YCB objects. We envision that it can contribute to efforts in tactile localization, mapping, object understanding, and learning dynamics models. We provide access to DIGIT images, sensor poses, RGB video feed, ground-truth mesh models, and ground-truth heightmaps + contact masks (simulation only). This dataset is supplementary to the MidasTouch paper, a CoRL 2022 submission.

The recommended way to download the YCB-Slide dataset is through download_dataset.sh, which requires gdown (run pip install gdown first):

conda env create -f environment.yml

conda activate ycb_slide

pip install -e .

chmod +x download_dataset.sh

./download_dataset.sh # requires gdown

Or, you could download object-specific data here.

# Visualize trajectory

python visualize_trajectory.py --data_path dataset/real/035_power_drill/dataset_0 --object 035_power_drill --real # real dataset

python visualize_trajectory.py --data_path dataset/sim/035_power_drill/00 --object 035_power_drill # sim dataset

# Visualize tactile data

python visualize_tactile.py --data_path dataset/real/035_power_drill/dataset_0 --object 035_power_drill --real # real dataset

python visualize_tactile.py --data_path dataset/sim/035_power_drill/00 --object 035_power_drill # sim dataset

Plug in your DIGIT (and, optionally, a webcam) and record your own trajectories:

python record_data_expt.py --duration 60 --webcam_port 2 --object 035_power_drill # record DIGIT images and webcam

You can also timesync and align your data with these scripts:

python timesync_digit.py --digit_file dataset/real/035_power_drill/dataset_0/digit_data.npy --tracking_file dataset/real/035_power_drill/035_power_drill.csv --bodies "DIGIT 035_power_drill" # timesync optitrack and DIGIT data

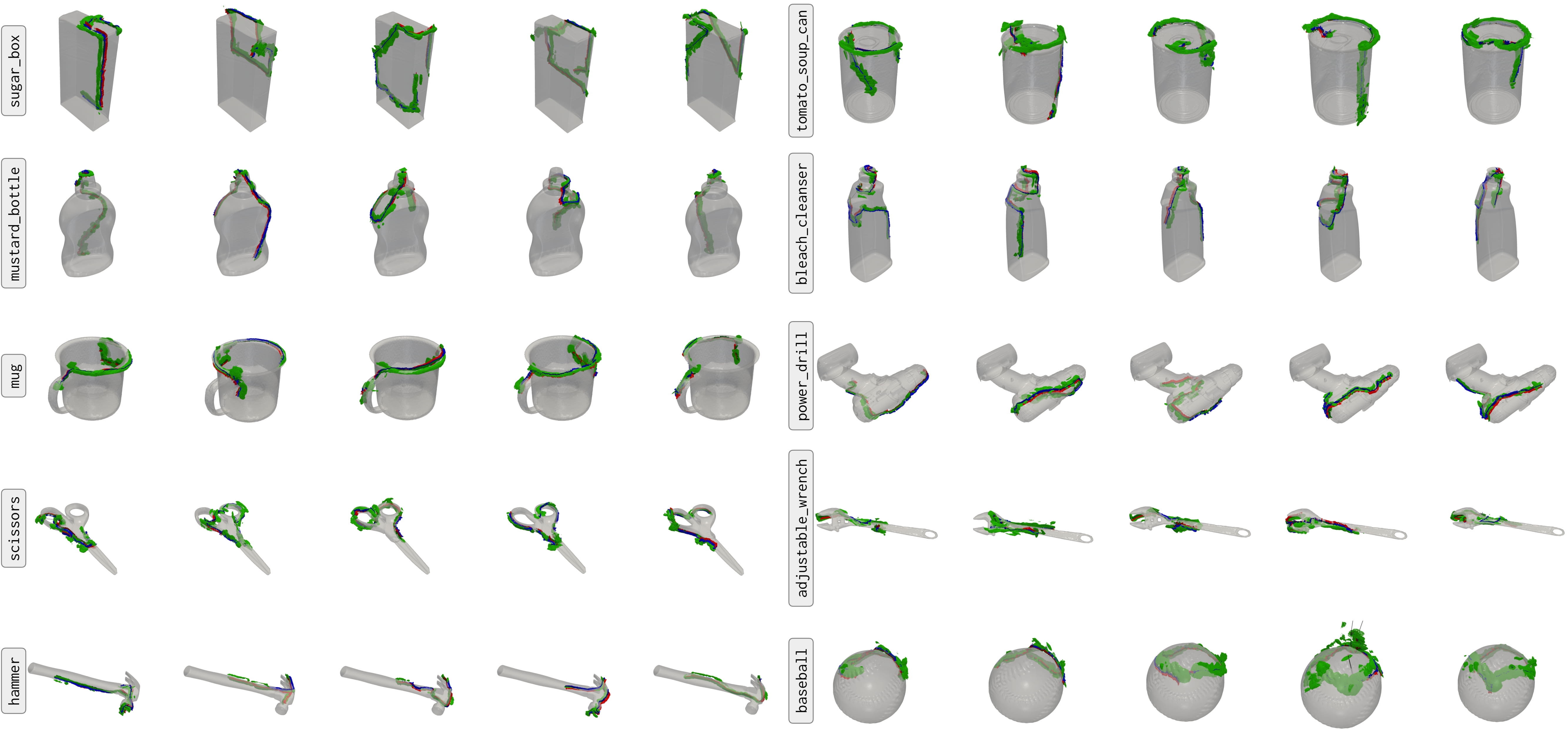

python align_data.py --data_path dataset/real/035_power_drill --object 035_power_drill # manual alignment fine-tuning

YCB objects: We select 10 YCB objects with diverse geometries for our tests: sugar_box, tomato_soup_can, mustard_bottle, bleach_cleanser, mug, power_drill, scissors, adjustable_wrench, hammer, and baseball. You can download the ground-truth meshes here.

Simulation: We simulate sliding across the objects using TACTO, mimicking realistic interaction sequences. The pose sequences are geodesic paths of fixed length L = 0.5 m, connecting random waypoints on the mesh. We corrupt sensor poses with zero-mean Gaussian noise.
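
The pose corruption described above can be sketched as follows. This is a minimal illustration, not the code used to generate the dataset: the position-plus-quaternion pose representation, the helper names, and the noise magnitudes (1 mm, 1 degree) are all assumptions chosen for the example.

```python
import math
import random


def quat_multiply(q1, q2):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    w1, x1, y1, z1 = q1
    w2, x2, y2, z2 = q2
    return (
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    )


def quat_from_rotvec(rx, ry, rz):
    """Convert a rotation vector (axis * angle, in radians) to a unit quaternion."""
    angle = math.sqrt(rx * rx + ry * ry + rz * rz)
    if angle < 1e-12:
        return (1.0, 0.0, 0.0, 0.0)
    s = math.sin(angle / 2.0) / angle
    return (math.cos(angle / 2.0), rx * s, ry * s, rz * s)


def corrupt_pose(position, quaternion, sigma_trans_m, sigma_rot_rad, rng):
    """Add zero-mean Gaussian noise to a pose (hypothetical helper).

    Translation noise is added per axis; rotation noise is sampled as a small
    rotation vector and composed with the original orientation.
    """
    noisy_position = tuple(p + rng.gauss(0.0, sigma_trans_m) for p in position)
    rotvec = [rng.gauss(0.0, sigma_rot_rad) for _ in range(3)]
    noisy_quaternion = quat_multiply(quaternion, quat_from_rotvec(*rotvec))
    return noisy_position, noisy_quaternion


rng = random.Random(0)
clean_position = (0.1, 0.2, 0.3)
clean_quaternion = (1.0, 0.0, 0.0, 0.0)
# Assumed noise levels, for illustration only.
noisy_position, noisy_quaternion = corrupt_pose(
    clean_position, clean_quaternion, 1e-3, math.radians(1.0), rng
)
```

Sampling the rotation noise as a rotation vector and composing it with the original orientation keeps the result a valid unit quaternion, which adding noise directly to quaternion components would not.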

Real-world: We perform sliding experiments through handheld operation of the DIGIT. We keep each YCB object stationary with a heavy-duty bench vise, slide the sensor along its surface, and record 60-second trajectories at 30 Hz. We use an OptiTrack system for timesynced sensor poses (synced_data.npy), with 8 cameras tracking the reflective markers. We affix six markers on the DIGIT and four on each test object. The canonical object pose is adjusted to agree with the ground-truth mesh models. Minor misalignments of sensor poses are rectified by postprocessing (alignment.npy). We record five logs per object, for a total of 50 sliding interactions.
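
To illustrate what timesyncing two sensor streams involves, here is a minimal sketch of nearest-timestamp matching. It is not the logic of timesync_digit.py, which may work differently; the function name and the 120 Hz motion-capture rate are assumptions for the example. Only the 60-second duration and 30 Hz tactile rate come from the description above.

```python
from bisect import bisect_left


def nearest_indices(query_times, reference_times):
    """For each query timestamp, return the index of the closest reference timestamp.

    reference_times must be sorted in ascending order.
    """
    indices = []
    for t in query_times:
        i = bisect_left(reference_times, t)
        if i == 0:
            indices.append(0)
        elif i == len(reference_times):
            indices.append(len(reference_times) - 1)
        else:
            before, after = reference_times[i - 1], reference_times[i]
            # Pick whichever neighbour is closer; ties go to the earlier sample.
            indices.append(i if after - t < t - before else i - 1)
    return indices


# A 60 s log at 30 Hz yields 60 * 30 = 1800 tactile frames.
digit_times = [k / 30.0 for k in range(60 * 30)]
# Hypothetical 120 Hz motion-capture stream covering the same minute.
mocap_times = [k / 120.0 for k in range(60 * 120)]

# matches[k] is the motion-capture sample to pair with tactile frame k.
matches = nearest_indices(digit_times, mocap_times)
```

In practice the two clocks also need a common time origin before matching; that offset estimation is outside the scope of this sketch.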

| Object | Sim data | Real data | Object | Sim data | Real data |
|---|---|---|---|---|---|
| 004_sugar_box | [100.6 MB] | [321.9 MB] | 035_power_drill | [111.3 MB] | [339.1 MB] |
| 005_tomato_soup_can | [147.9 MB] | [327.4 MB] | 037_scissors | [109 MB] | [320 MB] |
| 006_mustard_bottle | [102.7 MB] | [319.4 MB] | 042_adjustable_wrench | [147.7 MB] | [326.9 MB] |
| 021_bleach_cleanser | [86.5 MB] | [318.5 MB] | 048_hammer | [102 MB] | [320.9 MB] |
| 025_mug | [107 MB] | [325.5 MB] | 055_baseball | [153.4 MB] | [331.1 MB] |
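
For planning disk space, the per-object sizes in the table can be summed with a short script. The numbers below are copied from the table; the variable names are illustrative.

```python
# Download sizes in MB, copied from the table above: (sim, real) per object.
sizes_mb = {
    "004_sugar_box": (100.6, 321.9),
    "005_tomato_soup_can": (147.9, 327.4),
    "006_mustard_bottle": (102.7, 319.4),
    "021_bleach_cleanser": (86.5, 318.5),
    "025_mug": (107.0, 325.5),
    "035_power_drill": (111.3, 339.1),
    "037_scissors": (109.0, 320.0),
    "042_adjustable_wrench": (147.7, 326.9),
    "048_hammer": (102.0, 320.9),
    "055_baseball": (153.4, 331.1),
}

sim_total = sum(sim for sim, _ in sizes_mb.values())
real_total = sum(real for _, real in sizes_mb.values())
total = sim_total + real_total

print(f"sim: {sim_total:.1f} MB, real: {real_total:.1f} MB, total: {total:.1f} MB")
```

The listed downloads add up to roughly 1.2 GB of simulated data and 3.3 GB of real data, about 4.4 GB in total.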

sim

├── object_0 # eg: 004_sugar_box

│ ├── 00

│ │ ├── gt_contactmasks # ground-truth contact masks

│ │ │ ├── 0.jpg

│ │ │ └── ...

│ │ ├── gt_heightmaps # ground-truth depth maps

│ │ │ ├── 0.jpg

│ │ │ └── ...

│ │ ├── tactile_images # RGB DIGIT images

│ │ │ ├── 0.jpg

│ │ │ └── ...

│ │ ├── tactile_data_noisy.png # 3-D viz w/ noisy poses

│ │ ├── tactile_data.png # 3-D viz

│ │ └── tactile_data.pkl # pose information

│ ├── 01

│ └── ...

├── object_1

└── ...

real

├── object_0 # eg: 004_sugar_box

│ ├── dataset_0

│ │ ├── frames # RGB DIGIT images

│ │ │ ├── frame_0000000.jpg

│ │ │ ├── frame_0000001.jpg

│ │ │ └── ...

│ │ ├── webcam_frames # RGB webcam images

│ │ │ ├── frame_0000000.jpg

│ │ │ ├── frame_0000001.jpg

│ │ │ └── ...

│ │ ├── digit_data.npy # pose information

│ │ ├── frames.mp4 # RGB DIGIT video

│ │ ├── synced_data.npy # timesynced image/pose data

│ │ ├── tactile_data.png # 3-D viz

│ │ └── 3d_rigid_bodies.pdf # pose viz

│ ├── dataset_1

│ ├── ...

│ ├── object_0.csv # raw optitrack data

│ ├── alignment.npy # adjustment tf matrix

│ └── tactile_data.png # 3-D viz

├── object_1

└── ...

This dataset is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License, with the accompanying code licensed under the MIT License.
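
The sim and real directory layouts shown above can be traversed with a few lines of Python. This is a sketch under the assumption that the dataset is unpacked into a dataset/ folder, as in the visualization commands; list_trajectories is a hypothetical helper, not part of the released code.

```python
from pathlib import Path


def list_trajectories(dataset_root, split):
    """Map each object name to its sorted trajectory folders for 'sim' or 'real'."""
    split_dir = Path(dataset_root) / split
    if not split_dir.is_dir():
        return {}
    trajectories = {}
    for object_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
        # Sim logs are numbered folders (00, 01, ...);
        # real logs are named dataset_0, dataset_1, ...
        logs = sorted(
            p
            for p in object_dir.iterdir()
            if p.is_dir()
            and (p.name.isdigit() if split == "sim" else p.name.startswith("dataset_"))
        )
        trajectories[object_dir.name] = logs
    return trajectories


if __name__ == "__main__":
    for split in ("sim", "real"):
        for name, logs in list_trajectories("dataset", split).items():
            print(split, name, [log.name for log in logs])
```

Each returned path can then be passed as --data_path to the visualization scripts.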

If you use the YCB-Slide dataset in your research, please cite:

@inproceedings{suresh2022midastouch,

title={{M}idas{T}ouch: {M}onte-{C}arlo inference over distributions across sliding touch},

author={Suresh, Sudharshan and Si, Zilin and Anderson, Stuart and Kaess, Michael and Mukadam, Mustafa},

booktitle = {Proc. Conf. on Robot Learning, CoRL},

address = {Auckland, NZ},

month = dec,

year = {2022}

}