This codebase is currently being refactored, so the examples cannot be run at the moment. I apologize for the inconvenience and am working on a better codebase.

Thunder is a deep reinforcement learning package (platform). It still has many shortcomings and will improve gradually as I learn more.

More importantly, this project welcomes everyone's ideas, improvements, and contributions.

My graduation project, a vision-based robotic grasping task using deep reinforcement learning, also uses this platform. Due to time constraints and hardware limits, it did not achieve good results on the visual-servo-based grasping task without any prior knowledge.

The graduation project uses the SAC (soft actor-critic) algorithm, as implemented in Thunder, to train a CNN model. Because of hardware limitations, the default CNN architecture has a small number of parameters.

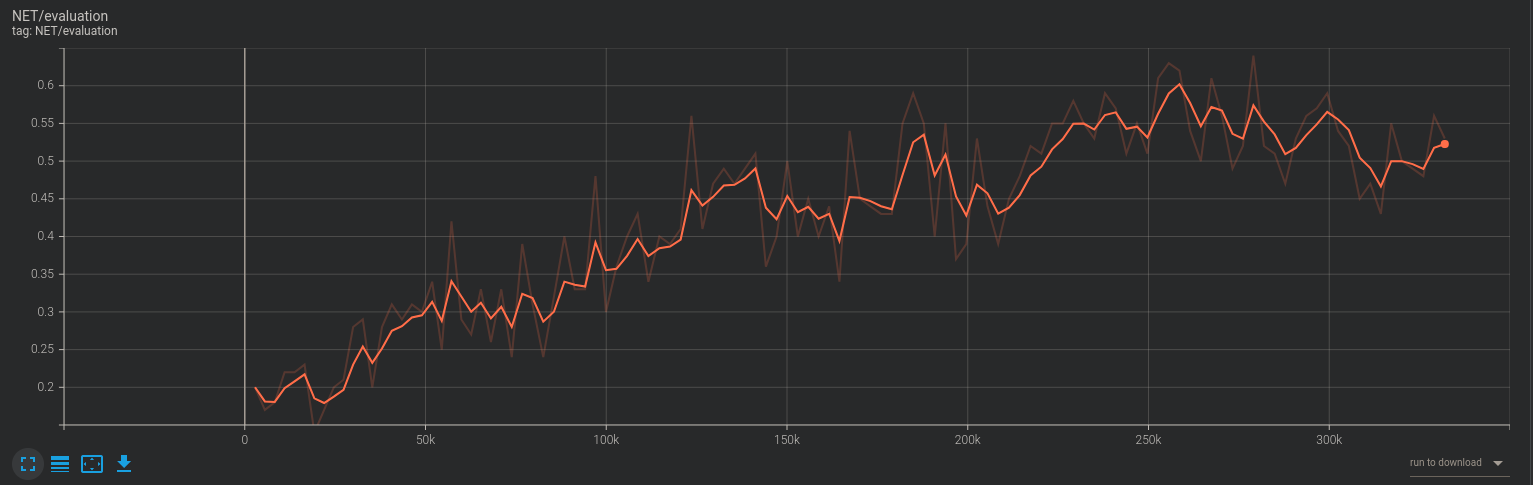

After training for 300,000 steps, the model achieved a grasp rate of 64% on non-visual-servo grasping tasks using the Jaco robotic arm. The following curve shows how its grasp rate changed during training.

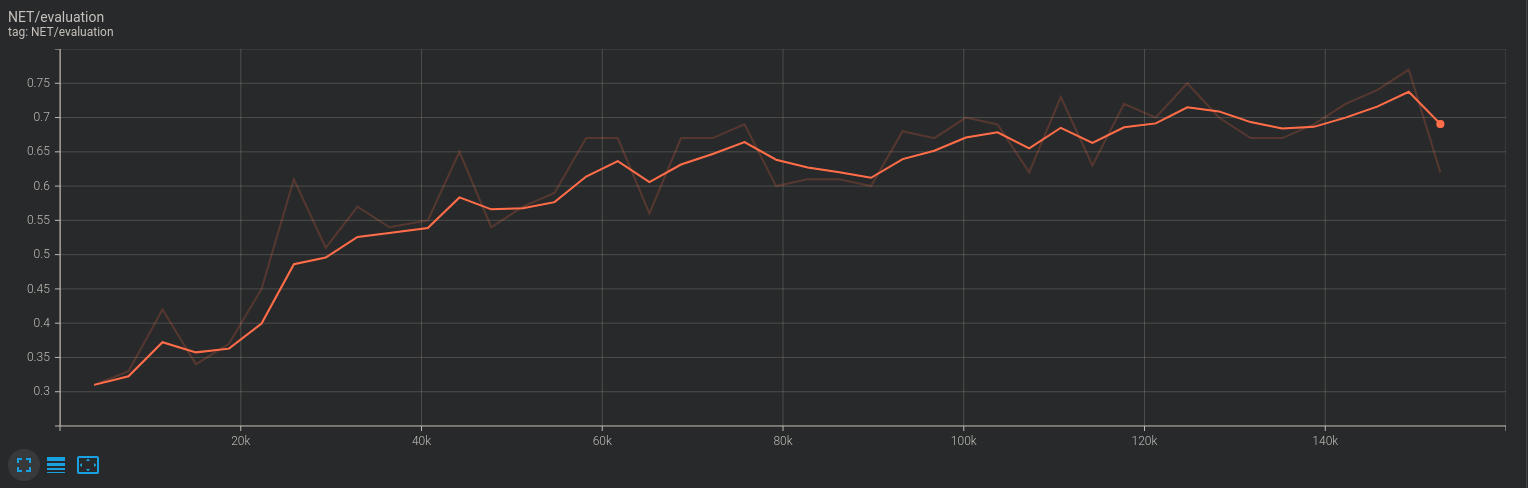
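
A grasp-rate curve of this kind can be produced by taking a moving average over per-episode success flags. The snippet below is only a minimal sketch of that idea and is not taken from Thunder: the function name `grasp_rate_curve` and the `window` size are assumptions made for illustration, and the smoothing actually used for the plots may differ.

```python
from collections import deque
from typing import Iterable, List


def grasp_rate_curve(successes: Iterable[bool], window: int = 100) -> List[float]:
    """Return the moving-average grasp rate after each episode.

    `successes` holds one flag per episode (True if the grasp succeeded).
    `window` is a hypothetical smoothing length, not a Thunder setting.
    """
    if window <= 0:
        raise ValueError("window must be positive")

    recent = deque(maxlen=window)  # outcomes of the last `window` episodes
    hits = 0                       # number of successes currently in `recent`
    curve = []
    for ok in successes:
        if len(recent) == window:
            # The oldest outcome is about to be evicted by the append below.
            hits -= recent[0]
        recent.append(int(bool(ok)))
        hits += recent[-1]
        curve.append(hits / len(recent))
    return curve


if __name__ == "__main__":
    # Toy outcomes, not real training data.
    print(grasp_rate_curve([True, False, True, True], window=2))
```

A larger window gives a smoother curve but reacts more slowly to changes in the policy.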

With the Kuka robotic arm, however, the model achieved a grasp rate of 77% after only 160,000 training steps. The following curve shows how its grasp rate changed during training.

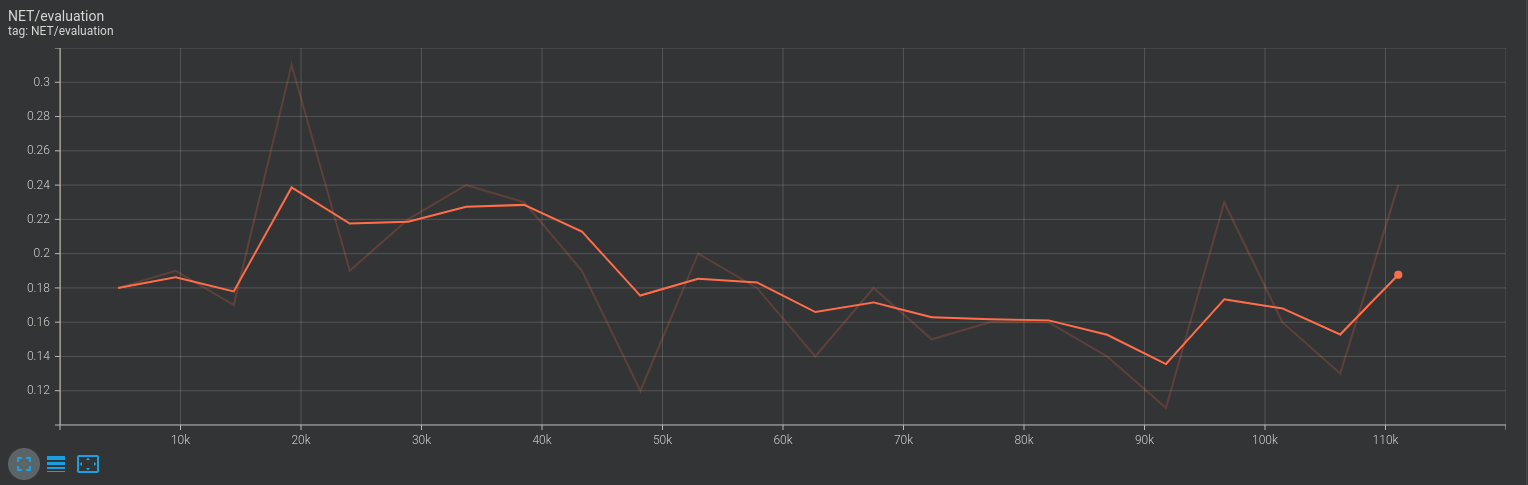

For this small model, the complete visual servo grasping problem seems too hard, and the model fails to converge. After reducing the number of controls the model has to learn, however, it can still learn something. The following curve shows how its grasp rate changed on the visual servo grasping task.