This repository contains a ROS workspace for controlling a 7 DOF robot (Panda by Franka Emika) or a 2 DOF robot (RRbot) with the movements of the operator's body, captured by a webcam.

Short_demo.mp4

Short_demo_rrbot.mp4

This project was developed for the Smart Robotics course taught at the University of Modena and Reggio Emilia (UNIMORE) in the second semester of the 2021/2022 academic year.

This project aims to demonstrate a proof of concept that combines computer vision algorithms with robot simulation and control, using Python, OpenCV, Mediapipe, and ROS + Gazebo.

Remote robot control can be useful in dangerous environments, for medical assistance, and for simulation/testing. Vision-based control of anthropomorphic robots could offer a simpler way to carry out remote operations.

- Benedetta Fabrizi (LinkedIn - GitHub)

- Emanuele Bianchi (LinkedIn - GitHub)

- Ettore Candeloro (LinkedIn - GitHub)

- Gabriele Rosati (LinkedIn - GitHub)

NOTE: Every folder contains a README.md file with all the instructions for installing ROS and correctly setting up the workspaces.

- In the Demo_1_RRbot_Control folder, we implemented a first version of our project with a simpler 2 DOF robot.

- In the Demo_2_Panda_Control folder, we implemented a second version of our project with a more complex robot, controlled with both the hand aperture and the pose angles.

- The project_presentation folder contains a PowerPoint file with a brief description of the overall work.

- The images and videos folders contain all the media used in this repository and in the PowerPoint presentation.

- The operator's video stream is captured with a webcam/camera.

- Each frame is passed to vision_arm_control in the ROS workspace.

- The vision script detects the hand and pose keypoints, then computes the hand aperture and pose angles.

- The hand aperture and angles are sent to the Panda robot in the Gazebo simulation through a Pub/Sub ROS node.

- The robot is simulated and controlled in near real time thanks to the Panda Simulator Interfaces packages.

For the vision part of the project, we used the OpenCV and Mediapipe libraries. We created the HandDetector and PoseDetector modules to extract the hand aperture and pose angles from a video stream. The main.py script captures the video footage and uses a publisher/subscriber to interact with the robot.

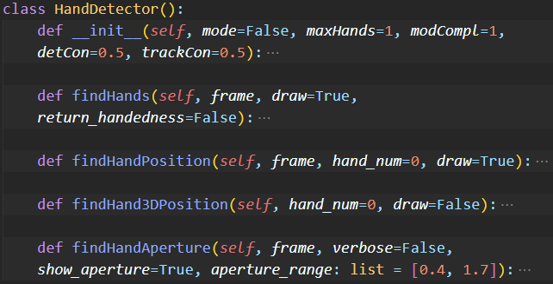

The hand detector class computes the hand aperture through the following steps:

- The four fingertips (thumb excluded) are located, along with the base of the hand (the midpoint between the wrist and the thumb base).

- The median point of the four fingertips is computed.

- The L2 (Euclidean) distance between the hand base and the median fingertip point is computed.

- The distance is then normalized by the palm size and remapped to a value between 0 and 100.
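The steps above can be sketched in Python as follows. This is a minimal sketch, not the repository's HandDetector: it assumes MediaPipe Hands landmark indexing (0 = wrist, 1 = thumb base, 8/12/16/20 = index/middle/ring/pinky tips, 9 = middle-finger knuckle), takes the mean of the four fingertips as their central point, measures the palm size as the wrist-to-middle-knuckle distance, and uses hypothetical calibration bounds `min_ratio`/`max_ratio` for the remap to 0-100.

```python
import numpy as np

# MediaPipe Hands landmark indices (21-landmark hand model).
WRIST, THUMB_CMC, MIDDLE_MCP = 0, 1, 9
FINGERTIPS = (8, 12, 16, 20)  # index, middle, ring, pinky tips (thumb excluded)


def hand_aperture(landmarks, min_ratio=0.8, max_ratio=2.0):
    """Return the hand aperture remapped to [0, 100].

    landmarks: array-like of shape (21, 2) or (21, 3) with keypoint coordinates.
    min_ratio / max_ratio: hypothetical calibration bounds for the normalized
    distance (closed fist vs. fully open hand).
    """
    pts = np.asarray(landmarks, dtype=float)
    # Base of the hand: midpoint between the wrist and the thumb base.
    base = (pts[WRIST] + pts[THUMB_CMC]) / 2.0
    # Central point of the four fingertips (the mean is an assumption here).
    tips_center = pts[list(FINGERTIPS)].mean(axis=0)
    # L2 (Euclidean) distance between the hand base and the fingertip center.
    dist = np.linalg.norm(tips_center - base)
    # Normalize by palm size (assumed: wrist to middle-finger knuckle distance).
    palm = np.linalg.norm(pts[MIDDLE_MCP] - pts[WRIST])
    ratio = dist / palm
    # Linear remap of [min_ratio, max_ratio] onto [0, 100], clamped.
    scaled = (ratio - min_ratio) / (max_ratio - min_ratio) * 100.0
    return float(np.clip(scaled, 0.0, 100.0))
```

Normalizing by the palm size makes the value roughly independent of how far the hand is from the camera; the clamp keeps noisy detections inside the 0-100 range.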

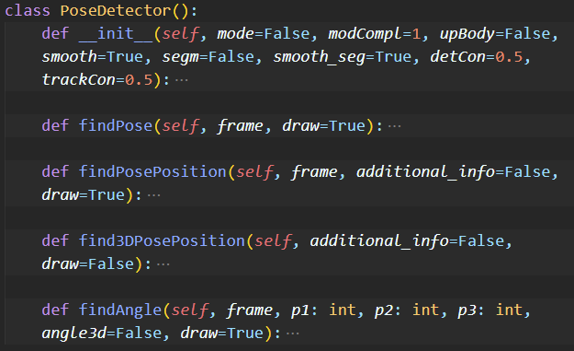

The pose detector class is composed of the following methods:

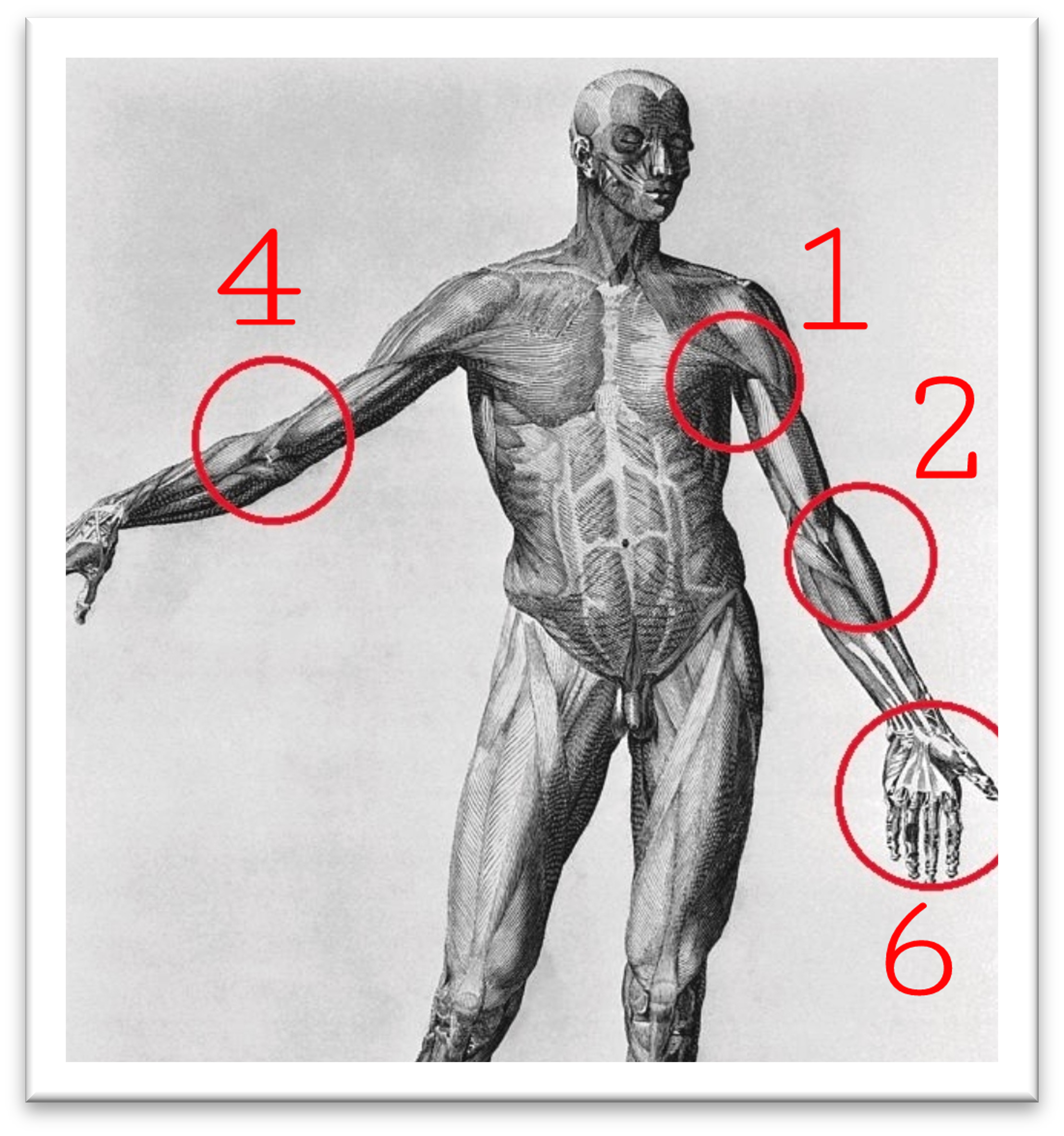

To extract the angle formed by a set of 3 keypoints (e.g., the elbow angle given points 12, 14 and 16), we use the properties of the scalar (dot) product between two vectors.

- We create two vectors with the same origin (the central keypoint, where we want to measure the angle).

- We use the following formula to compute the angle between those two vectors:

$\phi = \arccos\left(\frac{a \cdot b}{|a|\,|b|}\right)$

- With this method, we can use both 2D and 3D keypoints for more robustness.
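A minimal NumPy sketch of the arccos formula for three keypoints. The function name `joint_angle` is hypothetical, and clipping the cosine to [-1, 1] is an added safeguard against rounding errors, not something stated in the original.

```python
import numpy as np


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b, formed by keypoints a-b-c.

    Works for both 2D (x, y) and 3D (x, y, z) keypoints.
    """
    a, b, c = (np.asarray(p, dtype=float) for p in (a, b, c))
    # Two vectors sharing the same origin: the central keypoint b.
    v1, v2 = a - b, c - b
    cos_phi = np.dot(v1, v2) / (np.linalg.norm(v1) * np.linalg.norm(v2))
    # Clip to [-1, 1] so rounding errors cannot push arccos out of its domain.
    return float(np.degrees(np.arccos(np.clip(cos_phi, -1.0, 1.0))))
```

For example, assuming MediaPipe Pose indexing (12 = right shoulder, 14 = right elbow, 16 = right wrist), the right-elbow angle would be `joint_angle(kp[12], kp[14], kp[16])`, where `kp` is a hypothetical array of keypoint coordinates.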

For the robot simulation and control part of the project, we used ROS with Gazebo. We created ad hoc ROS nodes that publish and subscribe to various topics to control the RRbot, while for the Panda arm we leveraged the Panda Simulator package.

Both demos use a pub/sub mechanism, but in the Panda Control package the published message is a list of joint values.

Focusing on the second and final demo of this project, we move the robot using:

- The aperture of one hand for the sixth joint

- Right-elbow angle for the fourth joint

- Left-elbow angle for the second joint

- Left-shoulder angle for the first joint

The other 3 joints are kept fixed.
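A sketch of how these measurements could be assembled into the list of 7 joint values published for the Panda. The linear mappings, the input ranges (aperture 0-100, angles 0-180 degrees), and the values of the three fixed joints are assumptions for illustration, not the values used in Demo_2_Panda_Control; the joint limits are the nominal Panda limits from the Franka Emika documentation.

```python
import math

# Nominal Franka Panda joint position limits in radians: (lower, upper).
PANDA_LIMITS = [
    (-2.8973, 2.8973),
    (-1.7628, 1.7628),
    (-2.8973, 2.8973),
    (-3.0718, -0.0698),
    (-2.8973, 2.8973),
    (-0.0175, 3.7525),
    (-2.8973, 2.8973),
]

# Hypothetical values for the three fixed joints (3rd, 5th and 7th, 0-based).
FIXED = {2: 0.0, 4: 0.0, 6: math.pi / 4}


def remap(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamped."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)


def joint_command(aperture, right_elbow, left_elbow, left_shoulder):
    """Build the 7-element joint list from the operator's measurements.

    aperture: hand aperture in [0, 100]; angles: degrees in [0, 180].
    """
    q = [0.0] * 7
    q[0] = remap(left_shoulder, 0.0, 180.0, *PANDA_LIMITS[0])  # 1st joint
    q[1] = remap(left_elbow, 0.0, 180.0, *PANDA_LIMITS[1])     # 2nd joint
    q[3] = remap(right_elbow, 0.0, 180.0, *PANDA_LIMITS[3])    # 4th joint
    q[5] = remap(aperture, 0.0, 100.0, *PANDA_LIMITS[5])       # 6th joint
    for idx, val in FIXED.items():
        q[idx] = val
    return q
```

Clamping to the joint limits ensures that a noisy or out-of-range measurement never produces an unreachable command; in practice the input ranges would likely be narrowed to what the operator can comfortably reach.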

- Gesture complexity and usability: increase the set of possible gestures by adding different types of movements, and improve usability.

- Test the prototype on a high-end machine, then transfer it to a real robot.

- Run each node on a standalone machine, connected remotely to the master node.

- Improve the connectivity of the solution by exploiting cameras and devices that can be connected remotely.