Ángel Martínez-Tenor - 2016

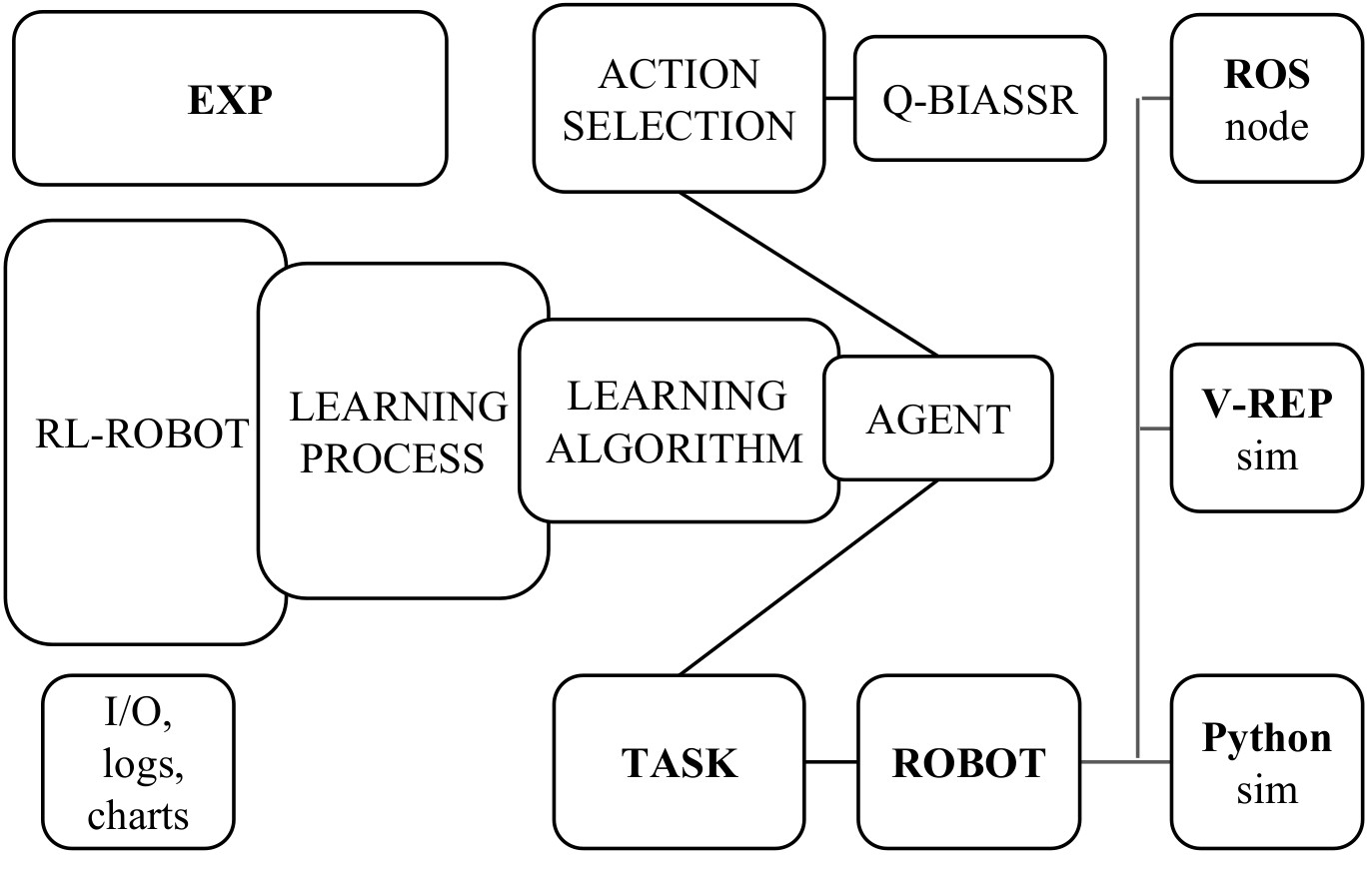

This repository provides a Reinforcement Learning framework in Python from the Machine Perception and Intelligent Robotics research group (MAPIR).

Reference: "Towards a common implementation of reinforcement learning for multiple robotics tasks", available as an arXiv preprint and on ScienceDirect.

Setup

- Create a Python environment and install the requirements, e.g. using conda:

conda create -n rlrobot python=3.10

conda activate rlrobot

pip install -r requirements.txt

# tkinter (if missing): sudo apt install python3-tk
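Before running an experiment, a quick sanity check can confirm that the interpreter is usable. The following is a minimal sketch, assuming only the Python version and the availability of tkinter matter; the packages in requirements.txt are not inspected, and check_environment is a hypothetical helper, not part of RL-ROBOT:

```python
import sys


def check_environment(min_version=(3, 10)):
    """Report whether this interpreter looks ready for RL-ROBOT.

    Hypothetical helper: 3.10 is the version used in the conda example above,
    not a documented hard requirement. requirements.txt is not checked.
    """
    version_ok = sys.version_info[:2] >= min_version
    try:
        import tkinter  # noqa: F401  (only importability is tested)
        tk_ok = True
    except ImportError:
        tk_ok = False
    return {"python_ok": version_ok, "tkinter_ok": tk_ok}


if __name__ == "__main__":
    status = check_environment()
    for name, ok in status.items():
        print(f"{name}: {'yes' if ok else 'NO'}")
    if not status["tkinter_ok"]:
        print("tkinter missing: try 'sudo apt install python3-tk'")
```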

Run

- Create run_custom_exp.py with the contents below and execute it:

python run_custom_exp.py

import exp

import rlrobot

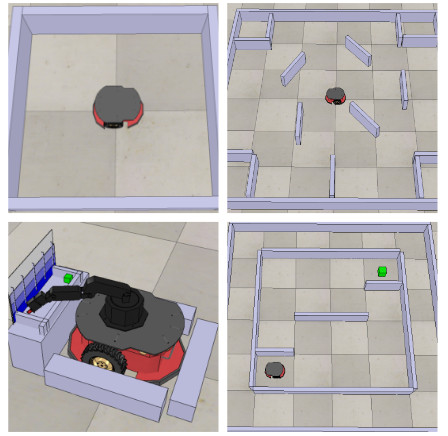

exp.ENVIRONMENT_TYPE = "MODEL" # "VREP" for V-REP simulation

exp.TASK_ID = "wander_1k"

exp.FILE_MODEL = exp.TASK_ID + "_model"

exp.ALGORITHM = "TOSL"

exp.ACTION_STRATEGY = "QBIASSR"

exp.N_REPETITIONS = 1

exp.N_EPISODES = 1

exp.N_STEPS = 60 * 60

exp.DISPLAY_STEP = 500

rlrobot.run()

- The full set of parameters is available in exp.py.
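Because the experiment is configured by assigning attributes on the exp module, a misspelled name (for example exp.N_EPISODE instead of exp.N_EPISODES) would silently create an unused attribute. A minimal sketch of a guard, assuming every valid parameter is already defined in exp.py; configure is a hypothetical helper, not part of RL-ROBOT, and a SimpleNamespace stands in for exp so the sketch runs on its own:

```python
import types


def configure(module, **overrides):
    """Set parameters on `module`, refusing names it does not already define.

    Hypothetical helper: all names are validated before any is assigned, so a
    typo leaves the configuration unchanged.
    """
    unknown = [name for name in overrides if not hasattr(module, name)]
    if unknown:
        raise AttributeError(f"unknown parameter(s): {', '.join(sorted(unknown))}")
    for name, value in overrides.items():
        setattr(module, name, value)
    return module


if __name__ == "__main__":
    # Stand-in for exp.py; with RL-ROBOT installed, pass the real module instead.
    exp = types.SimpleNamespace(N_EPISODES=1, N_STEPS=100, DISPLAY_STEP=50)
    configure(exp, N_EPISODES=3, N_STEPS=60 * 60)
    print(exp.N_EPISODES, exp.N_STEPS)  # 3 3600
    try:
        configure(exp, N_EPISODE=5)  # typo: missing "S"
    except AttributeError as err:
        print(err)  # unknown parameter(s): N_EPISODE
```

With RL-ROBOT installed, the same call would read configure(exp, TASK_ID="wander_1k", N_STEPS=60 * 60) after import exp.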

Tested on Ubuntu 14, 16, 18 and 20 (64-bit).

V-REP settings (tested with V-REP PRO EDU V3.3.2 / V3.5.0):

Use the default values of remoteApiConnections.txt:

portIndex1_port = 19997

portIndex1_debug = false

portIndex1_syncSimTrigger = true
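To confirm that remoteApiConnections.txt still holds these values, the file can be parsed and compared. A minimal sketch, assuming the file consists of "key = value" lines with "//" comments; parse_settings and check_remote_api are hypothetical helpers, and the values are compared case-insensitively:

```python
EXPECTED = {
    "portIndex1_port": "19997",
    "portIndex1_debug": "false",
    "portIndex1_syncSimTrigger": "true",
}


def parse_settings(text):
    """Parse 'key = value' lines, skipping blank lines and '//' comments."""
    settings = {}
    for line in text.splitlines():
        line = line.split("//", 1)[0].strip()  # drop comments (assumed '//' style)
        if "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        settings[key] = value
    return settings


def check_remote_api(text, expected=EXPECTED):
    """Return {key: (found, expected)} for every setting that differs or is missing."""
    found = parse_settings(text)
    return {
        key: (found.get(key), want)
        for key, want in expected.items()
        if (found.get(key) or "").lower() != want.lower()
    }


if __name__ == "__main__":
    # Inline sample so the sketch runs anywhere; read the real file in practice.
    sample = (
        "// remote API settings\n"
        "portIndex1_port = 19997\n"
        "portIndex1_debug = true\n"
        "portIndex1_syncSimTrigger = true\n"
    )
    print(check_remote_api(sample) or "all expected values present")
```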

Activate threaded rendering (recommended): in system/usrset.txt, set threadedRenderingDuringSimulation = 1
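The usrset.txt change can also be applied programmatically. A minimal sketch, assuming the same "key = value" layout with optional trailing "//" comments; enable_threaded_rendering is a hypothetical helper that only rewrites the number after "=", and appending the key when it is absent is an assumption about what V-REP accepts:

```python
import re

KEY = "threadedRenderingDuringSimulation"


def enable_threaded_rendering(text, value=1):
    """Return `text` with threadedRenderingDuringSimulation set to `value`.

    Only the integer after '=' is replaced, so a trailing '//' comment survives.
    If the key is absent, a new line is appended (assumption).
    """
    pattern = re.compile(rf"^(\s*{KEY}\s*=\s*)-?\d+", re.MULTILINE)
    new_text, count = pattern.subn(rf"\g<1>{value}", text)
    if count == 0:
        sep = "" if not text or text.endswith("\n") else "\n"
        new_text = f"{text}{sep}{KEY} = {value}\n"
    return new_text


if __name__ == "__main__":
    sample = "threadedRenderingDuringSimulation = 0 // example comment\n"
    print(enable_threaded_rendering(sample), end="")
```

Writing the result back to system/usrset.txt should be done while V-REP is closed, since the file is read at startup.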

Recommended simulation settings for V-REP scenes:

- Simulation step time: 50 ms (default)

- Real-Time Simulation: Enabled

- Multiplication factor: 3.00 (requires a CPU at least as fast as an Intel Core i3-3110M)
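These settings determine how long a run takes. A rough estimate, assuming one learning step corresponds to exactly one 50 ms simulation step and that V-REP actually sustains the 3.00 multiplication factor (neither is guaranteed; the real mapping is defined by RL-ROBOT's task code). run_duration is a hypothetical helper:

```python
def run_duration(n_steps, step_time_s=0.05, speedup=3.0):
    """Return (simulated_seconds, wall_clock_seconds) for n_steps.

    Assumptions: one learning step == one simulation step of step_time_s,
    and real-time mode runs exactly `speedup` times faster than real time.
    """
    if step_time_s <= 0 or speedup <= 0:
        raise ValueError("step time and speedup must be positive")
    simulated = n_steps * step_time_s
    return simulated, simulated / speedup


if __name__ == "__main__":
    n = 60 * 60  # N_STEPS from the example experiment
    sim, wall = run_duration(n)
    print(f"{n} steps: {sim:.1f} s simulated, about {wall:.1f} s wall clock")
```

Under these assumptions, the example experiment (N_STEPS = 3600) covers 180 s of simulated time in about one minute.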

Execute V-REP (./vrep.sh on Linux), then open a scene via File -> Open Scene -> <RL-ROBOT path>/vrep_scenes
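Before launching an experiment with exp.ENVIRONMENT_TYPE = "VREP", it can help to check that V-REP is listening on the remote API port configured above (19997). A minimal sketch, assuming V-REP runs on the same machine; vrep_port_open is a hypothetical helper that only opens and closes a plain TCP connection and does not speak the remote API protocol:

```python
import socket


def vrep_port_open(host="127.0.0.1", port=19997, timeout=1.0):
    """Return True if something accepts TCP connections on host:port.

    This only shows that a server is listening; it does not verify that the
    server is V-REP or that a scene is loaded.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    if vrep_port_open():
        print("Port 19997 is open: V-REP appears to be running")
    else:
        print("Nothing on port 19997: start V-REP and open a scene first")
```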