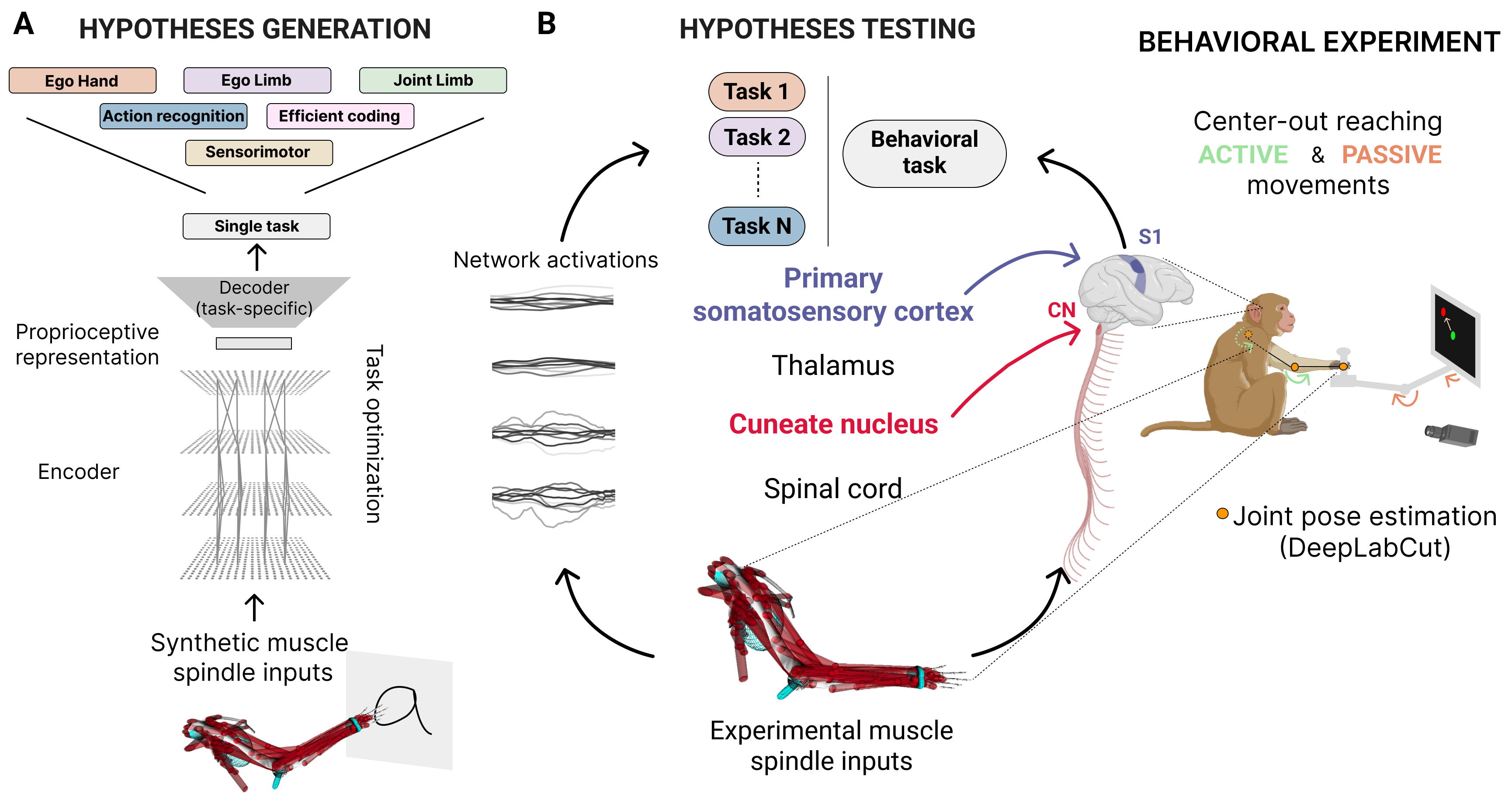

We created a normative framework to study the neural dynamics of proprioception. Using synthetic muscle spindle input inferred via musculoskeletal modeling, we optimized neural networks to solve 16 computational tasks, each representing a different hypothesis about the proprioceptive system. We contrasted these hypotheses by using the networks' internal representations to predict neural activity in proprioceptive brain areas (cuneate nucleus and primary somatosensory cortex) of primates performing a reaching task. Importantly, the models were not trained to predict the neural activity directly; they were linearly probed at test time. To compare the computational tasks fairly, we held the movement statistics and the neural network architectures fixed.
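As a rough illustration of what "linearly probed at test time" means, the sketch below fits a linear readout from frozen network activations to neural activity and scores it on held-out data. It is not the repository's implementation: the ridge penalty, the explained-variance metric, the function names and the synthetic stand-in data are all assumptions made for this example.

```python
import numpy as np


def fit_linear_probe(activations, neural, alpha=1.0):
    """Fit a ridge readout from frozen activations to neural activity.

    The network weights are never updated; only this linear map is learned.
    Ridge regularization is an assumption of this sketch.
    """
    # Append a bias column so the probe can fit a nonzero baseline rate.
    X = np.hstack([activations, np.ones((activations.shape[0], 1))])
    penalty = alpha * np.eye(X.shape[1])
    penalty[-1, -1] = 0.0  # leave the bias term unpenalized
    # Closed-form ridge solution: (X^T X + penalty)^-1 X^T Y
    return np.linalg.solve(X.T @ X + penalty, X.T @ neural)


def predict(activations, weights):
    """Apply a fitted probe to new activations."""
    X = np.hstack([activations, np.ones((activations.shape[0], 1))])
    return X @ weights


def explained_variance(y_true, y_pred):
    """Per-neuron explained variance on held-out samples."""
    residual_var = np.var(y_true - y_pred, axis=0)
    return 1.0 - residual_var / np.var(y_true, axis=0)


# Synthetic stand-ins (hypothetical shapes): 500 time bins, 20 units, 3 neurons.
rng = np.random.default_rng(0)
acts = rng.normal(size=(500, 20))
true_w = rng.normal(size=(20, 3))
neural = acts @ true_w + 0.1 * rng.normal(size=(500, 3))

train, test = slice(0, 400), slice(400, 500)
w = fit_linear_probe(acts[train], neural[train], alpha=1.0)
ev = explained_variance(neural[test], predict(acts[test], w))
print(ev)
```

Because only the readout is fitted, differences in held-out score between networks reflect differences in their learned representations rather than in the fitting procedure.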

We found that:

- Networks' internal representations developed through task optimization generalize from synthetic data to predict single-trial neural activity in proprioceptive brain areas, outperforming linear and data-driven models.

- Neural networks trained to predict limb position and velocity predict neural activity in both areas better than networks trained on other tasks, and neural predictability correlates with the network's task performance.

- Task-driven models predict neural activity better than untrained models for active movements but not for passive ones, suggesting there might be top-down modulation during goal-directed movements.

To learn more, see our manuscript: Task-driven neural network models predict neural dynamics of proprioception.

Here we share the code to reproduce the results. Please note that the code was developed and tested in Linux (see: Installation, software & requirements).

The code is organized into subtasks of the whole pipeline as follows:

- PCR-data-generation contains the code for creating the PCR dataset using an NHP musculoskeletal arm model (adapted from Sandbrink et al.)

- nn-training contains the code for training Temporal Convolutional Networks (TCNs) on the PCR dataset on different tasks (partially adapted from Sandbrink et al.)

- code contains the utility scripts for the training code and all the paths

- exp_data_processing contains the code to simulate proprioceptive inputs from kinematic experimental data

- rl-data-generation contains the code to create the dataset for training the TCNs on the joint torque regression task

- neural_prediction contains the code to use pre-trained networks to predict experimental NHP neural data

- paper_figures contains notebooks to generate the main figures of the paper

Instructions for using the code and installation are contained in the subfolders.

The dataset can be found on Zenodo. We provide 3 different links:

- Spindle datasets - Contains the muscle spindle datasets: pcr dataset and rl dataset

- Models checkpoints - Contains dataframe with hyperparameters for training and all model checkpoints

- Experimental data - Contains experimental data (primate data from Miller lab at Northwestern University), result dataframes, activations and predictions of best models for all tasks

Once you have downloaded the data, set ROOT_PATH in path_utils to the folder that contains it.
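A minimal sketch of what this edit might look like is shown below. Only the name ROOT_PATH comes from this README; the example folder and the helpers `data_path` and `check_root` are hypothetical, and the actual layout of path_utils may differ.

```python
import os

# Hypothetical location: replace with the folder holding the Zenodo downloads.
ROOT_PATH = os.path.expanduser("~/data/deep_proprio")


def data_path(*parts):
    """Build a path below ROOT_PATH (hypothetical helper, not from the repo)."""
    return os.path.join(ROOT_PATH, *parts)


def check_root(root=ROOT_PATH):
    """Return True if the configured folder exists on disk."""
    return os.path.isdir(root)


if not check_root():
    print("ROOT_PATH does not exist yet: " + ROOT_PATH)
```

Checking the folder once at import time tends to surface a misconfigured path earlier than a failed file read deep inside the pipeline.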

To reproduce the results, two environments are necessary:

- docker - For generating the PCR dataset, training the neural networks, and extracting activations

- conda environment - For performing the neural predictions

To install the anaconda environment:

conda env create -f environment_prediction.yml

Alternatively, create the environment manually:

conda create -n DeepProprio python=3.8

conda activate DeepProprio

pip install -r requirements.txt

To use the Docker container with OpenSim 3.3, see the docker_instruction.

We also provide an anaconda environment with TensorFlow 1.5.0:

conda env create -f environment.yml

We are grateful to the authors of DeepDraw, as our synthetic primate proprioceptive dataset and network models build on top of their work.

We acknowledge the following code repositories: DeepLabCut, Barlow-Twins-TF, OpenSim, scikit-learn, TensorFlow.

If you find this code or ideas presented in our work useful, please cite:

Task-driven neural network models predict neural dynamics of proprioception, Cell (2024) by Alessandro Marin Vargas*, Axel Bisi*, Alberto S. Chiappa, Christopher Versteeg, Lee E. Miller, Alexander Mathis.

@article{vargas2024task,

title={Task-driven neural network models predict neural dynamics of proprioception},

author={{Marin Vargas}, Alessandro and Bisi, Axel and Chiappa, Alberto S and Versteeg, Chris and Miller, Lee E and Mathis, Alexander},

journal={Cell},

year={2024},

publisher={Elsevier}

}