This is the official PyTorch implementation for training and testing depth estimation models using the method described in

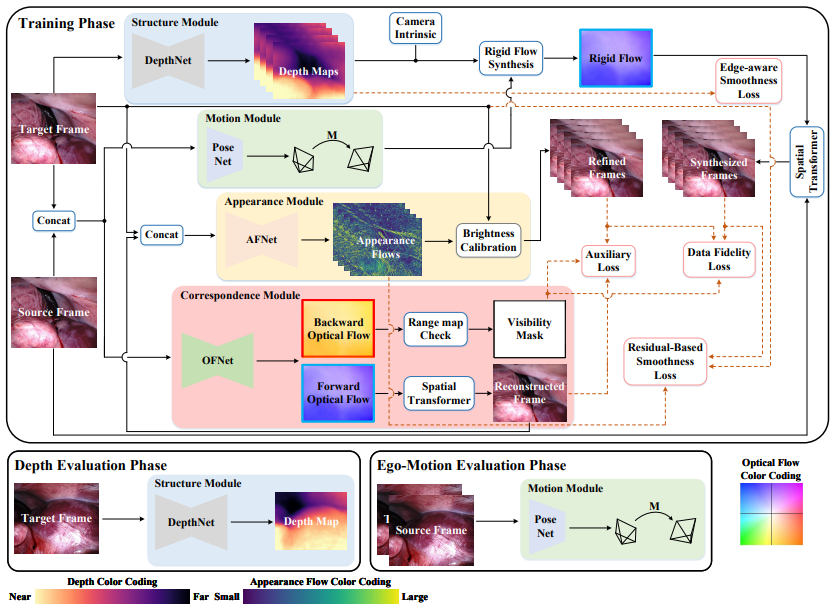

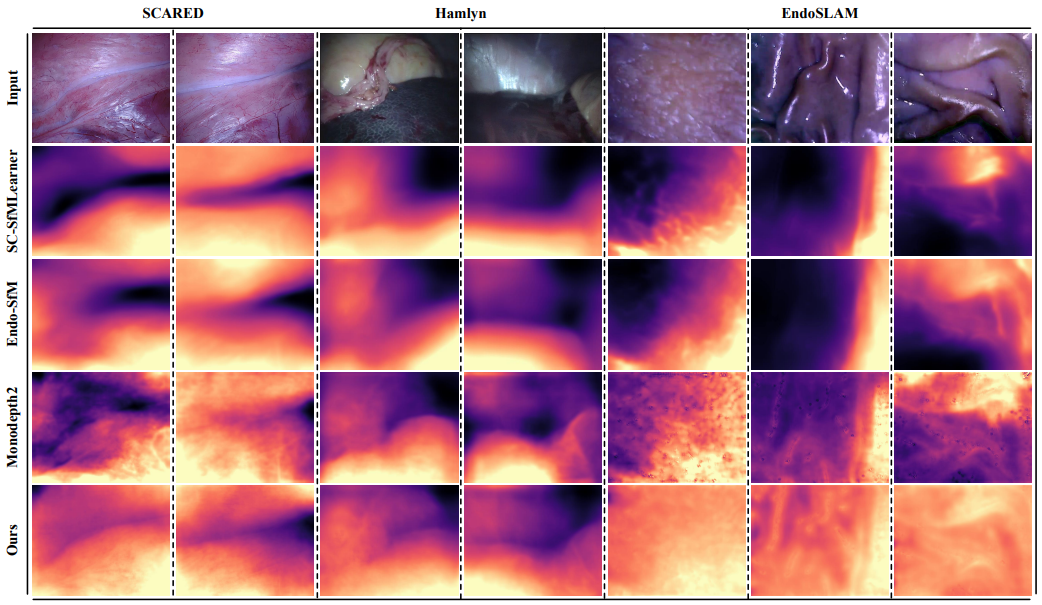

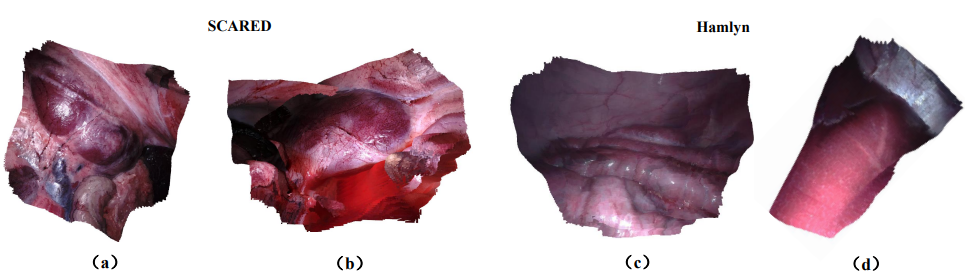

Self-Supervised Monocular Depth and Ego-Motion Estimation in Endoscopy: Appearance Flow to the Rescue

Shuwei Shao, Zhongcai Pei, Weihai Chen, Wentao Zhu, Xingming Wu, Dianmin Sun and Baochang Zhang

and

Self-Supervised Learning for Monocular Depth Estimation on Minimally Invasive Surgery Scenes

Shuwei Shao, Zhongcai Pei, Weihai Chen, Baochang Zhang, Xingming Wu, Dianmin Sun and David Doermann

If you find our work useful in your research, please consider citing our papers:

```bibtex
@article{shao2022self,
  title={Self-Supervised monocular depth and ego-Motion estimation in endoscopy: Appearance flow to the rescue},
  author={Shao, Shuwei and Pei, Zhongcai and Chen, Weihai and Zhu, Wentao and Wu, Xingming and Sun, Dianmin and Zhang, Baochang},
  journal={Medical image analysis},
  volume={77},
  pages={102338},
  year={2022},
  publisher={Elsevier}
}

@inproceedings{shao2021self,
  title={Self-Supervised Learning for Monocular Depth Estimation on Minimally Invasive Surgery Scenes},
  author={Shao, Shuwei and Pei, Zhongcai and Chen, Weihai and Zhang, Baochang and Wu, Xingming and Sun, Dianmin and Doermann, David},
  booktitle={2021 IEEE International Conference on Robotics and Automation (ICRA)},
  pages={7159--7165},
  year={2021},
  organization={IEEE}
}
```

We ran our experiments with PyTorch 1.2.0, torchvision 0.4.0, CUDA 10.2, Python 3.7.3 and Ubuntu 18.04.

You can predict scaled disparity for a single image or a folder of images with:

```shell
CUDA_VISIBLE_DEVICES=0 python test_simple.py --model_path <your_model_path> --image_path <your_image_or_folder_path>
```

You can obtain the Endovis or SCARED dataset by signing the challenge rules and emailing them to max.allan@intusurg.com. You can also download the EndoSLAM, SERV-CT, and Hamlyn datasets.

Endovis split

The train/test/validation split for the Endovis dataset used in our work is defined in the splits/endovis folder.
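Since this code is based on Monodepth2, a split file can plausibly be read the way Monodepth2 reads its KITTI splits. The sketch below is an illustration under that assumption: it supposes each non-empty line has the form `<folder> <frame_index> <side>` (for example `dataset1/keyframe1 42 l`). The line format, the `SplitEntry` type, and the helper names are assumptions, not taken from this repository.

```python
from pathlib import Path
from typing import List, NamedTuple


class SplitEntry(NamedTuple):
    folder: str       # sequence folder, e.g. "dataset1/keyframe1" (assumed format)
    frame_index: int  # frame number inside that sequence
    side: str         # camera side, e.g. "l" for the left view (assumed)


def parse_split_lines(lines: List[str]) -> List[SplitEntry]:
    """Parse lines of the assumed form '<folder> <frame_index> <side>'."""
    entries = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        # Raises ValueError if a line does not have exactly three fields.
        folder, frame_index, side = line.split()
        entries.append(SplitEntry(folder, int(frame_index), side))
    return entries


def read_split_file(path: str) -> List[SplitEntry]:
    """Read one split file, e.g. a hypothetical splits/endovis/train_files.txt."""
    return parse_split_lines(Path(path).read_text().splitlines())
```

If the actual files in splits/endovis use a different layout, only `parse_split_lines` needs to change.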

Endovis data preprocessing

We use ffmpeg to convert each RGB.mp4 video into PNG frames:

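```shell
# For every .mp4 under the current directory, write its frames as 10-digit
# zero-padded PNGs (0000000001.png, ...) into the same directory as the video.
find . -name "*.mp4" -print0 | xargs -0 -I {} sh -c 'output_dir=$(dirname "$1"); ffmpeg -i "$1" "$output_dir/%10d.png"' _ {}
```

We use only the left frames in our experiments; please refer to extract_left_frames.py. For datasets 8 and 9, we rename keyframes 0-4 as keyframes 1-5.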
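Renaming keyframes 0-4 to 1-5 in place has one pitfall: if you go in ascending order, keyframe0 would be moved onto keyframe1, which still exists. The minimal sketch below avoids that by renaming from the highest index down. It assumes the folders are named keyframe0 to keyframe4 directly under each dataset directory. The function name `shift_keyframes` is hypothetical and not part of this repository.

```python
from pathlib import Path


def shift_keyframes(dataset_dir, first=0, last=4, offset=1):
    """Rename keyframe{first}..keyframe{last} to keyframe{first+offset}..keyframe{last+offset}."""
    root = Path(dataset_dir)
    # Go from the highest index down: moving keyframe4 -> keyframe5 first frees
    # the name keyframe4 before keyframe3 is moved onto it, and so on.
    for i in range(last, first - 1, -1):
        src = root / f"keyframe{i}"
        dst = root / f"keyframe{i + offset}"
        if not src.exists():
            continue  # nothing to rename for this index
        if dst.exists():
            raise FileExistsError(f"refusing to overwrite {dst}")
        src.rename(dst)
```

For example, `shift_keyframes("/path/to/endovis_data/dataset8")` would be run once for each of the two affected datasets (the path is illustrative).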

Data structure

The dataset directory is structured as follows:

```
/path/to/endovis_data/
  dataset1/
    keyframe1/
      image_02/
        data/
          0000000001.png
```
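Given this layout, you can derive the path of any frame from the data root, a sequence folder such as dataset1/keyframe1, and a frame index. The sketch below assumes image_02 holds the left frames, following the KITTI naming that Monodepth2 uses. The helper name `get_image_path` is illustrative. The 10-digit zero padding matches both 0000000001.png and the `%10d.png` pattern in the ffmpeg command.

```python
import os


def get_image_path(data_path, folder, frame_index, ext=".png"):
    """Return the path of one frame under <data_path>/<folder>/image_02/data/."""
    # 10-digit zero-padded file name, e.g. frame 1 -> "0000000001.png".
    file_name = f"{frame_index:010d}{ext}"
    return os.path.join(data_path, folder, "image_02", "data", file_name)
```

For example, `get_image_path("/path/to/endovis_data", "dataset1/keyframe1", 1)` points at the 0000000001.png file shown in the tree.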

Training

Stage-wise fashion:

Stage one:

```shell
CUDA_VISIBLE_DEVICES=0 python train_stage_one.py --data_path <your_data_path> --log_dir <path_to_save_model (optical flow)>
```

Stage two:

```shell
CUDA_VISIBLE_DEVICES=0 python train_stage_two.py --data_path <your_data_path> --log_dir <path_to_save_model (depth, pose, appearance flow, optical flow)> --load_weights_folder <path_to_the_trained_optical_flow_model_in_stage_one>
```

End-to-end fashion:

```shell
CUDA_VISIBLE_DEVICES=0 python train_end_to_end.py --data_path <your_data_path> --log_dir <path_to_save_model (depth, pose, appearance flow, optical flow)>
```

Evaluation

To prepare the ground-truth depth maps, run:

```shell
CUDA_VISIBLE_DEVICES=0 python export_gt_depth.py --data_path endovis_data --split endovis
```

...assuming that you have placed the Endovis dataset in the default location of ./endovis_data/.

The following example command evaluates the epoch 19 weights of a model named mono_model:

```shell
CUDA_VISIBLE_DEVICES=0 python evaluate_depth.py --data_path <your_data_path> --load_weights_folder ~/mono_model/mdp/models/weights_19 --eval_mono
```

| Model | Abs Rel | Sq Rel | RMSE | RMSE log | Link |
|---|---|---|---|---|---|
| Stage-wise (ID 5 in Table 8) | 0.059 | 0.435 | 4.925 | 0.082 | baidu (code:n6lh); google |
| End-to-end (ID 3 in Table 8) | 0.059 | 0.470 | 5.062 | 0.083 | baidu (code:z4mo); google |
| ICRA | 0.063 | 0.489 | 5.185 | 0.086 | baidu (code:wbm8); google |
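The four metrics in the table can be sketched in NumPy as follows. This is a minimal sketch that assumes the Monodepth2-style evaluation this code builds on:

- a sigmoid disparity is mapped to depth;
- with --eval_mono, each prediction is rescaled by the ratio of the ground-truth median to the predicted median, because monocular depth is only known up to scale;
- predictions are clipped;
- only pixels with valid ground truth are scored.

The function names and the 1e-3 to 150 depth range are illustrative assumptions, not values read from evaluate_depth.py.

```python
import numpy as np


def disp_to_depth(disp, min_depth, max_depth):
    """Map a sigmoid disparity in [0, 1] to (scaled disparity, depth in [min_depth, max_depth])."""
    min_disp = 1.0 / max_depth
    max_disp = 1.0 / min_depth
    scaled_disp = min_disp + (max_disp - min_disp) * disp
    return scaled_disp, 1.0 / scaled_disp


def compute_errors(gt, pred):
    """Abs Rel, Sq Rel, RMSE and RMSE log between matching gt and pred depth arrays."""
    abs_rel = np.mean(np.abs(gt - pred) / gt)
    sq_rel = np.mean((gt - pred) ** 2 / gt)
    rmse = np.sqrt(np.mean((gt - pred) ** 2))
    rmse_log = np.sqrt(np.mean((np.log(gt) - np.log(pred)) ** 2))
    return abs_rel, sq_rel, rmse, rmse_log


def evaluate_mono(gt, pred, min_depth=1e-3, max_depth=150.0):
    """Median-scale a scale-ambiguous prediction to the ground truth, clip it, then score it."""
    mask = (gt > min_depth) & (gt < max_depth)  # keep pixels with valid ground truth
    gt, pred = gt[mask], pred[mask]
    pred = pred * (np.median(gt) / np.median(pred))  # per-image median scaling
    pred = np.clip(pred, min_depth, max_depth)
    return compute_errors(gt, pred)
```

In this sketch, each test image would be scored with `evaluate_mono`. The prediction is first converted with `disp_to_depth` and resized to the ground-truth resolution. The table values would then be averages over all test images.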

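If you use a recent PyTorch version, note the following:

Note1: please add 'align_corners=True' to the 'F.interpolate' and 'F.grid_sample' calls when training the network, in order to obtain a good camera trajectory (since PyTorch 1.3.0, 'F.grid_sample' defaults to 'align_corners=False').

Note2: please change color_aug=transforms.ColorJitter.get_params(self.brightness,self.contrast,self.saturation,self.hue) to color_aug=transforms.ColorJitter(self.brightness,self.contrast,self.saturation,self.hue), because newer torchvision versions return the sampled jitter factors from 'get_params' rather than a callable transform.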
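The align_corners note concerns how F.grid_sample converts normalized coordinates in [-1, 1] into pixel positions. The helper below restates the two conventions from the PyTorch documentation in plain Python, and the name `unnormalize` is ours.

For a 4-pixel-wide image, -1 maps to pixel 0 with align_corners=True but to -0.5 with align_corners=False. The center (0) maps to 1.5 in both cases. Warping code written for PyTorch 1.2.0, where align_corners=True was the default, therefore samples slightly rescaled positions under the newer default. This plausibly explains the degraded trajectories mentioned above, although that link is our reading rather than a measured result.

```python
def unnormalize(coord, size, align_corners):
    """Map a normalized coordinate in [-1, 1] to a pixel coordinate along one axis.

    align_corners=True:  -1 and +1 are the centers of the first and last pixels.
    align_corners=False: -1 and +1 are the outer edges of the first and last pixels.
    """
    if align_corners:
        return (coord + 1) / 2 * (size - 1)
    return ((coord + 1) * size - 1) / 2


# Same normalized grid, different pixel positions at the borders (width 4).
for c in (-1.0, 0.0, 1.0):
    print(c, unnormalize(c, 4, True), unnormalize(c, 4, False))
```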

If you have any questions, please feel free to contact swshao@buaa.edu.cn.

Our code is based on the implementation of Monodepth2. We thank Monodepth2's authors for their excellent work and repository.