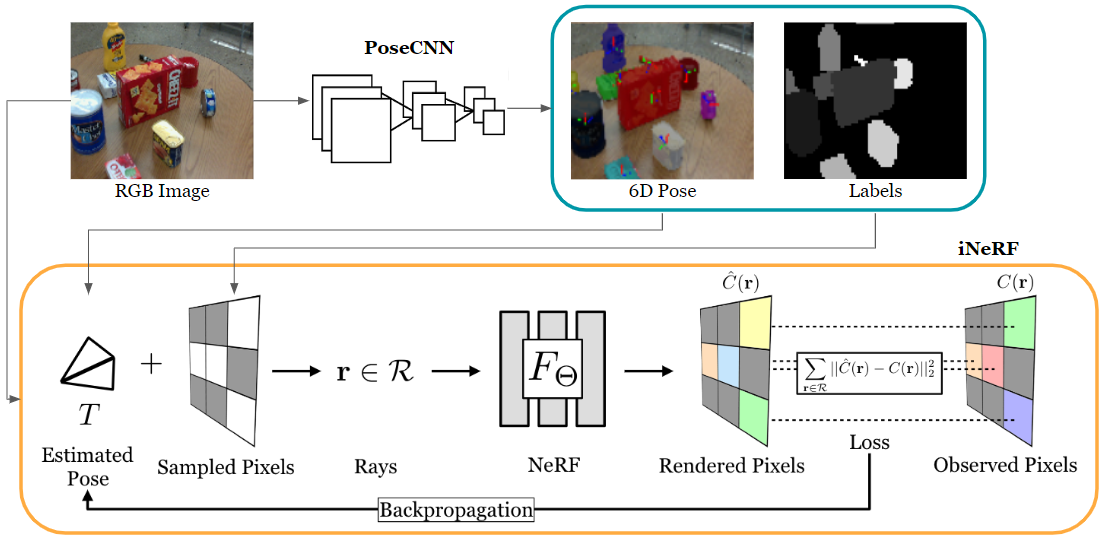

We present an efficient and robust system for view synthesis and pose estimation by integrating PoseCNN and iNeRF. Our method leverages the pose and object segmentation predictions from PoseCNN to improve the initial camera pose estimation and accelerate the optimization process in iNeRF.

Left: interest region sampling; right: mask region sampling. Mask region sampling converges faster and, unlike interest region sampling, does not get stuck in a local minimum.
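Here is a rough illustration of the idea behind mask region sampling. It is not the repository's actual implementation. The batch of pixels whose rays are rendered and compared against the observed image is drawn only from the foreground of the PoseCNN segmentation mask. iNeRF's interest region sampling instead draws pixels around detected keypoints. The sketch assumes a binary mask given as a nested list. The function name `sample_mask_pixels` and its `seed` parameter are hypothetical.

```python
import random


def sample_mask_pixels(mask, batch_size, seed=None):
    """Return `batch_size` (row, col) pixels drawn uniformly from the mask foreground.

    `mask` is a 2D nested list (H x W) where nonzero entries mark the object.
    Pixels are drawn without replacement when the foreground is large enough,
    and with replacement otherwise.
    """
    rng = random.Random(seed)
    # Collect the coordinates of every foreground (object) pixel.
    foreground = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not foreground:
        raise ValueError("mask has no foreground pixels")
    if len(foreground) >= batch_size:
        return rng.sample(foreground, batch_size)
    return [rng.choice(foreground) for _ in range(batch_size)]
```

Concentrating the batch on object pixels means fewer rays are spent on background. This is one plausible reason for the faster convergence seen in the comparison above.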

- `python scripts/run_inerf.py --config configs/inerf/PROPS.txt` for optimizing iNeRF
- `python scripts/run_nerf.py --config configs/nerf/PROPS.txt` for training and rendering NeRF
- `python scripts/run_posecnn.py` for training and evaluating PoseCNN

- To start, install `pytorch` and `torchvision` according to your GPU version, then create the environment using conda:

  ```bash
  git clone https://github.com/silvery107/fast-iNeRF.git
  cd fast-iNeRF
  conda env create -f environment.yml
  conda activate inerf
  ```

  If you see the error `ParseException: Expected '}', found '=' (at char 759), (line:34, col:18)`, check here.

- Download the pretrained NeRF and PoseCNN models for the PROPS dataset here and place them in the `<checkpoints>` folder.

- Download the PROPS-NeRF Dataset and extract it to the `<data>` folder. If you want to train a new PoseCNN model, also download the PROPS-Pose-Dataset. The dataset structure should look like this:

  ```
  data
  ├── nerf_synthetic
  │   ├── lego
  │   └── ...
  ├── PROPS-NeRF
  │   ├── obs_imgs
  │   └── ...
  └── PROPS-Pose-Dataset
      └── ...
  ```
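Here is a small, optional sanity check for the data layout. It is a hypothetical helper and not part of the repository. It treats `PROPS-NeRF/obs_imgs` as required. It treats `PROPS-Pose-Dataset` as needed only for PoseCNN training. It treats `nerf_synthetic` as optional, since the text does not say whether that folder is needed.

```python
from pathlib import Path

# Hypothetical grouping of the folders shown in the dataset tree.
REQUIRED = ["PROPS-NeRF", "PROPS-NeRF/obs_imgs"]
# PROPS-Pose-Dataset is only needed to train a new PoseCNN model.
OPTIONAL = ["nerf_synthetic", "PROPS-Pose-Dataset"]


def missing_dirs(data_root, expected):
    """Return the entries of `expected` that are not directories under `data_root`."""
    root = Path(data_root)
    return [rel for rel in expected if not (root / rel).is_dir()]


if __name__ == "__main__":
    # Report (but do not fail on) missing folders under ./data.
    for label, group in (("required", REQUIRED), ("optional", OPTIONAL)):
        missing = missing_dirs("data", group)
        print(f"{label}: " + ("all present" if not missing else "missing " + ", ".join(missing)))
```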

- To run the algorithm on PROPS NeRF:

  ```bash
  python scripts/run_inerf.py --config configs/inerf/PROPS.txt --posecnn_init_pose --mask_region
  ```

  To store a GIF of the optimization process, set `--overlay`.

- Setting `--posecnn_init_pose` enables pose initialization from the PoseCNN estimate, and setting `--mask_region` enables mask region sampling using the PoseCNN segmentation mask.

- All other parameters, such as batch size, sampling strategy, and initial camera error, can be adjusted in the corresponding config files.

- All NeRF models were trained using the PyTorch implementation of the original NeRF; see the next section.
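The config files expose an initial camera error. In iNeRF-style experiments, the starting pose is usually obtained by perturbing the ground-truth camera pose with a small rotation and translation. The following is a minimal sketch of that idea, with these assumptions:

- Poses are 4x4 camera-to-world matrices given as nested lists.
- The perturbation is applied on the left, in the world frame.
- The rotation is composed as Rz·Ry·Rx from angles in degrees.
- The names `perturb_pose`, `delta_rot_deg`, and `delta_t` are hypothetical, not the repository's config keys.

```python
import math


def _rot_x(deg):
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def _rot_y(deg):
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def _rot_z(deg):
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def _matmul(a, b):
    """Multiply two nested-list matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def perturb_pose(c2w, delta_rot_deg=(0.0, 0.0, 0.0), delta_t=(0.0, 0.0, 0.0)):
    """Return a perturbed copy of a 4x4 camera-to-world pose.

    Builds T_delta = [R_delta | delta_t] with R_delta = Rz @ Ry @ Rx from
    delta_rot_deg = (rx, ry, rz) in degrees, then returns T_delta @ c2w.
    """
    rx, ry, rz = delta_rot_deg
    r_delta = _matmul(_rot_z(rz), _matmul(_rot_y(ry), _rot_x(rx)))
    t_delta = [
        r_delta[0] + [delta_t[0]],
        r_delta[1] + [delta_t[1]],
        r_delta[2] + [delta_t[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]
    return _matmul(t_delta, c2w)
```

For example, `perturb_pose(identity, delta_rot_deg=(0, 0, 30), delta_t=(0.1, 0, 0))` rotates an identity pose by 30° about z and shifts it 0.1 units along x. When `--posecnn_init_pose` is set, the initial pose comes from the PoseCNN estimate instead.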

- To train a full-resolution PROPS NeRF:

  ```bash
  python scripts/run_nerf.py --config configs/nerf/PROPS.txt
  ```

  After training for 100k iterations (~6 hours on a single RTX 3060 GPU), you can find the rendered video in the `<logs>` folder. Set `--render_only` to render for evaluation without training.

- Continue training from checkpoints: you can resume any training run directly with the command above.

- To train a new PoseCNN model on the PROPS Pose Dataset:

  ```bash
  python scripts/run_posecnn.py --train
  ```

  Set `--eval` to run evaluation only, which computes the 5°5cm metric on the validation set.
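The 5°5cm metric counts a pose estimate as correct when its rotation error is below 5 degrees and its translation error is below 5 cm. Below is a minimal sketch of that computation, assuming rotations are 3x3 nested lists and translations are in meters. The helper names are hypothetical. The repository's evaluation code may differ in details, such as strict vs. non-strict thresholds.

```python
import math


def rotation_error_deg(r_pred, r_gt):
    """Geodesic angle between two rotation matrices, in degrees."""
    # trace(R_pred^T @ R_gt) equals the elementwise sum of R_pred * R_gt.
    tr = sum(r_pred[i][j] * r_gt[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] to guard acos against floating-point drift.
    cos_angle = max(-1.0, min(1.0, (tr - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def translation_error(t_pred, t_gt):
    """Euclidean distance between two translations (same units as the inputs)."""
    return math.dist(t_pred, t_gt)


def within_5deg_5cm(r_pred, t_pred, r_gt, t_gt, deg_thresh=5.0, dist_thresh=0.05):
    """True if the rotation error < 5 degrees and translation error < 0.05 m."""
    return (rotation_error_deg(r_pred, r_gt) < deg_thresh
            and translation_error(t_pred, t_gt) < dist_thresh)


def accuracy_5deg_5cm(preds, gts):
    """Fraction of (R, t) predictions that pass the 5°5cm test against ground truth."""
    hits = sum(within_5deg_5cm(rp, tp, rg, tg) for (rp, tp), (rg, tg) in zip(preds, gts))
    return hits / len(gts)
```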

- PyTorch >= 1.11

- torchvision >= 0.12
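Here is a quick, hypothetical way to check these minimum versions before running the scripts. It reads the installed versions with `importlib.metadata` (Python 3.8+) instead of importing the packages. It also strips local build suffixes such as `+cu113`. The helper names are illustrative only.

```python
import re
from importlib import metadata

# Minimum versions from the requirements list.
MINIMUMS = {"torch": "1.11", "torchvision": "0.12"}


def version_tuple(version):
    """Parse the leading numeric part of a version like '1.11.0+cu113' into (1, 11, 0)."""
    match = re.match(r"(\d+(?:\.\d+)*)", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def meets_minimum(version, minimum):
    """Compare versions numerically, so that '1.11' > '1.9'."""
    return version_tuple(version) >= version_tuple(minimum)


if __name__ == "__main__":
    for name, minimum in MINIMUMS.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            print(f"{name}: not installed (need >= {minimum})")
            continue
        status = "OK" if meets_minimum(installed, minimum) else "too old"
        print(f"{name} {installed}: {status} (need >= {minimum})")
```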

Thanks to the authors of the following great open-source software:

- NeRF - PyTorch implementation from yenchenlin/nerf-supervision-public

- iNeRF - PyTorch implementation from salykovaa/inerf

- PoseCNN - PyTorch implementation from DeepRob course project