Accepted in Transactions on Machine Learning Research (TMLR) 2023.

Harshit Sikchi, Akanksha Saran, Wonjoon Goo, Scott Niekum

If you find this code useful, please cite our paper:

```bibtex
@article{sikchi2022a,
  title={A Ranking Game for Imitation Learning},
  author={Harshit Sikchi and Akanksha Saran and Wonjoon Goo and Scott Niekum},
  journal={Transactions on Machine Learning Research},
  year={2022},
  url={https://openreview.net/forum?id=d3rHk4VAf0},
  note={}
}
```

Create the conda environment:

```bash
conda env create -f environment.yml
```

Install D4RL from source (https://github.com/rail-berkeley/d4rl), commenting out `dm_control` in its `setup.py` before installing.

Download the expert data and preference data from this [link](https://drive.google.com/drive/folders/1KJayG61KqiHqtRbxnUrGPBxTX2oDccSn?usp=sharing) and extract them into the `rank-game/expert_data/` folder.
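A quick sanity check after extraction can catch a missing or empty data folder before a long training run starts. The sketch below is a hypothetical helper (`check_expert_data` is not part of this repository); it assumes only that `expert_data/` sits directly inside the `rank-game` directory and makes no assumptions about the file names inside the download.

```python
from pathlib import Path
from typing import List


def check_expert_data(repo_root: str = ".") -> List[Path]:
    """Return the files found under <repo_root>/expert_data (hypothetical helper).

    Raises FileNotFoundError if the folder is missing and ValueError if it
    contains no files, e.g. because the download has not been extracted yet.
    """
    data_dir = Path(repo_root) / "expert_data"
    if not data_dir.is_dir():
        raise FileNotFoundError(f"missing folder: {data_dir}")
    # Walk subfolders too, since the archive layout is not assumed here.
    files = sorted(p for p in data_dir.rglob("*") if p.is_file())
    if not files:
        raise ValueError(f"{data_dir} is empty; extract the downloaded data here")
    return files
```

For example, `check_expert_data("path/to/rank-game")` returns the sorted list of extracted files, or raises if the folder is missing or empty.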

- rank-game (Core method)

-

- Training code (Online Imitation Learning from Observations):

rank_game_lfo.py

- Training code (Online Imitation Learning from Observations):

-

- Training code (Online Imitation Learning from Demonstrations):

rank_game_lfd.py

- Training code (Online Imitation Learning from Demonstrations):

-

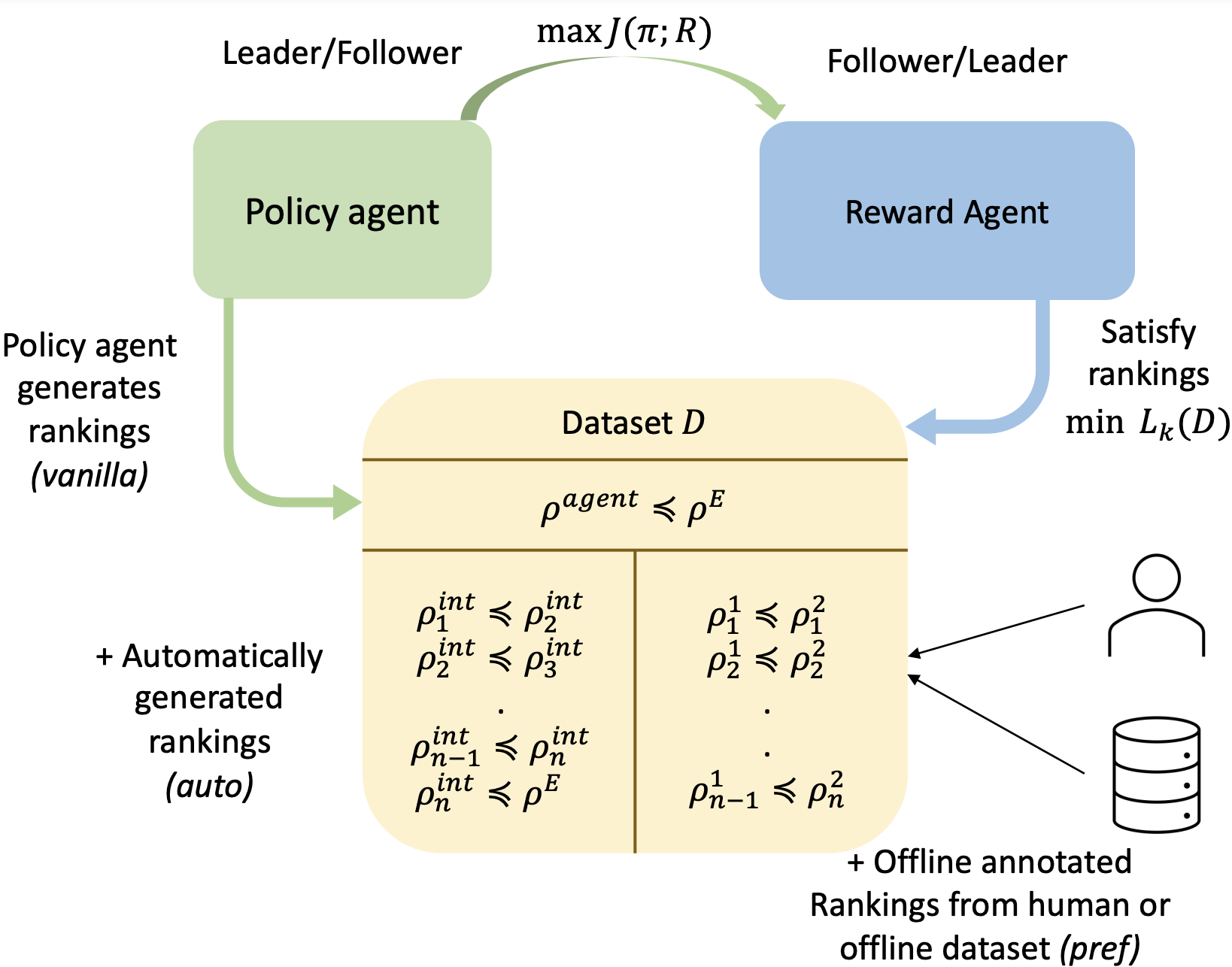

- Ranking Loss (PAL/RAL):

reward_agent/ranking_losses.py

- Ranking Loss (PAL/RAL):

-

- Policy Agent:

policy_agent/sac.py

- Policy Agent:

- Environments:

envs/ - Configurations:

configs/ - Expert Data:

expert_data/(needs to downloaded from <>)
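For readers new to ranking-based reward learning, the snippet below sketches one simple way to turn a single preference pair into a loss: regress the predicted rewards of states from the less-preferred behavior toward 0 and those from the more-preferred behavior toward a target `k`. This is an illustrative assumption, not a transcription of `reward_agent/ranking_losses.py`; the function names and the default `k` are hypothetical, and the PAL/RAL objectives in the repository may construct or weight pairs differently.

```python
from typing import Sequence, Tuple


def regression_ranking_loss(
    rewards_worse: Sequence[float],
    rewards_better: Sequence[float],
    k: float = 1.0,
) -> float:
    """Squared-error ranking loss for one preference pair (hypothetical sketch).

    Rewards predicted on states of the less-preferred behavior are pushed
    toward 0, and those of the more-preferred behavior toward k.
    """
    if not rewards_worse or not rewards_better:
        raise ValueError("both reward sequences must be non-empty")
    loss_worse = sum(r ** 2 for r in rewards_worse) / len(rewards_worse)
    loss_better = sum((r - k) ** 2 for r in rewards_better) / len(rewards_better)
    return loss_worse + loss_better


def batch_ranking_loss(
    pairs: Sequence[Tuple[Sequence[float], Sequence[float]]],
    k: float = 1.0,
) -> float:
    """Average the per-pair loss over (worse, better) reward sequences."""
    if not pairs:
        raise ValueError("need at least one preference pair")
    return sum(regression_ranking_loss(w, b, k) for w, b in pairs) / len(pairs)
```

A squared-error form like this pins reward values near 0 and `k`, whereas a logistic (Bradley-Terry) pairwise loss constrains only reward differences and leaves the overall offset free.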

- Run all experiments from the root folder.
- Config files in `configs/` specify the hyperparameters for each environment.
- To reproduce the results in our paper, keep all other values in the yml files consistent with the hyperparameters given in the paper.

The following command reproduces the results in our paper using the default configs:

```bash
python rank_game_lfo.py --config=<configs/env_name.yml> --seed=<seed> --exp_name=<experiment name> --obj=<rank-pal-auto/rank-ral-auto> --regularization=<regularization> --expert_episodes=<expert_episodes>
```
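When sweeping several seeds, it can be convenient to generate the commands programmatically. The sketch below only mirrors the flags shown in the training command; `build_commands`, the argument types, and passing `regularization` through as a string are assumptions, since this README does not document the accepted values.

```python
from typing import List, Sequence


def build_commands(
    config: str,
    seeds: Sequence[int],
    exp_name: str,
    obj: str,
    regularization: str,
    expert_episodes: int,
    script: str = "rank_game_lfo.py",
) -> List[List[str]]:
    """Build one argv list per seed for the training command (hypothetical helper)."""
    return [
        [
            "python",
            script,
            f"--config={config}",
            f"--seed={seed}",
            f"--exp_name={exp_name}",
            f"--obj={obj}",
            f"--regularization={regularization}",
            f"--expert_episodes={expert_episodes}",
        ]
        for seed in seeds
    ]
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` from the root folder; setting `script="rank_game_lfd.py"` targets the learning-from-demonstrations variant, assuming it accepts the same flags.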

As noted in the paper, training the reward function until the cross-validation loss saturates also works well but takes longer. Our default configs therefore train the reward function for a number of iterations that scales linearly with the size of the preference dataset.
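The two stopping strategies described above can be contrasted in a few lines. This is a hypothetical sketch, not code from the repository: `linear_iteration_budget` uses an assumed per-preference constant, and `train_until_saturation` is a generic patience-based early-stopping loop whose `patience` and `min_delta` defaults are placeholders rather than values from the paper or configs.

```python
from typing import Callable


def linear_iteration_budget(num_preferences: int, iters_per_preference: int) -> int:
    """Fixed iteration count that grows linearly with the preference dataset size."""
    return num_preferences * iters_per_preference


def train_until_saturation(
    train_step: Callable[[], None],
    val_loss: Callable[[], float],
    patience: int = 5,
    min_delta: float = 1e-4,
    max_iters: int = 100_000,
) -> int:
    """Train until the validation loss stops improving; return the steps taken.

    Stops once `patience` consecutive checks fail to beat the best loss seen
    so far by at least `min_delta`, or after `max_iters` steps.
    """
    best = float("inf")
    stale = 0
    for step in range(1, max_iters + 1):
        train_step()
        loss = val_loss()
        if loss < best - min_delta:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return step
    return max_iters
```

The linear budget is fixed up front, while the saturation loop keeps training (and evaluating a held-out loss) until improvement stalls, which can take many more iterations.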