- TPAMI2023: UniFormer: Unifying Convolution and Self-attention for Visual Recognition

- ICLR2022: UniFormer: Unified Transformer for Efficient Spatiotemporal Representation Learning

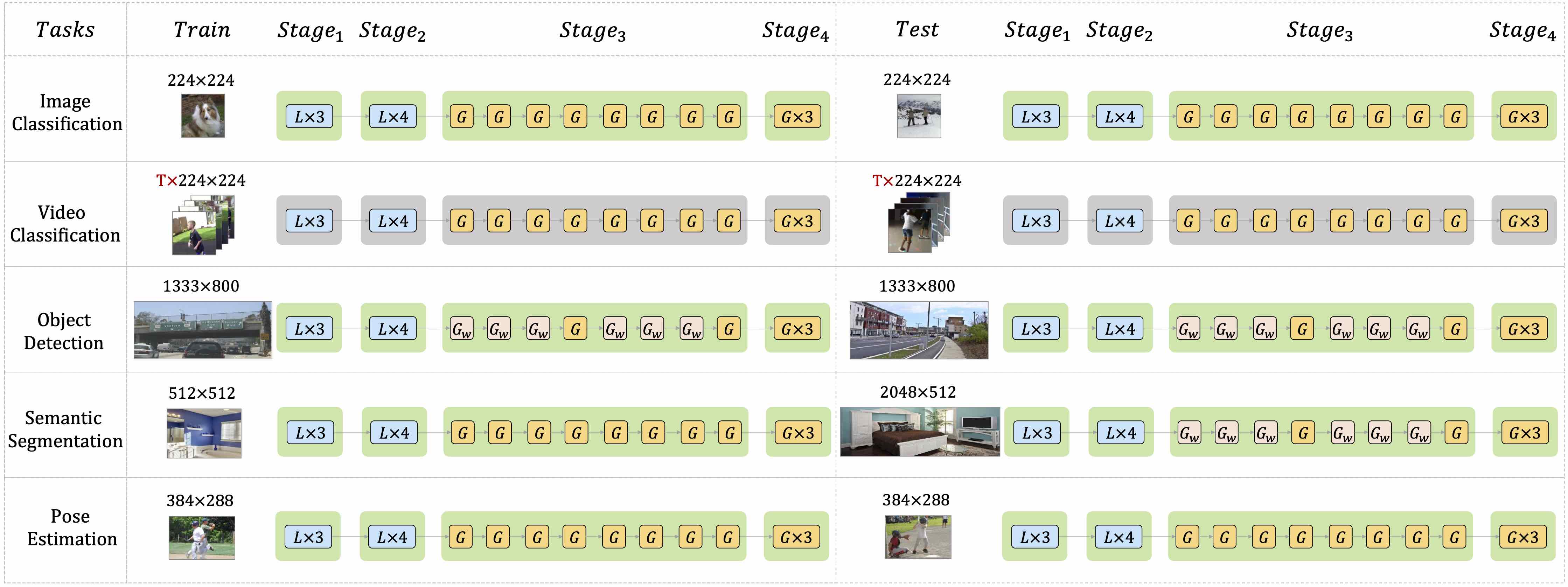

- Image Classification

- Video Classification

- Object Detection

- Semantic Segmentation

- Pose Estimation

- Lightweght Model (see

exp_lightin each task)

- UniFormerV2: The first model to achieve 90% top-1 accuracy on Kinetics-400.

- Unmasked Teacher: Using only public sources for pre-training in 6 days on 32 A100 GPUs, our scratch-built ViT-L/16 achieves state-of-the-art performances on various video tasks.

- Ask-Anything: Ask anything in video and image!

For downstream tasks:

- We forget to freeze BN in backbone, which will further improve the performance.

- We have verified that Token Labeling can largely help the downstream tasks. Have a try if you utilize UniFormer for competition or application.

- The

head_dimof some models are32, which will lead to large memory cost but little improvement for downstream tasks. Those models withhead_dim=64are released released in image_classification.

05/19/2023

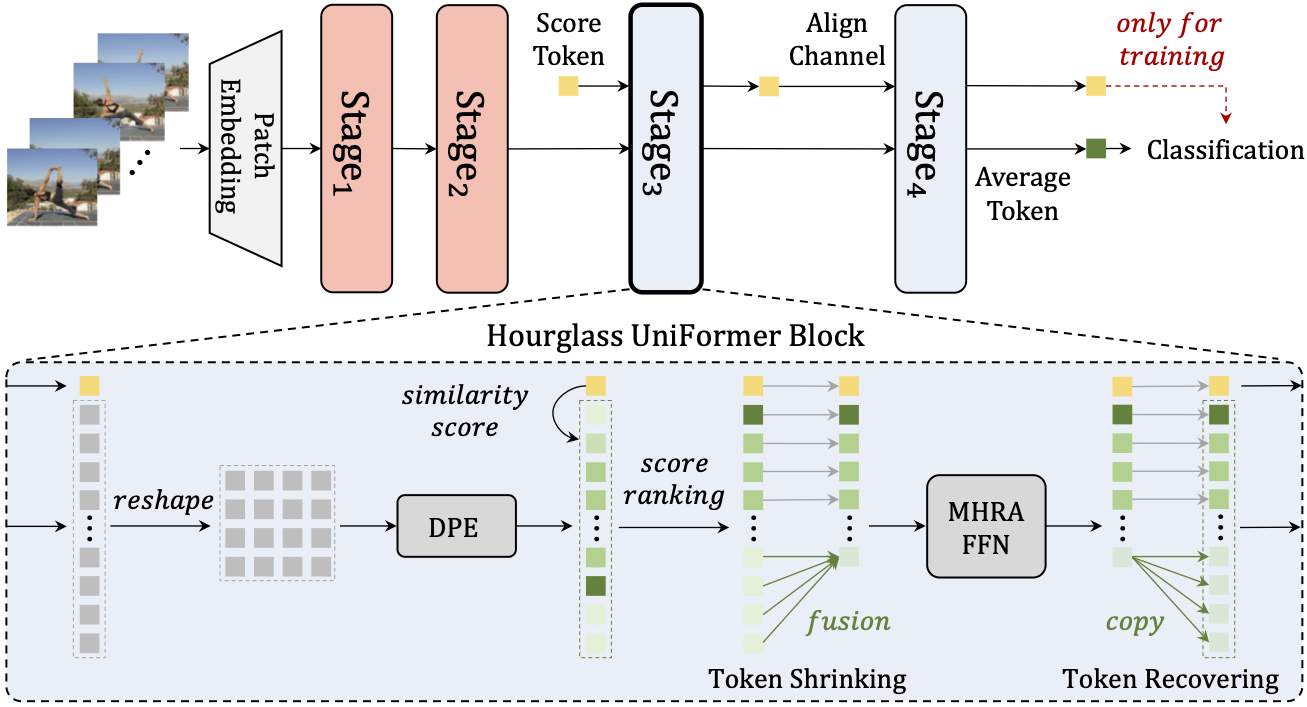

The extension version has been accepted by IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) 🎉🎉🎉. In revision, we explore the simple yet effective lightweight design: Hourglass UniFormer. Based on that, we propose the efficient UniFormer-XS and UniFormer-XXS:

- For image tasks, they surpass MobileViT, PVTv2 and EfficientNet.

- For video tasks, they surpass X3D and MoViNet.

- Try our 🚀fast demo🚀 on CPU!

11/20/2022

We have released UniFormerV2, which aims to arming the pre-trained ViTs with efficient UniFormer designs. It can save a lot of reaining resources and achieve powerful performance on 8 popular benchmarks. Please have a try! 🎉🎉

10/26/2022

We have provided the code for video visualizations, please see video_classification/vis.

05/24/2022

- Some bugs for video recognition have been fixed in Nightcrawler. We successfully adapt UniFormer for extreme dark video classification! 🎉🎉

- More demos for Detection and Segmentation are provided. 👏😄

03/6/2022

Some models with head_dim=64 are released, which can save memory cost for downstream tasks.

02/9/2022

Some popular models and demos are updated in hugging face.

02/3/2022

Integrated into

01/21/2022

UniFormer for video is accepted by ICLR2022 (8868, Top 3%)!

01/19/2022

- Pretrained models on ImageNet-1K with Token Labeling.

- Large resolution fine-tuning.

01/18/2022

- The supported code and models for COCO object detection.

- The supported code and models for ADE20K semantic segmentation.

- The supported code and models for COCO pose estimation.

01/13/2022

-

Pretrained models on ImageNet-1K, Kinetics-400, Kinetics-600, Something-Something V1&V2.

-

The supported code and models for image classification and video classification are provided.

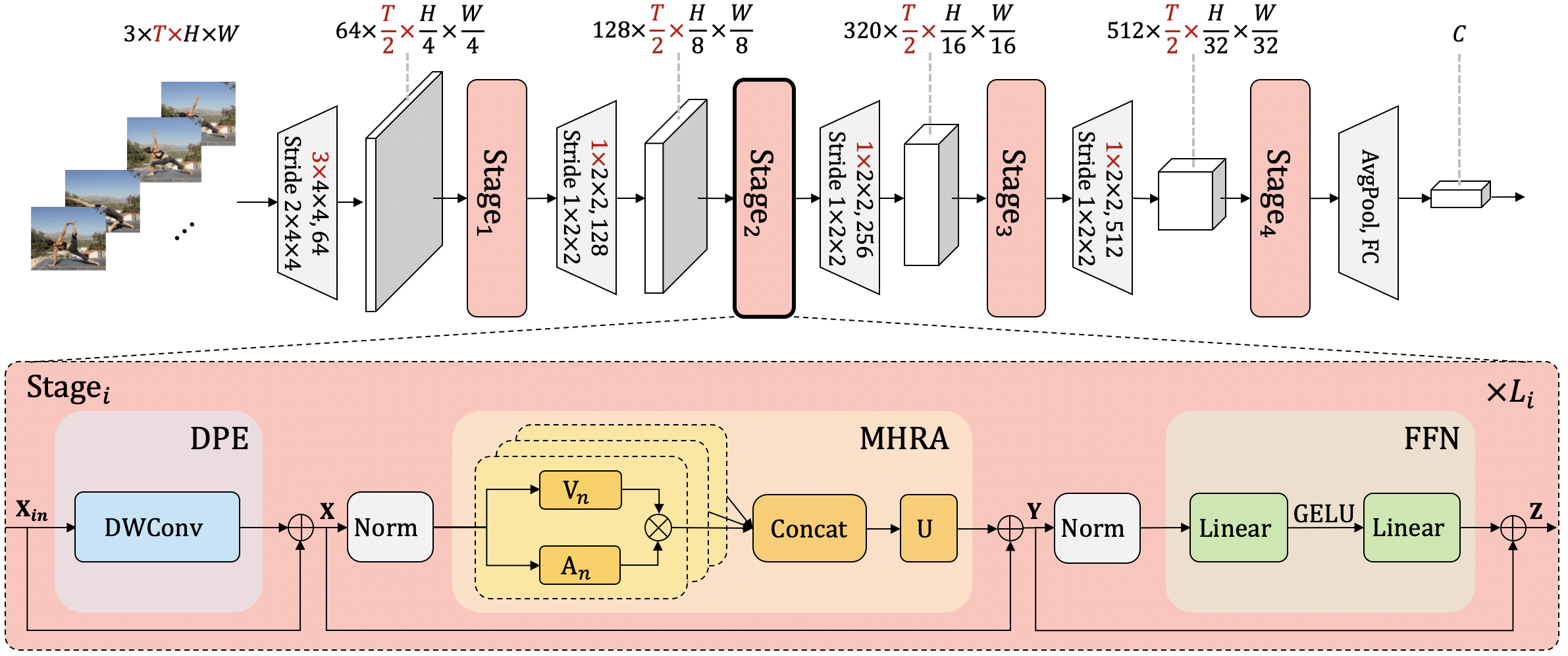

UniFormer (Unified transFormer) is introduce in arxiv (more details can be found in arxiv), which can seamlessly integrate merits of convolution and self-attention in a concise transformer format. We adopt local MHRA in shallow layers to largely reduce computation burden and global MHRA in deep layers to learn global token relation.

Without any extra training data, our UniFormer achieves 86.3 top-1 accuracy on ImageNet-1K classification. With only ImageNet-1K pre-training, it can simply achieve state-of-the-art performance in a broad range of downstream tasks. Our UniFormer obtains 82.9/84.8 top-1 accuracy on Kinetics-400/600, and 60.9/71.2 top-1 accuracy on Something-Something V1/V2 video classification tasks. It also achieves 53.8 box AP and 46.4 mask AP on COCO object detection task, 50.8 mIoU on ADE20K semantic segmentation task, and 77.4 AP on COCO pose estimation task. Moreover, we build an efficient UniFormer with a concise hourglass design of token shrinking and recovering, which achieves 2-4× higher throughput than the recent lightweight models.

Please see image_classification for more details.

More models with large resolution and token labeling will be released soon.

| Model | Pretrain | Resolution | Top-1 | #Param. | FLOPs |

|---|---|---|---|---|---|

| UniFormer-XXS | ImageNet-1K | 128x128 | 76.8 | 10.2M | 0.43G |

| UniFormer-XXS | ImageNet-1K | 160x160 | 79.1 | 10.2M | 0.67G |

| UniFormer-XXS | ImageNet-1K | 192x192 | 79.9 | 10.2M | 0.96G |

| UniFormer-XXS | ImageNet-1K | 224x224 | 80.6 | 10.2M | 1.3G |

| UniFormer-XS | ImageNet-1K | 192x192 | 81.5 | 16.5M | 1.4G |

| UniFormer-XS | ImageNet-1K | 224x224 | 82.0 | 16.5M | 2.0G |

| UniFormer-S | ImageNet-1K | 224x224 | 82.9 | 22M | 3.6G |

| UniFormer-S† | ImageNet-1K | 224x224 | 83.4 | 24M | 4.2G |

| UniFormer-B | ImageNet-1K | 224x224 | 83.9 | 50M | 8.3G |

| UniFormer-S+TL | ImageNet-1K | 224x224 | 83.4 | 22M | 3.6G |

| UniFormer-S†+TL | ImageNet-1K | 224x224 | 83.9 | 24M | 4.2G |

| UniFormer-B+TL | ImageNet-1K | 224x224 | 85.1 | 50M | 8.3G |

| UniFormer-L+TL | ImageNet-1K | 224x224 | 85.6 | 100M | 12.6G |

| UniFormer-S+TL | ImageNet-1K | 384x384 | 84.6 | 22M | 11.9G |

| UniFormer-S†+TL | ImageNet-1K | 384x384 | 84.9 | 24M | 13.7G |

| UniFormer-B+TL | ImageNet-1K | 384x384 | 86.0 | 50M | 27.2G |

| UniFormer-L+TL | ImageNet-1K | 384x384 | 86.3 | 100M | 39.2G |

Please see video_classification for more details.

| Model | Pretrain | #Frame | Sampling Stride | FLOPs | K400 Top-1 | K600 Top-1 |

|---|---|---|---|---|---|---|

| UniFormer-S | ImageNet-1K | 16x1x4 | 4 | 167G | 80.8 | 82.8 |

| UniFormer-S | ImageNet-1K | 16x1x4 | 8 | 167G | 80.8 | 82.7 |

| UniFormer-S | ImageNet-1K | 32x1x4 | 4 | 438G | 82.0 | - |

| UniFormer-B | ImageNet-1K | 16x1x4 | 4 | 387G | 82.0 | 84.0 |

| UniFormer-B | ImageNet-1K | 16x1x4 | 8 | 387G | 81.7 | 83.4 |

| UniFormer-B | ImageNet-1K | 32x1x4 | 4 | 1036G | 82.9 | 84.5* |

| Model | Pretrain | #Frame | Resolution | FLOPs | K400 Top-1 |

|---|---|---|---|---|---|

| UniFormer-XXS | ImageNet-1K | 4x1x1 | 128 | 1.0G | 63.2 |

| UniFormer-XXS | ImageNet-1K | 4x1x1 | 160 | 1.6G | 65.8 |

| UniFormer-XXS | ImageNet-1K | 8x1x1 | 128 | 2.0G | 68.3 |

| UniFormer-XXS | ImageNet-1K | 8x1x1 | 160 | 3.3G | 71.4 |

| UniFormer-XXS | ImageNet-1K | 16x1x1 | 128 | 4.2G | 73.3 |

| UniFormer-XXS | ImageNet-1K | 16x1x1 | 160 | 6.9G | 75.1 |

| UniFormer-XXS | ImageNet-1K | 32x1x1 | 160 | 15.4G | 77.9 |

| UniFormer-XS | ImageNet-1K | 32x1x1 | 192 | 34.2G | 78.6 |

#Frame = #input_frame x #crop x #clip

* Since Kinetics-600 is too large to train (>1 month in single node with 8 A100 GPUs), we provide model trained in multi node (around 2 weeks with 32 V100 GPUs), but the result is lower due to the lack of tuning hyperparameters.

* For UniFormer-XS and UniFormer-XXS, we use sparse sampling.

Please see video_classification for more details.

| Model | Pretrain | #Frame | FLOPs | SSV1 Top-1 | SSV2 Top-1 |

|---|---|---|---|---|---|

| UniFormer-S | K400 | 16x3x1 | 125G | 57.2 | 67.7 |

| UniFormer-S | K600 | 16x3x1 | 125G | 57.6 | 69.4 |

| UniFormer-S | K400 | 32x3x1 | 329G | 58.8 | 69.0 |

| UniFormer-S | K600 | 32x3x1 | 329G | 59.9 | 70.4 |

| UniFormer-B | K400 | 16x3x1 | 290G | 59.1 | 70.4 |

| UniFormer-B | K600 | 16x3x1 | 290G | 58.8 | 70.2 |

| UniFormer-B | K400 | 32x3x1 | 777G | 60.9 | 71.1 |

| UniFormer-B | K600 | 32x3x1 | 777G | 61.0 | 71.2 |

#Frame = #input_frame x #crop x #clip

Please see video_classification for more details.

| Model | Pretrain | #Frame | Sampling Stride | FLOPs | UCF101 Top-1 | HMDB51 Top-1 |

|---|---|---|---|---|---|---|

| UniFormer-S | K400 | 16x3x5 | 4 | 625G | 98.3 | 77.5 |

#Frame = #input_frame x #crop x #clip

* We only report the results in the first split. As for the results in our paper, we run the model in 3 training/validation splits and average the results.

Please see object_detection for more details.

| Backbone | Lr Schd | box mAP | mask mAP | #params | FLOPs |

|---|---|---|---|---|---|

| UniFormer-XXS | 1x | 42.8 | 39.2 | 29.4M | - |

| UniFormer-XS | 1x | 44.6 | 40.9 | 35.6M | - |

| UniFormer-Sh14 | 1x | 45.6 | 41.6 | 41M | 269G |

| UniFormer-Sh14 | 3x+MS | 48.2 | 43.4 | 41M | 269G |

| UniFormer-Bh14 | 1x | 47.4 | 43.1 | 69M | 399G |

| UniFormer-Bh14 | 3x+MS | 50.3 | 44.8 | 69M | 399G |

* The FLOPs are measured at resolution 800×1280.

| Backbone | Lr Schd | box mAP | mask mAP | #params | FLOPs |

|---|---|---|---|---|---|

| UniFormer-Sh14 | 3x+MS | 52.1 | 45.2 | 79M | 747G |

| UniFormer-Bh14 | 3x+MS | 53.8 | 46.4 | 107M | 878G |

* The FLOPs are measured at resolution 800×1280.

Please see semantic_segmentation for more details.

| Backbone | Lr Schd | mIoU | #params | FLOPs |

|---|---|---|---|---|

| UniFormer-XXS | 80K | 42.3 | 13.5M | - |

| UniFormer-XS | 80K | 44.4 | 19.7M | - |

| UniFormer-Sh14 | 80K | 46.3 | 25M | 172G |

| UniFormer-Bh14 | 80K | 47.0 | 54M | 328G |

| UniFormer-Sw32 | 80K | 45.6 | 25M | 183G |

| UniFormer-Sh32 | 80K | 46.2 | 25M | 199G |

| UniFormer-S | 80K | 46.6 | 25M | 247G |

| UniFormer-Bw32 | 80K | 47.0 | 54M | 310G |

| UniFormer-Bh32 | 80K | 47.7 | 54M | 350G |

| UniFormer-B | 80K | 48.0 | 54M | 471G |

* The FLOPs are measured at resolution 512×2048.

| Backbone | Lr Schd | mIoU | MS mIoU | #params | FLOPs |

|---|---|---|---|---|---|

| UniFormer-Sh14 | 160K | 46.9 | 48.0 | 52M | 947G |

| UniFormer-Bh14 | 160K | 48.9 | 50.0 | 80M | 1085G |

| UniFormer-Sw32 | 160K | 46.6 | 48.4 | 52M | 939G |

| UniFormer-Sh32 | 160K | 47.0 | 48.5 | 52M | 955G |

| UniFormer-S | 160K | 47.6 | 48.5 | 52M | 1004G |

| UniFormer-Bw32 | 160K | 49.1 | 50.6 | 80M | 1066G |

| UniFormer-Bh32 | 160K | 49.5 | 50.7 | 80M | 1106G |

| UniFormer-B | 160K | 50.0 | 50.8 | 80M | 1227G |

* The FLOPs are measured at resolution 512×2048.

Please see pose_estimation for more details.

| Backbone | Input Size | AP | AP50 | AP75 | ARM | ARL | AR | FLOPs |

|---|---|---|---|---|---|---|---|---|

| UniFormer-S | 256x192 | 74.0 | 90.3 | 82.2 | 66.8 | 76.7 | 79.5 | 4.7G |

| UniFormer-S | 384x288 | 75.9 | 90.6 | 83.4 | 68.6 | 79.0 | 81.4 | 11.1G |

| UniFormer-S | 448x320 | 76.2 | 90.6 | 83.2 | 68.6 | 79.4 | 81.4 | 14.8G |

| UniFormer-B | 256x192 | 75.0 | 90.6 | 83.0 | 67.8 | 77.7 | 80.4 | 9.2G |

| UniFormer-B | 384x288 | 76.7 | 90.8 | 84.0 | 69.3 | 79.7 | 81.4 | 14.8G |

| UniFormer-B | 448x320 | 77.4 | 91.1 | 84.4 | 70.2 | 80.6 | 82.5 | 29.6G |

If you find this repository useful, please give us stars and use the following BibTeX entry for citation.

@misc{li2022uniformer,

title={UniFormer: Unifying Convolution and Self-attention for Visual Recognition},

author={Kunchang Li and Yali Wang and Junhao Zhang and Peng Gao and Guanglu Song and Yu Liu and Hongsheng Li and Yu Qiao},

year={2022},

eprint={2201.09450},

archivePrefix={arXiv},

primaryClass={cs.CV}

}@misc{li2022uniformer,

title={UniFormer: Unified Transformer for Efficient Spatiotemporal Representation Learning},

author={Kunchang Li and Yali Wang and Peng Gao and Guanglu Song and Yu Liu and Hongsheng Li and Yu Qiao},

year={2022},

eprint={2201.04676},

archivePrefix={arXiv},

primaryClass={cs.CV}

}This project is released under the MIT license. Please see the LICENSE file for more information.

UniFormer is maintained by Kunchang Li.

For help or issues using UniFormer, please submit a GitHub issue.

For other communications related to UniFormer, please contact Kunchang Li (kc.li@siat.ac.cn).