Reinforcement learning for path following of an AirSim quadrotor implemented in a Unity city environment

| Figure 1. Training started | Figure 2. 4,000 time steps | Figure 3. 12,000 time steps |
|---|---|---|

- Download the Unity package containing the customized city environment and the AirSim drone.
- Load the pre-trained model directly by running `python Model_Load.py` in the terminal. The drone then follows the city road.
- Code snippet credit to `AirSim/PythonClient/Reinforcement_learning/drone_env`. The reward function is as follows:

def _compute_reward(self):

thresh_dist = 7

beta = 1

x = -240

y = 10

z = 200

pts = [

np.array([x, y, z]),

np.array([-350, y, z]),

np.array([-350, y, 150]),

np.array([-350, y, z-100]),

np.array([-350, y, z-200]),

]

...

...

if self.state["collision"]:

reward = -100

else:

dist = 10000000

for i in range(0, len(pts) - 1):

dist = min(

dist,

np.linalg.norm(np.cross((quad_pt - pts[i]), (quad_pt - pts[i + 1])))

/ np.linalg.norm(pts[i] - pts[i + 1]),

)

if dist > thresh_dist:

reward = -10

...

...

done = 0

if reward <= -10:

done = 1

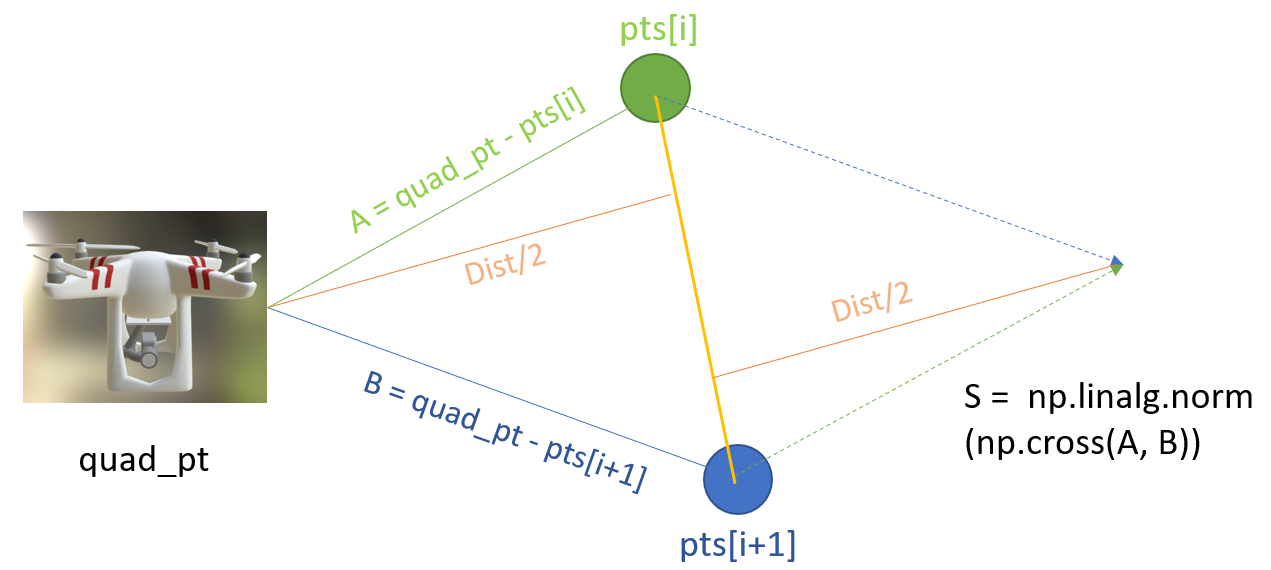

return reward, doneThe tuple of the coordinates represents the central line of the city road. The dist in the reward function computes the twice distance between the realtime drone and the central line comprised by the points. The distance computing is reperesented as following picture:
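The geometry can be checked outside AirSim with plain NumPy. The minimal standalone sketch below assumes the drone position is a 3-vector in the same frame as the waypoints. It reproduces the cross-product expression from `_compute_reward` and compares it against a projection-based distance to confirm that the result is the perpendicular distance to each line. It also mirrors the visible penalty and termination logic. The helper names `dist_to_centerline`, `dist_by_projection`, and `penalty_and_done` are hypothetical. The `in_range_reward` argument is likewise hypothetical: it only stands in for the reward the snippet elides and is not the author's shaping term.

```python
import numpy as np

# Road centre-line waypoints, copied from _compute_reward.
x, y, z = -240, 10, 200
PTS = [
    np.array([x, y, z]),
    np.array([-350, y, z]),
    np.array([-350, y, 150]),
    np.array([-350, y, z - 100]),
    np.array([-350, y, z - 200]),
]


def dist_to_centerline(quad_pt, pts):
    """Minimum distance from quad_pt to the lines through consecutive waypoints."""
    dist = float("inf")
    for a, b in zip(pts[:-1], pts[1:]):
        # |(q - a) x (q - b)| is twice the area of triangle (q, a, b);
        # dividing by the base |a - b| yields the triangle's height.
        d = np.linalg.norm(np.cross(quad_pt - a, quad_pt - b)) / np.linalg.norm(a - b)
        dist = min(dist, d)
    return dist


def dist_by_projection(quad_pt, a, b):
    """Cross-check: drop the component of (q - a) along the line a -> b."""
    u = (b - a) / np.linalg.norm(b - a)  # unit direction of the line
    v = quad_pt - a
    return np.linalg.norm(v - np.dot(v, u) * u)


def penalty_and_done(dist, collided, thresh_dist=7, in_range_reward=0.0):
    """Mirror the visible penalty/termination logic of _compute_reward.

    in_range_reward is a HYPOTHETICAL placeholder for the elided reward
    used when dist <= thresh_dist.
    """
    if collided:
        reward = -100
    elif dist > thresh_dist:
        reward = -10
    else:
        reward = in_range_reward
    done = 1 if reward <= -10 else 0
    return reward, done


if __name__ == "__main__":
    # 3 off in y and 4 off in z from the first leg of the road
    q = np.array([-300.0, 13.0, 204.0])
    d = dist_to_centerline(q, PTS)
    print(d)  # 5.0
    print(dist_by_projection(q, PTS[0], PTS[1]))
    print(penalty_and_done(d, collided=False))
```

The formula measures the distance to the infinite line through each pair of waypoints rather than to the segment itself. As a result, a position beyond a segment's end can still count as on the road. For example, `dist_to_centerline(np.array([-500.0, 10.0, 200.0]), PTS)` returns 0.0, even though that point lies 150 units past the corner at x = -350.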

- Rogerio Bonatti has trained a policy using cross-modal representations, with imitation learning data generated for flying through drone racing obstacles. The path following task should also work when trained with this generated imitation learning data.

- Transfer the algorithm from simulation to a real-world platform.