This repo is the official implementation of "MedViT: A Robust Vision Transformer for Generalized Medical Image Classification".

🎉 🎉 🎉 Good news: MedViT-V2 will be released soon.

- (beginner friendly🍉) To train/evaluate MedViT on CIFAR, ImageNet, or a custom dataset, follow "CustomDataset".

- (New version) Updated "Instructions.ipynb", adding the installation requirements and adversarial robustness.

- (Previous version) See "Colab_MedViT.ipynb" for complete details on dataset preparation and the train/test procedure.

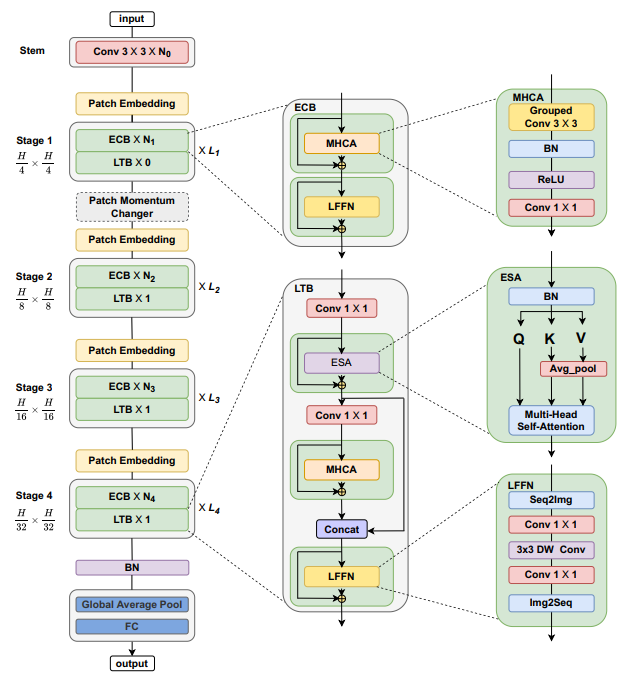

Convolutional Neural Networks (CNNs) have advanced existing medical systems for automatic disease diagnosis. However, there are still concerns about the reliability of deep medical diagnosis systems against the potential threat of adversarial attacks, since an inaccurate diagnosis could have disastrous consequences in safety-critical settings. In this study, we propose a highly robust yet efficient CNN-Transformer hybrid model that combines the locality of CNNs with the global connectivity of vision Transformers. To mitigate the quadratic complexity of the self-attention mechanism while jointly attending to information in various representation subspaces, we construct our attention mechanism from an efficient convolution operation. Moreover, to alleviate the fragility of our Transformer model against adversarial attacks, we attempt to learn smoother decision boundaries. To this end, we augment the shape information of an image in the high-level feature space by permuting the feature mean and variance within mini-batches. With lower computational complexity, our proposed hybrid model demonstrates high robustness and generalization ability compared to state-of-the-art studies on a large-scale collection of standardized MedMNIST-2D datasets.
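The feature-statistics augmentation described in the abstract (permuting the feature mean and variance within a mini-batch) can be illustrated with a minimal NumPy sketch. It is not the implementation in this repo: the NCHW layout, the per-sample per-channel statistics over spatial positions, the application probability `p`, the `eps` term, and the function name `permute_feature_moments` are all illustrative assumptions. Each sample keeps its normalized spatial pattern (a proxy for shape) but takes on the mean and standard deviation of another sample in the batch.

```python
import numpy as np


def permute_feature_moments(x, rng, p=1.0, eps=1e-5):
    """Hypothetical helper: swap per-channel mean/std between samples of a batch.

    x: feature map of shape (N, C, H, W); rng: np.random.Generator.
    With probability p the moments are permuted, otherwise x is returned as-is.
    """
    if rng.random() >= p:
        return x
    # First and second moments per sample and channel, over spatial positions.
    mean = x.mean(axis=(2, 3), keepdims=True)                # (N, C, 1, 1)
    std = np.sqrt(x.var(axis=(2, 3), keepdims=True) + eps)   # (N, C, 1, 1)
    normalized = (x - mean) / std                            # spatial pattern only
    # Random pairing of samples inside the mini-batch.
    perm = rng.permutation(x.shape[0])
    # Re-inject the statistics of the paired sample.
    return normalized * std[perm] + mean[perm]


rng = np.random.default_rng(0)
feats = rng.normal(size=(4, 8, 7, 7))  # hypothetical batch: 4 samples, 8 channels, 7x7 grid
out = permute_feature_moments(feats, rng)
```

Because only first- and second-order statistics move between samples, the output batch contains the same set of per-channel means as the input, just reassigned; in practice such an augmentation would be enabled during training only.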

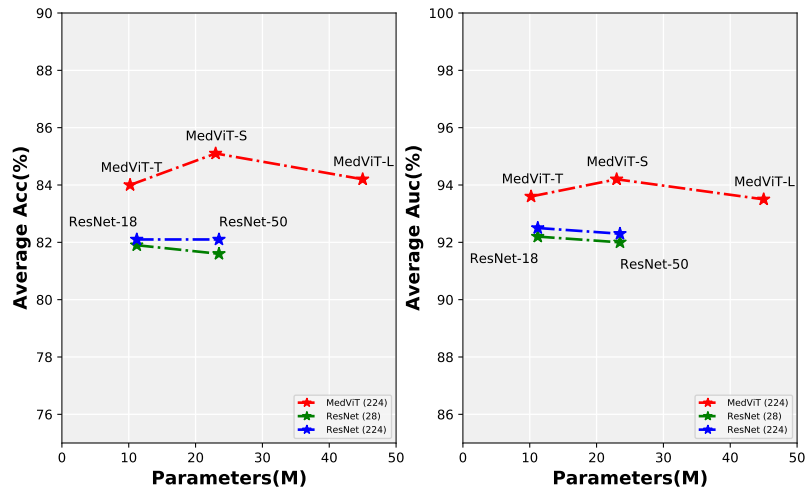

Figure 1. Comparison between MedViTs and the baseline ResNets in terms of the average ACC vs. parameter count and average AUC vs. parameter count trade-offs over all 2D datasets.

Figure 2. The overall hierarchical architecture of MedViT.

We provide a series of MedViT models pretrained on the ILSVRC2012 ImageNet-1K dataset.

| Model | Dataset | Resolution | Acc@1 (%) | ckpt |
|---|---|---|---|---|
| MedViT_small | ImageNet-1K | 224 | 83.70 | ckpt |
| MedViT_base | ImageNet-1K | 224 | 83.92 | ckpt |
| MedViT_large | ImageNet-1K | 224 | 83.96 | ckpt |

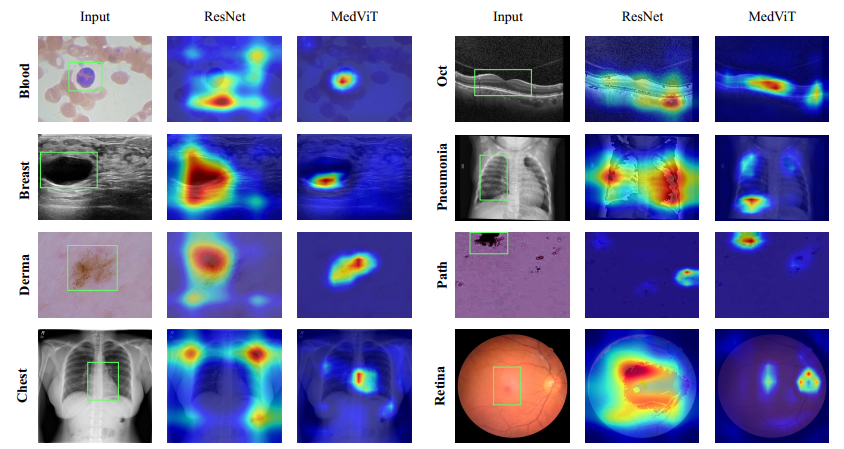

Visual inspection of MedViT-T and ResNet-18 using Grad-CAM on MedMNIST-2D datasets. The green rectangles mark the part of each image that contains information relevant to the diagnosis or analysis of a medical condition; in these regions the advantage of our proposed method is clearly visible.
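For readers unfamiliar with Grad-CAM, its final combination step can be sketched in a few lines of NumPy. This is a generic illustration, not the script used to produce the figure: it assumes the activations of a chosen layer and the gradients of the target class score with respect to them have already been extracted for one image (for example with framework hooks), and `grad_cam` is a hypothetical name.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Hypothetical helper: combine one layer's activations and gradients into a heatmap.

    activations, gradients: arrays of shape (C, H, W) for a single image, where
    gradients = d(class score) / d(activations). Returns an (H, W) map in [0, 1].
    """
    # alpha_k: global-average-pooled gradient of each channel.
    weights = gradients.mean(axis=(1, 2))
    # ReLU(sum_k alpha_k * A^k): keep only evidence in favour of the class.
    cam = np.maximum(np.einsum("k,khw->hw", weights, activations), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


acts = np.array([[[1.0, 0.0], [0.0, 0.0]],
                 [[0.0, 0.0], [0.0, 1.0]]])
grads = np.stack([np.full((2, 2), 2.0), np.full((2, 2), -1.0)])
cam = grad_cam(acts, grads)  # → [[1., 0.], [0., 0.]]
```

The map is then upsampled to the input resolution and overlaid on the image; the negatively weighted channel is suppressed by the ReLU, which is why only the top-left cell survives in the toy example.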

If you find this project useful in your research, please consider citing:

@article{manzari2023medvit,
  title={MedViT: A robust vision transformer for generalized medical image classification},
  author={Manzari, Omid Nejati and Ahmadabadi, Hamid and Kashiani, Hossein and Shokouhi, Shahriar B and Ayatollahi, Ahmad},
  journal={Computers in Biology and Medicine},
  volume={157},
  pages={106791},
  year={2023},
  publisher={Elsevier}
}

Our code heavily borrows from RVT and LocalViT.

For any inquiries or questions regarding the code, please feel free to contact us directly via email:

- Omid Nejaty: omid.nejaty@gmail.com

- Hossein Kashiani: hkashia@clemson.edu