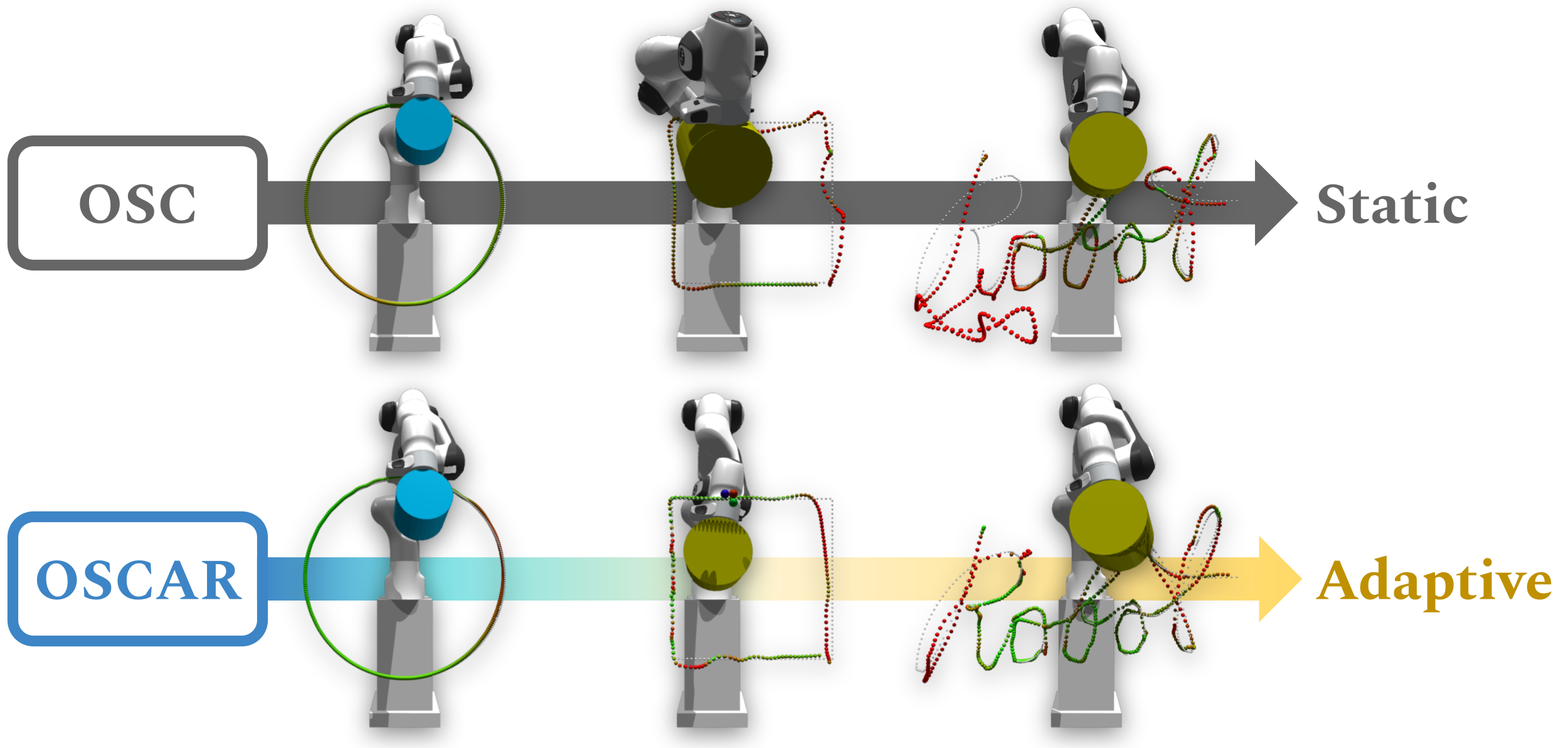

This repository contains the codebase used in OSCAR: Data-Driven Operational Space Control for Adaptive and Robust Robot Manipulation.

More generally, this codebase is a modular framework built upon IsaacGym and intended to support future robotics research that leverages large-scale training.

Of note, this repo contains:

- High-quality, fully parallelized PyTorch implementations of OSC, IK, and joint-based controllers

- Complex robot manipulation tasks for benchmarking learning algorithms

- Modular structure enabling rapid prototyping of additional robots, controllers, and environments

This repo requires:

- A Linux machine

- Conda

- An NVIDIA GPU with CUDA
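Before installing, a quick shell check can confirm these prerequisites. The sketch below is a hypothetical helper (`check_prereqs` is not part of this repo); it only checks that the machine reports Linux and that `conda` and `nvidia-smi` are on `PATH`, and it does not verify CUDA or driver versions.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of this repo): report which prerequisites look present.
# Only checks the OS name and that the tools are on PATH; versions are not validated.
check_prereqs() {
  local missing=0 cmd

  # Linux machine
  if [ "$(uname -s)" = "Linux" ]; then
    echo "ok: Linux"
  else
    echo "missing: Linux (found $(uname -s))"
    missing=1
  fi

  # Conda, plus nvidia-smi as a proxy for an NVIDIA GPU with a working driver
  for cmd in conda nvidia-smi; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "ok: $cmd"
    else
      echo "missing: $cmd"
      missing=1
    fi
  done

  # Non-zero exit status if anything is missing
  return "$missing"
}

# Usage:
# check_prereqs || echo "install the missing prerequisites before continuing"
```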

First, clone this repo and initialize the submodules:

```bash
git clone https://github.com/NVlabs/oscar.git
cd oscar
git submodule update --init --recursive
```

Next, create a new conda environment for this repo and activate it:

```bash
bash create_conda_env_oscar.sh
conda activate oscar
```

This creates a new conda environment named `oscar` and additionally installs some dependencies. Next, we need IsaacGym. This repo does not itself contain IsaacGym, but is compatible with any version >= preview 3.0.
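Both IsaacGym and this repo need to be installed into the `oscar` environment, so it can help to confirm that environment is active before each `pip install`. The guard below is a hypothetical helper (`require_conda_env` is not provided by this repo); it relies on the `CONDA_DEFAULT_ENV` variable, which `conda activate` sets to the environment's name for named environments.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of this repo): fail unless the named conda env is active.
# Relies on CONDA_DEFAULT_ENV, which `conda activate <name>` sets to <name>.
require_conda_env() {
  local expected="$1"
  if [ "${CONDA_DEFAULT_ENV:-}" != "$expected" ]; then
    echo "expected conda env '$expected', found '${CONDA_DEFAULT_ENV:-none}'" >&2
    return 1
  fi
  echo "conda env '$expected' is active"
}

# Usage: guard each install step
# require_conda_env oscar && pip install -e .
```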

Install and build IsaacGym, which NVIDIA distributes separately.

Once it is installed, navigate to IsaacGym's `python` directory and install the package into this conda environment:

```bash
# run inside the activated oscar conda environment
cd <ISAACGYM_REPO_PATH>/python
pip install -e .
```

Now, with IsaacGym installed, we can finally install this repo as a package:

```bash
# run inside the activated oscar conda environment
cd <OSCAR_REPO_PATH>
pip install -e .
```

That's it!
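To sanity-check the installation, you can try importing both packages from the active environment. This is a sketch under assumptions: `check_imports` is a hypothetical helper, the importable module names `isaacgym` and `oscar` are assumed, and the `PYTHON` variable defaults to `python`. The modules are imported in order within a single interpreter, with `isaacgym` first, since IsaacGym raises an error if PyTorch was imported before it.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of this repo): import the given modules, in order,
# in a single Python interpreter and report whether that succeeded.
check_imports() {
  local python_bin="${PYTHON:-python}"
  local stmt="" mod

  # Build "import a; import b; " so the modules load in the order given
  for mod in "$@"; do
    stmt+="import ${mod}; "
  done

  if "$python_bin" -c "$stmt"; then
    echo "ok: $*"
  else
    echo "failed: $*" >&2
    return 1
  fi
}

# Usage (assumed module names; isaacgym must come before anything that imports torch):
# check_imports isaacgym oscar
```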

Helpful scripts for running training, evaluation, and finetuning are provided in the `examples` directory. You can set the task, controller, and other parameters directly at the top of each example script. They should run out of the box, like so:

```bash
cd examples
bash train.sh
```

For evaluation (including zero-shot), you can modify and run:

```bash
bash eval.sh
```

For finetuning a pretrained model on the published out-of-distribution task settings, you can modify and run:

```bash
bash finetune.sh
```

To pretrain the initial OSCAR base network, you can modify and run:

```bash
bash pretrain_oscar.sh
```

We provide all of the final trained models used in our published results in `trained_models`.
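Because the example scripts are configured by editing parameters at the top of each file, a small helper can make those edits repeatable, for example when sweeping settings. This is a hypothetical sketch: `set_script_var` is not part of this repo, it assumes each parameter is a plain `NAME=value` assignment on its own line, and the variable name `TASK` below is only illustrative, so check the actual names in `train.sh` or `eval.sh` first. It uses GNU `sed -i`, which is available on Linux.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of this repo): set NAME=VALUE in a shell script,
# assuming the parameter is a plain `NAME=...` assignment at the start of a line.
set_script_var() {
  local file="$1" name="$2" value="$3" escaped

  if ! grep -q "^${name}=" "$file"; then
    echo "no '${name}=' line found in ${file}" >&2
    return 1
  fi

  # Escape characters that are special in a sed replacement using '|' as delimiter
  escaped="$(printf '%s' "$value" | sed 's/[&|\\]/\\&/g')"

  # Rewrite every line that starts with NAME= (GNU sed in-place edit)
  sed -i "s|^${name}=.*|${name}=${escaped}|" "$file"
}

# Usage (TASK is a hypothetical variable name):
# set_script_var examples/train.sh TASK push
```

Values containing spaces still need their own shell quoting (e.g. pass `'"a b"'`), since the helper writes the value verbatim.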

This repo is designed to be built upon to enable future large-scale robot learning research in simulation. You can add your own custom controller, robot agent, or task by following an existing example: the OSC controller for controllers, the Franka agent for robot agents, and the Push task for tasks.

Please check the LICENSE file. OSCAR may be used non-commercially, meaning for research or evaluation purposes only. For business inquiries, please contact researchinquiries@nvidia.com.

Please cite OSCAR if you use this framework in your publications:

```bibtex
@inproceedings{wong2022oscar,
  title={OSCAR: Data-Driven Operational Space Control for Adaptive and Robust Robot Manipulation},
  author={Josiah Wong and Viktor Makoviychuk and Anima Anandkumar and Yuke Zhu},
  booktitle={IEEE International Conference on Robotics and Automation (ICRA)},
  year={2022}
}
```