This repo contains the official implementation of our paper:

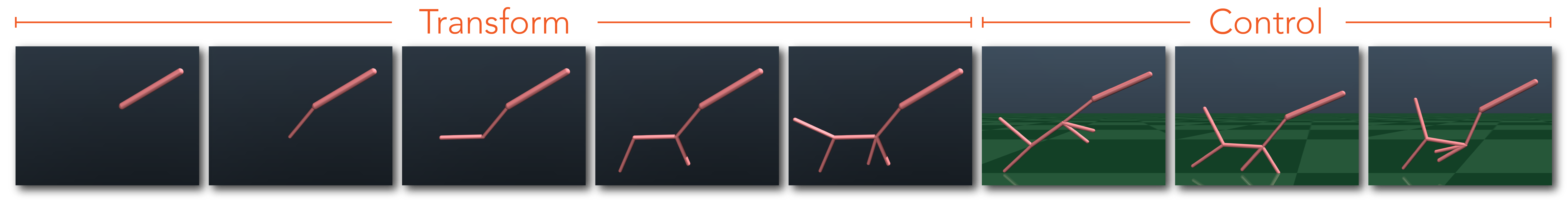

Transform2Act: Learning a Transform-and-Control Policy for Efficient Agent Design

Ye Yuan, Yuda Song, Zhengyi Luo, Wen Sun, Kris Kitani

ICLR 2022 (Oral)

website | paper

- Tested OS: macOS, Linux

- Python >= 3.7

- PyTorch == 1.8.0

- Install PyTorch 1.8.0 with the correct CUDA version.

- Install the dependencies:

  ```
  pip install -r requirements.txt
  ```

- Install torch-geometric with the correct CUDA and PyTorch versions (change the `CUDA` and `TORCH` variables below):

  ```
  CUDA=cu102
  TORCH=1.8.0
  pip install torch-scatter -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html
  pip install torch-sparse==0.6.12 -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html
  pip install torch-cluster -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html
  pip install torch-spline-conv -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html
  pip install torch-geometric==1.6.1
  ```

- Install mujoco-py following the instructions here.

- Set the following environment variable to avoid problems with multiprocess trajectory sampling:

  ```
  export OMP_NUM_THREADS=1
  ```
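Keep the `CUDA=` and `TORCH=` assignments on their own lines: if they are written as a prefix on the same line as `pip install`, the shell expands `${TORCH}` and `${CUDA}` in the URL before those assignments take effect, so the URL is built from empty or stale values. The `CUDA` tag must also match the CUDA build of your PyTorch install. The sketch below is a set of hypothetical helpers, not part of this repo: it maps the version reported by `torch.version.cuda` (for example `10.2`) to a wheel tag (`cu102`), rebuilds the wheel index URL used in the commands above, and checks the PyTorch version and the `OMP_NUM_THREADS` setting. Mapping a CPU-only build (reported as `None`) to the tag `cpu` is an assumption about the wheel index.

```sh
# Hypothetical helpers (not part of this repo) for sanity-checking the setup.

# Map the CUDA version reported by torch.version.cuda (e.g. "10.2") to the
# tag used in the torch-geometric wheel URLs (e.g. "cu102").
# Assumption: a CPU-only build reports "None" and its wheels use the tag "cpu".
cuda_tag() {
  case "$1" in
    ""|None) echo "cpu" ;;
    *) echo "cu$(printf '%s' "$1" | tr -d '.')" ;;
  esac
}

# Rebuild the wheel index URL from TORCH ($1) and the CUDA tag ($2).
wheel_index() {
  echo "https://pytorch-geometric.com/whl/torch-$1+$2.html"
}

# Accept "1.8.0" as well as local-version strings such as "1.8.0+cu102".
torch_version_ok() {
  case "$1" in
    1.8.0|1.8.0+*) return 0 ;;
    *) return 1 ;;
  esac
}

# The setup step above asks for OMP_NUM_THREADS=1 (multiprocess sampling).
omp_threads_ok() {
  [ "${OMP_NUM_THREADS:-}" = "1" ]
}

# Example usage (requires PyTorch to be installed):
#   CUDA=$(cuda_tag "$(python -c 'import torch; print(torch.version.cuda)')")
#   TORCH=1.8.0
#   pip install torch-scatter -f "$(wheel_index "$TORCH" "$CUDA")"
#   torch_version_ok "$(python -c 'import torch; print(torch.__version__)')" || echo "expected PyTorch 1.8.0"
```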

- You can download pretrained models from Google Drive or BaiduYun (password: 2x3q).

- Once the `transform2act_models.zip` file is downloaded, unzip it under the `results` folder of this repo:

  ```
  mkdir results
  unzip transform2act_models.zip -d results
  ```

  Note that the pretrained models directly correspond to the config files in `design_opt/cfg`.
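Running `mkdir results` a second time fails because the folder already exists. If you want an extraction step that can be re-run safely, one option is the hypothetical helper below (not part of this repo): it checks that the archive is present, uses `mkdir -p`, and passes `-n` to `unzip` so that existing files are never overwritten. The default archive name matches the download above; the helper name is illustrative.

```sh
# Hypothetical, re-runnable version of the two extraction commands.
# Run from the repo root; the archive path defaults to the downloaded file.
extract_pretrained() {
  zip_path="${1:-transform2act_models.zip}"
  if [ ! -f "$zip_path" ]; then
    echo "error: $zip_path not found; download it first" >&2
    return 1
  fi
  mkdir -p results                  # no error if results/ already exists
  unzip -n "$zip_path" -d results   # -n: never overwrite existing files
}
```

Call it as `extract_pretrained` (or `extract_pretrained path/to/transform2act_models.zip`) from the repo root.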

You can train your own models using the provided configs in `design_opt/cfg`:

```
python design_opt/train.py --cfg hopper --gpu 0
```

You can replace hopper with {ant, gap, swimmer} to train other environments. Here is the correspondence between the configs and the environments in the paper: hopper - 2D Locomotion, ant - 3D Locomotion, swimmer - Swimmer, and gap - Gap Crosser.
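To train all four configs one after another, a loop over the config names is enough. The sketch below is a hypothetical helper, not a script shipped with the repo: `paper_env_name` encodes the config-to-environment correspondence listed above, and passing `echo` as the runner prints the commands instead of launching them. Running every config sequentially on `--gpu 0` is an assumption; adjust it to your hardware.

```sh
# Config name in design_opt/cfg -> environment name used in the paper.
paper_env_name() {
  case "$1" in
    hopper)  echo "2D Locomotion" ;;
    ant)     echo "3D Locomotion" ;;
    swimmer) echo "Swimmer" ;;
    gap)     echo "Gap Crosser" ;;
    *)       echo "unknown config: $1" >&2; return 1 ;;
  esac
}

# Train every config sequentially. The first argument is the command runner:
# "python" (default) launches training, "echo" only prints the commands.
train_all() {
  runner="${1:-python}"
  for cfg in hopper ant swimmer gap; do
    echo "== $cfg ($(paper_env_name "$cfg")) =="
    "$runner" design_opt/train.py --cfg "$cfg" --gpu 0 || return 1
  done
}
```

Use `train_all echo` for a dry run and `train_all` to actually start training; the loop stops at the first config whose training command fails.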

If you have a display, run the following command to visualize the pretrained model for the hopper:

```
python design_opt/eval.py --cfg hopper
```

Again, you can replace hopper with {ant, gap, swimmer} to visualize other environments.

You can also save the visualization into a video by using --save_video:

```
python design_opt/eval.py --cfg hopper --save_video
```

This will save the video to `out/videos/hopper.mp4`.
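The same pattern can save videos for all four pretrained models. This is again a hypothetical helper rather than part of the repo, and the `out/videos/<cfg>.mp4` location for configs other than hopper is an assumption generalized from the hopper example. Rendering still requires a display.

```sh
# Render each pretrained model with --save_video and report the video path.
# Assumption: videos land in out/videos/<cfg>.mp4, as they do for hopper.
render_all() {
  runner="${1:-python}"   # "echo" prints the commands instead of running them
  for cfg in hopper ant gap swimmer; do
    "$runner" design_opt/eval.py --cfg "$cfg" --save_video || return 1
    video="out/videos/${cfg}.mp4"
    if [ -f "$video" ]; then
      echo "saved: $video"
    else
      echo "not found yet: $video"
    fi
  done
}
```

Run `render_all echo` first to see the commands, then `render_all` from the repo root.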

If you find our work useful in your research, please cite our paper Transform2Act:

```
@inproceedings{yuan2022transform2act,
  title={Transform2Act: Learning a Transform-and-Control Policy for Efficient Agent Design},
  author={Yuan, Ye and Song, Yuda and Luo, Zhengyi and Sun, Wen and Kitani, Kris},
  booktitle={International Conference on Learning Representations},
  year={2022}
}
```

Please see the license for further details.