https://arxiv.org/abs/2303.08333

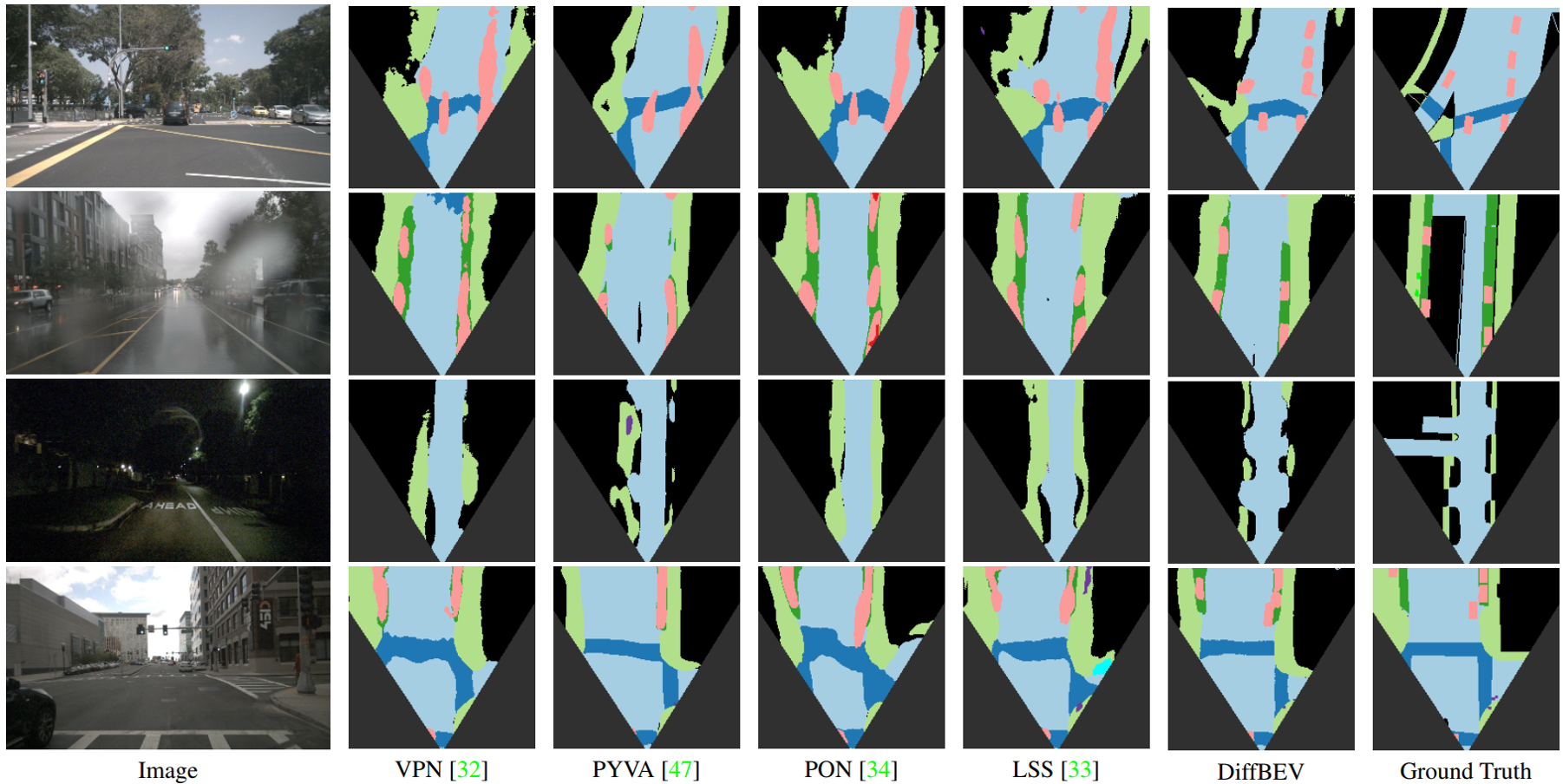

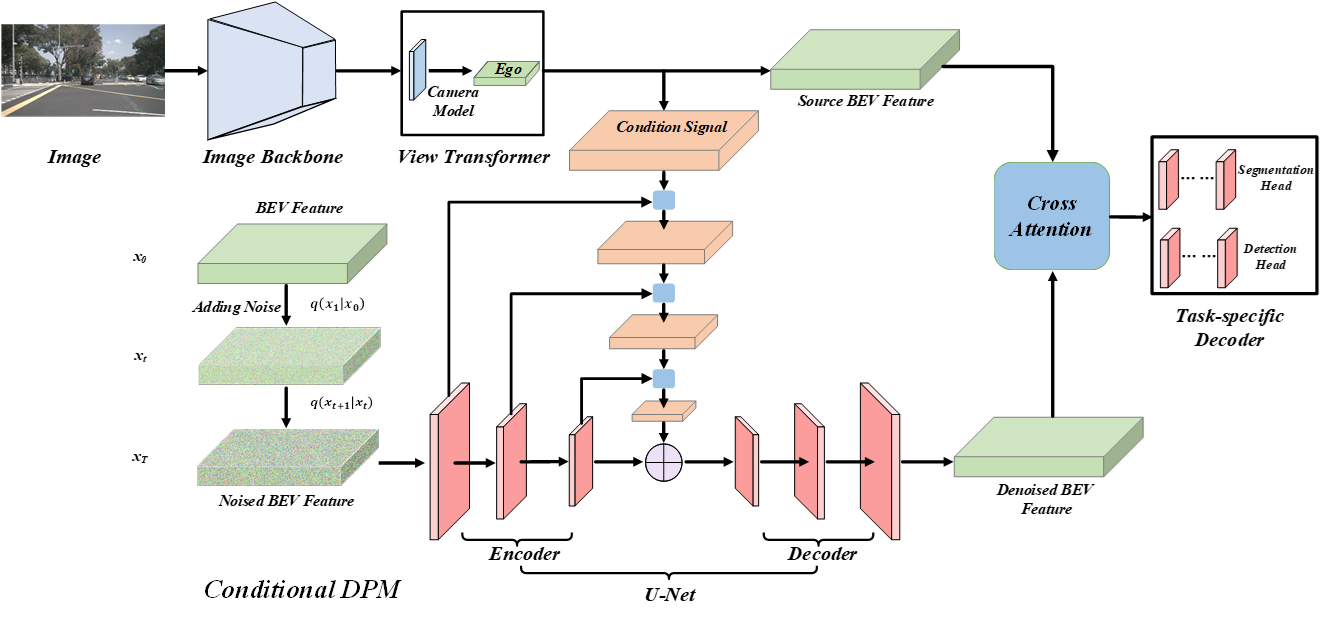

BEV (Bird's Eye View) perception is of great importance in autonomous driving, serving as the cornerstone of planning, control, and motion prediction. The quality of the BEV feature strongly affects the performance of BEV perception. However, because of noise in camera parameters and LiDAR scans, the BEV representation we obtain usually contains harmful noise. Diffusion models are naturally able to denoise noisy samples into ideal data, which motivates us to use a diffusion model to obtain a better BEV representation. In this work, we propose an end-to-end framework, named DiffBEV, that exploits the potential of diffusion models to generate a more comprehensive BEV representation. To the best of our knowledge, we are the first to apply diffusion models to BEV perception. In practice, we design three types of conditions to guide the training of the diffusion model, which denoises the coarse samples and refines the semantic feature progressively. In addition, a cross-attention module fuses the context of the BEV feature with the semantic content of the conditional diffusion model. DiffBEV achieves 25.9% mIoU on the nuScenes dataset, 6.2% higher than the best-performing existing approach. Quantitative and qualitative results on multiple benchmarks demonstrate the effectiveness of DiffBEV in BEV semantic segmentation and 3D object detection tasks.

Extensive experiments are conducted on the nuScenes, KITTI Raw, KITTI Odometry, and KITTI 3D Object benchmarks.

Use the provided script to generate depth maps for the KITTI datasets. Precomputed depth maps for the KITTI datasets are also available on Google Drive and Baidu Net Disk. A script for generating depth maps for the nuScenes dataset is provided as well. Replace the dataset path in the script according to your dataset directory.

After downloading these datasets, generate the BEV annotations by following the instructions below.

Run the make_nuscenes_labels script to generate the BEV annotations for the nuScenes benchmark. For the KITTI Raw, KITTI Odometry, and KITTI 3D Object datasets, follow the provided instructions to generate the BEV annotations (ann_bev_dir).
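Once the annotations are generated, a quick sanity check is to compare, per split, the number of images with the number of BEV annotation files. The sketch below is not part of DiffBEV: the helper name `check_bev_counts` is hypothetical, and it assumes the `img_dir/<split>` and `ann_bev_dir/<split>` folders used by the dataset layout and exactly one annotation file per image.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of DiffBEV): compare image and BEV annotation
# counts for the train and val splits of one dataset folder.
# Assumes img_dir/<split> and ann_bev_dir/<split> exist and that there is
# exactly one annotation file per image (an assumption, not documented).
check_bev_counts() {
  local root="$1" split n_img n_ann status=0
  for split in train val; do
    if [ ! -d "$root/img_dir/$split" ] || [ ! -d "$root/ann_bev_dir/$split" ]; then
      echo "$split: missing img_dir/$split or ann_bev_dir/$split under $root"
      status=1
      continue
    fi
    # $(( )) strips the padding that some wc implementations add.
    n_img=$(( $(find "$root/img_dir/$split" -type f | wc -l) ))
    n_ann=$(( $(find "$root/ann_bev_dir/$split" -type f | wc -l) ))
    if [ "$n_img" -eq "$n_ann" ]; then
      echo "$split: OK ($n_img images, $n_ann annotations)"
    else
      echo "$split: MISMATCH ($n_img images, $n_ann annotations)"
      status=1
    fi
  done
  return "$status"
}
```

For example, `check_bev_counts data/nuscenes` prints one line per split and returns a non-zero status if any split is missing or mismatched.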

The datasets are organized as follows.

- `data`
  - `nuscenes`
    - `img_dir`
      - `train`
      - `val`
    - `ann_bev_dir`
      - `train`
      - `val`
      - `train_depth`
      - `val_depth`
    - `calib.json`
  - `kitti_processed`
    - `kitti_raw`
      - `img_dir`
        - `train`
        - `val`
      - `ann_bev_dir`
        - `train`
        - `val`
        - `train_depth`
        - `val_depth`
      - `calib.json`
    - `kitti_odometry`
      - `img_dir`
        - `train`
        - `val`
      - `ann_bev_dir`
        - `train`
        - `val`
        - `train_depth`
        - `val_depth`
      - `calib.json`
    - `kitti_object`
      - `img_dir`
        - `train`
        - `val`
      - `ann_bev_dir`
        - `train`
        - `val`
        - `train_depth`
        - `val_depth`
      - `calib.json`
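Before training, it can help to confirm that the expected folders and files are in place. The following is a minimal sketch under stated assumptions: the helper name `check_layout` is hypothetical, the dataset folder names are taken from the layout listed above, and the `train_depth`/`val_depth` folders are searched up to two levels below each dataset folder rather than at one fixed path.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of DiffBEV): report entries missing from the
# expected dataset layout. Usage: check_layout DATA_ROOT [DATASET ...]
# With no DATASET arguments, all four dataset folders are checked.
check_layout() {
  local data_root="$1"
  shift
  local datasets=("$@")
  local ds rel missing=0
  if [ "${#datasets[@]}" -eq 0 ]; then
    datasets=(nuscenes kitti_processed/kitti_raw kitti_processed/kitti_odometry kitti_processed/kitti_object)
  fi
  for ds in "${datasets[@]}"; do
    # Image and annotation folders at fixed paths.
    for rel in img_dir/train img_dir/val ann_bev_dir/train ann_bev_dir/val; do
      if [ ! -d "$data_root/$ds/$rel" ]; then
        echo "missing directory: $ds/$rel"
        missing=$((missing + 1))
      fi
    done
    if [ ! -f "$data_root/$ds/calib.json" ]; then
      echo "missing file: $ds/calib.json"
      missing=$((missing + 1))
    fi
    # Depth folders: accepted anywhere up to two levels below the dataset folder.
    for rel in train_depth val_depth; do
      if [ -z "$(find "$data_root/$ds" -maxdepth 2 -type d -name "$rel" 2>/dev/null)" ]; then
        echo "missing directory: $rel (anywhere under $ds)"
        missing=$((missing + 1))
      fi
    done
  done
  echo "$missing missing entries"
  [ "$missing" -eq 0 ]
}
```

For instance, `check_layout data nuscenes` checks only the nuScenes folder, which is convenient when not all benchmarks have been downloaded.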

The camera parameters of each dataset are written into the corresponding _calib.json file, which is available for each dataset on Google Drive and Baidu Net Disk.
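Since the calibration files are downloaded separately, a cheap check is that each one at least parses as JSON. This sketch does not validate the fields inside the file, because their schema is not described here. The helper name `check_calib_files` is hypothetical; it relies only on Python's standard `json.tool` module and matches any file name ending in `calib.json`.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of DiffBEV): confirm every *calib.json file
# under a data root is well-formed JSON. The schema itself is NOT checked.
check_calib_files() {
  local data_root="$1" f bad=0 n=0
  while IFS= read -r -d '' f; do
    n=$((n + 1))
    # python3 -m json.tool exits non-zero on malformed JSON.
    if python3 -m json.tool "$f" > /dev/null 2>&1; then
      echo "ok: $f"
    else
      echo "invalid JSON: $f"
      bad=$((bad + 1))
    fi
  done < <(find "$data_root" -type f -name '*calib.json' -print0)
  echo "$n files checked, $bad invalid"
  # Succeed only if at least one file was found and none were invalid.
  [ "$n" -gt 0 ] && [ "$bad" -eq 0 ]
}
```

Running `check_calib_files data` after placing the downloaded files fails fast on a truncated or corrupted download.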

Change the dataset paths in the nuScenes, KITTI Raw, KITTI Odometry, and KITTI 3D Object dataset configurations to match your local data directory, and modify the path of the pretrained model in the model configurations.
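As one way to script the path change, the sketch below rewrites a top-level `data_root = '...'` assignment in a config file. This assumes mmsegmentation-style Python configs that define `data_root` at the top level; the variable name and the config file paths are assumptions, so check the actual DiffBEV configs first. The helper name `set_data_root` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of DiffBEV): point a config at a local data
# directory. ASSUMES an mmsegmentation-style config with a top-level line like
#   data_root = 'data/nuscenes/'
# Paths containing '|', '&' or '\' are not escaped and would break the sed call.
set_data_root() {
  local config="$1" new_root="$2"
  if ! grep -q "^data_root *= *" "$config"; then
    echo "no top-level data_root assignment in $config" >&2
    return 1
  fi
  # Rewrite the assignment in place; -i.bak keeps the original as $config.bak
  # and works with both GNU and BSD sed.
  sed -i.bak "s|^data_root *= *.*|data_root = '$new_root'|" "$config"
  # Show the updated line.
  grep "^data_root" "$config"
}
```

The `.bak` copy makes it easy to diff or restore the original config if the assumed `data_root` convention does not hold.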

DiffBEV has been tested with:

- Python 3.7/3.8

- CUDA 11.1

- Torch 1.9.1
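To compare a local setup with the versions listed above, a small sketch like the following prints the Python version and, if PyTorch is importable, its version, the CUDA version PyTorch was built with, and whether a GPU is visible. The helper name `report_env` is hypothetical; it only uses `sys.version`, `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()`.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of DiffBEV): print interpreter and PyTorch
# versions for comparison with the tested setup. Pass the interpreter to use
# (defaults to "python", e.g. the one in the active conda environment).
report_env() {
  local py="${1:-python}"
  "$py" - <<'EOF'
import sys
print("Python", sys.version.split()[0])
try:
    import torch
    print("Torch", torch.__version__)
    # CUDA version PyTorch was compiled against (None for CPU-only builds).
    print("CUDA (torch build)", torch.version.cuda)
    print("CUDA available", torch.cuda.is_available())
except ImportError:
    print("Torch not installed")
EOF
}
```

Inside the activated environment, `report_env` should report Python 3.7 or 3.8 and Torch 1.9.1 to match the tested configuration.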

Follow the installation steps below.

- Create a conda environment for the project.

conda create -n diffbev python=3.7

conda activate diffbev

- Install PyTorch following the official instructions.

conda install pytorch torchvision -c pytorch

- Install MMCV.

pip install -U openmim

mim install mmcv-full

- Clone this repository.

git clone https://github.com/JiayuZou2020/DiffBEV.git

- Install and compile the required packages.

cd DiffBEV

pip install -v -e .

If you find our work helpful for your research, please consider citing it as follows.

@article{zou2023diffbev,

title={DiffBEV: Conditional Diffusion Model for Bird's Eye View Perception},

author={Zou, Jiayu and Zhu, Zheng and Ye, Yun and Wang, Xingang},

journal={arXiv preprint arXiv:2303.08333},

year={2023}

}

Our work is partially based on the following open-source projects: mmsegmentation, VPN, PYVA, PON, and LSS. We thank their authors for their contributions to the BEV perception research community.