This implementation is strictly for educational purposes. Unlike the version in the paper, it is not distributed, but it works.

```python
from training import run_experiment, render_policy

example_config = {
    "experiment_name": "test_BipedalWalker_v0",
    "plot_path": "plots/",
    "model_path": "models/",  # optional
    "log_path": "logs/",  # optional
    "init_model": "models/test_BipedalWalker_v5.0.pkl",  # optional
    "env": "BipedalWalker-v3",
    "n_sessions": 128,
    "env_steps": 1600,
    "population_size": 256,
    "learning_rate": 0.06,
    "noise_std": 0.1,
    "noise_decay": 0.99,  # optional
    "lr_decay": 1.0,  # optional
    "decay_step": 20,  # optional
    "eval_step": 10,
    "hidden_sizes": (40, 40),
}

policy = run_experiment(example_config, n_jobs=4, verbose=True)

# to render policy performance
# (model_path: path to a saved model file, env_name: gym environment id)
render_policy(model_path, env_name, n_videos=10)
```

- OpenAI ES algorithm [Algorithm 1].

- Z-normalization fitness shaping (not rank-based).

- Parallelization with joblib.

- Training for 6 OpenAI gym envs (3 solved).

- Simple three-layer net as a policy example.

- Learning rate & noise std decay.
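The first two features can be sketched in a few lines of NumPy. This is a minimal illustration of the update rule from Algorithm 1 of the paper combined with z-normalization, not the code from this repository: `z_normalize`, `es_step`, and `toy_fitness` are hypothetical names, and the quadratic toy objective stands in for an episode return. The defaults mirror the example config, while the toy run uses a smaller learning rate.

```python
import numpy as np


def z_normalize(rewards, eps=1e-8):
    """Z-normalization fitness shaping: shift to zero mean, scale to unit std."""
    rewards = np.asarray(rewards, dtype=np.float64)
    return (rewards - rewards.mean()) / (rewards.std() + eps)


def es_step(theta, fitness_fn, rng, population_size=256,
            learning_rate=0.06, noise_std=0.1):
    """One ES update: perturb, evaluate, shape the fitness, step along the estimate."""
    # One Gaussian perturbation per population member.
    noise = rng.standard_normal((population_size, theta.size))
    rewards = np.array([fitness_fn(theta + noise_std * eps_i) for eps_i in noise])
    shaped = z_normalize(rewards)
    # Gradient estimate: (1 / (n * sigma)) * sum_i F_i * eps_i
    grad_estimate = noise.T @ shaped / (population_size * noise_std)
    return theta + learning_rate * grad_estimate


def toy_fitness(params):
    """Hypothetical objective with its maximum at params == 3 (not a gym env)."""
    return -np.sum((params - 3.0) ** 2)


rng = np.random.default_rng(0)
theta = np.zeros(5)
for _ in range(200):
    theta = es_step(theta, toy_fitness, rng, learning_rate=0.01)
```

After the loop `theta` should sit close to 3 in every coordinate. In a real run `fitness_fn` would be the mean return over several episodes, and the evaluations inside `es_step` are the part that is worth parallelizing.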

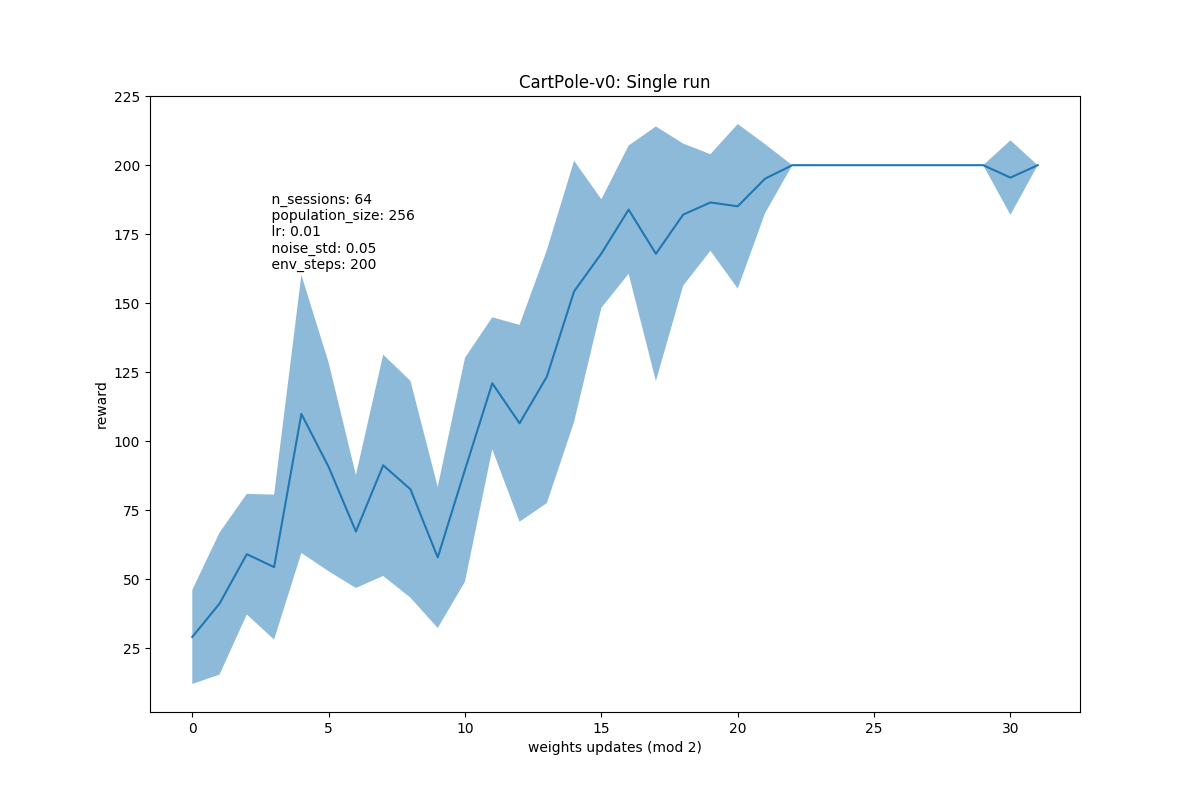

Solved quickly and easily, especially with a larger population size. However, the learning rate has to be kept under control: smaller values work better, and the same goes for noise std. This task needs almost no exploration; it is enough to collect a lot of reward feedback for the natural gradient estimate.
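One way to keep both values small over time is the decay controlled by `lr_decay`, `noise_decay`, and `decay_step` in the config. The sketch below assumes a step-wise schedule in which a value is multiplied by its decay factor once every `decay_step` iterations; the repository may apply the decay differently, and `decayed` is a hypothetical helper.

```python
def decayed(value, decay, decay_step, iteration):
    """Multiply `value` by `decay` once per `decay_step` completed iterations.

    Assumed schedule, for illustration only.
    """
    return value * decay ** (iteration // decay_step)


# Values taken from the example config.
config = {
    "learning_rate": 0.06,
    "noise_std": 0.1,
    "noise_decay": 0.99,
    "lr_decay": 1.0,
    "decay_step": 20,
}

for iteration in (0, 20, 200, 1000):
    lr = decayed(config["learning_rate"], config["lr_decay"],
                 config["decay_step"], iteration)
    std = decayed(config["noise_std"], config["noise_decay"],
                  config["decay_step"], iteration)
    print(f"iteration {iteration}: learning_rate={lr:.4f}, noise_std={std:.4f}")
```

With `lr_decay` set to 1.0 the learning rate stays constant, so only the noise std shrinks in this configuration.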

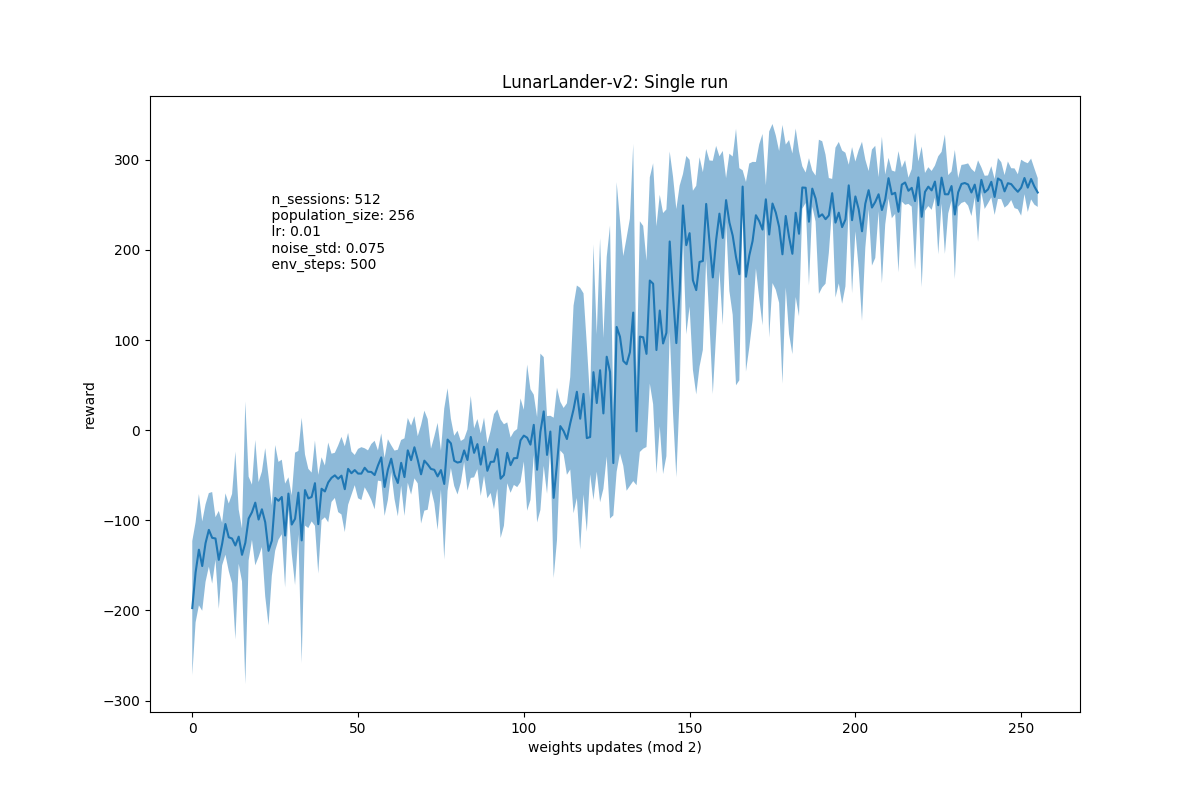

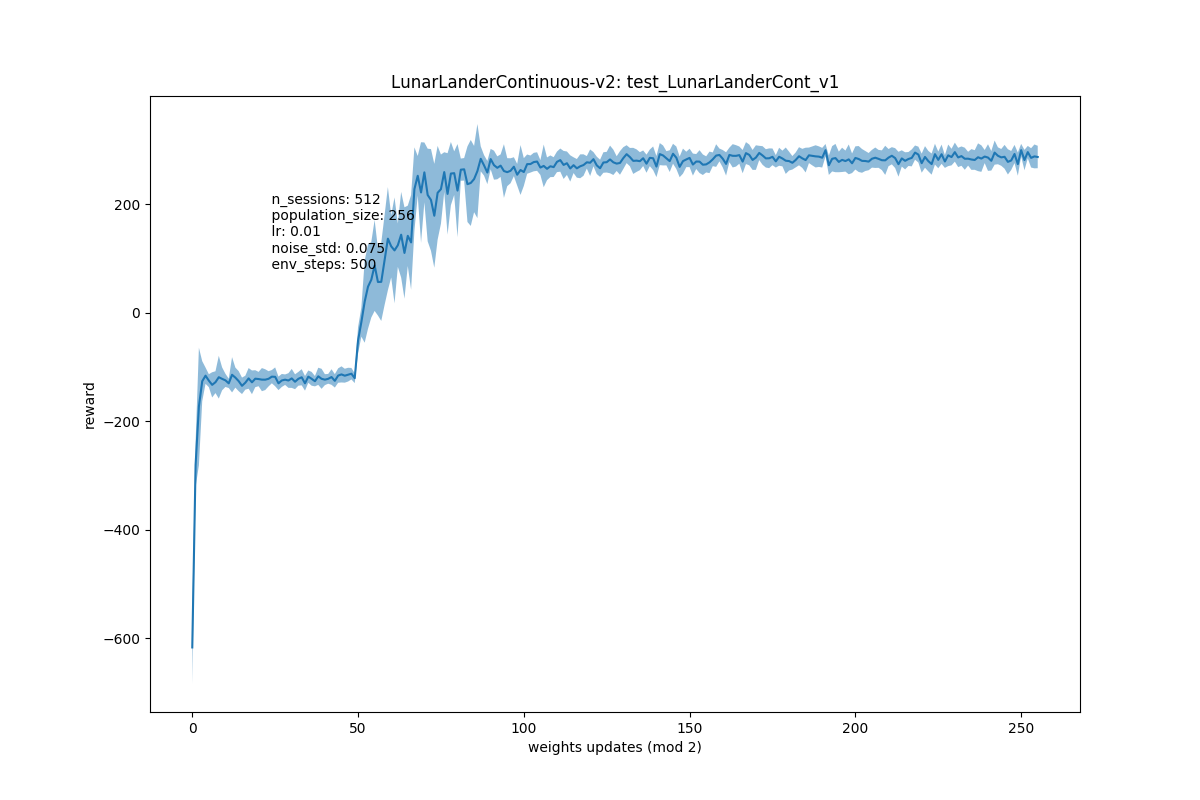

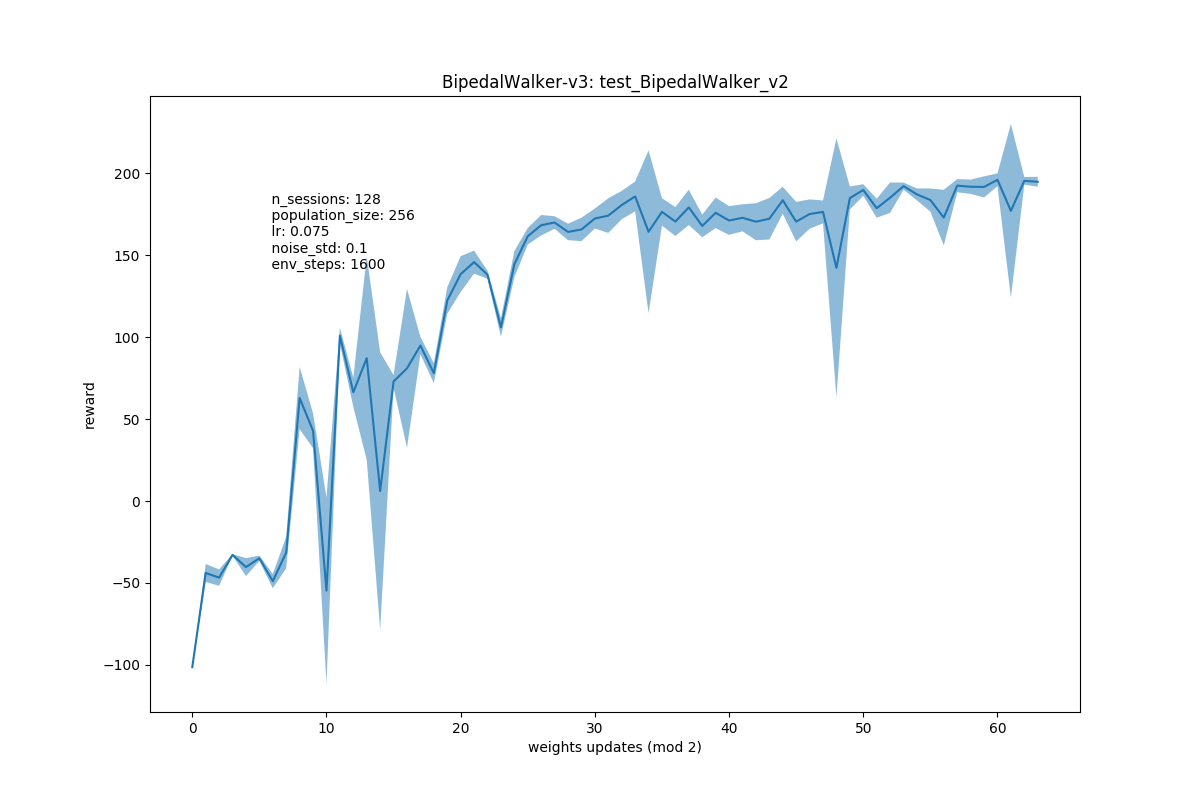

As in the previous task, the algorithm does well. A small learning rate is again important, but noise std should be increased slightly.

The continuous env is solved much faster and better, probably thanks to its denser reward. Interestingly, the agent here has learned to land faster: it does not turn on the engines immediately, only just before landing.

Can't solve it yet.

In the discrete version of the env, the main problem is the sparse reward, which is given only at the very end, and only if the agent climbs the hill. With random weights the agent cannot do that within the 200-step limit, so every member of the population gets the same return, the natural gradient estimate turns out to be zero, and training gets stuck. A workaround: remove the 200-step limit and wait until a random agent climbs the hill on its own and collects the first reward :). However, this is not quite fair.
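The stall is easy to reproduce numerically. The sketch below assumes z-normalized fitness as in the feature list above; the constant return of -200.0 and the single "lucky" return of -150.0 are illustrative values, not measurements.

```python
import numpy as np


def z_normalize(rewards, eps=1e-8):
    """Zero mean, unit std; eps guards against division by zero."""
    rewards = np.asarray(rewards, dtype=np.float64)
    return (rewards - rewards.mean()) / (rewards.std() + eps)


def gradient_estimate(noise, rewards, noise_std):
    """ES gradient estimate from perturbations and their shaped returns."""
    return noise.T @ z_normalize(rewards) / (len(rewards) * noise_std)


rng = np.random.default_rng(0)
population_size, n_params, noise_std = 256, 10, 0.1
noise = rng.standard_normal((population_size, n_params))

# Every perturbed policy times out with the same return (any constant behaves the same).
stuck_rewards = np.full(population_size, -200.0)
stuck_grad = gradient_estimate(noise, stuck_rewards, noise_std)

# As soon as one policy reaches the goal, the estimate becomes non-zero.
lucky_rewards = stuck_rewards.copy()
lucky_rewards[0] = -150.0
lucky_grad = gradient_estimate(noise, lucky_rewards, noise_std)

print(np.abs(stuck_grad).max(), np.abs(lucky_grad).max())
```

The first value printed is zero: identical returns carry no information about which perturbation was better, so the parameters never move.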

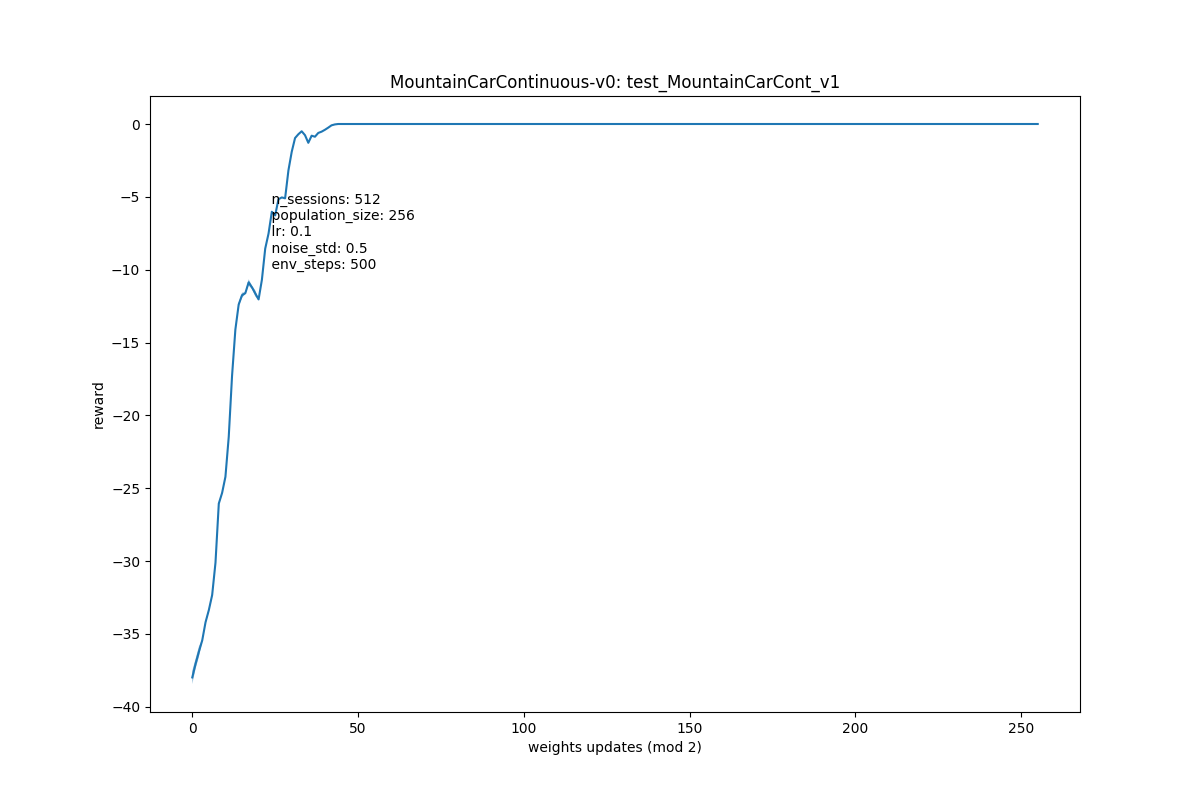

In the continuous env, the main problem is the lack of exploration. The agent quickly learns (sooner than it could learn to climb the hill) that the best strategy is to stand still and collect 0 reward, which is much higher than the reward it gets when moving.

Possible solution: novelty search. Candidate novelty functions include velocity, velocity * x_coord, or x_coord at the end of the episode. Reward shaping may improve convergence for DQN/CEM methods, but in this case it does not produce better results.

Not solved yet. More iterations are needed.
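A rough sketch of what a novelty score could look like, assuming the behavior of an episode is summarized by its final x-coordinate and novelty is the mean distance to the k nearest behaviors seen so far (the usual novelty search formulation). Nothing like this exists in the repository yet; `novelty` and the archive values are hypothetical.

```python
import numpy as np


def novelty(behavior, archive, k=10):
    """Mean distance from `behavior` to its k nearest neighbours in `archive`."""
    archive = np.asarray(archive, dtype=np.float64)
    if archive.size == 0:
        return 0.0
    distances = np.sort(np.abs(archive - behavior))
    return float(distances[:k].mean())


# Hypothetical final x-coordinates of earlier episodes: the car barely moves.
archive = [-0.52, -0.50, -0.49, -0.51, -0.48]

stuck = novelty(-0.50, archive, k=3)    # ends where everyone else ends
explorer = novelty(0.10, archive, k=3)  # ends far up the hill

print(f"stuck: {stuck:.3f}, explorer: {explorer:.3f}")
```

The novelty score would then replace, or be mixed with, the episode return before fitness shaping, so that policies ending in unusual places are favored even when their reward is lower.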

Evolution Strategies as a Scalable Alternative to Reinforcement Learning (Tim Salimans, Jonathan Ho, Xi Chen, Szymon Sidor, Ilya Sutskever), arXiv:1703.03864, 2017