PyTorch implementation of "OOSTraj: Out-of-Sight Trajectory Prediction With Vision-Positioning Denoising" (CVPR 2024).

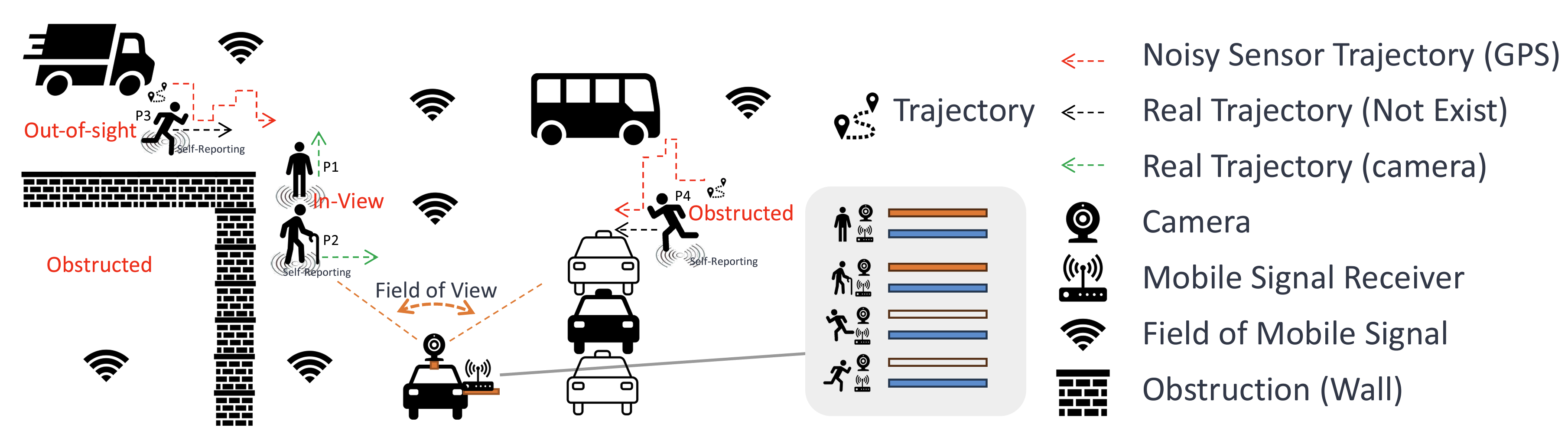

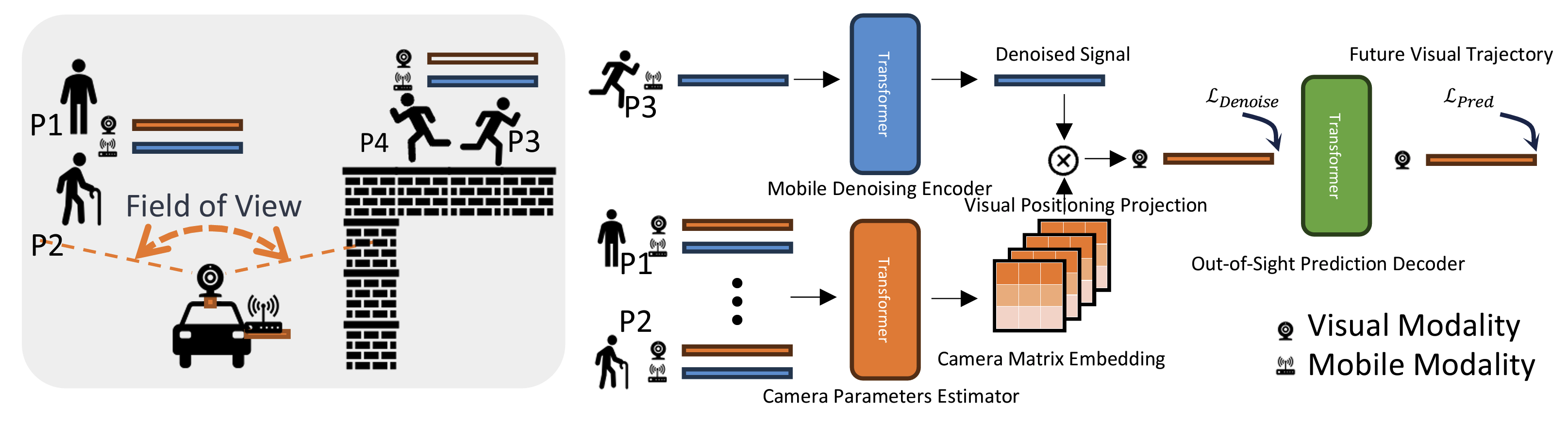

Trajectory prediction is fundamental in computer vision and autonomous driving, particularly for understanding pedestrian behavior and enabling proactive decision-making. Existing approaches in this field often assume precise and complete observational data, neglecting the challenges associated with out-of-view objects and the noise inherent in sensor data due to limited camera range, physical obstructions, and the absence of ground truth for denoised sensor data. Such oversights are critical safety concerns, as they can result in missing essential, non-visible objects. To bridge this gap, we present a novel method for out-of-sight trajectory prediction that leverages a vision-positioning technique. Our approach denoises noisy sensor observations in an unsupervised manner and precisely maps sensor-based trajectories of out-of-sight objects into visual trajectories. This method has demonstrated state-of-the-art performance in out-of-sight noisy sensor trajectory denoising and prediction on the Vi-Fi and JRDB datasets. By enhancing trajectory prediction accuracy and addressing the challenges of out-of-sight objects, our work significantly contributes to improving the safety and reliability of autonomous driving in complex environments. Our work represents the first initiative towards Out-Of-Sight Trajectory prediction (OOSTraj), setting a new benchmark for future research.

Processed Vi-Fi and JRDB datasets (Link to Google Drive)

Download the pkl files for the preprocessed datasets and place them at "JRDB/jrdb.pkl" and "vifi_dataset_gps/vifi_data.pkl". If needed, you can also access the original data from the Vi-Fi Dataset or JRDB.
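Before training, it can help to confirm that the files are in the expected locations and can be unpickled. The snippet below is a minimal sketch, not part of the repository: `describe_pickle` is a hypothetical helper, and it makes no assumption about the internal layout of the pkl files beyond reporting the top-level type. Unpickling may require the libraries used to create the files (for example NumPy or PyTorch) to be installed.

```python
import pickle
from pathlib import Path

# Paths taken from the download instructions above.
DATASET_PATHS = {
    "jrdb": Path("JRDB/jrdb.pkl"),
    "vifi": Path("vifi_dataset_gps/vifi_data.pkl"),
}


def describe_pickle(path):
    """Load a pickle file and summarize its top-level object.

    Hypothetical helper: the real structure of the dataset files is not
    documented here, so only generic information is reported.
    """
    with open(path, "rb") as f:
        obj = pickle.load(f)
    summary = {"type": type(obj).__name__}
    if isinstance(obj, dict):
        # Show at most the first ten keys to keep the output short.
        summary["keys"] = list(obj.keys())[:10]
    elif hasattr(obj, "__len__"):
        summary["length"] = len(obj)
    return summary


if __name__ == "__main__":
    for name, path in DATASET_PATHS.items():
        if path.exists():
            print(name, describe_pickle(path))
        else:
            print(f"{name}: missing, expected at {path}")
```

Only load pickle files from a source you trust, since unpickling can execute arbitrary code.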

python train.py train --model VisionPosition --gpus 0 --phase 2 --dataset vifi --dec_model Transformer --learning_rate 0.001
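If you want to launch the same run from a script, the command can be assembled programmatically. This is a sketch under stated assumptions: `build_train_command` is a hypothetical wrapper, the defaults simply mirror the command above, and no flag values other than the ones shown there are documented here, so any other value is untested.

```python
import shlex


def build_train_command(
    model="VisionPosition",
    gpus="0",
    phase=2,
    dataset="vifi",
    dec_model="Transformer",
    learning_rate=0.001,
):
    """Return the training command as an argument list.

    Hypothetical helper: defaults reproduce the documented command.
    """
    return [
        "python", "train.py", "train",
        "--model", str(model),
        "--gpus", str(gpus),
        "--phase", str(phase),
        "--dataset", str(dataset),
        "--dec_model", str(dec_model),
        "--learning_rate", str(learning_rate),
    ]


if __name__ == "__main__":
    cmd = build_train_command()
    # Print the command; pass `cmd` to subprocess.run(cmd, check=True)
    # from the repository root to actually start training.
    print(shlex.join(cmd))
```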

Training logs and printed accuracy results are saved under ./checkpoints/
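To find the output of the latest run, you can list the most recently modified files in that directory. The sketch below assumes nothing about file names or subfolder layout inside ./checkpoints/ (neither is documented here); `newest_files` is a hypothetical helper that simply sorts every file by modification time.

```python
from pathlib import Path


def newest_files(root="./checkpoints", limit=5):
    """Return up to `limit` files under `root`, most recently modified first."""
    root = Path(root)
    if not root.is_dir():
        # No training run has written output yet.
        return []
    files = [p for p in root.rglob("*") if p.is_file()]
    files.sort(key=lambda p: p.stat().st_mtime, reverse=True)
    return files[:limit]


if __name__ == "__main__":
    for path in newest_files():
        print(path)
```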

@inproceedings{Zhang2024OOSTraj,
  title={OOSTraj: Out-of-Sight Trajectory Prediction With Vision-Positioning Denoising},
  author={Haichao Zhang and Yi Xu and Hongsheng Lu and Takayuki Shimizu and Yun Fu},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2024}
}

The majority of OOSTraj is licensed under the Apache License 2.0.