TensorFlow implementation of ContextDesc for the CVPR'19 paper (oral) "ContextDesc: Local Descriptor Augmentation with Cross-Modality Context", by Zixin Luo, Tianwei Shen, Lei Zhou, Jiahui Zhang, Yao Yao, Shiwei Li, Tian Fang and Long Quan.

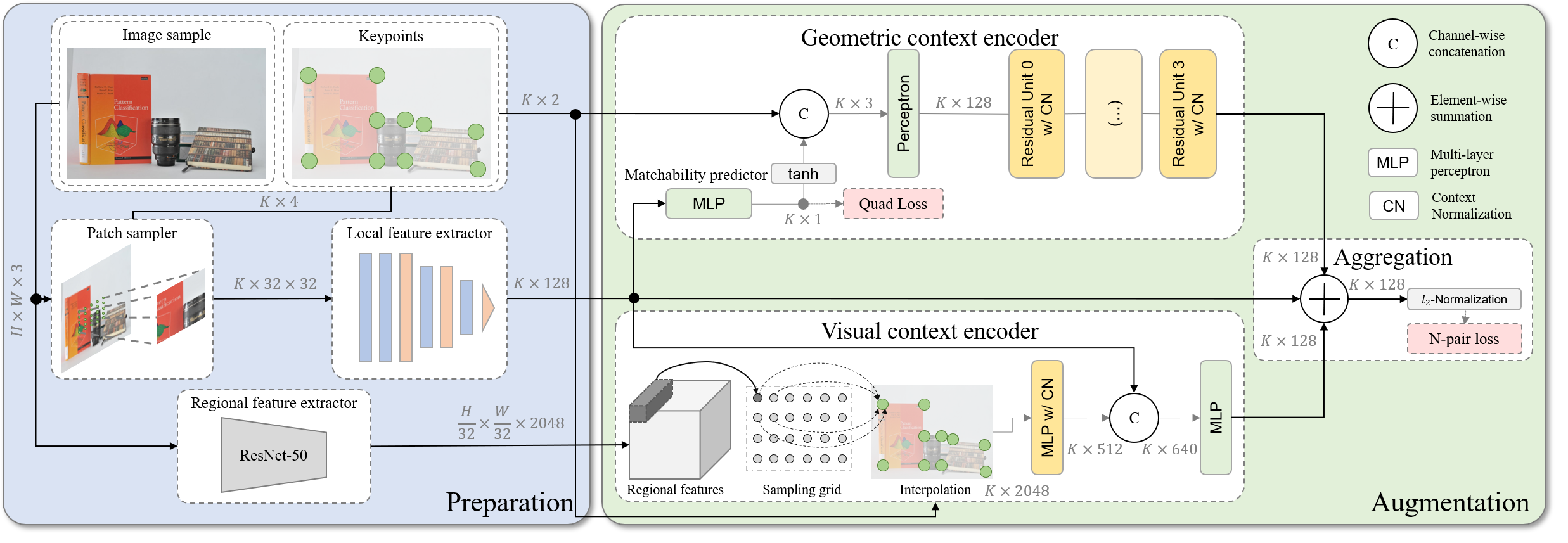

This paper focuses on augmenting off-the-shelf local feature descriptors with two types of context: visual context from a high-level image representation, and geometric context from the keypoint distribution. If you find this project useful, please cite:

    @article{luo2019contextdesc,
      title={ContextDesc: Local Descriptor Augmentation with Cross-Modality Context},
      author={Luo, Zixin and Shen, Tianwei and Zhou, Lei and Zhang, Jiahui and Yao, Yao and Li, Shiwei and Fang, Tian and Quan, Long},
      journal={Computer Vision and Pattern Recognition (CVPR)},
      year={2019}
    }

Please use Python 2.7 and install NumPy, OpenCV (3.4.2) and TensorFlow (1.12.0). To run the image matching example, you may also need opencv_contrib to enable SIFT.
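Since the code targets specific versions, it can help to check the environment before running anything. The snippet below is a minimal sketch, not part of the repository: the helper names (`parse_version`, `installed_versions`, `find_mismatches`) are hypothetical, and treating the listed versions as exact requirements is an assumption based on the sentence above.

```python
import importlib
import re
import sys

# Versions named in this README; treating them as exact pins is an assumption.
REQUIRED = {"python": (2, 7), "cv2": (3, 4, 2), "tensorflow": (1, 12, 0)}


def parse_version(text):
    """Turn '3.4.2' or '1.12.0-rc1' into a tuple of ints such as (3, 4, 2)."""
    parts = []
    for piece in text.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def installed_versions():
    """Collect version strings for the interpreter and any importable packages."""
    versions = {"python": "%d.%d" % sys.version_info[:2]}
    for name in ("cv2", "tensorflow"):
        try:
            module = importlib.import_module(name)
        except ImportError:
            continue  # a missing package is reported later as a mismatch
        versions[name] = getattr(module, "__version__", None)
    return versions


def find_mismatches(installed, required=REQUIRED):
    """Return {name: (found, wanted)} for each package that is missing or differs."""
    mismatches = {}
    for name, wanted in required.items():
        found = installed.get(name)
        if found is None or parse_version(found)[:len(wanted)] != wanted:
            mismatches[name] = (found, ".".join(str(n) for n in wanted))
    return mismatches
```

Calling `find_mismatches(installed_versions())` returns an empty dict when the environment matches the versions listed above, and otherwise maps each offending package to the found and expected version strings.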

A ContextDesc model comprises three submodels: a raw local feature descriptor (including a matchability predictor), a regional feature extractor and a feature augmentation model. We temporarily provide the models in TensorFlow Protobuf format for simplicity.

| Model | Local | Regional | Augmentation | Description |
|---|---|---|---|---|
| contextdesc-base | Link | Link | Link | Use original GeoDesc [1] (ECCV'18) as the base local model. |
| contextdesc-sa-npair | Link | - | Link | (Better) Retrain GeoDesc with the proposed scale-aware N-pair loss as the base local model. |
| contextdesc-e2e | Link | - | Link | (Best performance) End-to-end train local and augmentation models. |

Part of the training data is released in GL3D. Please also cite MIRorR [2] if you find this dataset useful for your research.

To get started, clone the repo and download the pretrained model:

    git clone https://github.com/lzx551402/contextdesc.git
    cd contextdesc/models
    wget http://home.cse.ust.hk/~zluoag/data/contextdesc-e2e.pb
    wget http://home.cse.ust.hk/~zluoag/data/contextdesc-e2e-aug.pb
    wget http://home.cse.ust.hk/~zluoag/data/retrieval_resnet50.pb

Then simply run:

    cd contextdesc/examples
    python image_matching.py

The matching results from SIFT (top), the base local descriptor (middle) and the augmented descriptor (bottom) will be displayed. Run `python image_matching.py --h` to view more options and test on your own images.
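For readers who want to compare descriptor sets outside the example script, the following is a minimal sketch of mutual nearest-neighbour matching with NumPy. It is an illustration only and not necessarily the matcher used in `image_matching.py`; the function name `mutual_nn_match` and the choice of squared L2 distance are assumptions.

```python
import numpy as np


def mutual_nn_match(desc_a, desc_b):
    """Return an (M, 2) array of index pairs (i, j) that are mutual nearest neighbours.

    desc_a has shape (Na, D) and desc_b has shape (Nb, D).
    """
    desc_a = np.asarray(desc_a, dtype=np.float64)
    desc_b = np.asarray(desc_b, dtype=np.float64)
    # Squared L2 distance between every row of A and every row of B, shape (Na, Nb).
    dist = (np.sum(desc_a ** 2, axis=1)[:, None]
            + np.sum(desc_b ** 2, axis=1)[None, :]
            - 2.0 * desc_a.dot(desc_b.T))
    nn_ab = np.argmin(dist, axis=1)  # closest row of B for each row of A
    nn_ba = np.argmin(dist, axis=0)  # closest row of A for each row of B
    idx_a = np.arange(desc_a.shape[0])
    keep = nn_ba[nn_ab] == idx_a     # keep a pair only if the choice is reciprocal
    return np.stack([idx_a[keep], nn_ab[keep]], axis=1)
```

The reciprocity check discards one-sided matches, which is a common way to reduce false positives before any geometric verification.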

Download HPSequences (full image sequences of HPatches [3] and their corresponding homographies).
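The homographies provide ground truth for checking whether a match is geometrically correct. The sketch below shows one way to do that, under stated assumptions: each homography is taken to be a 3x3 matrix mapping reference-image pixel coordinates to target-image pixel coordinates, and the 3-pixel threshold and the function names (`warp_points`, `correct_matches`) are hypothetical choices rather than the benchmark's official protocol.

```python
import numpy as np


def warp_points(points, homography):
    """Apply a 3x3 homography to an (N, 2) array of (x, y) points."""
    pts = np.asarray(points, dtype=np.float64)
    hom = np.asarray(homography, dtype=np.float64)
    # Lift to homogeneous coordinates, transform, then divide by the last coordinate.
    lifted = np.hstack([pts, np.ones((pts.shape[0], 1))])
    warped = lifted.dot(hom.T)
    return warped[:, :2] / warped[:, 2:3]


def correct_matches(kpts_ref, kpts_tgt, homography, threshold=3.0):
    """Flag matches whose reprojection error is within `threshold` pixels.

    kpts_ref[i] and kpts_tgt[i] are the two keypoints of the i-th match.
    The default threshold is an assumed value, not one taken from the benchmark.
    """
    projected = warp_points(kpts_ref, homography)
    error = np.linalg.norm(projected - np.asarray(kpts_tgt, dtype=np.float64), axis=1)
    return error <= threshold
```

The fraction of `True` entries gives a simple match-precision figure for an image pair.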

[1] GeoDesc: Learning Local Descriptors by Integrating Geometry Constraints, Zixin Luo, Tianwei Shen, Lei Zhou, Siyu Zhu, Runze Zhang, Yao Yao, Tian Fang, Long Quan, ECCV 2018.

[2] Matchable Image Retrieval by Learning from Surface Reconstruction, Tianwei Shen, Zixin Luo, Lei Zhou, Runze Zhang, Siyu Zhu, Tian Fang, Long Quan, ACCV 2018.

[3] HPatches: A benchmark and evaluation of handcrafted and learned local descriptors, Vassileios Balntas*, Karel Lenc*, Andrea Vedaldi and Krystian Mikolajczyk, CVPR 2017.