This repo contains the implementation of my master's thesis. We tried to improve the visual fidelity of 3D reconstruction results with a GAN, hence the name "adversarial shape reconstruction". Check out the presentation slides for details.

GAN-based 3D generation methods can produce photo-realistic images that are hard to distinguish from images of real objects, but they are not conditioned on existing objects.

3D reconstruction, by contrast, recovers existing objects with high geometric accuracy, but the results are often noisy and do not look photo-realistic to a human viewer.

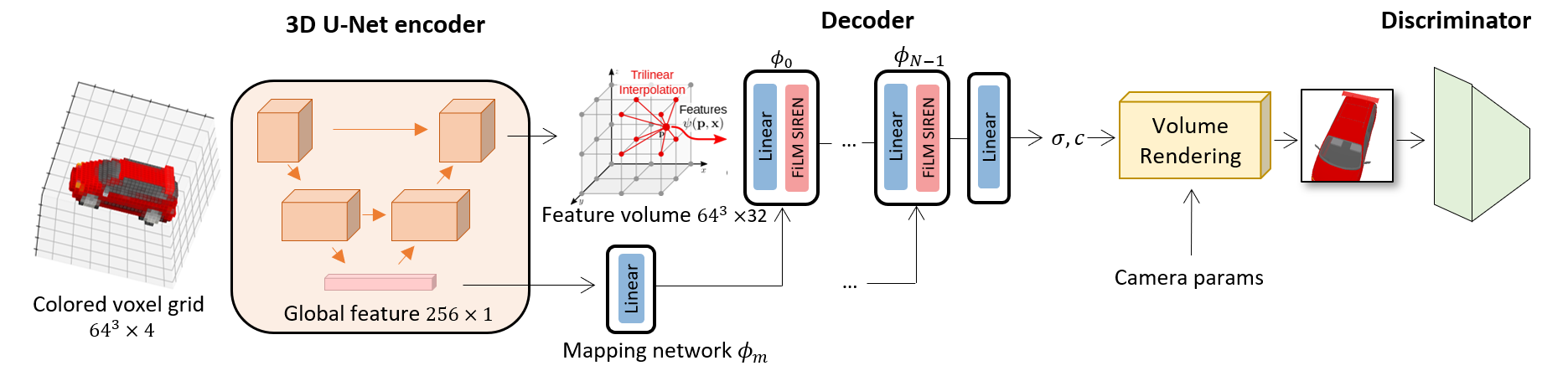

We want the best of both worlds: we improve the visual fidelity of 3D reconstruction results (e.g., point clouds or voxel grids) with an adversarial loss. We chose NeRF / neural fields as the 3D representation because they can render photo-realistic, high-resolution images.

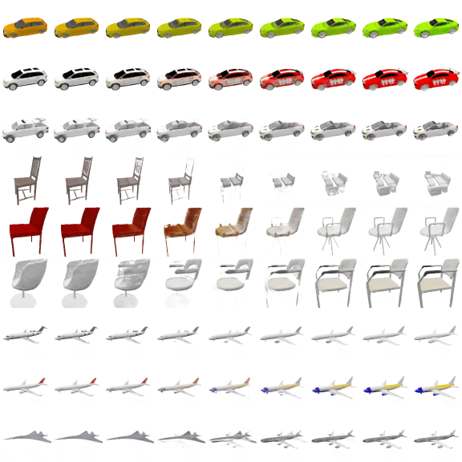

The last two rows show rendering results of our method:

Our method produces much smoother results than the input geometry:

In the following video, the first, third, and fifth rows show images rendered from the input geometry; the second, fourth, and sixth rows show the higher-quality images rendered by our method.

Our method is also capable of interpolation in the latent space.
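As a rough illustration of what latent-space interpolation means here, the sketch below blends two latent codes linearly and returns the intermediate codes, each of which would then be rendered by the model. It is an assumption made for illustration: the function name `lerp_latents` is hypothetical, the codes are plain Python lists rather than tensors, and the repo may use a different interpolation scheme.

```python
def lerp_latents(z_start, z_end, num_steps):
    """Linearly interpolate between two latent codes (hypothetical helper).

    Returns `num_steps` codes, starting at `z_start` and ending at `z_end`.
    """
    if len(z_start) != len(z_end):
        raise ValueError("latent codes must have the same length")
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")

    codes = []
    for i in range(num_steps):
        t = i / (num_steps - 1)  # blend weight, from 0.0 to 1.0
        codes.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return codes


# Toy 2-dimensional latent codes; real codes would be much larger.
steps = lerp_latents([0.0, 2.0], [1.0, 0.0], num_steps=3)
```

Each entry of `steps` is one intermediate code; rendering them in order would give a smooth transition between the two shapes.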

conda create --name VIRTENV python=3.9

conda activate VIRTENV

conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch

pip install -r requirements.txt

./prepare_data.sh

Then, update the "dataset":"path" field in configs/special.py to point to your ./data/ShapeNetCar directory.

python train.py -o test -p 1

- Default hyperparameters: default.py

- Frequently changed hyperparameters: special.py

- What does each hyperparameter mean?

The final hyperparameters are the defaults in configs/thousand/default.py, overridden by configs/thousand/special.py, and then overridden by parser.config (if --config is specified).
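The layering described above can be sketched as a recursive dictionary merge, applied in order of increasing priority. This is a minimal sketch, not the repo's actual code: it assumes the configs are nested dicts, and the helper `merge_config` and all keys and values other than "dataset" / "path" are hypothetical.

```python
from copy import deepcopy


def merge_config(base, override):
    """Return a new dict in which values from `override` replace those in `base`.

    Nested dicts are merged key by key; any other value is replaced outright.
    Neither input is modified.
    """
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Hypothetical stand-ins for default.py, special.py, and the --config file.
default_cfg = {"dataset": {"path": "./data", "batch_size": 8}, "lr": 1e-4}
special_cfg = {"dataset": {"path": "./data/ShapeNetCar"}}
cli_cfg = {"lr": 5e-5}

# Lowest priority first, highest priority last.
final_cfg = merge_config(merge_config(default_cfg, special_cfg), cli_cfg)
```

Keys that appear only in the lower-priority config (here `batch_size`) survive the merge, so special.py only needs to list the values that differ from the defaults.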

python train.py -o OUTPUT_DIR -p PRINT_FREQUENCY -s SAMPLING_IMGS_FREQUENCY

During training, the model writes rendered RGB and depth images of cars from the train/val/test sets to the OUTPUT_DIR/samples/ directory. You can also run inference on selected cars after training is done:

python inference.py CKPT_DIR --sampling_mode SUBSET_OF_CARS_TO_INFERENCE --video

python inference.py CKPT_DIR --sampling_mode SUBSET_OF_CARS_TO_INFERENCE --images

python misc/draw_loss.py PATH_EXP1 PATH_EXP2 ...