Official implementation of **Leverage Large Language Model-based Hierarchical Scene Graph Contrastive Learning for Multimodal Visual Navigation**.

Web demo 🤗 of HSG on Hugging Face Spaces: coming soon.

The repository contains:

- The 15K instruction-following samples used to fine-tune the model.

- The code for generating the data.

- The code for fine-tuning the model on RTX 3090 GPUs with Lit-LLaMA.

- The code for inference during navigation.

- [2024.05.15] The training code for HSG is released. 📌

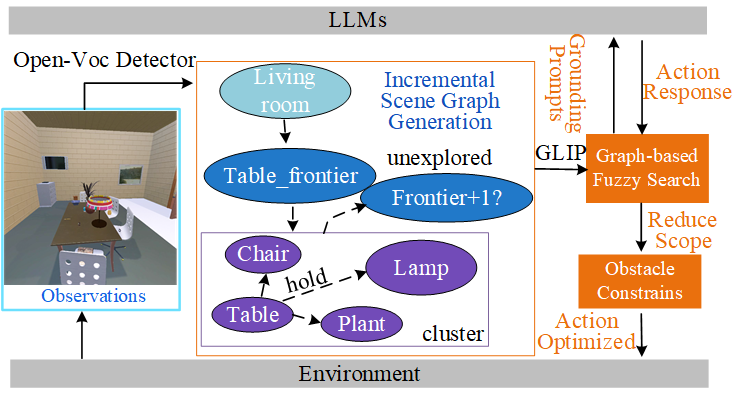

The pipeline of our embodied visual navigation framework. Overview of the HSG visual navigation framework: we collect environmental object categories from the current observations using an open-vocabulary detector. We then design a scene text prompt that includes descriptions, object relationships, and action prompts. The LLM outputs normalized object node positions, which are then passed to a graph fuzzy search to approach nearby frontiers. After each LLM inference loop, the prompt is updated based on the historical observations and actions, which optimizes action selection by updating the graph structure.
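The step from normalized node positions to a frontier can be illustrated with a small sketch. It is not the repository's code: it assumes the LLM returns an (x, y) pair normalized to [0, 1] over the map, and it replaces the graph fuzzy search with a plain nearest-frontier lookup. `denormalize`, `nearest_frontier`, and the 10 x 10 map are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def denormalize(pos: Point, map_width: float, map_height: float) -> Point:
    """Map an LLM-predicted position in [0, 1] x [0, 1] to map coordinates."""
    x, y = pos
    # Clamp so slightly out-of-range LLM outputs still land on the map.
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    return x * map_width, y * map_height


def nearest_frontier(pred: Point, frontiers: List[Point],
                     map_width: float, map_height: float) -> Point:
    """Hypothetical helper: pick the frontier closest to the predicted node position."""
    if not frontiers:
        raise ValueError("no frontiers available")
    target = denormalize(pred, map_width, map_height)
    # Euclidean distance stands in for the paper's graph fuzzy search.
    return min(frontiers, key=lambda f: math.dist(f, target))


if __name__ == "__main__":
    frontiers = [(2.0, 3.0), (8.0, 1.0), (5.0, 9.0)]
    # The LLM predicts a node in the lower-right part of a 10 x 10 map.
    print(nearest_frontier((0.75, 0.2), frontiers, 10.0, 10.0))
```

A real fuzzy search would also tolerate noisy or partially matching node labels; the distance-only version just shows where the normalized output plugs in.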

Here is a from-scratch script for HSG.

```bash
# Install Lit-LLaMA
conda create -n hsg python=3.10
conda activate hsg
git clone https://github.com/zhoukang12321/HSG_VN.git
cd HSG_VN
pip install -r requirements.txt

# If you want to utilize more than one GPU
pip install deepspeed
```

If you have problems with the installation, you can follow these steps:

```bash
conda create -n hsg python=3.10
conda activate hsg
git clone https://github.com/zhoukang12321/HSG_VN
cd HSG_VN
pip install torch==2.0.0+cu117 torchvision==0.15.1+cu117 torchaudio==2.0.1 --index-url https://download.pytorch.org/whl/cu117
pip install sentencepiece
pip install tqdm
pip install numpy
pip install "jsonargparse[signatures]"
pip install bitsandbytes
pip install datasets
pip install zstandard
pip install lightning==2.1.0.dev0
pip install deepspeed
```

```bash
# Install Detic
# Leave the HSG_VN directory first
cd ..
git clone https://github.com/facebookresearch/detectron2.git
cd detectron2
pip install -e .
cd ..
git clone https://github.com/facebookresearch/Detic.git --recurse-submodules
cd Detic
pip install -r requirements.txt
```

Note: If you have any problems with the installation, you can refer to Detic_INSTALL.md.

You also need to download the appropriate pre-trained Detic model and put its weights into the `models` folder.

Once the installation is complete, copy the files from Detic into the HSG_VN directory.
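The copy step can be scripted. This is a minimal sketch, assuming `Detic/` and `HSG_VN/` sit side by side and that the folders to copy are the Detic ones that appear in the HSG_VN tree (`configs`, `datasets`, `detic`, `models`, `third_party`, `tools`); that list and the helper name `copy_detic_into_hsg` are our assumptions, not part of the repository. Note that merging overwrites files with the same name, so trim the list if HSG_VN already has its own versions.

```python
import shutil
from pathlib import Path

# Assumption: the Detic folders that show up in the HSG_VN directory tree.
DETIC_ITEMS = ["configs", "datasets", "detic", "models", "third_party", "tools"]


def copy_detic_into_hsg(detic_root: Path, hsg_root: Path, items=DETIC_ITEMS) -> list:
    """Copy the listed Detic entries into HSG_VN, merging with existing folders."""
    copied = []
    for name in items:
        src = detic_root / name
        if not src.exists():
            print(f"skip {name}: not found in {detic_root}")
            continue
        dst = hsg_root / name
        if src.is_dir():
            # dirs_exist_ok=True (Python 3.8+) merges into an existing directory
            # and overwrites files that have the same relative path.
            shutil.copytree(src, dst, dirs_exist_ok=True)
        else:
            shutil.copy2(src, dst)
        copied.append(name)
    return copied


if __name__ == "__main__":
    # Run from the parent directory that contains both Detic/ and HSG_VN/.
    print(copy_detic_into_hsg(Path("Detic"), Path("HSG_VN")))
```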

The HSG_VN directory should then look like this:

```
HSG_VN
├── checkpoints
│   ├── lit-llama
│   ├── llama
├── configs
├── create_dataset
├── data
├── datasets
├── detic
├── docs
├── evaluate
├── finetune
├── generate
├── howto
├── lit-llama
├── models
│   ├── Detic_LCOCOI21k_CLIP_SwinB_896b32_4x_ft4x_max-size.pth
├── pretrain
├── quantize
├── scripts
├── tests
├── third_party
│   ├── CenterNet2
│   ├── Deformable-DETR
├── tools
......
```
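A quick sanity check of the layout can save a failed run later. The sketch below is a hypothetical helper, not part of the repository; the required paths are copied from the directory tree above, and you can extend the list for your setup.

```python
from pathlib import Path

# Entries taken from the HSG_VN directory tree; extend as needed.
REQUIRED = [
    "checkpoints/lit-llama",
    "checkpoints/llama",
    "detic",
    "models/Detic_LCOCOI21k_CLIP_SwinB_896b32_4x_ft4x_max-size.pth",
    "third_party/CenterNet2",
    "third_party/Deformable-DETR",
]


def missing_paths(root, required=REQUIRED):
    """Return the required entries that do not exist under root."""
    root = Path(root)
    return [p for p in required if not (root / p).exists()]


if __name__ == "__main__":
    missing = missing_paths("HSG_VN")
    print("layout OK" if not missing else f"missing: {missing}")
```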

If you want to make your own dataset, please install the OpenAI Python package and AI2-THOR.

```bash
# Install OpenAI API
pip install openai
# If there is a communication error, please try
pip install urllib3==1.25.11

# Install AI2THOR
pip install ai2thor
# If this is your first installation, run the following
# to download the necessary scene resources
python prepare_thor.py
```

For more details on the installation and usage of AI2-THOR, please visit AI2-THOR.

`alpaca_15k_instruction.json` contains the 15K instruction-following samples we used to fine-tune the LLaMA-7B model.

The format is the same as Alpaca. Each dictionary contains the following fields:

- `instruction`: `str`, the instruction given by the user, e.g., "Please give me a cup of coffee."
- `input`: `str`, the categories of objects contained in the scene.
- `output`: `str`, the step-by-step actions for the instruction, as generated by `gpt-3.5-turbo-0301`.
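To make the format concrete, here is a minimal loader and validator, assuming the file is a JSON list of dictionaries as in Alpaca. The record below is invented for illustration and is not taken from the dataset; `validate_records` and `load_dataset` are hypothetical helpers.

```python
import json

REQUIRED_FIELDS = ("instruction", "input", "output")

# Illustrative record in the format described above (made up, not from the dataset).
example = {
    "instruction": "Please give me a cup of coffee.",
    "input": "[Cabinet, CoffeeMachine, CounterTop, Fridge, Mug, Sink]",
    "output": "1. Find a mug.\n2. Go to the coffee machine.\n"
              "3. Put the mug in the coffee machine.\n4. Turn on the coffee machine.",
}


def validate_records(records):
    """Check that every record has string instruction/input/output fields."""
    for i, rec in enumerate(records):
        for field in REQUIRED_FIELDS:
            if not isinstance(rec.get(field), str):
                raise ValueError(f"record {i}: field {field!r} missing or not a str")
    return len(records)


def load_dataset(path):
    """Load an Alpaca-style JSON list such as alpaca_15k_instruction.json."""
    with open(path, encoding="utf-8") as f:
        records = json.load(f)
    validate_records(records)
    return records
```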

As for the prompts, we used the prompts proposed by Alpaca directly.

The Alpaca prompt can also be modified slightly, for example:

```
Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request and list each step to finish the instruction.

### Instruction:
{instruction}

### Input:
{input}

### Response:
```

Training and inference use the same prompt.
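Filling the modified template is plain string formatting. This sketch assumes the object list is rendered as `[A, B, C]`, matching the `--input` example used later with `generate/adapter_robot.py`; `build_prompt` is a hypothetical name, not a function from the repository.

```python
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes the "
    "request and list each step to finish the instruction.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:"
)


def build_prompt(instruction: str, scene_objects: list) -> str:
    """Fill the modified Alpaca template with an instruction and scene objects."""
    objects = "[" + ", ".join(scene_objects) + "]"
    return PROMPT_TEMPLATE.format(instruction=instruction, input=objects)


print(build_prompt("Can you prepare me a sandwich?", ["Bread", "ButterKnife", "Plate"]))
```

During training the target response would be appended after `### Response:`; at inference the model continues from that point, which is why both stages must share the exact same template.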

This is an example of making a dataset in AI2THOR.

If you need to make your own dataset, the easiest way is to modify the way the object list is generated.

```bash
# Create object list from AI2THOR scenes
cd create_dataset
python create_scene_obj_list.py
python create_json_data.py
python create_gpt_respond.py
python prase_json_2_alpaca.py
```

After running the scripts above, you will get `alpaca_15k_instruction.json`, which contains almost 15K instructions.
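Since the object list is the easiest part to customize, here is a minimal sketch of reading per-scene object categories from AI2-THOR. It uses the public `ai2thor` API (`Controller`, `reset`, `last_event.metadata["objects"]`, `objectType`), but it is our own illustration of the idea, not the contents of `create_scene_obj_list.py`; the function names are hypothetical.

```python
from typing import Dict, List


def scene_object_types(objects: List[Dict]) -> List[str]:
    """Return the sorted, de-duplicated objectType values from AI2-THOR object metadata."""
    return sorted({obj["objectType"] for obj in objects})


def collect_scene_objects(scenes: List[str]) -> Dict[str, List[str]]:
    """Launch AI2-THOR once and read the object categories of each scene."""
    # Imported lazily: requires `pip install ai2thor` and a machine that can run the simulator.
    from ai2thor.controller import Controller

    controller = Controller(scene=scenes[0])
    result = {}
    try:
        for scene in scenes:
            controller.reset(scene=scene)
            result[scene] = scene_object_types(controller.last_event.metadata["objects"])
    finally:
        controller.stop()
    return result
```

For example, `collect_scene_objects(["FloorPlan1", "FloorPlan201"])` would return one category list per scene, which can then be formatted as the `input` field of each record.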

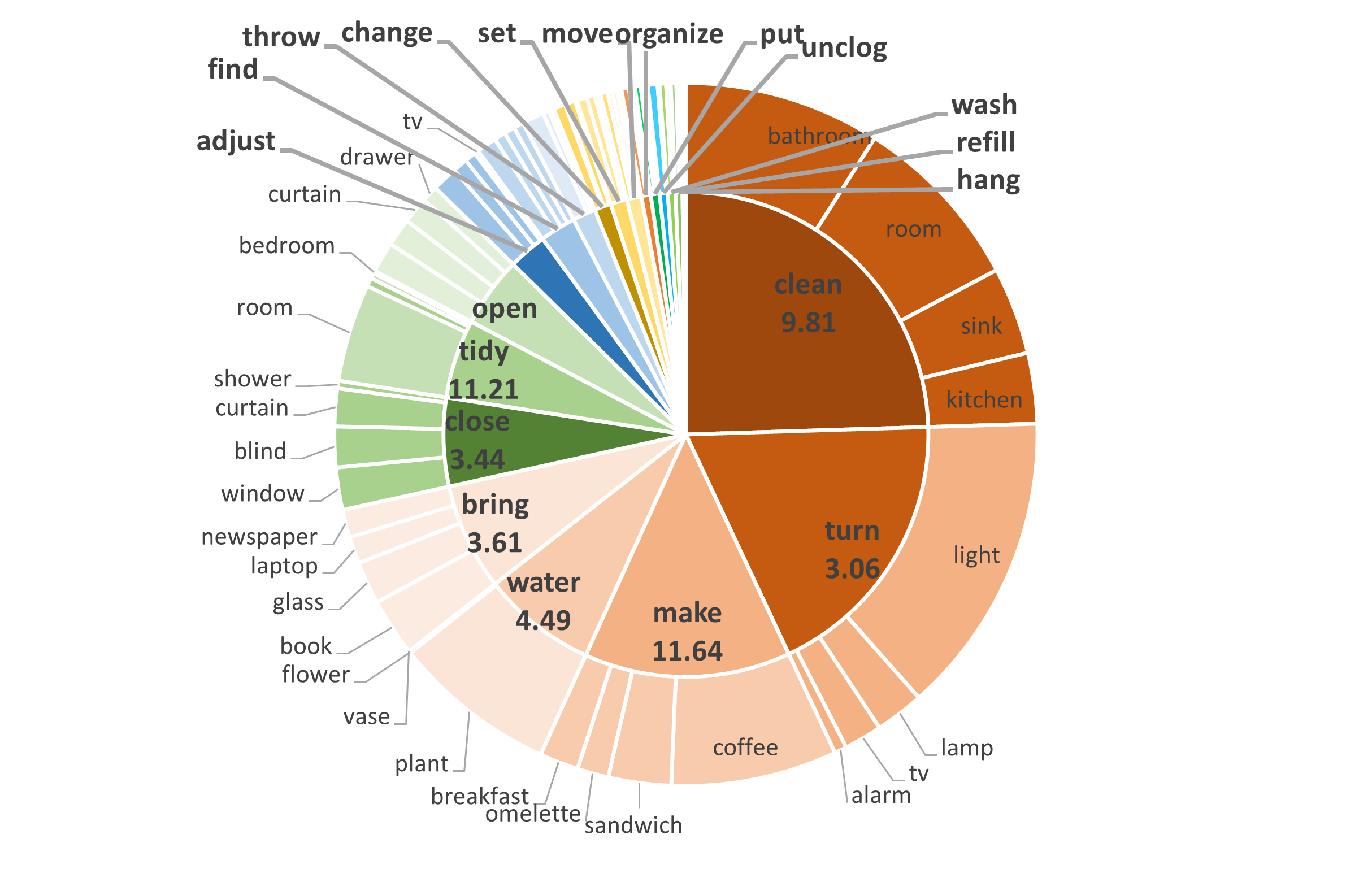

We computed preliminary statistics on the dataset and found that the instructions generated by GPT-3.5 are diverse and complex.

The figure above shows the diversity of our data: the inner circle is the root verb of each instruction, and the outer circle is its direct object.

We also count the average number of actions required by the top-7 instructions to illustrate their complexity.
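The README does not say how these statistics were computed. The sketch below is a crude approximation under stated assumptions: the root verb is taken as the first word after common politeness words (a real analysis would use a syntactic parser), and the number of actions is the number of numbered steps in `output`. All names are hypothetical.

```python
import re
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# A numbered step such as "1." or "2)" at the start of a line.
STEP_RE = re.compile(r"^\s*\d+[.)]", re.MULTILINE)
POLITE = {"please", "can", "could", "would", "will", "you", "help", "me"}


def count_steps(output: str) -> int:
    return len(STEP_RE.findall(output))


def root_verb(instruction: str) -> str:
    """Heuristic: first word that is not a politeness/filler word."""
    for word in re.findall(r"[A-Za-z']+", instruction.lower()):
        if word not in POLITE:
            return word
    return ""


def top_k_avg_steps(records: List[Dict], k: int = 7) -> List[Tuple[str, float]]:
    """Average step count for the k most frequent root verbs."""
    steps = defaultdict(list)
    for rec in records:
        steps[root_verb(rec["instruction"])].append(count_steps(rec["output"]))
    freq = Counter({verb: len(counts) for verb, counts in steps.items()})
    return [(verb, sum(steps[verb]) / len(steps[verb])) for verb, _ in freq.most_common(k)]
```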

We extract the HSG by random walks in the scenes, following our previous work: we build an initial scene graph and update it using a graph attention network (GAT).
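The GAT architecture used for HSG is not described here, so the following is only the standard single-head graph attention update (Veličković et al.) written in NumPy, to show what "update the scene graph with GAT" means mechanically. Feature sizes, the adjacency matrix, and parameter names are assumptions for illustration.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def elu(x):
    # expm1 on the clipped input avoids overflow warnings in the unused branch.
    return np.where(x > 0, x, np.expm1(np.minimum(x, 0.0)))


def gat_layer(h, adj, W, a, slope=0.2):
    """One single-head GAT update (standard formulation, not the authors' exact model).

    h:   (N, F)   node features, e.g. embeddings of object nodes
    adj: (N, N)   0/1 adjacency of the scene graph; self-loops are added here
    W:   (F, F2)  shared linear projection
    a:   (2*F2,)  attention vector
    Returns updated node features (N, F2) and attention weights (N, N).
    """
    n = h.shape[0]
    z = h @ W
    f2 = z.shape[1]
    # a^T [z_i || z_j] splits into a source term for i and a target term for j.
    scores = (z @ a[:f2])[:, None] + (z @ a[f2:])[None, :]
    scores = leaky_relu(scores, slope)
    # Attend only to neighbours and to the node itself.
    mask = (adj + np.eye(n)) > 0
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=1, keepdims=True)  # softmax stability
    alpha = np.exp(scores)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)
    return elu(alpha @ z), alpha
```

Each node's new feature is an attention-weighted mix of its neighbours' projected features; how HSG builds `adj` from its random walks is not specified in this README.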

We fine-tune the LLaMA-7B model on alpaca_15k_instruction.json according to the script provided by Lit-LLaMA.

Please request access to the pre-trained LLaMA from this form (official) or download the LLaMA-7B from Hugging Face (unofficial).

Then, put them in the checkpoints directory.

```
HSG_VN
├── checkpoints
│   ├── lit-llama
│   ├── llama
│   │   ├── 7B
│   │   │   ├── checklist.chk
│   │   │   ├── consolidated.00.pth
│   │   │   ├── params.json
│   │   ├── tokenizer.model
```

Convert the weights to the Lit-LLaMA format:

```bash
python scripts/convert_checkpoint.py --model_size 7B
```

Once converted, you should have a folder like this:

```
HSG_VN
├── checkpoints
│   ├── lit-llama
│   │   ├── 7B
│   │   │   ├── lit-llama.pth
│   │   ├── tokenizer.model
│   ├── llama
│   │   ├── 7B
│   │   │   ├── checklist.chk
│   │   │   ├── consolidated.00.pth
│   │   │   ├── params.json
│   │   ├── tokenizer.model
```

Generate the Alpaca-format instruction-tuning dataset:

```bash
python scripts/prepare_alpaca.py
```

Fine-tuning requires at least one GPU with ~24 GB of memory (e.g., an RTX 3090). If more GPUs are available, you can speed up training by setting the `devices` variable in the script.
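To see how `devices` and `micro_batch_size` interact, assume the script follows upstream Lit-LLaMA's `finetune/adapter.py`, where a global batch of 64 is split across devices and the remainder is covered by gradient accumulation. The value 64 is the upstream default as we understand it; check the script in this repository before relying on it. `accumulation_plan` is a hypothetical helper.

```python
def accumulation_plan(devices: int, micro_batch_size: int, global_batch_size: int = 64):
    """Per-device batch and gradient-accumulation steps (Lit-LLaMA-style split, assumed)."""
    per_device_batch = global_batch_size // devices
    accum_iters = per_device_batch // micro_batch_size
    if accum_iters < 1:
        raise ValueError("micro_batch_size is larger than the per-device batch")
    return per_device_batch, accum_iters


for devices, micro in [(2, 8), (8, 2)]:
    per_dev, accum = accumulation_plan(devices, micro)
    print(f"devices={devices}, micro_batch_size={micro}: "
          f"per-device batch {per_dev}, {accum} accumulation steps")
```

Under this assumption both settings keep the same effective batch (2 x 8 x 4 = 8 x 2 x 4 = 64); a smaller `micro_batch_size` mainly lowers per-GPU memory.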

Here are some parameter settings:

```python
# 2 GPUs
devices = 2
micro_batch_size = 8  # limited by GPU memory

# 8 GPUs
devices = 8
micro_batch_size = 2
```

```bash
# Use 2 GPUs
CUDA_VISIBLE_DEVICES=0,1 python finetune/adapter.py

# Use 8 GPUs
CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python finetune/adapter.py
```

You can test the fine-tuned model with your own instructions by running:

```bash
python generate/adapter_robot.py \
    --prompt "Can you prepare me a sandwich?" \
    --quantize llm.int8 \
    --max_new_tokens 512 \
    --input "[Cabinet, PaperTowelRoll, Cup, ButterKnife, Shelf, Bowl, Fridge, CounterTop, Drawer, Potato, DishSponge, Bread, Statue, Spoon, SoapBottle, ShelvingUnit, HousePlant, Sink, Fork, Spatula, GarbageCan, Plate, Pot, Blinds, Kettle, Lettuce, Stool, Vase, Tomato, Mug, StoveBurner, StoveKnob, CoffeeMachine, LightSwitch, Toaster, Microwave, Ladle, SaltShaker, Apple, PepperShaker]"
```

You can also take several scene images, save them to the `./input/rgb_img` directory, and use Detic to generate a list of scene objects.
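Turning per-image detections into one object list is a small aggregation step. The README does not show how `adapter_with_detic.py` does it, so this sketch assumes Detic has already produced `(class name, score)` pairs for each image, keeps those above a hypothetical 0.5 threshold, takes the union across images, and formats the result like the `--input` argument. The function names and threshold are our assumptions.

```python
from typing import Iterable, List, Tuple

Detection = Tuple[str, float]  # (class name, confidence score)


def merge_detections(per_image: Iterable[List[Detection]], min_score: float = 0.5) -> List[str]:
    """Union of confidently detected class names over several images, in first-seen order."""
    seen = {}  # dict keeps insertion order, so it doubles as an ordered set
    for detections in per_image:
        for name, score in detections:
            if score >= min_score and name not in seen:
                seen[name] = None
    return list(seen)


def to_input_arg(objects: List[str]) -> str:
    """Format names like the --input example: "[A, B, C]"."""
    return "[" + ", ".join(objects) + "]"
```

In the repository, Detic detection and the LLM call are combined in a single script: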

```bash
python generate/adapter_with_detic.py \
    --prompt "Can you open the computer?" \
    --max_new_tokens 512 \
    --img_path input/rgb_img
```

To get results on the validation set, you need to prepare the validation set first.

```bash
# Create the multi-modal validation set
python create_partial_vision_dataset.py
python create_vision_dataset.py
```

The default validation set instructions are stored in `alpaca_20_val_instruction.json`.

To create your own validation set, rerun the dataset generation process based on `alpaca_20_val.json`.

Once the validation set generation is complete, run:

```bash
# Set --navigation_strategy to one of the random strategies
python generate/adapter_detic_robot_eval_random.py --navigation_strategy <strategy>

# Set --navigation_strategy to one of the traversal strategies
python generate/adapter_detic_robot_eval_traversal.py --navigation_strategy <strategy>
```

This repo benefits from AI2THOR, LLaMA, Stanford Alpaca, Detic, and Lit-LLaMA. Thanks for their wonderful work.