This repository implements the student project "Visual Odometry with new Unprecedented Event Camera" by Yang Miao (yamiao@student.ethz.ch), supervised by Nico Messikommer, Daniel Gehrig and Prof. Davide Scaramuzza. To the best of our knowledge, this is the first feature tracking framework that utilizes events with absolute intensity measurements.

The code framework is based on EKLT. Under the agreement with RPG-UZH, the code is currently not publicly available. If you are interested in the code, please contact Yang Miao (yamiao@student.ethz.ch).

- "Implementation Details" is recommended reading: it summarizes the ideas of this project and provides the implementation details.

- The "scripts" folder contains Python scripts used for data preprocessing and conversion.

- "src/oculi_track" contains the C++ source code for feature tracking on the output of the Oculi sensor.

- Getting familiar with EKLT would be very helpful for understanding this repository.

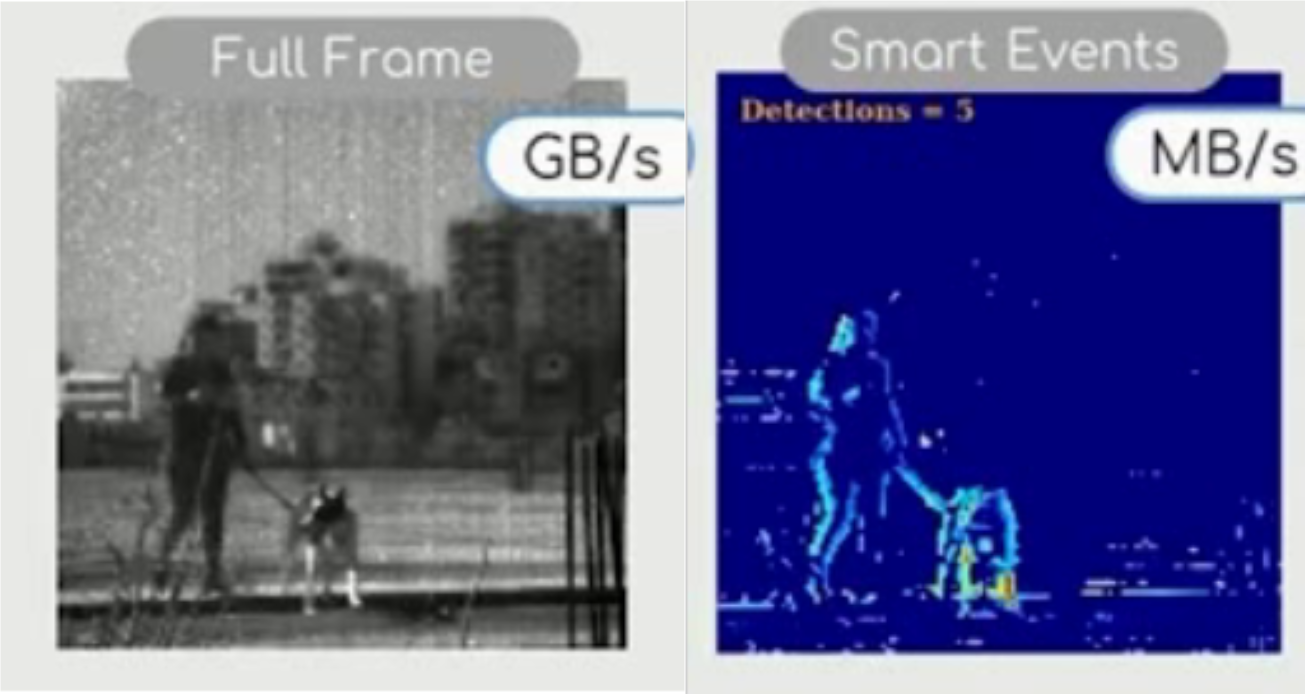

Unlike standard event cameras, the Oculi event camera outputs events that contain full intensity instead of polarity, as shown in the image below:

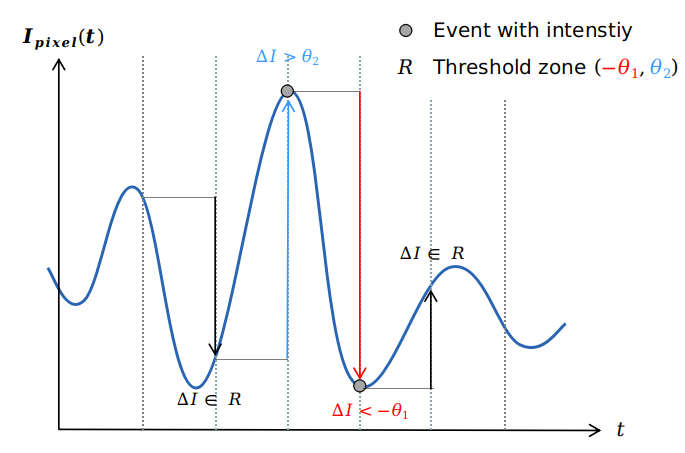

Fig. Full frame and event frame

The generation of Oculi events also differs from that of standard event cameras. As shown by the image above, an event is generated when the change in pixel intensity, compared with the intensity at the last sampling time, is larger than a certain threshold. In other words, the events are generated synchronously, but at a very high frame rate (up to 30,000 Hz).

Fig. Generation of Oculi events

Based on this unprecedented property, we design a novel feature tracking algorithm that outperforms existing algorithms that use only full-frame images.
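The thresholding rule described above can be sketched in a few lines of Python. This is only an illustration, not the sensor's actual logic: the function name `generate_events`, the `(x, y, intensity)` event layout and the choice to compare against the immediately preceding sample are all assumptions made for this example.

```python
def generate_events(previous, current, threshold):
    """Return a list of (x, y, intensity) events for one sampling step.

    A pixel emits an event when its intensity differs from the previous
    sample by more than `threshold` (assumed rule, see the text above).
    The event carries the full intensity, not a polarity.
    """
    events = []
    for y, row in enumerate(current):
        for x, value in enumerate(row):
            if abs(value - previous[y][x]) > threshold:
                events.append((x, y, value))
    return events


# Tiny 2x2 example: only the top-right pixel changes by more than 5.
previous = [[10, 10], [10, 10]]
current = [[10, 30], [12, 10]]
events = generate_events(previous, current, threshold=5)
```

Because every pixel is checked at the same sampling instant, the events of one step form a sparse "event frame", which is what the tracking terms below operate on.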

The scripts for visualization of Oculi full frames and event frames are "scripts/playback.py" and "scripts/playback_events.py", respectively.

playback.py -i $(Oculi full frame file)

playback_events.py -i $(Oculi event frame file)

"scripts/Oculi_to_rosbag.ipynb" converts the Oculi data to a rosbag containing full-frame and Oculi event-frame messages.

The Oculi events have two main properties: (i) they contain intensity instead of polarity, and (ii) they are generated by large intensity changes.

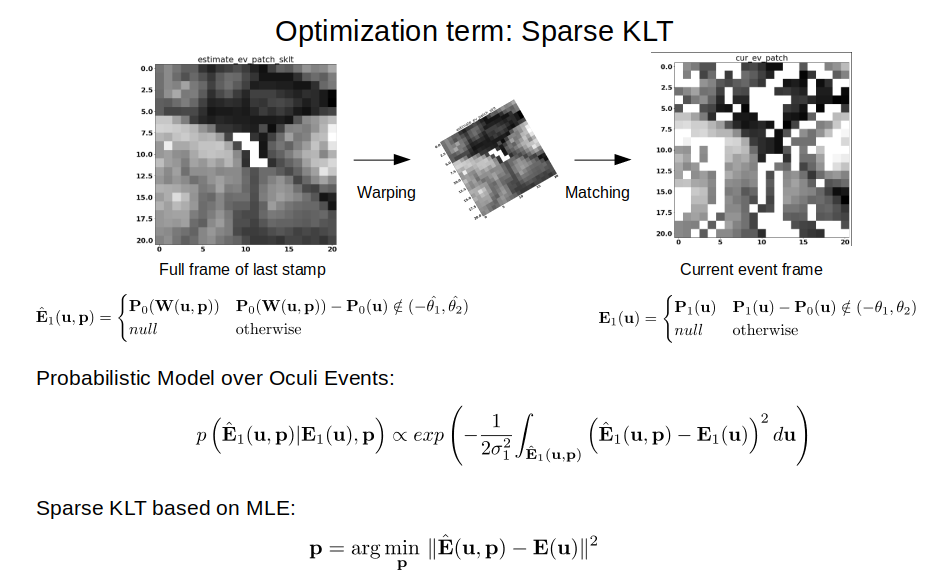

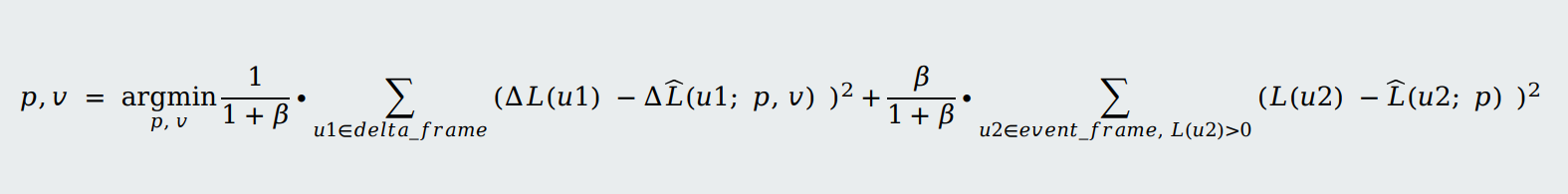

Based on these two properties, we propose an optimization-based patch matching method for feature tracking. The loss function of the optimization framework consists of a sparse KLT term and an Oculi EKLT term, which correspond to the two properties respectively.

As in KLT, our feature tracking algorithm assumes brightness constancy, temporal consistency and spatial coherence.

As the name "Sparse KLT" suggests, this term is computed only over the sparse pixels in the feature patch where Oculi events occur. We assume that the pixel intensity noise is Gaussian-distributed. The term matches the warped patch of the last frame to the currently observed event frame, and the warping parameter is optimized in the process. The process is shown in the image below:

Fig. Sparse KLT Term

Another property of the Oculi events is that they are generated by large intensity changes.

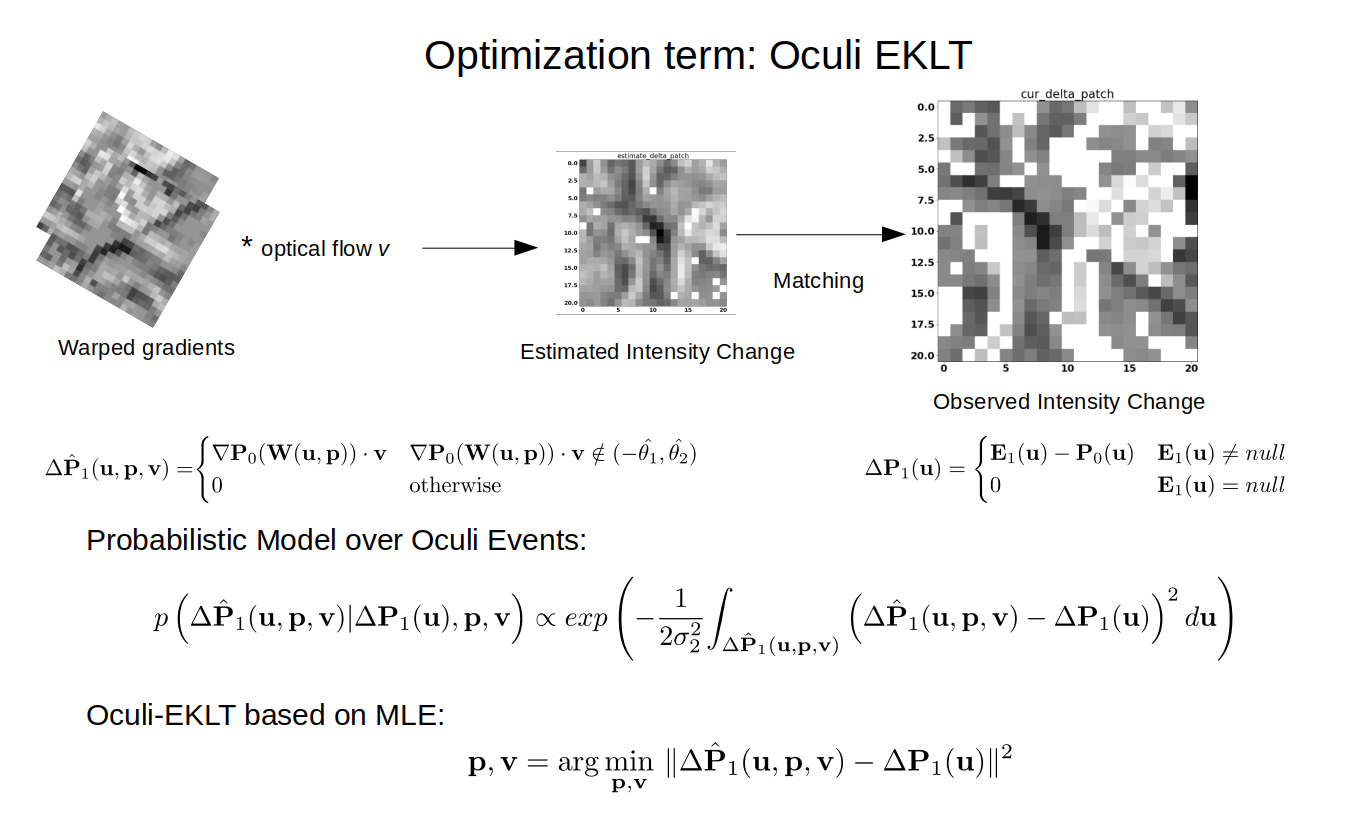

To exploit this property for feature matching, the gradient of the updated full frame is computed first. The gradient frame is then warped and multiplied by the optical flow to obtain the estimated intensity change, which is matched to the observed delta event frame (event frame - last updated frame). As before, Gaussian noise is assumed for the intensity change frame.
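To make the two residuals more concrete, here is a minimal Python sketch of both terms evaluated at the event pixels. It is not the C++ implementation in "src/oculi_track". It assumes a translation-only warp (the actual warping parameter may be richer), a flow expressed in pixels per sampling step, and the usual linearized brightness constancy sign (predicted change = -gradient · flow). The names `sample`, `sparse_klt_cost` and `event_term_cost` are hypothetical.

```python
def sample(img, x, y):
    """Bilinearly interpolate img (a list of rows) at real-valued (x, y)."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)  # clamp to the image borders
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = min(int(x), w - 2), min(int(y), h - 2)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x0 + 1]
    bottom = (1 - ax) * img[y0 + 1][x0] + ax * img[y0 + 1][x0 + 1]
    return (1 - ay) * top + ay * bottom


def sparse_klt_cost(last_frame, events, shift):
    """Squared error between the shifted last frame and the event intensities.

    Only pixels that fired an event contribute (hence "sparse"). Under the
    Gaussian noise assumption this is a plain least-squares cost.
    """
    px, py = shift
    return sum((sample(last_frame, x - px, y - py) - value) ** 2
               for x, y, value in events)


def event_term_cost(last_frame, events, shift, flow):
    """Squared error between predicted and observed intensity change."""
    px, py = shift
    vx, vy = flow
    cost = 0.0
    for x, y, value in events:
        xs, ys = x - px, y - py  # where this pixel came from in the last frame
        # Central-difference gradient of the last frame, taken at the warped position.
        gx = (sample(last_frame, xs + 1, ys) - sample(last_frame, xs - 1, ys)) / 2
        gy = (sample(last_frame, xs, ys + 1) - sample(last_frame, xs, ys - 1)) / 2
        predicted = -(gx * vx + gy * vy)    # assumed sign convention
        observed = value - last_frame[y][x]  # delta event frame at this pixel
        cost += (predicted - observed) ** 2
    return cost


# Synthetic check: an intensity ramp whose content moves one pixel to the right.
last = [[2.0 * c + 3.0 * r for c in range(6)] for r in range(6)]
events = [(x, y, last[y][x - 1]) for x, y in [(2, 2), (3, 2), (3, 3)]]
klt_cost = sparse_klt_cost(last, events, shift=(1.0, 0.0))
evt_cost = event_term_cost(last, events, shift=(1.0, 0.0), flow=(1.0, 0.0))
```

On this synthetic ramp both costs vanish at the true shift and flow, and they grow when either parameter is wrong. An optimizer would minimize such costs over the warp and flow parameters.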

The process is shown in the image below:

The two terms are then combined for joint optimization of the warping parameter W and the optical flow v:

Fig. Oculi events Term + Sparse KLT Term

Both simulated and real-world experiments are conducted to show the effectiveness of our algorithm.

The Sponza dataset (rendered from a 3D model) is used for simulation, as it provides ground-truth feature locations.

The Sponza model looks like this:

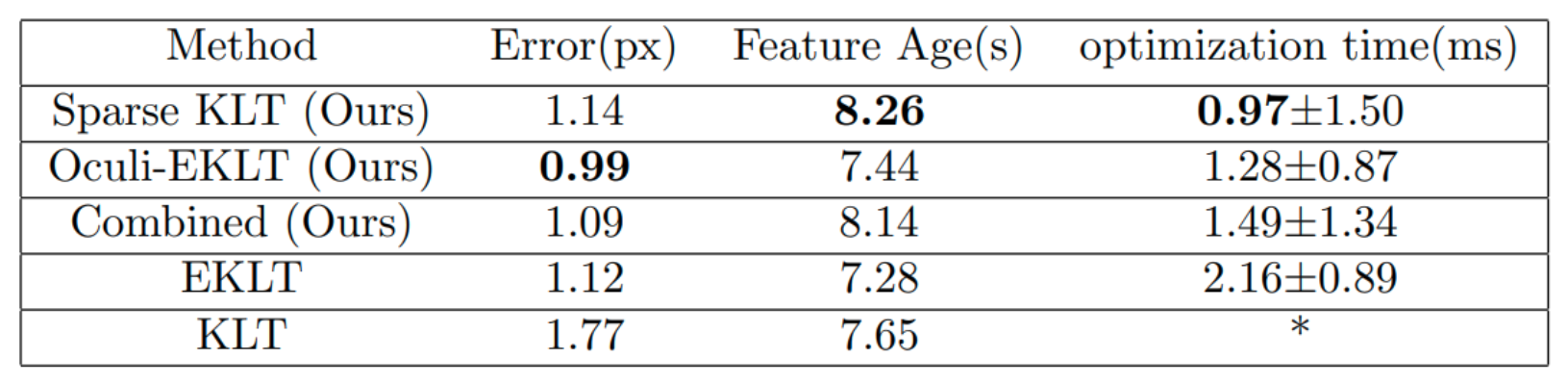

The results show that the sparse KLT term achieves a better feature age, while the event term achieves better tracking accuracy. Combining the two terms strikes a balance between feature age and tracking accuracy:

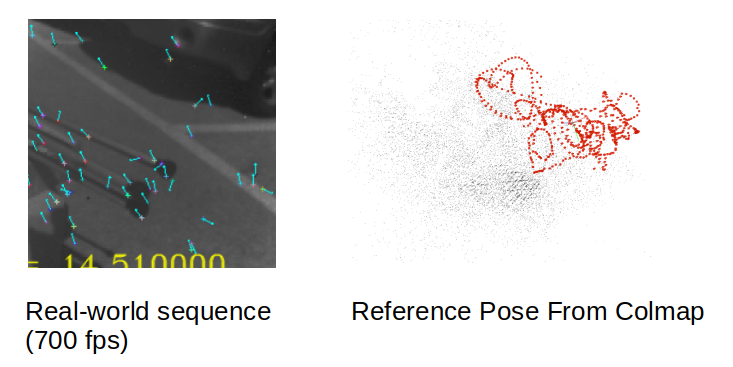

The real-world data was collected by the company Oculi. We use the trajectory from COLMAP to evaluate the feature tracking error:

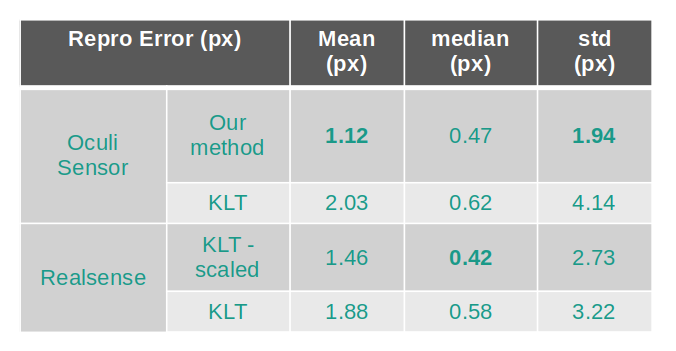

The results show that, on the Oculi sensor (127x127 px), our method achieves better tracking accuracy than the existing method, and that its accuracy on the Oculi sensor is comparable to that of the existing method on a much higher-resolution Realsense sensor (640x480 px):

As in EKLT, feature tracking on a rosbag can be launched with the command:

roslaunch oculi_track oculi_tracking.launch tracks_file_txt:=$(tracks file) v:=1