A PyTorch implementation of Google's MobileNet-V2 and of IGCV3.

Links to the original papers:

- Inverted Residuals and Linear Bottlenecks: Mobile Networks for Classification, Detection and Segmentation.
- IGCV3: Interleaved Low-Rank Group Convolutions for Efficient Deep Neural Networks. Ke Sun, Mingjie Li, Dong Liu, and Jingdong Wang. arXiv preprint arXiv:1806.00178 (2018)

This implementation is meant as an example of a common deep learning software architecture. It is simple and designed to be very modular. All of the components needed for training and visualization are included.

This project uses Python 3 and the following packages:

- pytorch 0.3.1
- numpy
- tqdm
- easydict
- matplotlib
- tensorboardX

- Install dependencies: `pip install -r requirements.txt`
- Prepare your data, then create a dataloader class such as `cifar10data.py` and `cifar100data.py`.
- Create a .json config file for your experiments. Use the given .json config files as a reference.
- Run: `python main.py config/<your-config-json-file>.json`
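As a rough illustration of the config step, the sketch below reads a .json file and exposes its keys as attributes. It uses only the standard library (`types.SimpleNamespace` stands in for easydict), and the key names `experiment_dir`, `batch_size`, `learning_rate` and `num_epochs` are hypothetical; the keys this project actually expects are the ones in the reference .json files.

```python
import json
import os
import tempfile
from types import SimpleNamespace


def load_config(path):
    """Read a JSON config file and expose its keys as attributes."""
    with open(path) as f:
        # object_hook converts every JSON object into a namespace, so
        # nested sections are reachable with dot access as well.
        return json.load(f, object_hook=lambda d: SimpleNamespace(**d))


# Hypothetical config contents, for illustration only.
example = {
    "experiment_dir": "my_experiment",
    "batch_size": 128,
    "learning_rate": 0.1,
    "num_epochs": 200,
}

# Write the example to a temporary file and load it back.
with tempfile.TemporaryDirectory() as tmp:
    config_path = os.path.join(tmp, "my_config.json")
    with open(config_path, "w") as f:
        json.dump(example, f)
    config = load_config(config_path)

print(config.experiment_dir, config.batch_size, config.learning_rate)
```

Keeping every hyperparameter in one file like this makes each experiment reproducible from its config alone.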

Due to limited computational power, I trained on the CIFAR-10 dataset as an example to verify correctness, and achieved a test top-1 accuracy of 90.9%.

TensorBoard is integrated into the project through the tensorboardX library, which proved very useful because PyTorch 0.3.1 has no official visualization library.

You can start it using:

`tensorboard --logdir experimenets/<config-name>/summaries`

These are the learning curves for the CIFAR-10 experiment.

The code is based on https://github.com/liangfu/mxnet-mobilenet-v2.

IGCV3: Interleaved Low-Rank Group Convolutions for Efficient Deep Neural Networks. Ke Sun, Mingjie Li, Dong Liu, and Jingdong Wang. arXiv preprint arXiv:1806.00178 (2018)

Interleaved Group Convolutions (IGCV1)

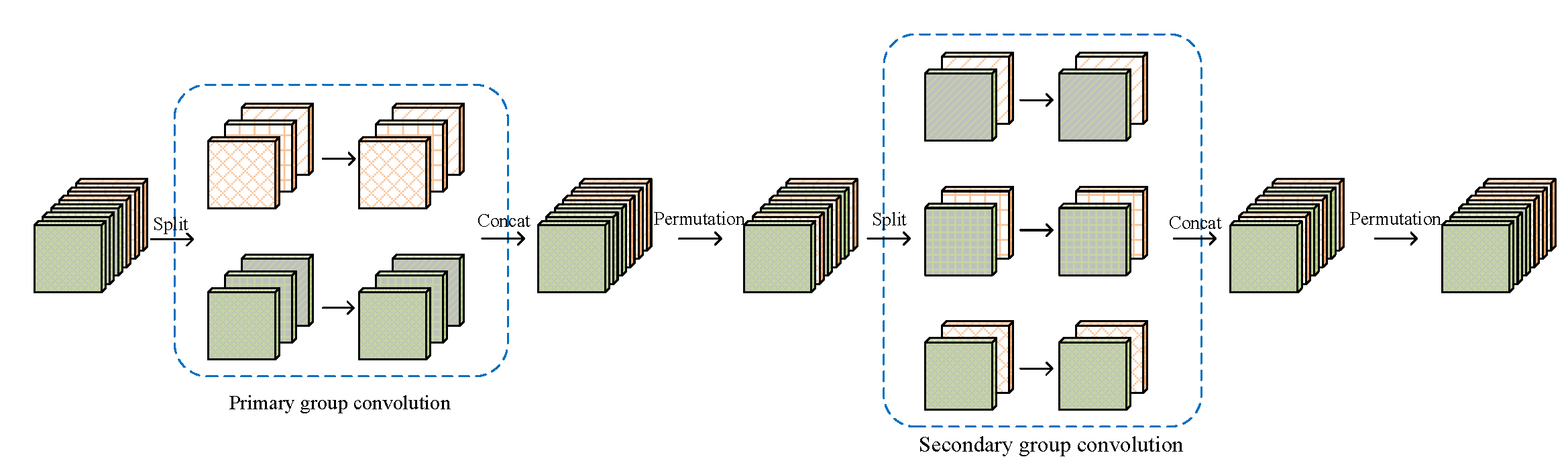

Interleaved Group Convolutions use a pair of successive interleaved group convolutions: a primary group convolution and a secondary group convolution. The two group convolutions are complementary.

Illustrating the interleaved group convolution, with L = 2 primary partitions and M = 3 secondary partitions. The convolution for each primary partition in primary group convolution is spatial. The convolution for each secondary partition in secondary group convolution is point-wise (1 × 1).
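The complementary structure can be made concrete with channel indices. The sketch below is illustrative only and is not code from this repository: it assumes L × M channels numbered consecutively, and the helper names `primary_partitions` and `secondary_partitions` are hypothetical. Each primary partition holds M neighbouring channels, and each secondary partition takes one channel from every primary partition.

```python
def primary_partitions(L, M):
    """L partitions, each holding M consecutive channel indices."""
    return [[p * M + s for s in range(M)] for p in range(L)]


def secondary_partitions(L, M):
    """M partitions, each taking one channel from every primary partition."""
    return [[p * M + s for p in range(L)] for s in range(M)]


L, M = 2, 3  # same setting as in the figure
primary = primary_partitions(L, M)
secondary = secondary_partitions(L, M)

print(primary)    # [[0, 1, 2], [3, 4, 5]]
print(secondary)  # [[0, 3], [1, 4], [2, 5]]
```

Because every secondary partition mixes channels that came from different primary partitions, information from all input channels can reach every output channel after the two group convolutions.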

You can find its code here!

Interleaved Structured Sparse Convolution (IGCV2)

IGCV2 extends IGCV1 by decomposing the convolution matrix into more structured sparse matrices. It uses a depth-wise convolution (3 × 3) to replace the primary group convolution in IGC, and uses a series of point-wise group convolutions (1 × 1).

We propose Interleaved Low-Rank Group Convolutions, named IGCV3, which extend IGCV2 by using low-rank group convolutions to replace the group convolutions in IGCV2. It consists of a channel-wise spatial convolution, a low-rank group convolution with G1 groups that reduces the width, and a low-rank group convolution with G2 groups that expands the width back.

Illustrating the interleaved branches in an IGCV3 block. The first group convolution is a group 1 × 1 convolution with G1 = 2 groups. The second is a channel-wise spatial convolution. The third is a group 1 × 1 convolution with G2 = 2 groups.
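To see why grouping the 1 × 1 convolutions saves parameters, the sketch below counts the weights of the three convolutions in such a block, once with two groups in each 1 × 1 convolution and once with a single group (dense point-wise convolutions). It is a back-of-the-envelope calculation, not code from this repository: the widths 32 and 192 are hypothetical, biases and batch normalization are ignored, and the helper names are made up for illustration. The count of a 1 × 1 convolution is the same whether it maps narrow to wide or wide to narrow, so the sketch does not depend on the order of the two.

```python
def conv_params(c_in, c_out, kernel, groups=1):
    """Weight count of a 2-D group convolution without bias."""
    assert c_in % groups == 0 and c_out % groups == 0
    # Each output channel only sees c_in / groups input channels.
    return (c_in // groups) * c_out * kernel * kernel


def block_params(narrow, wide, g1, g2):
    """Weights of: group 1x1 conv, channel-wise 3x3 conv, group 1x1 conv."""
    first = conv_params(narrow, wide, kernel=1, groups=g1)
    # Channel-wise (depth-wise) convolution: one group per channel.
    spatial = conv_params(wide, wide, kernel=3, groups=wide)
    third = conv_params(wide, narrow, kernel=1, groups=g2)
    return first + spatial + third


narrow, wide = 32, 192  # hypothetical widths, for illustration only

grouped = block_params(narrow, wide, g1=2, g2=2)
dense = block_params(narrow, wide, g1=1, g2=1)

print(grouped)  # 7872
print(dense)    # 14016
```

With two groups, each 1 × 1 convolution needs half the weights of its dense counterpart, while the channel-wise 3 × 3 convolution is unchanged.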

We compare our IGCV3 network with other mobile networks on the CIFAR datasets, which illustrates our model's advantages on small datasets.

Classification accuracy comparisons of MobileNetV2 and IGCV3 on the CIFAR datasets. "Network s×" means the number of parameters of "Network 1.0×" is reduced to about s times the original.

| Network | #Params (M) | CIFAR-10 | CIFAR-100 |
|---|---|---|---|
| MobileNetV2 (our impl.) | 2.3 | 94.56 | 77.09 |
| IGCV3-D 0.5× | 1.2 | 94.73 | 77.29 |
| IGCV3-D 0.7× | 1.7 | 94.92 | 77.83 |
| IGCV3-D 1.0× | 2.4 | 94.96 | 77.95 |
| IGCV3-D 1.0× (my pytorch impl) | 2.4 | 94.70 | 75.96 |
| MobileNetV2 (my pytorch impl) | 2.3 | 94.01 | -- |

| Network | #Params (M) | CIFAR-10 | CIFAR-100 |
|---|---|---|---|
| IGCV2 | 2.4 | 94.76 | 77.45 |
| IGCV3-D | 2.4 | 94.96 | 77.95 |

Comparison with MobileNetV2 on ImageNet.

| Network | #Params (M) | Top-1 | Top-5 |
|---|---|---|---|
| MobileNetV2 | 3.4 | 70.0 | 89.0 |
| IGCV3-D | 3.5 | 70.6 | 89.7 |

| Network | #Params (M) | Top-1 | Top-5 |
|---|---|---|---|
| MobileNetV2 | 3.4 | 71.4 | 90.1 |
| IGCV3-D | 3.5 | 72.2 | 90.5 |