The code is based on https://github.com/liangfu/mxnet-mobilenet-v2.

IGCV3: Interleaved Low-Rank Group Convolutions for Efficient Deep Neural Networks. Ke Sun, Mingjie Li, Dong Liu, and Jingdong Wang. arXiv preprint arXiv:1806.00178 (2018)

Interleaved Group Convolutions (IGCV1)

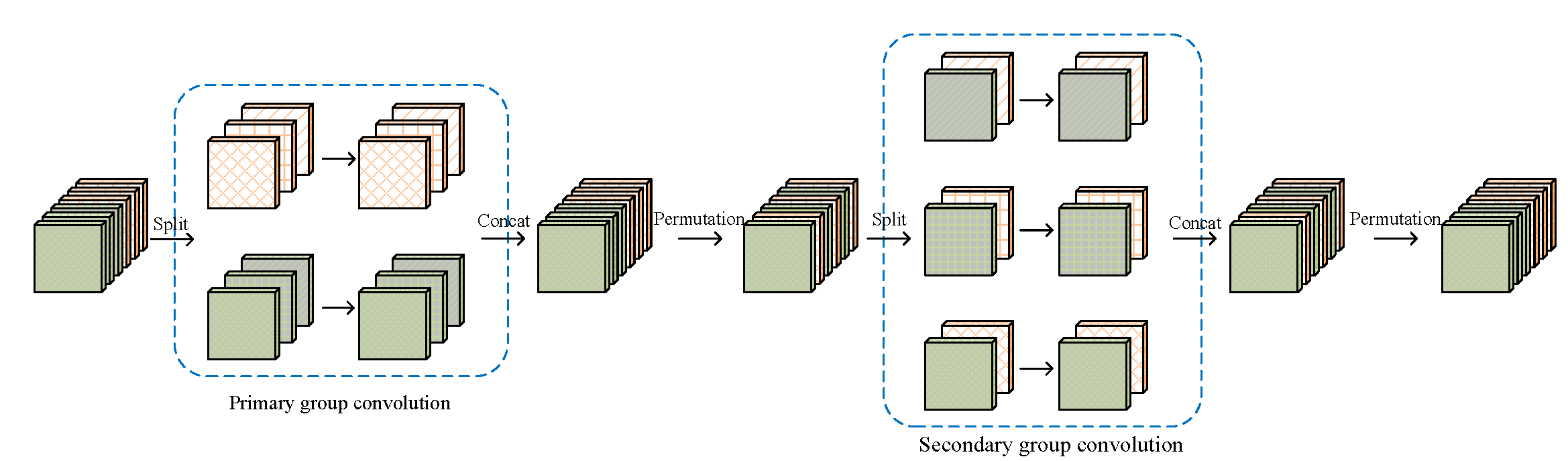

An interleaved group convolution block is a pair of successive group convolutions: a primary group convolution and a secondary group convolution. The two group convolutions are complementary: the channels in the same secondary partition come from different primary partitions.

Illustrating the interleaved group convolution, with L = 2 primary partitions and M = 3 secondary partitions. The convolution for each primary partition in primary group convolution is spatial. The convolution for each secondary partition in secondary group convolution is point-wise (1 × 1).
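The partitioning in the figure can be sketched in a few lines of plain Python. This is a minimal illustration, not the repository's implementation: the function names are hypothetical, and it assumes channels are numbered so that each primary partition holds M contiguous channels. It builds both sets of partitions for L = 2 and M = 3 and checks that, after the two group convolutions, every output channel can be influenced by every input channel.

```python
def primary_partitions(L, M):
    """Split L*M channels into L contiguous primary partitions of M channels each."""
    return [list(range(p * M, (p + 1) * M)) for p in range(L)]


def secondary_partitions(L, M):
    """Build M secondary partitions of L channels, taking one channel from each primary partition."""
    return [[p * M + s for p in range(L)] for s in range(M)]


def reachable_inputs(L, M):
    """Map each output channel to the set of input channels that can influence it."""
    # After the primary group convolution, a channel depends on its whole primary partition.
    after_primary = {}
    for part in primary_partitions(L, M):
        for c in part:
            after_primary[c] = set(part)
    # After the secondary group convolution, a channel depends on everything
    # that the channels of its secondary partition depended on.
    after_secondary = {}
    for part in secondary_partitions(L, M):
        merged = set()
        for c in part:
            merged |= after_primary[c]
        for c in part:
            after_secondary[c] = merged
    return after_secondary


L, M = 2, 3
print(primary_partitions(L, M))    # [[0, 1, 2], [3, 4, 5]]
print(secondary_partitions(L, M))  # [[0, 3], [1, 4], [2, 5]]
deps = reachable_inputs(L, M)
print(all(d == set(range(L * M)) for d in deps.values()))  # True
```

The final check is what makes the pair behave like a dense convolution in terms of connectivity, even though each group convolution on its own is sparse.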

You can find its code here!

Interleaved Structured Sparse Convolution (IGCV2)

IGCV2 extends IGCV1 by decomposing the convolution matrix into more structured sparse matrices: it uses a depth-wise convolution (3 × 3) to replace the primary group convolution in IGC and uses a series of point-wise group convolutions (1 × 1).
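To see roughly why this decomposition is cheaper, it may help to write down the weight count of a group convolution. The symbols below are introduced only for this illustration and are not taken from the paper: C_in and C_out are the input and output channel counts, k is the kernel size, G is the number of groups, and C is the width when input and output widths are equal. Biases are ignored.

```latex
\[
P(C_{\text{in}}, C_{\text{out}}, k, G) = \frac{k^{2}\, C_{\text{in}}\, C_{\text{out}}}{G}
\]
\[
\underbrace{9C^{2}}_{\text{dense } 3\times 3}
\quad\text{vs.}\quad
\underbrace{9C}_{\text{depth-wise } 3\times 3,\ G = C}
\;+\;
\underbrace{\frac{C^{2}}{G}}_{\text{each point-wise group conv}}
\]
```

Each extra point-wise group convolution adds only C²/G weights, so a short series of them can still cost far less than one dense 3 × 3 convolution.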

We propose Interleaved Low-Rank Group Convolutions, named IGCV3, which extend IGCV2 by using low-rank group convolutions to replace the group convolutions in IGCV2. An IGCV3 block consists of a channel-wise spatial convolution, a low-rank group convolution that reduces the width, and a low-rank group convolution that expands the width back.

Illustrating the interleaved branches in an IGCV3 block. The first group convolution is a group 1 × 1 convolution with 2 groups. The second is a channel-wise spatial convolution. The third is a group 1 × 1 convolution with 2 groups.
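As a rough sketch of what the two groups buy, the snippet below counts the weights of the three convolutions in the figure and compares them with the same block using dense 1 × 1 convolutions. The function names and the channel widths (32 and 192) are hypothetical values chosen only for illustration, not settings from the paper or this repository; biases and batch normalization are ignored.

```python
def group_conv_weights(c_in, c_out, kernel, groups):
    """Weight count of a group convolution (biases ignored)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return (c_in // groups) * (c_out // groups) * groups * kernel * kernel


def block_weights(c_outer, c_inner, groups):
    """Weights of: group 1x1 (c_outer -> c_inner), channel-wise 3x3, group 1x1 (c_inner -> c_outer)."""
    first = group_conv_weights(c_outer, c_inner, 1, groups)
    # Channel-wise spatial convolution: one 3x3 filter per channel.
    spatial = group_conv_weights(c_inner, c_inner, 3, c_inner)
    third = group_conv_weights(c_inner, c_outer, 1, groups)
    return first + spatial + third


# Hypothetical widths, for illustration only.
c_outer, c_inner = 32, 192
dense = block_weights(c_outer, c_inner, groups=1)
grouped = block_weights(c_outer, c_inner, groups=2)
print(dense, grouped)  # 14016 7872
```

With 2 groups, each 1 × 1 convolution needs half the weights, so at a fixed parameter budget the block can afford to be wider.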

We compare our IGCV3 network with other mobile networks on the CIFAR datasets, which illustrates our model's advantages on small datasets.

Classification accuracy comparisons of MobileNetV2 and IGCV3 on CIFAR datasets. "Network s×" means scaling the number of parameters in "Network 1.0×" by a factor of s.

| | #Params (M) | CIFAR-10 | CIFAR-100 |
|---|---|---|---|
| MobileNetV2 (our impl.) | 2.3 | 94.56 | 77.09 |
| IGCV3-D 0.5× | 1.2 | 94.73 | 77.29 |
| IGCV3-D 0.7× | 1.7 | 94.92 | 77.83 |
| IGCV3-D 1.0× | 2.4 | 94.96 | 77.95 |

| | #Params (M) | CIFAR-10 | CIFAR-100 |
|---|---|---|---|
| IGCV2 | 2.4 | 94.76 | 77.45 |
| IGCV3-D | 2.4 | 94.96 | 77.95 |

Comparison with MobileNetV2 on ImageNet.

| | #Params (M) | Top-1 | Top-5 |
|---|---|---|---|
| MobileNetV2 | 3.4 | 70.0 | 89.0 |
| IGCV3-D | 3.5 | 70.6 | 89.7 |

| | #Params (M) | Top-1 | Top-5 |
|---|---|---|---|
| MobileNetV2 | 3.4 | 71.4 | 90.1 |
| IGCV3-D | 3.5 | 72.2 | 90.5 |

The IGCV3 pretrained model is released in the models folder.

- Install MXNet

The current code supports training IGCV3 networks on ImageNet. All the networks are contained in the symbol folder.

For example, the following command trains the IGCV3 network on ImageNet.

    python train_imagenet.py --network=IGCV3 --multiplier=1.0 --gpus=0,1,2,3,4,5,6,7 --batch-size=96 --data-dir=<dataset location>

The multiplier option sets how many times wider the network is than the original IGCV3 network, whose width is the same as that of MobileNetV2.
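To give a feel for what a width multiplier does, here is a minimal sketch that scales a list of per-stage channel widths. It is not the code in the symbol folder: the function name is hypothetical, the rounding rule is an assumption (the repository may round differently), and the base widths are the MobileNetV2 stage widths, used here only as an illustrative starting point.

```python
def scale_widths(base_widths, multiplier):
    """Scale each stage width by the multiplier, rounding to the nearest integer (assumed rule)."""
    return [max(1, int(round(w * multiplier))) for w in base_widths]


# MobileNetV2 stage widths, used only as an illustrative base.
base = [32, 16, 24, 32, 64, 96, 160, 320]

print(scale_widths(base, 1.0))  # [32, 16, 24, 32, 64, 96, 160, 320]
print(scale_widths(base, 0.5))  # [16, 8, 12, 16, 32, 48, 80, 160]
```

Because the weight count of a convolution grows with the product of its input and output widths, changing the multiplier changes the parameter count faster than linearly.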

Please cite our papers in your publications if they help your research:

@article{WangWZZ16,

author = {Jingdong Wang and

Zhen Wei and

Ting Zhang and

Wenjun Zeng},

title = {Deeply-Fused Nets},

journal = {CoRR},

volume = {abs/1605.07716},

year = {2016},

url = {http://arxiv.org/abs/1605.07716}

}

@article{ZhaoWLTZ16,

author = {Liming Zhao and

Jingdong Wang and

Xi Li and

Zhuowen Tu and

Wenjun Zeng},

title = {On the Connection of Deep Fusion to Ensembling},

journal = {CoRR},

volume = {abs/1611.07718},

year = {2016},

url = {http://arxiv.org/abs/1611.07718}

}

@article{DBLP:journals/corr/ZhangQ0W17,

author = {Ting Zhang and

Guo{-}Jun Qi and

Bin Xiao and

Jingdong Wang},

title = {Interleaved Group Convolutions for Deep Neural Networks},

journal = {ICCV},

volume = {abs/1707.02725},

year = {2017},

url = {http://arxiv.org/abs/1707.02725}

}

@article{DBLP:journals/corr/abs-1804-06202,

author = {Guotian Xie and

Jingdong Wang and

Ting Zhang and

Jianhuang Lai and

Richang Hong and

Guo{-}Jun Qi},

title = {{IGCV2:} Interleaved Structured Sparse Convolutional Neural Networks},

journal = {CVPR},

volume = {abs/1804.06202},

year = {2018},

url = {http://arxiv.org/abs/1804.06202},

archivePrefix = {arXiv},

eprint = {1804.06202},

timestamp = {Wed, 02 May 2018 15:55:01 +0200},

biburl = {https://dblp.org/rec/bib/journals/corr/abs-1804-06202},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

@article{KeSun18,

author = {Ke Sun and

Mingjie Li and

Dong Liu and

Jingdong Wang},

title = {IGCV3: Interleaved Low-Rank Group Convolutions for Efficient Deep Neural Networks},

journal = {CoRR},

volume = {abs/1806.00178},

year = {2018},

url = {http://arxiv.org/abs/1806.00178}

}