Yuanzhi Liu, Yujia Fu, Minghui Qin, Yufeng Xu, Baoxin Xu, et al.

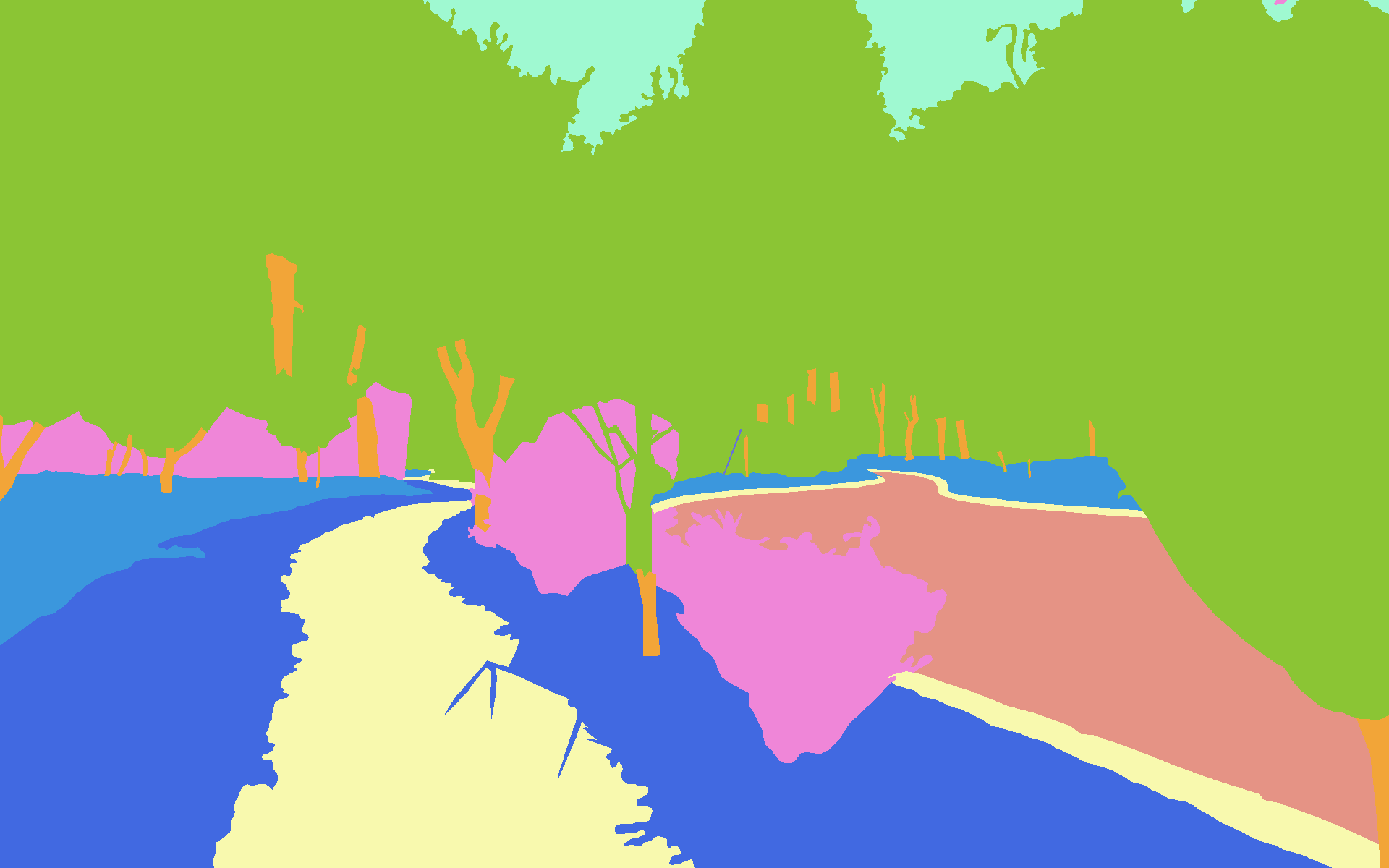

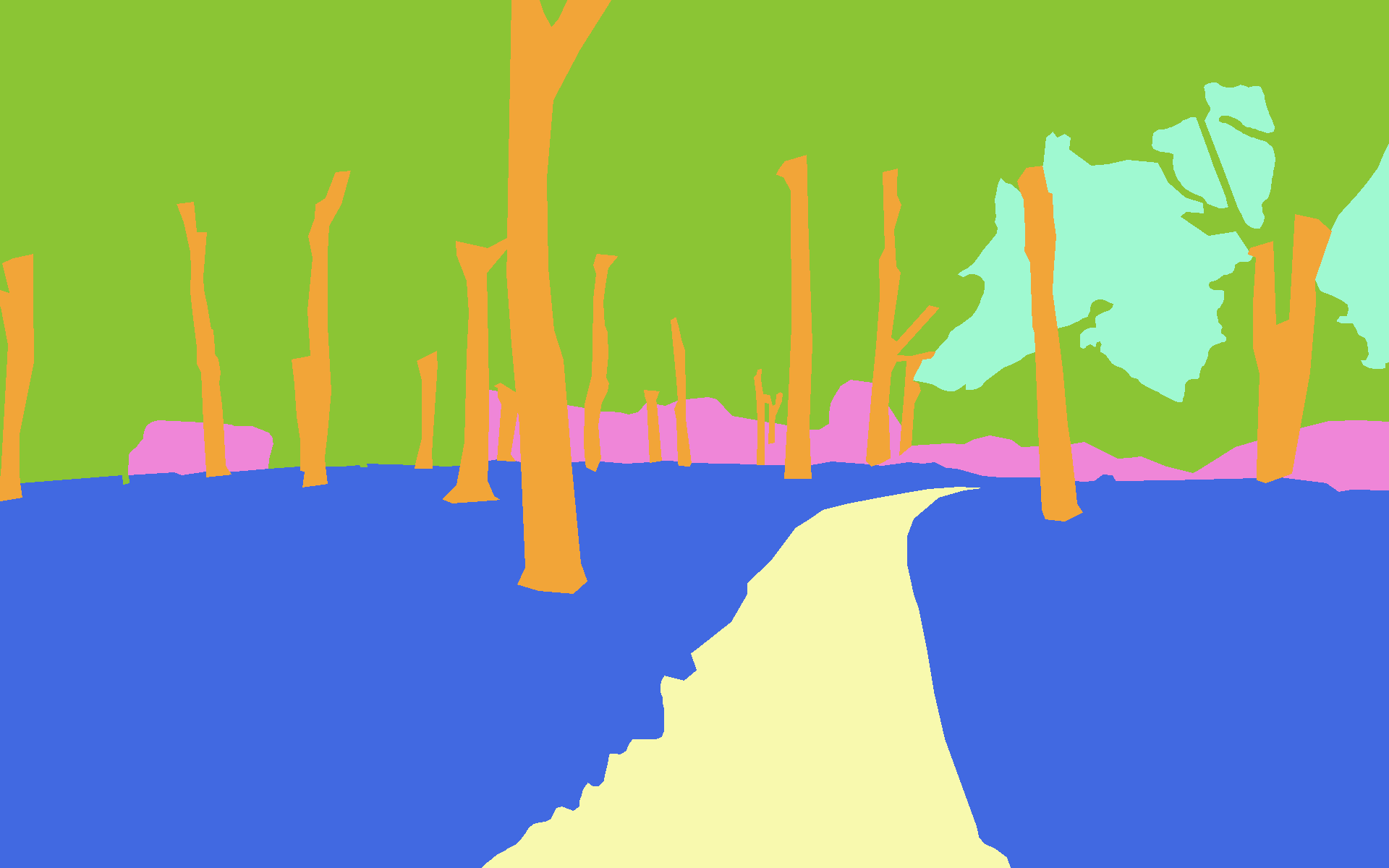

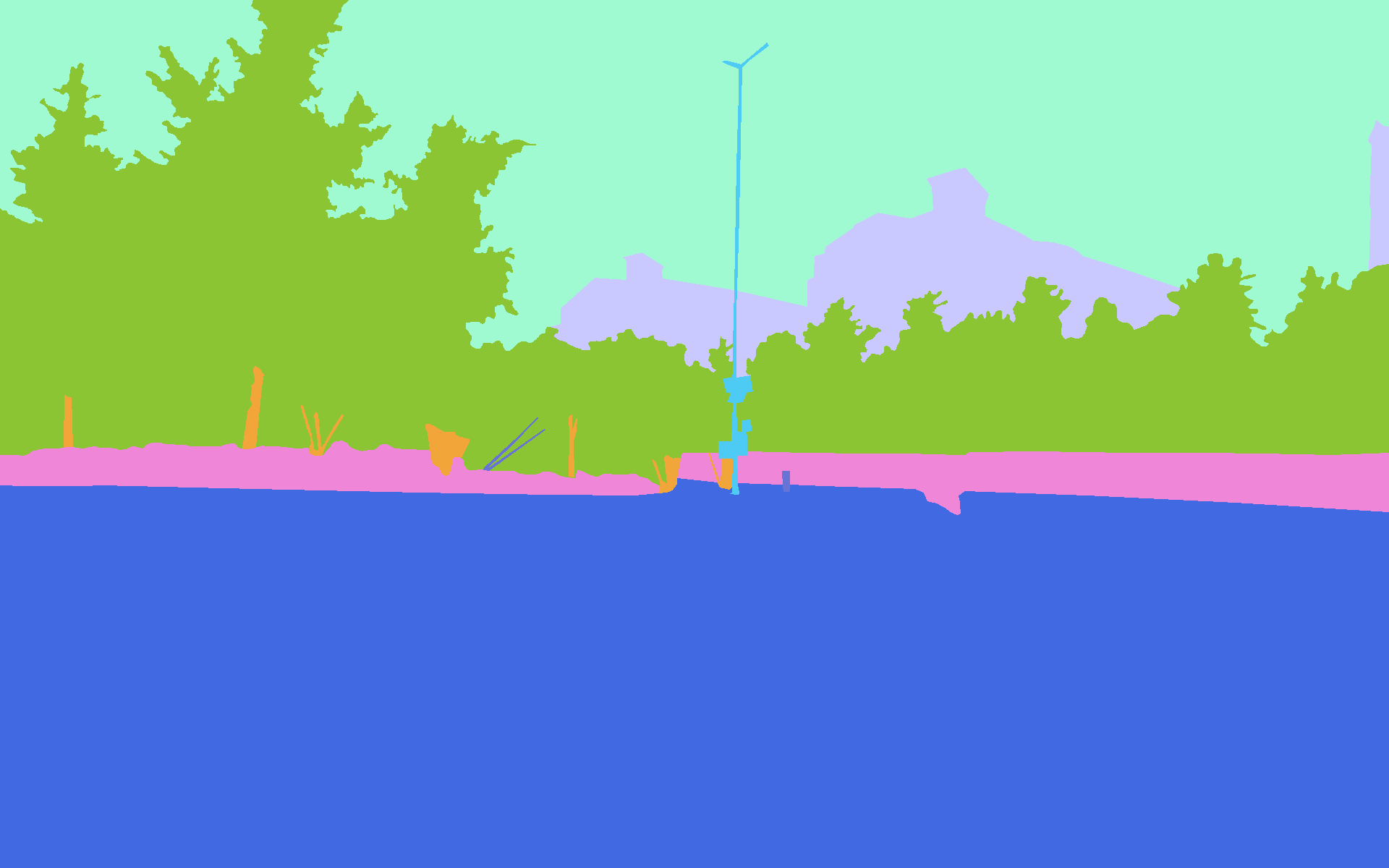

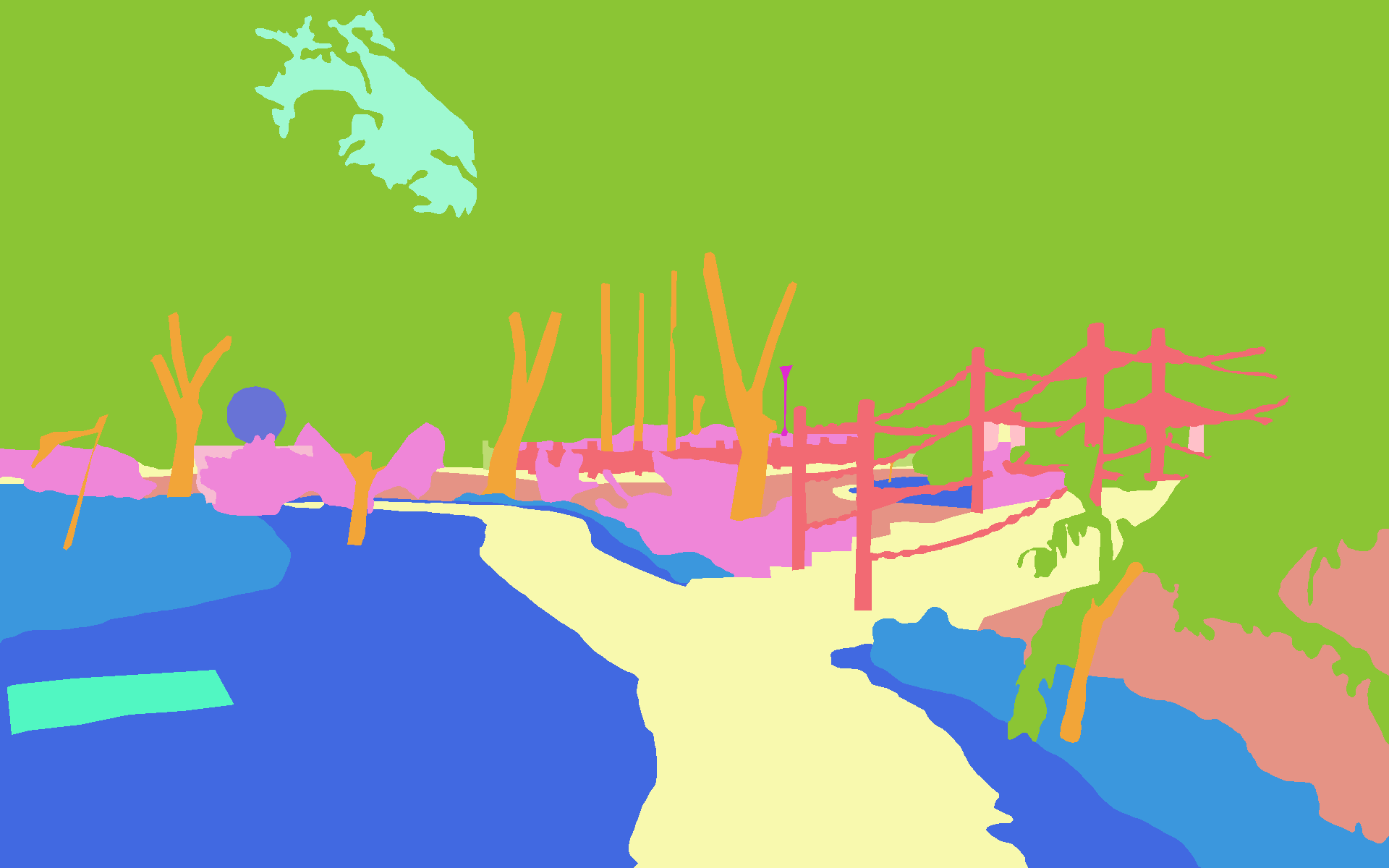

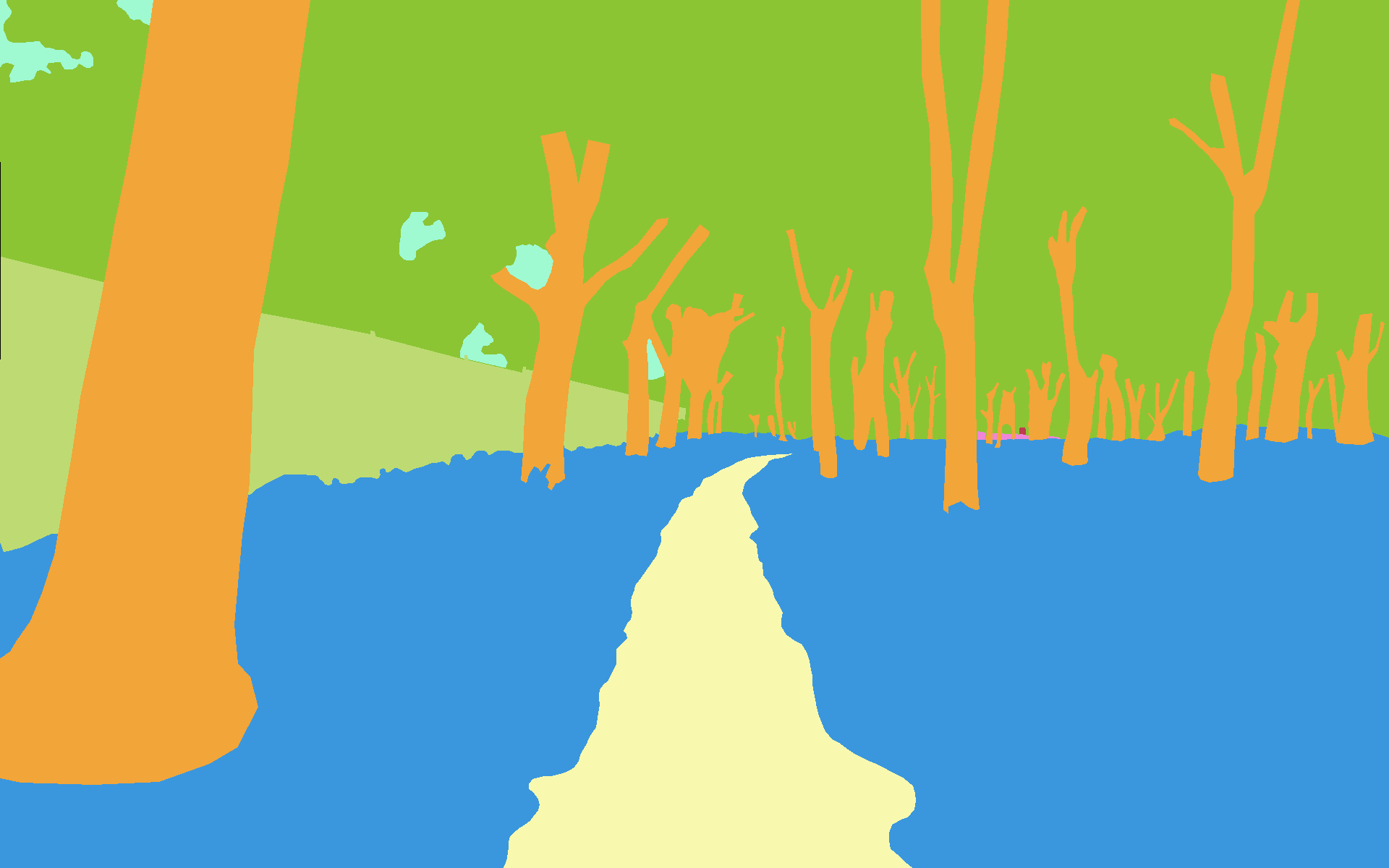

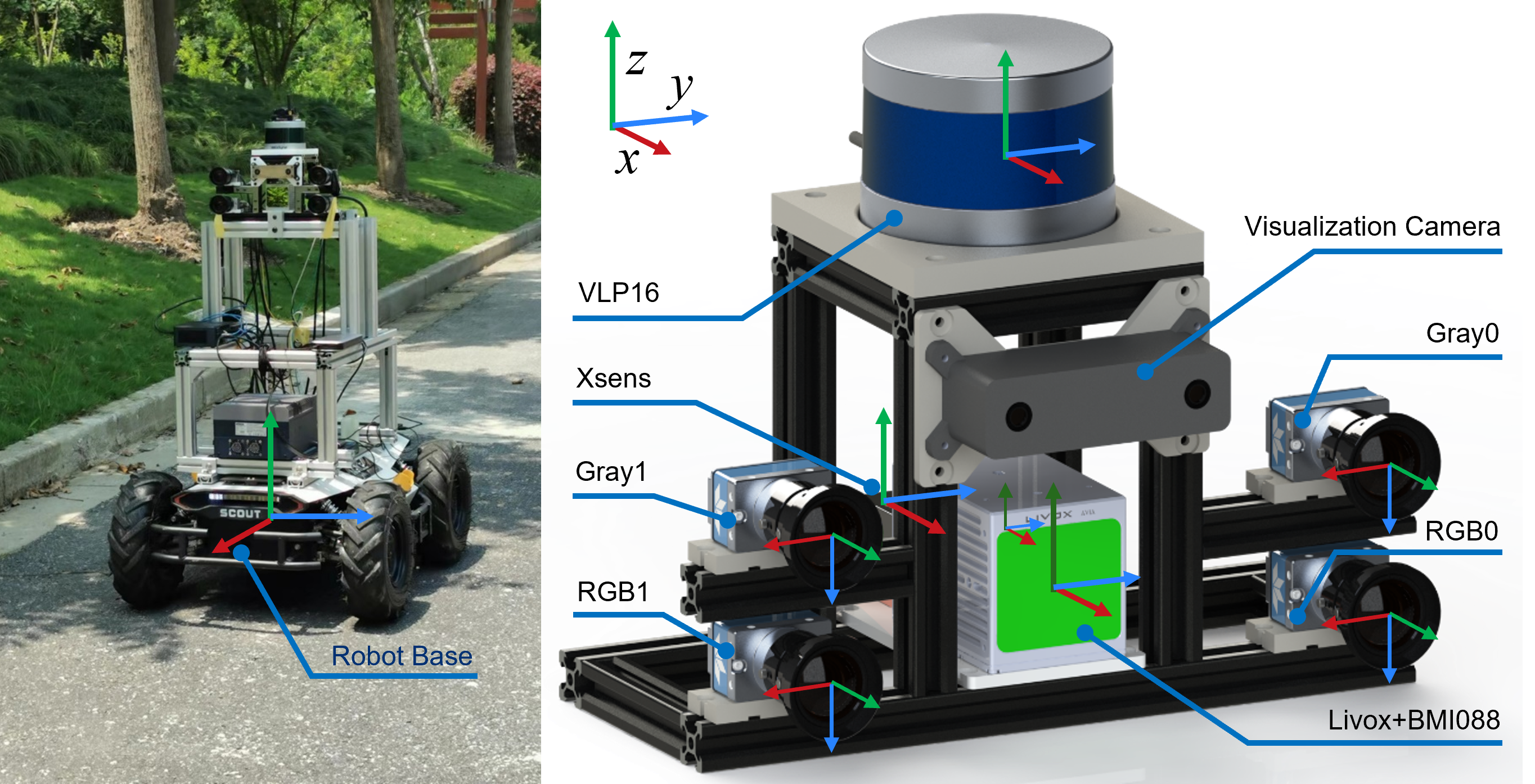

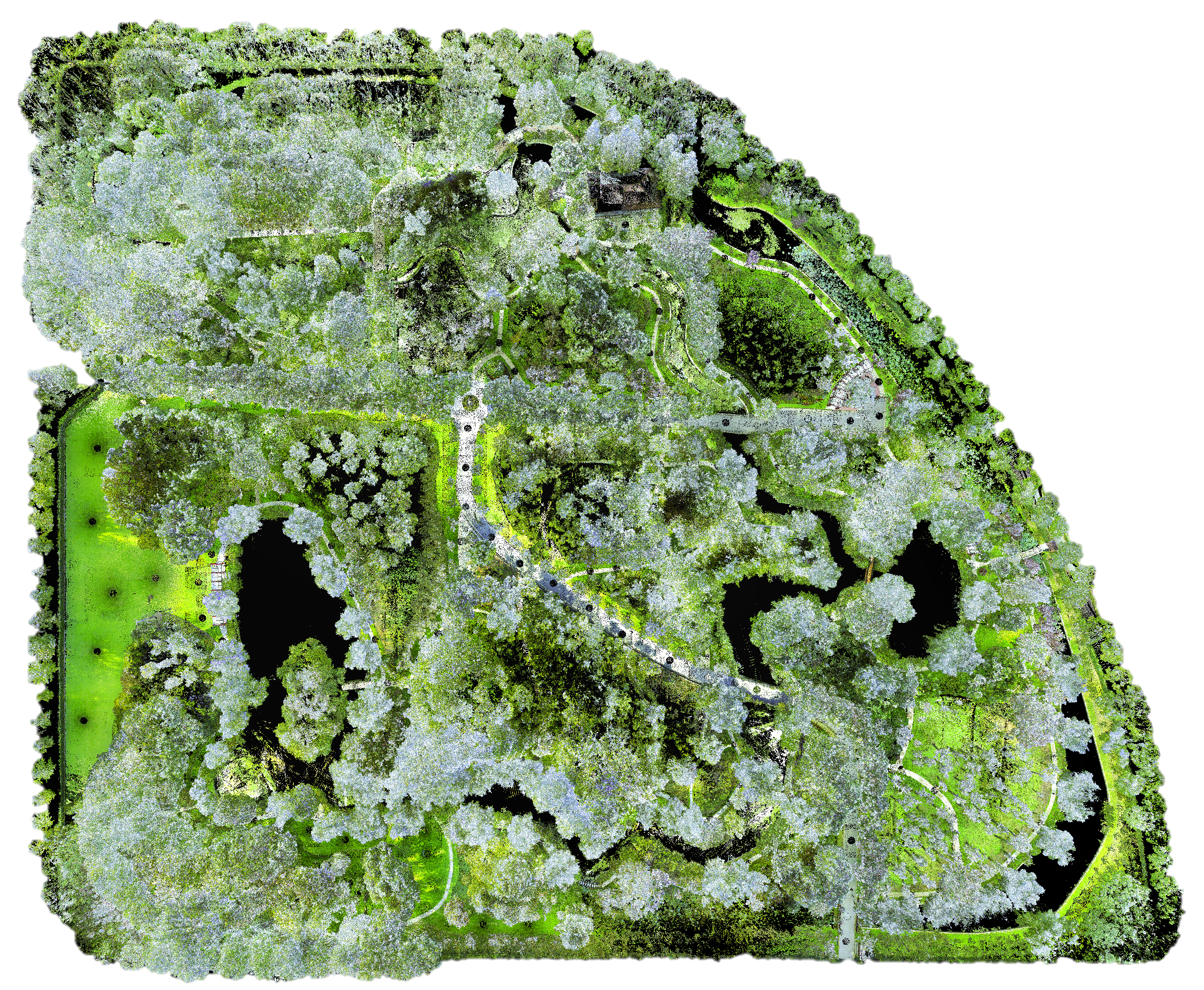

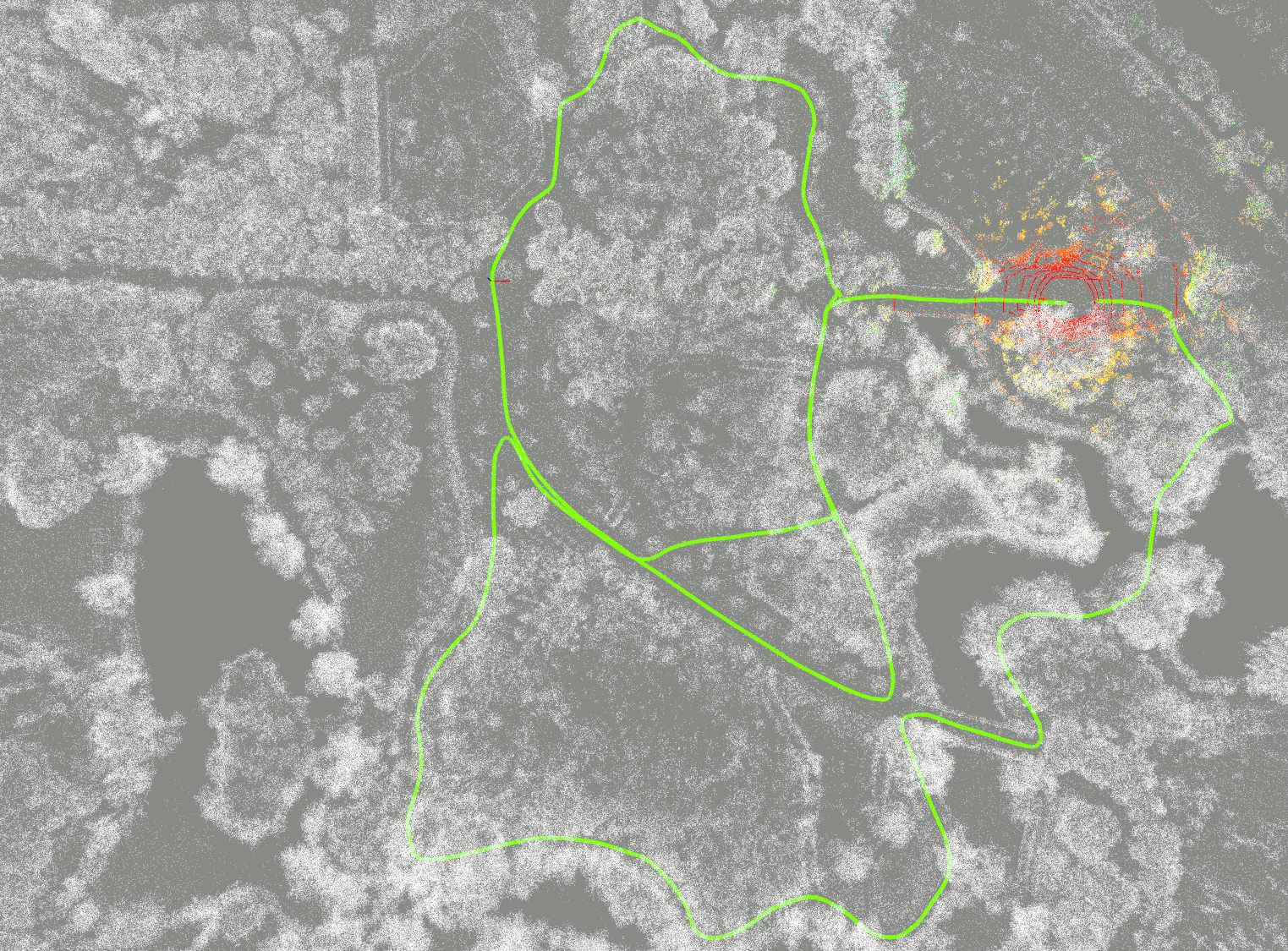

The rapid development of mobile robotics and autonomous navigation over the years has been largely empowered by public datasets for testing and improving algorithms on tasks such as SLAM and localization. Impressive demos and benchmark results have emerged, suggesting that a mature technical framework has been established. However, from the point of view of real-world deployment, critical robustness defects remain in challenging environments, especially in large-scale, GNSS-denied, texture-monotonous, and unstructured scenarios. To meet the urgent need for validation in such settings, we build a novel, challenging robot navigation dataset in a large botanic garden of more than 48,000 m². Comprehensive sensors are employed, including high-resolution, high-rate stereo Gray and RGB cameras, rotational and forward-facing 3D LiDARs, and low-cost and industrial-grade IMUs, all of which are well calibrated and hardware-synchronized to nanosecond accuracy. An all-terrain wheeled robot carries the sensor suite and provides odometry data. A total of 32 long and short sequences with 2.3 million images are collected, covering scenes of thick woods, riversides, narrow paths, bridges, and grasslands that rarely appear in previous resources. Notably, both highly accurate ego-motion and 3D map ground truth are provided, along with finely annotated vision semantics. Our goal is to contribute a high-quality dataset that advances robot navigation and sensor fusion research to a higher level.

- We build a novel multi-sensory dataset in a large botanic garden, with a total of 32 long and short sequences and ~2.3 million images containing diverse challenging natural factors that are rarely seen in previous resources.

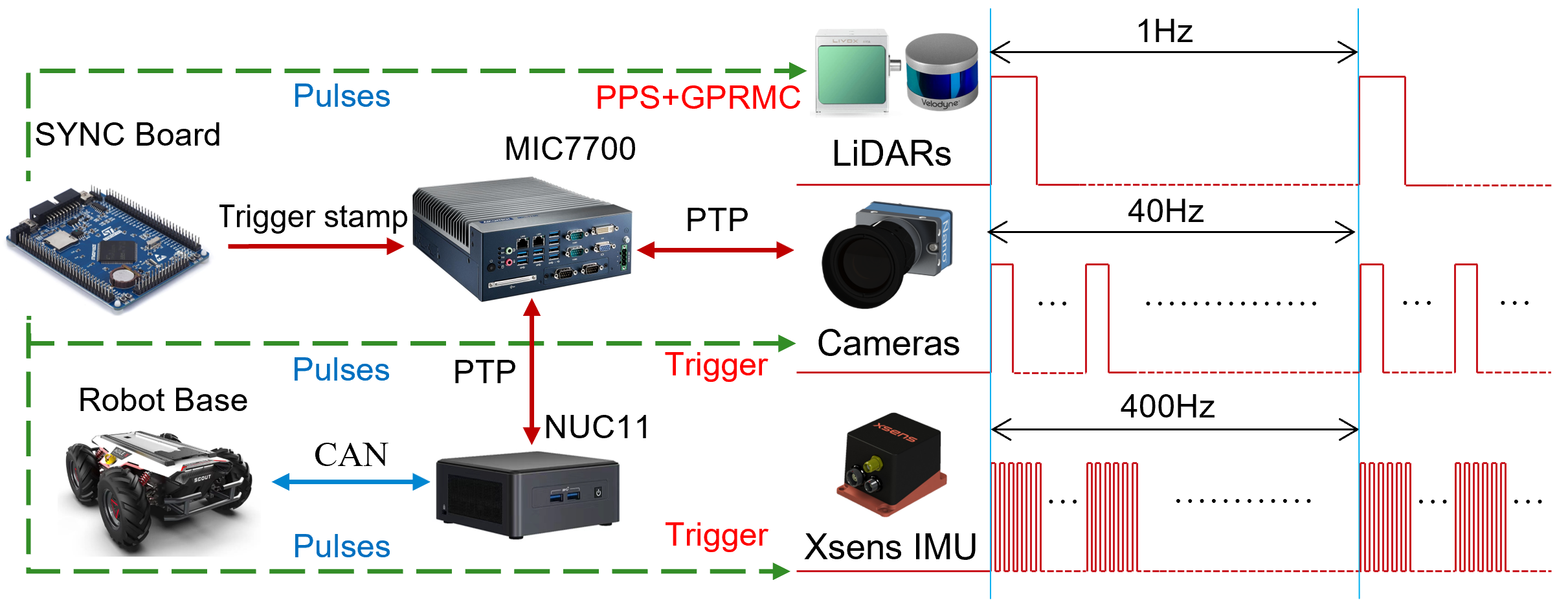

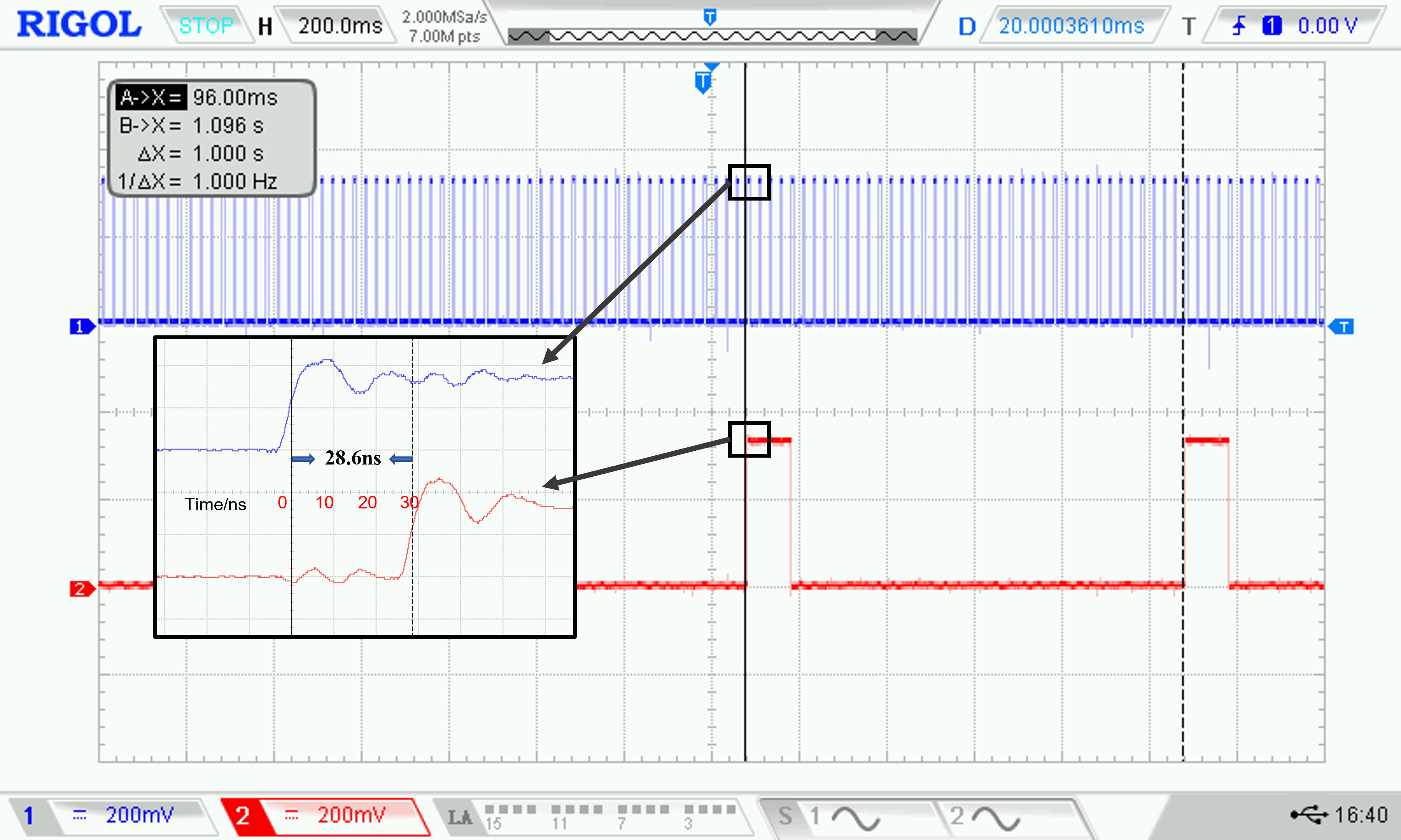

- We employ comprehensive sensors, including high-resolution, high-rate stereo Gray and RGB cameras, rotational and forward-facing 3D LiDARs, and low-cost and industrial-grade IMUs, supporting a wide range of applications. Through careful development of the integrated system, we achieve synchronization with nanosecond accuracy. Both the sensors and the synchronization quality are among the best in this field.

- We provide highly accurate ground truth for both the 3D map and the trajectories, obtained through dedicated surveying work and an advanced map-based localization algorithm. We also provide dense vision semantics labeled by experienced annotators. To our knowledge, this is the first robot navigation dataset that provides such all-round, high-quality reference data.

| Sensor/Device | Model | Specification |
|---|---|---|
| Gray Stereo | DALSA M1930 | 1920×1200, 2/3", 71°×56° FoV, 40 Hz |
| RGB Stereo | DALSA C1930 | 1920×1200, 2/3", 71°×56° FoV, 40 Hz |
| LiDAR | Velodyne VLP16 | 16 channels, 360°×30° FoV, ±3 cm@100 m, 10 Hz |
| MEMS LiDAR | Livox AVIA | 70°×77° FoV, ±2 cm@200 m, 10 Hz |
| D-GNSS/INS | Xsens MTi-680G | 9-axis, 400 Hz, GNSS not in use |
| Consumer IMU | BMI088 | 6-axis, 200 Hz, Livox built-in |
| Wheel Encoder | Scout V1.0 | 4WD, 3-axis, 200 Hz |
| GT 3D Scanner | Leica RTC360 | 130 m range, 1 mm + 10 ppm accuracy |

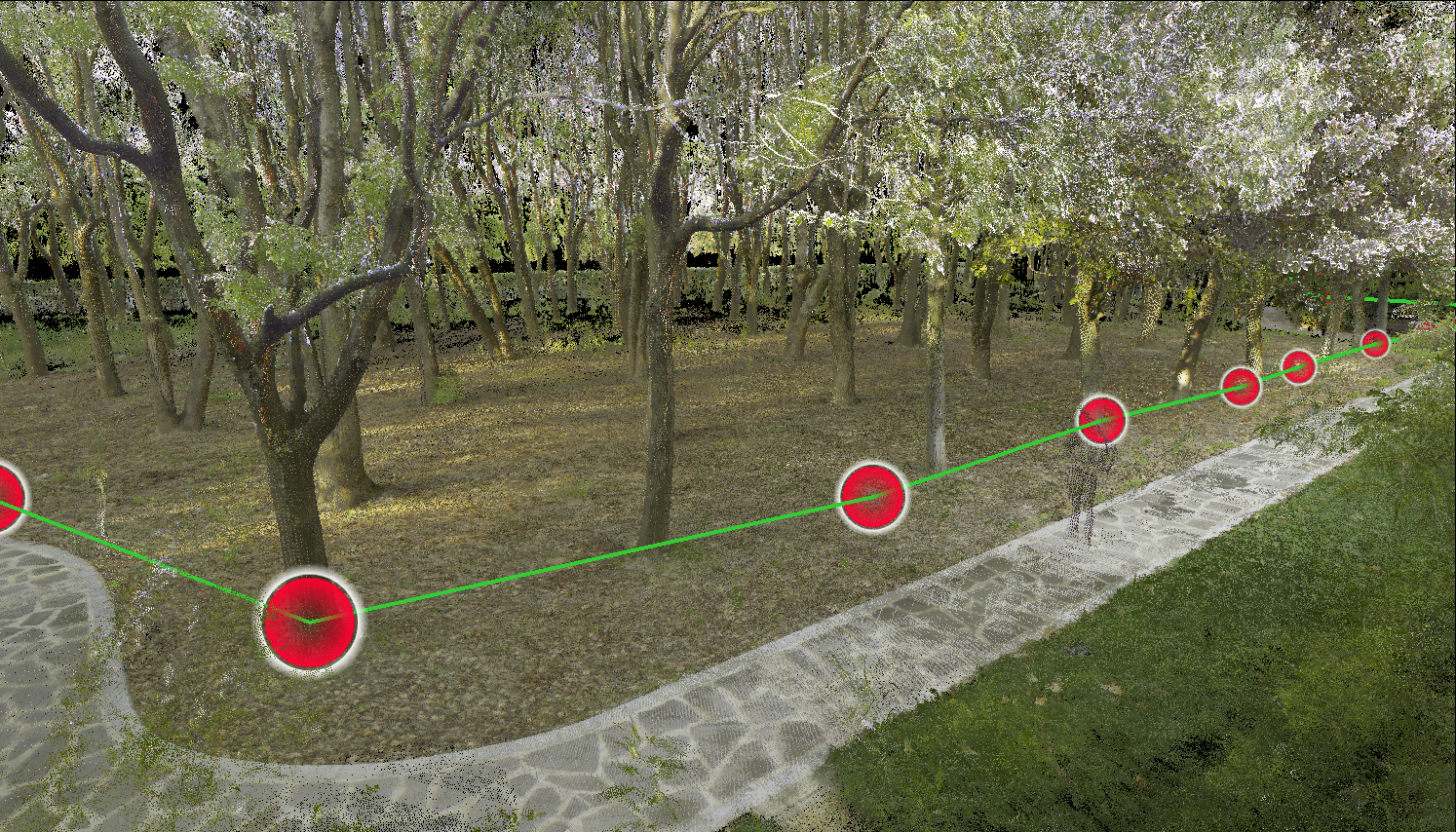

To ensure global accuracy, we did not use any mobile-mapping techniques (e.g., SLAM). Instead, we employed a tactical-grade stationary 3D laser scanner and conducted a professional surveying and mapping job with colleagues from the College of Surveying and Geo-Informatics, Tongji University. The scanner, a Leica RTC360, outputs very dense, colored point clouds with a 130 m scan radius and mm-level ranging accuracy (see the specifications in the table above). The survey took 20 workdays in total and more than 900 individual scans, reaching an accuracy of 11 mm (std.) according to Leica's report.

Some survey photos and registration works:

Our dataset consists of 32 data sequences in total. So far, we have comprehensively evaluated state-of-the-art (SOTA) methods on 7 sample sequences; their statistics and download links are listed below. More sequences can be requested from Yuanzhi Liu via email.

| Stat/Sequence | 1005-00 | 1005-01 | 1005-07 | 1006-01 | 1008-03 | 1018-00 | 1018-13 |
|---|---|---|---|---|---|---|---|
| Duration/s | 583.78 | 458.91 | 541.52 | 738.70 | 620.29 | 131.12 | 194.36 |
| Distance/m | 598.46 | 477.92 | 587.52 | 761.41 | 747.26 | 114.12 | 199.93 |
| Size/GB | 66.8 | 49.0 | 59.8 | 83.1 | 71.0 | 13.0 | 20.9 |
| rosbag | OneDrive | OneDrive | OneDrive | OneDrive | OneDrive | OneDrive | OneDrive |
| imagezip | OneDrive | OneDrive | OneDrive | OneDrive | OneDrive | OneDrive | OneDrive |
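The statistics in the table can be combined into a few derived figures that are handy when planning downloads and experiments. The short Python sketch below only reuses the numbers transcribed from the table (it does not open any bag file); the `SEQUENCES` dictionary and the `summarize` function are illustrative names, not part of the released tools.

```python
# Per-sequence statistics transcribed from the table above:
# (duration in s, distance in m, rosbag size in GB)
SEQUENCES = {
    "1005-00": (583.78, 598.46, 66.8),
    "1005-01": (458.91, 477.92, 49.0),
    "1005-07": (541.52, 587.52, 59.8),
    "1006-01": (738.70, 761.41, 83.1),
    "1008-03": (620.29, 747.26, 71.0),
    "1018-00": (131.12, 114.12, 13.0),
    "1018-13": (194.36, 199.93, 20.9),
}


def summarize(stats):
    """Return per-sequence mean speed (m/s) and data rate (GB/min), plus totals."""
    per_seq = {}
    for name, (duration_s, distance_m, size_gb) in stats.items():
        per_seq[name] = {
            "speed_mps": distance_m / duration_s,
            "gb_per_min": size_gb / (duration_s / 60.0),
        }
    totals = {
        "duration_s": sum(d for d, _, _ in stats.values()),
        "distance_m": sum(m for _, m, _ in stats.values()),
        "size_gb": sum(s for _, _, s in stats.values()),
    }
    return per_seq, totals


if __name__ == "__main__":
    per_seq, totals = summarize(SEQUENCES)
    for name, row in per_seq.items():
        print(f"{name}: {row['speed_mps']:.2f} m/s, {row['gb_per_min']:.2f} GB/min")
    print(f"total: {totals['distance_m']:.2f} m in {totals['duration_s']:.2f} s, "
          f"{totals['size_gb']:.1f} GB")
```

Run as a script, it reports average speeds between about 0.87 m/s (1018-00) and 1.20 m/s (1008-03), and totals of roughly 3.5 km of trajectory and 364 GB of rosbags for the seven sample sequences.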

The ROS topics and corresponding message types are listed below:

| ROS Topic | Message Type | Description |
|---|---|---|
| /dalsa_rgb/left/image_raw | sensor_msgs/Image | Left RGB camera |
| /dalsa_rgb/right/image_raw | sensor_msgs/Image | Right RGB camera |
| /dalsa_gray/left/image_raw | sensor_msgs/Image | Left Gray camera |
| /dalsa_gray/right/image_raw | sensor_msgs/Image | Right Gray camera |
| /velodyne_points | sensor_msgs/PointCloud2 | Velodyne VLP16 LiDAR |
| /livox/lidar | livox_ros_driver/CustomMsg | Livox AVIA LiDAR |
| /imu/data | sensor_msgs/Imu | Xsens IMU |
| /livox/imu | sensor_msgs/Imu | Livox BMI088 IMU |
| /gt_poses | geometry_msgs/PoseStamped | Ground truth poses |
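Before running an algorithm, it can help to confirm that a downloaded bag contains the topics listed above with the expected message types. The sketch below is plain Python and transcribes the table into a dictionary; `check_topics` and the bag file name are hypothetical. The commented lines show one way to obtain the actual topic list, assuming a ROS1 environment with the `rosbag` Python API (`Bag.get_type_and_topic_info()`).

```python
# Expected topics and message types, transcribed from the table above.
EXPECTED_TOPICS = {
    "/dalsa_rgb/left/image_raw": "sensor_msgs/Image",
    "/dalsa_rgb/right/image_raw": "sensor_msgs/Image",
    "/dalsa_gray/left/image_raw": "sensor_msgs/Image",
    "/dalsa_gray/right/image_raw": "sensor_msgs/Image",
    "/velodyne_points": "sensor_msgs/PointCloud2",
    "/livox/lidar": "livox_ros_driver/CustomMsg",
    "/imu/data": "sensor_msgs/Imu",
    "/livox/imu": "sensor_msgs/Imu",
    "/gt_poses": "geometry_msgs/PoseStamped",
}


def check_topics(available):
    """Compare a {topic: msg_type} mapping against EXPECTED_TOPICS.

    Returns (missing, mismatched): expected topics absent from `available`,
    and topics that are present but carry a different message type.
    """
    missing = sorted(t for t in EXPECTED_TOPICS if t not in available)
    mismatched = sorted(
        t for t, msg_type in available.items()
        if t in EXPECTED_TOPICS and EXPECTED_TOPICS[t] != msg_type
    )
    return missing, mismatched


# Possible usage in a ROS1 environment (kept as comments; the bag name is a placeholder):
# import rosbag
# with rosbag.Bag("1005-00.bag") as bag:
#     info = bag.get_type_and_topic_info()
#     available = {topic: t.msg_type for topic, t in info.topics.items()}
# print(check_topics(available))
```

Extra topics in a bag are ignored by design, so the check only flags what an evaluation script relying on the table would miss.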

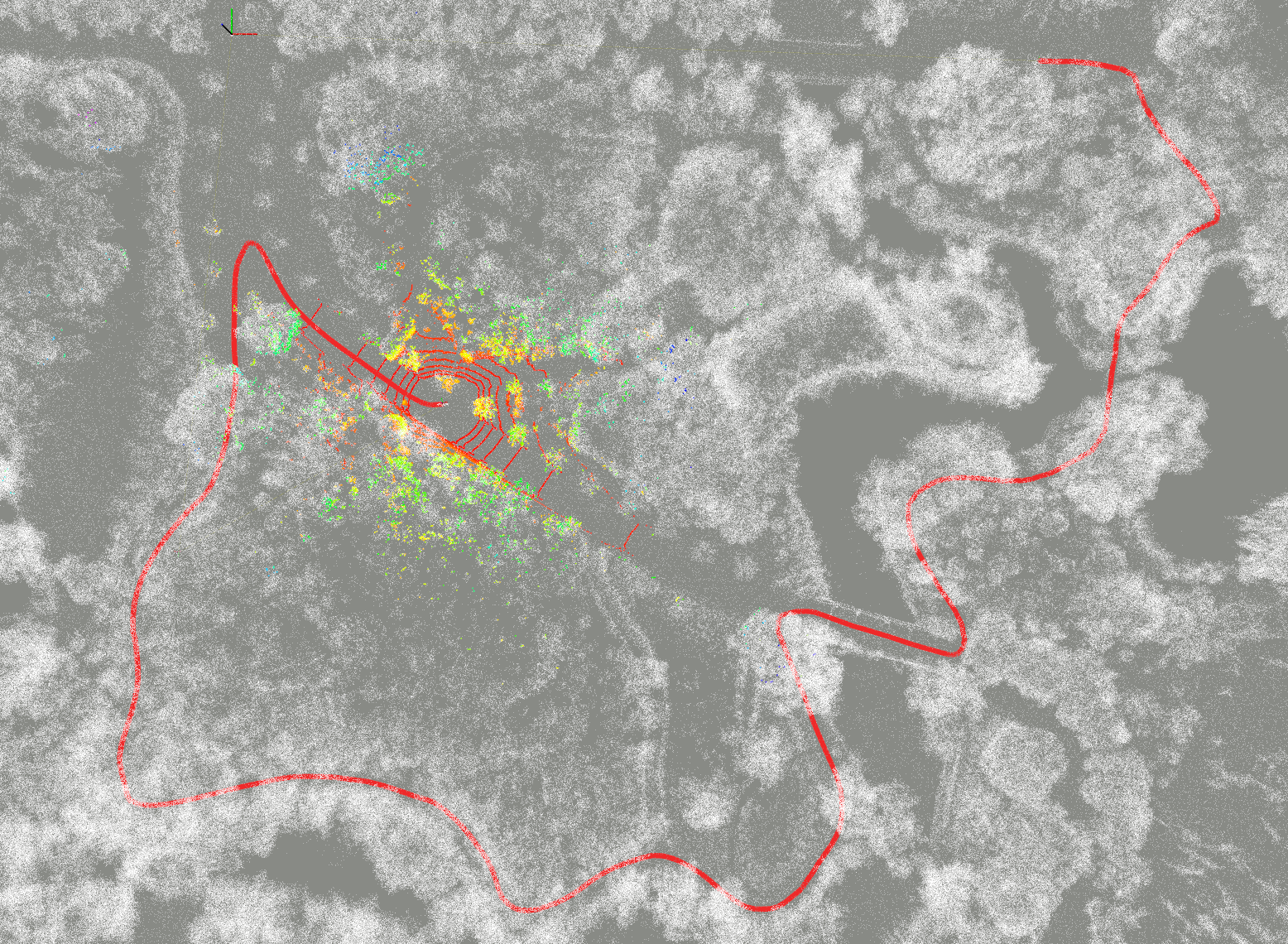

We have tested visual (ORB-SLAM3), visual-inertial (ORB-SLAM3, VINS-Mono), LiDAR (LOAM), LiDAR-inertial (FAST-LIO2), and visual-LiDAR-inertial fusion (LVI-SAM, R3LIVE) systems on the 7 sample sequences above; the evaluation statistics are listed below.

| Method | 1005-00 RPE/% | 1005-00 ATE/m | 1005-01 RPE/% | 1005-01 ATE/m | 1005-07 RPE/% | 1005-07 ATE/m | 1006-01 RPE/% | 1006-01 ATE/m | 1008-03 RPE/% | 1008-03 ATE/m | 1018-00 RPE/% | 1018-00 ATE/m | 1018-13 RPE/% | 1018-13 ATE/m |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ORB-SLAM3-S | X | X | 5.586 NC | 5.933 NC | X | X | 4.143 LC | 3.453 LC | 4.148 LC | 5.005 LC | 5.220 NC | 1.466 NC | 5.303 NC | 2.818 NC |
| ORB-SLAM3-SI | 4.386 NC | 5.511 NC | 4.808 NC | 5.376 NC | 4.771 NC | 5.283 NC | 3.733 LC | 3.150 LC | 3.853 LC | 4.311 LC | 4.118 LC | 1.116 LC | 4.238 NC | 2.967 NC |
| VINS-Mono | 3.403 NC | 8.617 NC | 2.383 NC | 4.029 NC | 3.694 NC | 7.819 NC | 3.101 LC | 2.318 LC | 3.475 LC | 3.620 LC | 3.859 NC | 1.767 NC | 5.588 NC | 2.967 NC |
| LOAM | 1.993 | 3.744 | 2.589 | 5.624 | 2.293 | 3.253 | 2.188 | 2.553 | 2.046 | 2.994 | 2.530 | 0.523 | 2.441 | 1.330 |
| FAST-LIO2 | 1.827 | 2.305 | 1.870 | 2.470 | 2.349 | 4.438 | 6.573 | 39.733 | 2.404 | 4.019 | 2.770 | 2.154 | 2.562 | 2.390 |
| LVI-SAM | 1.899 | 2.774 | 2.033 | 2.640 | 2.295 | 3.232 | 2.004 | 1.700 | 1.799 | 1.798 | 2.595 | 0.700 | 2.565 | 1.061 |
| R3LIVE | 1.924 | 3.300 | 1.907 | 2.259 | 2.197 | 3.799 | 2.192 | 7.051 | 2.077 | 2.776 | 2.462 | 0.875 | 2.779 | 1.318 |
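The exact evaluation settings behind the table are not spelled out here. For readers unfamiliar with the metric, ATE is commonly reported as the RMSE of position errors after a rigid (SE(3)) alignment of the estimate to the ground truth, for example with the Umeyama method that tools such as EVO use. The NumPy sketch below shows that common definition on already time-associated positions; it is not necessarily the configuration used for the numbers above, and `umeyama_se3` and `ate_rmse` are illustrative names.

```python
import numpy as np


def umeyama_se3(src, dst):
    """Least-squares rotation R and translation t with R @ src_i + t ~= dst_i.

    src, dst: (N, 3) arrays of time-associated positions (no scale estimation).
    """
    mu_src = src.mean(axis=0)
    mu_dst = dst.mean(axis=0)
    # Cross-covariance between the centred point sets.
    cov = (dst - mu_dst).T @ (src - mu_src) / src.shape[0]
    U, _, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    # Flip the last axis if the SVD solution would be a reflection (det = -1).
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0
    R = U @ S @ Vt
    t = mu_dst - R @ mu_src
    return R, t


def ate_rmse(est_xyz, gt_xyz):
    """Absolute trajectory error (RMSE, in the input's units) after SE(3) alignment."""
    est_xyz = np.asarray(est_xyz, dtype=float)
    gt_xyz = np.asarray(gt_xyz, dtype=float)
    R, t = umeyama_se3(est_xyz, gt_xyz)
    aligned = est_xyz @ R.T + t  # apply R @ p + t to every row
    errors = np.linalg.norm(aligned - gt_xyz, axis=1)
    return float(np.sqrt(np.mean(errors ** 2)))
```

Because the alignment absorbs any global rotation and offset, an estimate that differs from the ground truth only by a rigid transform yields an ATE of zero; only the shape of the trajectory is penalized.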

To simplify user testing, we provide the calibration and config files for these state-of-the-art methods in the calib and config folders.

Testing LVI-SAM on the 1005-00 sequence:

All semantic annotation data are provided in LabelMe format to support future reproduction. We expect these data to strengthen robust motion estimation and semantic map painting.

Our dataset is provided in both rosbag and raw formats. For convenience, we provide a toolbox to convert between the two; see the rosbag_tools folder for usage.

The semantics are labeled in LabelMe JSON format. For convenience, we provide a toolbox to convert them to PASCAL VOC and MS COCO formats; see the semantic_tools folder for usage.
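To illustrate what such a conversion involves (this is not the semantic_tools code), a LabelMe JSON file stores each annotated region under `shapes`, with a `label`, a list of `points`, and a `shape_type`. Assuming those standard LabelMe fields, the sketch below derives COCO-style `[x, y, width, height]` boxes from polygons and rectangles and counts shapes per label; the function names and the sample label names are hypothetical.

```python
import json
from collections import Counter


def labelme_boxes(annotation):
    """Yield (label, [x, y, width, height]) for each polygon or rectangle shape.

    `annotation` is a parsed LabelMe JSON dict. Boxes follow the COCO
    convention: top-left corner plus width and height, in pixels.
    """
    for shape in annotation.get("shapes", []):
        # LabelMe stores rectangles as two corner points and polygons as vertex lists.
        if shape.get("shape_type", "polygon") not in ("polygon", "rectangle"):
            continue  # points, lines, circles, ... are skipped in this sketch
        xs = [p[0] for p in shape["points"]]
        ys = [p[1] for p in shape["points"]]
        x_min, y_min = min(xs), min(ys)
        yield shape["label"], [x_min, y_min, max(xs) - x_min, max(ys) - y_min]


def label_histogram(annotation):
    """Count how many shapes carry each label."""
    return Counter(shape["label"] for shape in annotation.get("shapes", []))


if __name__ == "__main__":
    # Hypothetical annotation; real BotanicGarden label names may differ.
    sample = json.loads("""
    {"imagePath": "frame.png", "imageHeight": 1200, "imageWidth": 1920,
     "shapes": [
       {"label": "tree", "shape_type": "polygon", "points": [[10, 20], [30, 25], [15, 60]]},
       {"label": "path", "shape_type": "rectangle", "points": [[0, 0], [5, 5]]}
     ]}
    """)
    for label, box in labelme_boxes(sample):
        print(label, box)
    print(label_histogram(sample))
```

A full COCO export would also need image and category IDs and polygon areas; the provided toolbox is the reference for those details.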

We have designed a concise toolbox for camera-LiDAR calibration based on several 2D checkerboards; see the calibration_tools folder for usage.
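A common way to sanity-check a camera-LiDAR extrinsic, whether it comes from this toolbox or elsewhere, is to project LiDAR points into the image and confirm that structures line up. The NumPy sketch below assumes a pinhole model without lens distortion, a 4×4 extrinsic `T_cam_lidar` that maps LiDAR-frame points into the camera frame, and a 3×3 intrinsic matrix `K`; it is not the calibration_tools implementation, and `project_lidar_to_image` is a hypothetical name.

```python
import numpy as np


def project_lidar_to_image(points_lidar, T_cam_lidar, K, width, height):
    """Project LiDAR points into a pinhole camera image (no distortion model).

    points_lidar: (N, 3) points in the LiDAR frame.
    T_cam_lidar:  4x4 extrinsic mapping LiDAR-frame points into the camera frame.
    K:            3x3 camera intrinsic matrix.
    Returns (uv, mask): pixel coordinates of the visible points, and a boolean
    mask over the input marking points in front of the camera and inside the image.
    """
    pts = np.asarray(points_lidar, dtype=float)
    homo = np.hstack([pts, np.ones((pts.shape[0], 1))])
    pts_cam = (np.asarray(T_cam_lidar, dtype=float) @ homo.T).T[:, :3]
    in_front = pts_cam[:, 2] > 1e-6  # only points with positive depth can be imaged
    uvw = (np.asarray(K, dtype=float) @ pts_cam.T).T
    uv = np.zeros((pts.shape[0], 2))
    uv[in_front] = uvw[in_front, :2] / uvw[in_front, 2:3]  # perspective division
    inside = (in_front
              & (uv[:, 0] >= 0) & (uv[:, 0] < width)
              & (uv[:, 1] >= 0) & (uv[:, 1] < height))
    return uv[inside], inside
```

For example, with hypothetical intrinsics (focal length 1000 px, principal point at the center of a 1920×1200 image) and a LiDAR whose x axis points forward, y left, and z up, a point 10 m straight ahead should land on the principal point; overlaying such projections on the image makes residual rotation or offset errors easy to spot.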

We recommend using the open-source tool EVO for algorithm evaluation. Our ground truth poses are provided in TUM format, consisting of timestamps, translations (x, y, z), and quaternions (x, y, z, w); this format is concise and enables trajectory alignment based on time correspondences. Note that the GT poses track the VLP16 frame, so you must transform your poses into the VLP16 frame using the hand-eye relation AX=XB before evaluation.
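The conversion described above can be sketched in NumPy. It assumes your estimate is a TUM file for some sensor frame S (e.g., a camera) and that you have the 4×4 extrinsic X = T_S_VLP16, i.e., the VLP16 pose expressed in frame S (for example from the provided calib files, whose exact layout is not reproduced here). With A_k the motion of S relative to its first pose, the hand-eye relation A_k X = X B_k gives the VLP16 motion B_k = X⁻¹ A_k X. All function names are hypothetical; this is not the authors' tooling.

```python
import numpy as np


def quat_to_rot(qx, qy, qz, qw):
    """Rotation matrix from a quaternion given in TUM order (x, y, z, w)."""
    x, y, z, w = np.array([qx, qy, qz, qw], dtype=float) / np.linalg.norm([qx, qy, qz, qw])
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ])


def rot_to_quat(R):
    """Unit quaternion (x, y, z, w) from a rotation matrix (Shepperd's method)."""
    tr = np.trace(R)
    if tr > 0:
        s = 2.0 * np.sqrt(tr + 1.0)
        w, x, y, z = 0.25 * s, (R[2, 1] - R[1, 2]) / s, (R[0, 2] - R[2, 0]) / s, (R[1, 0] - R[0, 1]) / s
    elif R[0, 0] > R[1, 1] and R[0, 0] > R[2, 2]:
        s = 2.0 * np.sqrt(1.0 + R[0, 0] - R[1, 1] - R[2, 2])
        w, x, y, z = (R[2, 1] - R[1, 2]) / s, 0.25 * s, (R[0, 1] + R[1, 0]) / s, (R[0, 2] + R[2, 0]) / s
    elif R[1, 1] > R[2, 2]:
        s = 2.0 * np.sqrt(1.0 + R[1, 1] - R[0, 0] - R[2, 2])
        w, x, y, z = (R[0, 2] - R[2, 0]) / s, (R[0, 1] + R[1, 0]) / s, 0.25 * s, (R[1, 2] + R[2, 1]) / s
    else:
        s = 2.0 * np.sqrt(1.0 + R[2, 2] - R[0, 0] - R[1, 1])
        w, x, y, z = (R[1, 0] - R[0, 1]) / s, (R[0, 2] + R[2, 0]) / s, (R[1, 2] + R[2, 1]) / s, 0.25 * s
    return np.array([x, y, z, w])


def read_tum(lines):
    """Parse 'timestamp tx ty tz qx qy qz qw' lines into (stamps, 4x4 poses)."""
    stamps, poses = [], []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        vals = [float(v) for v in line.split()]
        T = np.eye(4)
        T[:3, :3] = quat_to_rot(*vals[4:8])
        T[:3, 3] = vals[1:4]
        stamps.append(vals[0])
        poses.append(T)
    return stamps, poses


def to_vlp16_frame(poses_sensor, T_sensor_vlp16):
    """Re-express a sensor trajectory as VLP16 motion: B_k = X^-1 A_k X.

    A_k = T_{S0,Sk} is the sensor motion relative to its first pose and
    X = T_{S,L} is the VLP16 pose in the sensor frame, so B_k = T_{L0,Lk}.
    """
    X = np.asarray(T_sensor_vlp16, dtype=float)
    X_inv = np.linalg.inv(X)
    A0_inv = np.linalg.inv(poses_sensor[0])
    return [X_inv @ (A0_inv @ A) @ X for A in poses_sensor]


def format_tum(stamps, poses):
    """Format (stamps, 4x4 poses) back into TUM lines."""
    out = []
    for t, T in zip(stamps, poses):
        qx, qy, qz, qw = rot_to_quat(T[:3, :3])
        x, y, z = T[:3, 3]
        out.append(f"{t:.9f} {x:.6f} {y:.6f} {z:.6f} {qx:.9f} {qy:.9f} {qz:.9f} {qw:.9f}")
    return out
```

The converted trajectory starts at the identity, so it still lives in a different world frame than the ground truth. When evaluating with EVO, enable alignment, e.g. `evo_ape tum gt.txt est_vlp16.txt -a` (file names are placeholders).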

The authors would like to thank the colleagues from Tongji University and Sun Yat-sen University for their assistance with the rigorous survey work and post-processing, especially Xiaohang Shao, Chen Chen, and Kunhua Liu. We also thank A/Prof. Hangbin Wu for his guidance in data collection. In addition, we acknowledge Grace Xu from Livox for her support with the AVIA LiDAR, and we appreciate the colleagues at Appen for their professional work on visual semantic annotation. Yuanzhi Liu would like to thank Jingxin Dong for her logging work and photographs during our data collection.

This work was supported by National Key R&D Program of China under Grant 2018YFB1305005.

Feb 6, 2022: Opened the GitHub website: https://github.com/robot-pesg/BotanicGarden

This dataset is provided for academic purposes. If you encounter any technical problems, please open an issue or contact Yuanzhi Liu (lyzrose@sjtu.edu.cn).