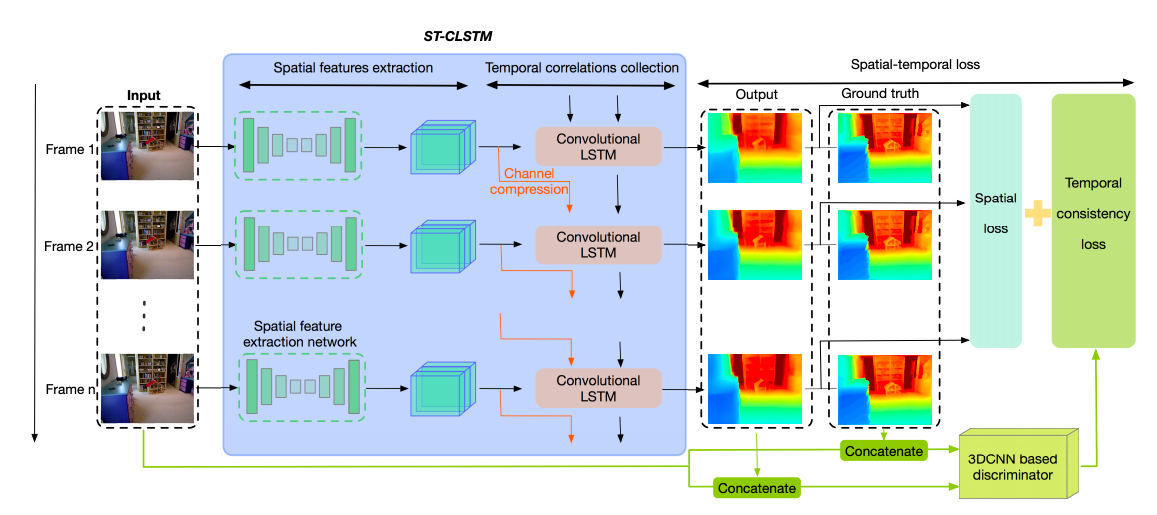

This is the UNOFFICIAL implementation of the paper Exploiting Temporal Consistency for Real-Time Video Depth Estimation, ICCV 2019, Haokui Zhang, Chunhua Shen, Ying Li, Yuanzhouhan Cao, Yu Liu, Youliang Yan.

You can find the official implementation (WITHOUT TRAINING SCRIPTS) here.

We do not follow the data preprocessing of the official implementation. Instead, we use the dataset shared by Junjie Hu, which is also used by SARPN. You can download the preprocessed data from here.

Once you have downloaded the dataset, run the following command to create the training list:

```bash
python create_list_nyuv2.py
```

You can also follow the procedure of ST-CLSTM to preprocess the data. It is based on the official Matlab Toolbox. If Matlab is unavailable to you, there is also a Python port of the toolbox by GabrielMajeri for processing the raw dataset, which contains code for:

- a higher-level interface to the labeled subset,
- raw dataset extraction and preprocessing, and
- performing data augmentation.
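As a rough illustration of what building a clip-based training list involves, here is a minimal sketch. It is not the repository's `create_list_nyuv2.py`: the helper names `build_clip_list` and `write_clip_list`, the non-overlapping grouping, and the `{"rgb": [...], "depth": [...]}` JSON schema are all assumptions. It expects the `raw_nyu_v2_250k/train/<scene>/{rgb,depth}` layout used in this README, pairs `rgb_XXXXX.jpg` with `depth_XXXXX.png` by frame index, and defaults to a clip length of 5 to match the `fl5` / `R_CLSTM_5` settings in the training command.

```python
import json
import os
from typing import Dict, List


def build_clip_list(data_root: str,
                    split_dir: str = "raw_nyu_v2_250k/train",
                    clip_len: int = 5) -> List[Dict[str, List[str]]]:
    """Group each scene's frames into non-overlapping clips of `clip_len`.

    Hypothetical schema: every clip is {"rgb": [...], "depth": [...]} with
    paths relative to `data_root`. This is NOT necessarily the format that
    create_list_nyuv2.py writes.
    """
    clips: List[Dict[str, List[str]]] = []
    split_path = os.path.join(data_root, split_dir)
    for scene in sorted(os.listdir(split_path)):
        rgb_dir = os.path.join(split_path, scene, "rgb")
        depth_dir = os.path.join(split_path, scene, "depth")
        if not (os.path.isdir(rgb_dir) and os.path.isdir(depth_dir)):
            continue  # skip anything that is not a scene folder
        depth_files = set(os.listdir(depth_dir))
        # "rgb_00000.jpg" -> "00000"; zero-padded names sort in temporal order.
        # Keep only frames that have a matching depth map.
        frame_ids = []
        for name in sorted(os.listdir(rgb_dir)):
            if name.startswith("rgb_") and name.endswith(".jpg"):
                frame_id = name[len("rgb_"):-len(".jpg")]
                if f"depth_{frame_id}.png" in depth_files:
                    frame_ids.append(frame_id)
        # Step by clip_len so clips do not overlap; a leftover tail shorter
        # than clip_len is dropped.
        scene_rel = f"{split_dir}/{scene}"
        for start in range(0, len(frame_ids) - clip_len + 1, clip_len):
            ids = frame_ids[start:start + clip_len]
            clips.append({
                "rgb": [f"{scene_rel}/rgb/rgb_{i}.jpg" for i in ids],
                "depth": [f"{scene_rel}/depth/depth_{i}.png" for i in ids],
            })
    return clips


def write_clip_list(clips: List[Dict[str, List[str]]], out_path: str) -> None:
    """Write the clip list as JSON, creating the parent folder if needed."""
    os.makedirs(os.path.dirname(out_path) or ".", exist_ok=True)
    with open(out_path, "w") as f:
        json.dump(clips, f, indent=2)
```

For example, `write_clip_list(build_clip_list("./data/"), "./data_list/my_train.json")` would write one entry per five-frame clip (the output path is hypothetical). `train.py` may well expect a different schema, so treat this only as a picture of the idea, not as a drop-in replacement for the provided script.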

The final folder structure is shown below.

```
data_root
|- raw_nyu_v2_250k
| |- train
| | |- basement_0001a
| | | |- rgb
| | | | |- rgb_00000.jpg
| | | | |_ ...
| | | |- depth
| | | | |- depth_00000.png
| | | | |_ ...
| | |_ ...
| |- test_fps_30_fl5_end
| | |- 0000
| | | |- rgb
| | | | |- rgb_00000.jpg
| | | | |- rgb_00001.jpg
| | | | |- ...
| | | | |- rgb_00004.jpg
| | | |- depth
| | | | |- depth_00000.png
| | | | |- depth_00001.png
| | | | |- ...
| | | | |- depth_00004.png
| | |- ...
| |- test_fps_30_fl4_end
| |- test_fps_30_fl3_end
```
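In the tree above, each clip folder under `test_fps_30_fl5_end` holds five frames (`rgb_00000.jpg` to `rgb_00004.jpg` plus the matching depth maps). Assuming the number after `fl` is always the clip length (an inference from the tree, not something this README states), a small checker can flag missing frames before evaluation. `clip_len_from_name` and `check_test_split` are hypothetical helpers, not part of this repository.

```python
import os
import re
from typing import List


def clip_len_from_name(split_name: str) -> int:
    """Read N from a test folder name such as 'test_fps_30_fl5_end'.

    Assumption: the '_fl<N>_' field is the number of frames per clip.
    """
    match = re.search(r"_fl(\d+)_", split_name)
    if match is None:
        raise ValueError(f"no '_fl<N>_' field in {split_name!r}")
    return int(match.group(1))


def check_test_split(split_path: str) -> List[str]:
    """Return 'clip/kind/file' entries for every expected frame that is missing.

    Assumes each clip folder (e.g. '0000') holds rgb_00000.jpg ... and
    depth_00000.png ... numbered 0 to N-1, with N taken from the folder name.
    """
    clip_len = clip_len_from_name(os.path.basename(os.path.normpath(split_path)))
    problems: List[str] = []
    for clip in sorted(os.listdir(split_path)):
        clip_path = os.path.join(split_path, clip)
        if not os.path.isdir(clip_path):
            continue  # ignore stray files next to the clip folders
        for kind, ext in (("rgb", "jpg"), ("depth", "png")):
            kind_dir = os.path.join(clip_path, kind)
            present = set(os.listdir(kind_dir)) if os.path.isdir(kind_dir) else set()
            for i in range(clip_len):
                name = f"{kind}_{i:05d}.{ext}"
                if name not in present:
                    problems.append(f"{clip}/{kind}/{name}")
    return problems
```

An empty list from `check_test_split("./data/raw_nyu_v2_250k/test_fps_30_fl5_end")` would mean every clip has a complete set of five RGB frames and five depth maps under the assumed naming.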

As an example, use the following command to train on NYUDV2.

```bash
CUDA_VISIBLE_DEVICES="0,1,2,3" python train.py --epochs 20 --batch_size 128 \
    --resume --do_summary --backbone resnet18 --refinenet R_CLSTM_5 \
    --trainlist_path ./data_list/raw_nyu_v2_250k/raw_nyu_v2_250k_fps30_fl5_op0_end_train.json \
    --root_path ./data/ --checkpoint_dir ./checkpoint/ --logdir ./log/
```

Use the following command to evaluate the trained model on the ST-CLSTM test data:

```bash
CUDA_VISIBLE_DEVICES="0" python evaluate.py --batch_size 1 --backbone resnet18 --refinenet R_CLSTM_5 --loadckpt ./checkpoint/ \
    --testlist_path ./data_list/raw_nyu_v2_250k/raw_nyu_v2_250k_fps30_fl5_op0_end_test.json \
    --root_path ./data/st-clstm/
```

You can download the pretrained model: NYUDV2.

```bibtex
@inproceedings{zhang2019temporal,
  title     = {Exploiting Temporal Consistency for Real-Time Video Depth Estimation},
  author    = {Haokui Zhang and Chunhua Shen and Ying Li and Yuanzhouhan Cao and Yu Liu and Youliang Yan},
  booktitle = {International Conference on Computer Vision},
  year      = {2019}
}
```