The implementation of our paper accepted at ICCV 2019 (IEEE International Conference on Computer Vision).

Authors: Yida Wang, David Tan, Nassir Navab and Federico Tombari

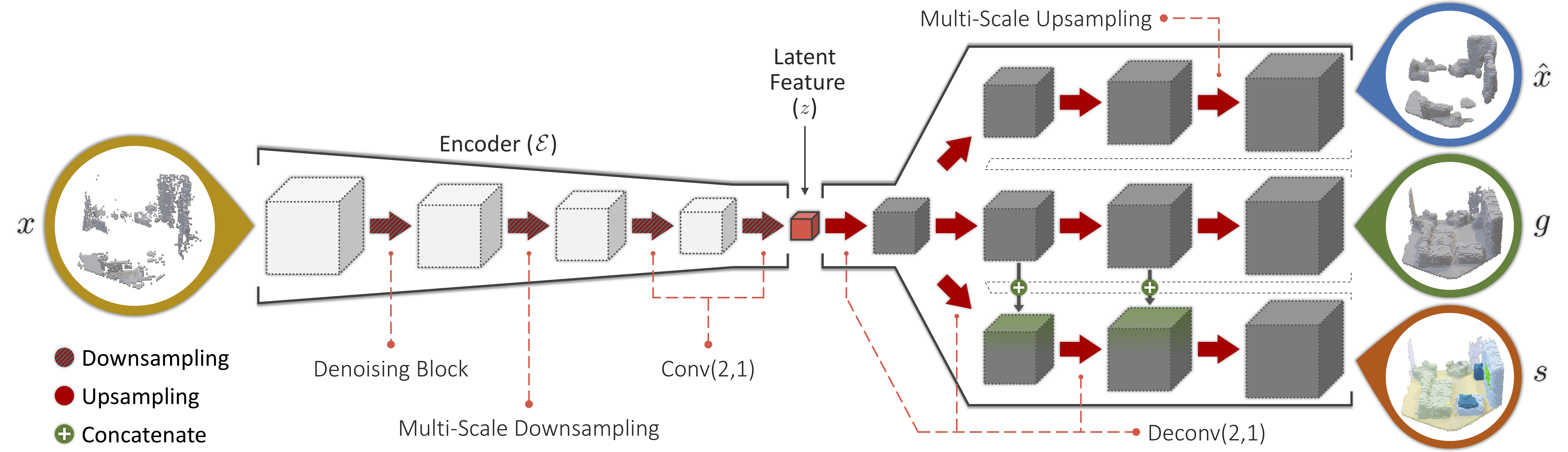

ForkNet is based on a single encoder and three separate generators that reconstruct different geometric and semantic representations of the original and completed scene, all sharing the same latent space.

| NYU | ShapeNet |
|---|---|
| | |

Results of registration

| Slow | Fast |
|---|---|
| | |

First, go to the depth-tsdf folder to compile our depth converter. We suggest using cmake and make to compile the code.

cmake . # configure

make # compiles demo executable

After the executable named back-project is compiled, depth images from the NYU or SUNCG datasets can be converted into TSDF volumes in parallel.

CUDA_VISIBLE_DEVICES=0 python2 data/depth_backproject.py -s /media/wangyida/SSD2T/database/SUNCG_Yida/train/depth_real_png -tv /media/wangyida/HDD/database/SUNCG_Yida/train/depth_tsdf_camera_npy -tp /media/wangyida/HDD/database/SUNCG_Yida/train/depth_tsdf_pcd

We further convert the binary files from the SUNCG and NYU datasets into numpy arrays of dimension [80, 48, 80] with 12 semantic channels. These voxel data are used as training ground truth. Note that our data is stored in numpy array format, converted from the original binary data.
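To make the voxel format described above concrete, here is a minimal sketch of how an [80, 48, 80] label volume relates to a 12-channel representation. It assumes the volume holds one integer class label per voxel and that the channels are a one-hot encoding; the actual layout written by depthbin2npy.py may differ, and `to_one_hot` is a hypothetical helper, not part of this repository.

```python
import numpy as np

NUM_CLASSES = 12            # semantic channels mentioned above
GRID_SHAPE = (80, 48, 80)   # voxel grid dimensions mentioned above


def to_one_hot(labels, num_classes=NUM_CLASSES):
    """Turn an integer label volume into a one-hot volume with a trailing class axis."""
    labels = np.asarray(labels)
    if labels.min() < 0 or labels.max() >= num_classes:
        raise ValueError("labels must lie in [0, num_classes)")
    # Row k of the identity matrix is the one-hot vector for class k.
    return np.eye(num_classes, dtype=np.float32)[labels]


# Stand-in for np.load(<path to a converted .npy file>): a random label volume.
rng = np.random.default_rng(0)
labels = rng.integers(0, NUM_CLASSES, size=GRID_SHAPE)

one_hot = to_one_hot(labels)
print(one_hot.shape)
```

Taking `one_hot.argmax(axis=-1)` recovers the integer label volume, so the two forms carry the same information.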

python2 data/depthbin2npy.py -s /media/wangyida/HDD/database/SUNCGtrain_1001_2000 -tv /media/wangyida/HDD/database/SUNCG_Yida/train/voxel_semantic_npy &

python2 data/depthbin2npy.py -s /media/wangyida/HDD/database/SUNCGtrain_501_1000 -tv /media/wangyida/HDD/database/SUNCG_Yida/train/voxel_semantic_npy &

python2 data/depthbin2npy.py -s /media/wangyida/HDD/database/SUNCGtrain_1_1000 -tv /media/wangyida/HDD/database/SUNCG_Yida/train/voxel_semantic_npy &

python2 data/depthbin2npy.py -s /media/wangyida/HDD/database/SUNCGtrain_1001_3000 -tv /media/wangyida/HDD/database/SUNCG_Yida/train/voxel_semantic_npy &

python2 data/depthbin2npy.py -s /media/wangyida/HDD/database/SUNCGtrain_3001_5000 -tv /media/wangyida/HDD/database/SUNCG_Yida/train/voxel_semantic_npy &

python2 data/depthbin2npy.py -s /media/wangyida/HDD/database/SUNCGtrain_1_500 -tv /media/wangyida/HDD/database/SUNCG_Yida/train/voxel_semantic_npy &

python2 data/depthbin2npy.py -s /media/wangyida/HDD/database/SUNCGtrain_5001_7000 -tv /media/wangyida/HDD/database/SUNCG_Yida/train/voxel_semantic_npy &

python2 data/depthbin2npy.py -s /media/wangyida/HDD/database/depthbin_NYU_SUNCG/SUNCGtest_49700_49884 -tv /media/wangyida/HDD/database/SUNCG_Yida/test/voxel_semantic_npy &

python2 data/depthbin2npy.py -s /media/wangyida/HDD/database/depthbin_NYU_SUNCG/NYUtrain -tv /media/wangyida/HDD/database/NYU_Yida/train/voxel_semantic_npy &

python2 data/depthbin2npy.py -s /media/wangyida/HDD/database/depthbin_NYU_SUNCG/NYUtest -tv /media/wangyida/HDD/database/NYU_Yida/test/voxel_semantic_npy &

wait

CUDA_VISIBLE_DEVICES=0 python3 main.py --mode train --discriminative True

First, a list of sample names is needed. You can generate it easily on Linux using find. Assuming that all the testing samples are located in /media/wangyida/HDD/database/050_200/test/train, the following generates test_fusion.list:

find /media/wangyida/HDD/database/050_200/test/train -name '*.npy' > test_fusion.list

Then the path prefix /media/wangyida/HDD/database/050_200/test/train should be removed from the .list file. This can easily be done in Vim using

:%s/\/media\/wangyida\/HDD\/database\/050_200\/test\/train\///gc

We provide a compact version of ForkNet, only 25 MB, in the pretrained_model folder. If the model is not discriminative (note that this model is sparser), run

CUDA_VISIBLE_DEVICES=1 python main.py --mode evaluate_recons --conf_epoch 59

Otherwise, run

CUDA_VISIBLE_DEVICES=1 python main.py --mode evaluate_recons --conf_epoch 37 --discriminative True

where '--conf_epoch' indicates the index of the pretrained model.
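The test-list preparation described earlier (a find call followed by stripping the path prefix in Vim) can also be done in one step. The following is a minimal sketch of that idea; `write_sample_list` is a hypothetical helper that is not part of this repository, and the demo runs on a temporary directory rather than the real dataset path.

```python
import os
import tempfile


def write_sample_list(root, list_path, suffix=".npy"):
    """Write every file under root whose name ends in suffix, as a path relative to root."""
    rel_paths = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(suffix):
                full_path = os.path.join(dirpath, name)
                # relpath drops the root prefix, which is what the Vim substitution does.
                rel_paths.append(os.path.relpath(full_path, root))
    rel_paths.sort()
    with open(list_path, "w") as f:
        for path in rel_paths:
            f.write(path + "\n")
    return rel_paths


# Demo on a throwaway directory containing two .npy files and one unrelated file.
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "sub"))
    for rel in ("a.npy", os.path.join("sub", "b.npy"), "notes.txt"):
        open(os.path.join(root, rel), "w").close()
    demo_list = os.path.join(root, "test_fusion.list")
    demo_paths = write_sample_list(root, demo_list)
    with open(demo_list) as f:
        demo_lines = f.read().splitlines()

print(demo_paths)
```

For the real data, `root` would be the test sample directory and `list_path` would be test_fusion.list.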

The overall architecture combines one encoder, which takes a TSDF volume as input, with three decoders.

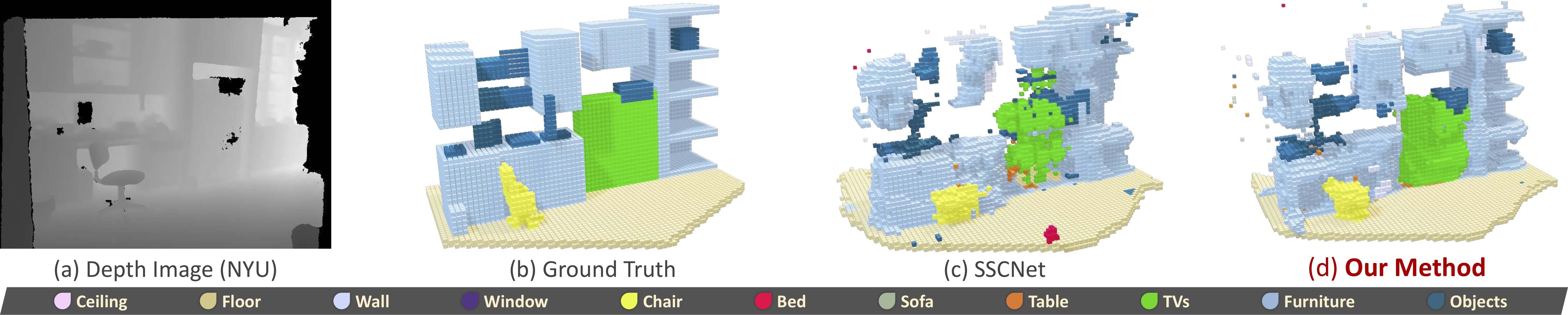

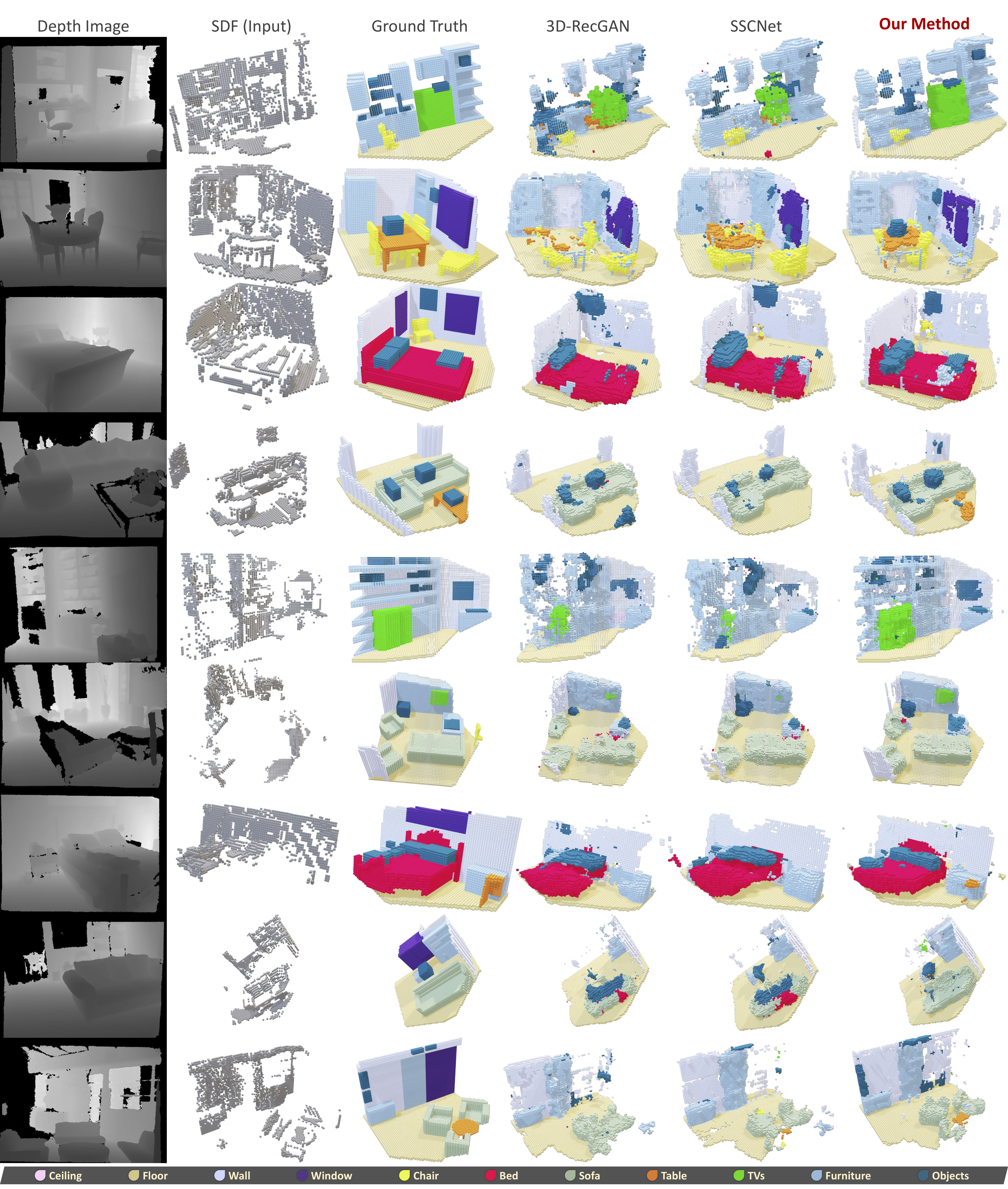
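The one-encoder, three-decoder layout can be illustrated with a toy forward pass. This sketch is not the network in this repository: it uses random linear maps in NumPy on a tiny grid, and the latent size, the function names, and the choice of outputs (a reconstructed TSDF, a completed occupancy volume, and per-voxel class probabilities) are assumptions made purely to show how three heads share one latent code.

```python
import numpy as np

rng = np.random.default_rng(0)

GRID = (8, 6, 8)       # tiny stand-in for the [80, 48, 80] grid
NUM_CLASSES = 12       # semantic channels
LATENT_DIM = 16        # hypothetical latent size
N_VOX = int(np.prod(GRID))

# Random weights stand in for trained layers.
W_enc = rng.normal(scale=0.05, size=(N_VOX, LATENT_DIM))
W_tsdf = rng.normal(scale=0.5, size=(LATENT_DIM, N_VOX))
W_geo = rng.normal(scale=0.5, size=(LATENT_DIM, N_VOX))
W_sem = rng.normal(scale=0.5, size=(LATENT_DIM, N_VOX * NUM_CLASSES))


def encode(tsdf):
    """Map a TSDF volume to a latent vector."""
    return np.tanh(tsdf.reshape(-1) @ W_enc)


def decode_tsdf(z):
    """Head 1 (assumed): reconstruct a TSDF volume with values in (-1, 1)."""
    return np.tanh(z @ W_tsdf).reshape(GRID)


def decode_geometry(z):
    """Head 2 (assumed): per-voxel occupancy probability of the completed scene."""
    return (1.0 / (1.0 + np.exp(-(z @ W_geo)))).reshape(GRID)


def decode_semantics(z):
    """Head 3 (assumed): per-voxel softmax over the semantic classes."""
    logits = (z @ W_sem).reshape(GRID + (NUM_CLASSES,))
    logits = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    return probs / probs.sum(axis=-1, keepdims=True)


tsdf_in = rng.uniform(-1.0, 1.0, size=GRID)
z = encode(tsdf_in)  # one latent code feeds all three heads
tsdf_out, occupancy, semantics = decode_tsdf(z), decode_geometry(z), decode_semantics(z)
print(z.shape, tsdf_out.shape, occupancy.shape, semantics.shape)
```

The point of the sketch is only the data flow: the input is encoded once, and every decoder reads the same `z`.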

The NYU dataset is composed of 1,449 indoor depth images captured with a Kinect depth sensor.

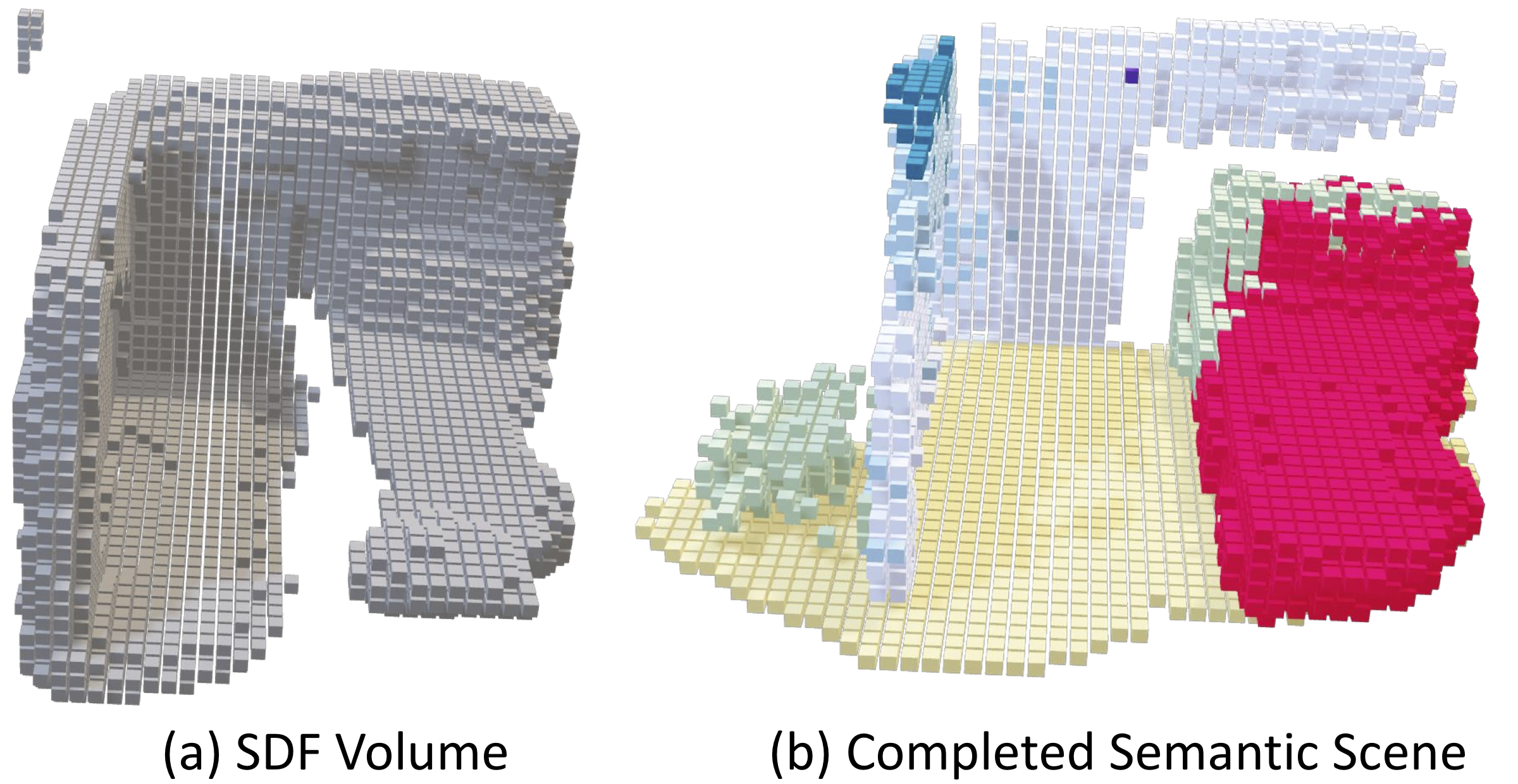

We build the new dataset by sampling features directly from the latent space, which generates pairs of a partial volumetric surface and a completed volumetric semantic surface.

Given one latent sample, we can use two decoders to generate a TSDF volume and a semantic scene, respectively, forming a pair.
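The pairing step can be sketched as a small loop that draws latent vectors and passes each one through two decoders. Everything here is illustrative: `sample_pairs` is a hypothetical helper, the standard normal prior and the latent size are assumptions, and the two toy decoders are stand-ins for the trained ones.

```python
import numpy as np


def sample_pairs(decode_tsdf, decode_semantic, num_samples, latent_dim, seed=0):
    """Draw latent vectors and decode each one twice to form (TSDF, semantic) pairs."""
    rng = np.random.default_rng(seed)
    pairs = []
    for _ in range(num_samples):
        z = rng.standard_normal(latent_dim)  # assumed prior: standard normal
        # Both outputs come from the same z, so they describe the same scene.
        pairs.append((decode_tsdf(z), decode_semantic(z)))
    return pairs


GRID = (4, 3, 4)  # tiny stand-in grid


def toy_tsdf_decoder(z):
    """Stand-in decoder: a constant TSDF volume derived from z."""
    return np.full(GRID, np.tanh(z.mean()), dtype=np.float32)


def toy_semantic_decoder(z):
    """Stand-in decoder: a constant label volume with a class in [0, 12)."""
    return np.full(GRID, int(abs(z[0]) * 10) % 12, dtype=np.int64)


pairs = sample_pairs(toy_tsdf_decoder, toy_semantic_decoder, num_samples=3, latent_dim=16)
print(len(pairs), pairs[0][0].shape, pairs[0][1].shape)
```

Passing the decoders in as callables keeps the sampling loop independent of how the networks are implemented.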

If you find this work useful in your research, please cite:

@inproceedings{wang2019forknet,
  title={ForkNet: Multi-branch Volumetric Semantic Completion from a Single Depth Image},
  author={Wang, Yida and Tan, David Joseph and Navab, Nassir and Tombari, Federico},
  booktitle={Proceedings of the IEEE International Conference on Computer Vision},
  pages={8608--8617},
  year={2019}
}