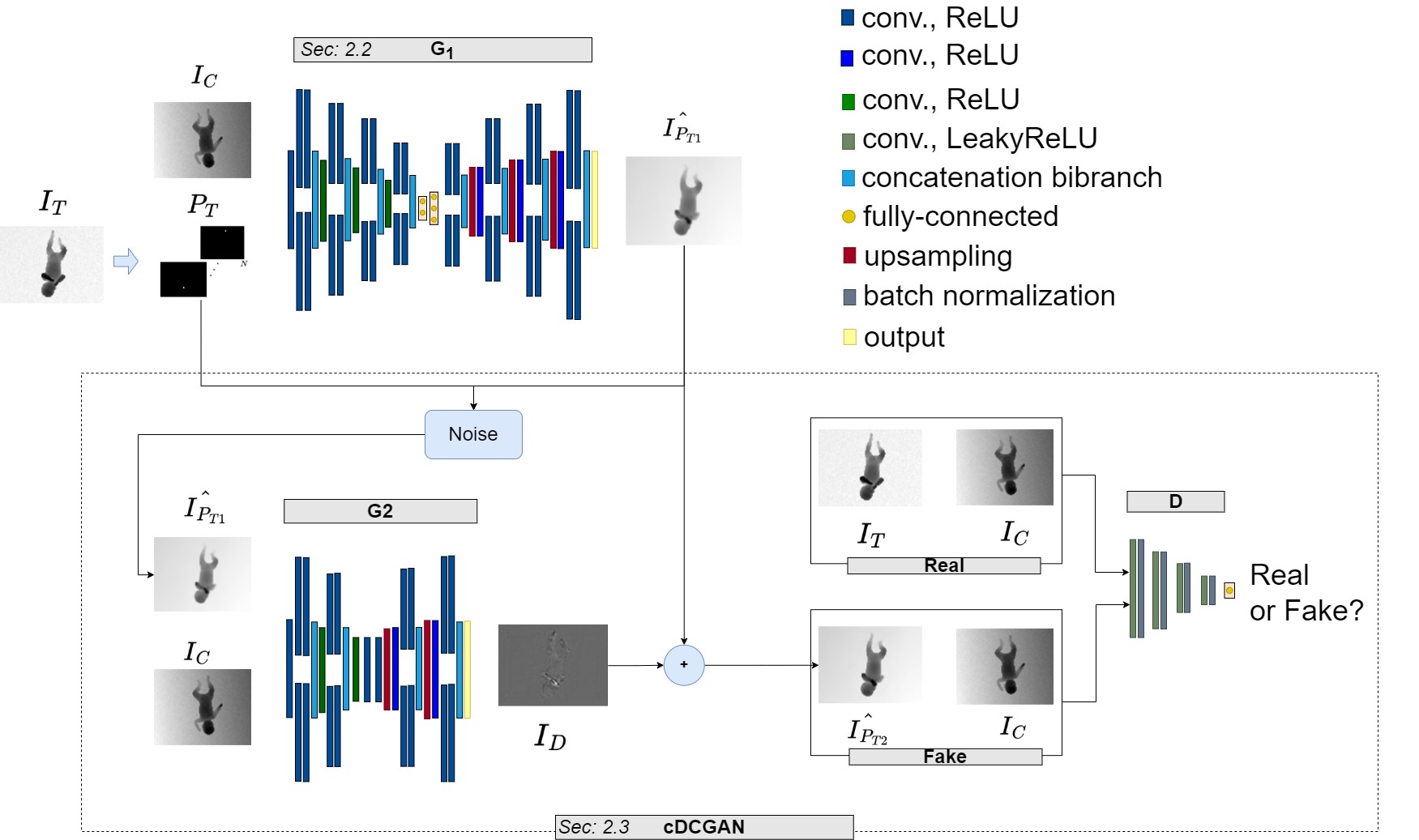

This repository contains the TensorFlow implementation of the GAN-based framework proposed in the paper "Generating depth images of preterm infants with a given pose using GANs", shown in Figure 1.

The proposed bibranch architecture is in the ./src/models/bibranch directory, while the architecture from the literature (a mono-branch GAN-based framework) is in the ./src/models/mono directory.

The dataset used for training and evaluation is MINI-RGBD, which is available online; we applied some transformations to it. For more information, see ./data/Syntetich_complete.

The framework can be used by choosing among the available architectures and datasets. Alternatively, you can define your own datasets or architectures. For both options, refer to sections 2 and 3 for the creation of the dataset and the architecture, while section 4 explains how to start the framework.

The code was run using the dependencies described in the Dockerfile. To prepare the environment, follow these steps:

- Download the GitHub repository:

```bash
sudo apt install unzip
wget https://github.com/vrai-group/guided-infant-generation/archive/master.zip
unzip master.zip
mv guided-infant-generation-master GuidedInfantGeneration
```

- Move into the GuidedInfantGeneration directory:

```bash
cd GuidedInfantGeneration
```

- You can download the ganinfant:1.0.0 Docker image by running the following instructions; the second command tags the image as ganinfant:1.0.0, the name used in the next steps. Note: the ganinfant:1.0.0 image inherits layers from the tensorflow/tensorflow image with the -gpu tag, so it needs nvidia-docker to run, as explained in the optional section of that image's documentation.

```bash
docker pull giuseppecannata/ganinfant:1.0.0
docker tag giuseppecannata/ganinfant:1.0.0 ganinfant:1.0.0
```

Alternatively, instead of downloading the Docker image, you can build it from the Dockerfile in the repository. The following instruction builds the Dockerfile in the current directory (./) and names the resulting image ganinfant, version 1.0.0:

```bash
docker build -t ganinfant:1.0.0 .
```

- Start the container GAN_infant_container from the ganinfant:1.0.0 image. In the following instruction, replace <id_gpu> with your GPU id, <local_directory> with the absolute path of the GuidedInfantGeneration directory obtained in the first step, and <container_directory> with the absolute path where the container will mount <local_directory>. The GPU is selected with the device= form of --gpus, because a bare number passed to --gpus is interpreted by Docker as a GPU count rather than an id.

```bash
docker run -it --name "GAN_infant_container" --gpus '"device=<id_gpu>"' -v <local_directory>:<container_directory> ganinfant:1.0.0 bash
```
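Once inside the container, a quick way to confirm that TensorFlow actually sees the GPU passed with --gpus is a short Python check such as the one below. It is a sketch, not part of the repository, and it assumes TensorFlow 2.1 or later, where `tf.config.list_physical_devices` is available.

```python
def visible_gpus():
    """Return the names of the GPUs TensorFlow can see, or None if TF is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        # TensorFlow is not installed in this interpreter (e.g. outside the container).
        return None
    # tf.config.list_physical_devices is available from TensorFlow 2.1 onwards.
    return [device.name for device in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    gpus = visible_gpus()
    if gpus is None:
        print("TensorFlow is not installed")
    elif not gpus:
        print("TensorFlow found no GPU: check nvidia-docker and the --gpus option")
    else:
        print("Visible GPUs:", gpus)
```

An empty list usually means the container was started without GPU access, so the docker run options are the first thing to check.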

Each defined dataset must be placed in the ./data directory and must respect a specific structure. For more information, refer to the ./data/README file.

Each dataset [type] is associated with a Python processing module defined in ./src/dataset.

For more information, refer to ./src/dataset/README.
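Dataset directory names follow the [type][underscore][note] pattern (see the DATASET variable of the configuration file), and the [type] part is what ties a dataset to its processing module. The sketch below splits a name such as Syntetich_complete at the first underscore, so it assumes [type] itself contains no underscore. The mapping from [type] to a file called src/dataset/[type].py is a hypothetical convention used for illustration only; ./src/dataset/README describes the actual rule.

```python
from pathlib import Path
from typing import Tuple


def split_dataset_name(name: str) -> Tuple[str, str]:
    """Split a dataset directory name of the form [type]_[note] at the first underscore."""
    dataset_type, sep, note = name.partition("_")
    if not sep or not dataset_type or not note:
        raise ValueError(f"{name!r} does not follow the [type]_[note] pattern")
    return dataset_type, note


def processing_module_path(name: str, dataset_src: str = "src/dataset") -> Path:
    """Hypothetical: locate the processing module named after the dataset [type]."""
    dataset_type, _ = split_dataset_name(name)
    return Path(dataset_src) / f"{dataset_type}.py"


print(split_dataset_name("Syntetich_complete"))  # ('Syntetich', 'complete')
print(processing_module_path("Syntetich_complete"))
```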

You can also define your own architecture to be used by the framework.

Each defined architecture must be placed in the ./src/models directory.

For more information refer to the ./src/models/README file.
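Because each architecture lives in its own sub-directory of ./src/models (for example bibranch and mono), the valid values of ARCHITECTURE can be listed by scanning that directory. The sketch below is not part of the repository; treating every non-hidden sub-directory as an architecture, and skipping names starting with "." or "__" such as `__pycache__`, are assumptions.

```python
from pathlib import Path
from typing import List


def available_architectures(models_dir: str = "src/models") -> List[str]:
    """List the architecture directories found under models_dir (e.g. bibranch, mono)."""
    root = Path(models_dir)
    if not root.is_dir():
        return []
    # Sub-directories are architectures; plain files such as README are skipped.
    return sorted(p.name for p in root.iterdir()
                  if p.is_dir() and not p.name.startswith((".", "__")))


def check_architecture(name: str, models_dir: str = "src/models") -> Path:
    """Return the directory of architecture `name`, or raise if it does not exist."""
    path = Path(models_dir) / name
    if not path.is_dir():
        raise FileNotFoundError(
            f"architecture {name!r} not found in {models_dir}; "
            f"available: {available_architectures(models_dir)}")
    return path
```

Failing early with the list of available names makes a typo in ARCHITECTURE easier to spot than an import error raised later.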

Once the environment has been installed and the dataset and architecture have been defined, the framework can be used.

In particular, you need to set the following variables in the configuration file:

- MODE
  - Specifies the mode in which to start the framework. The allowed values of MODE are:
    - train_G1: train the generator G1
    - train_cDCGAN: train the conditional Generative Adversarial Network
    - evaluate_G1: compute the FID and IS scores of G1 on the test set
    - evaluate_GAN: compute the FID and IS scores of the whole framework on the test set
    - tsne_GAN: compute the t-SNE of the whole framework
    - inference_G1: run inference on the test set using G1
    - inference_GAN: run inference on the test set using the whole framework
    - plot_history_G1: plot the history file of the G1 training
    - plot_history_GAN: plot the history file of the GAN training
- DATASET
  - Name of the dataset to use. The dataset directory must be located in the ./data directory and its name must follow the pattern [type][underscore][note], i.e. [type]_[note].
- DATASET_CONFIGURATION
  - Name of the dataset configuration to use. The configuration directory must be a sub-folder of the tfrecord directory.
- ARCHITECTURE
  - Name of the architecture to use. The architecture directory must be located in the ./src/models directory.
- OUTPUTS_DIR
  - Name of the directory in which the results of training, validation and inference are saved.
- G1_NAME_WEIGHTS_FILE
  - Name of the .hdf5 file to load into the G1 model. The file is looked up in the OUTPUTS_DIR/weights/G1 directory. This variable is read only if MODE is one of: ['inference_G1', 'evaluate_G1', 'train_cDCGAN', 'evaluate_GAN', 'tsne_GAN', 'inference_GAN'].
- G2_NAME_WEIGHTS_FILE
  - Name of the .hdf5 file to load into the G2 model. The file is looked up in the OUTPUTS_DIR/weights/GAN directory. This variable is read only if MODE is one of: ['evaluate_GAN', 'inference_GAN', 'tsne_GAN'].
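The rules above about which weights file is read in which MODE can be summarised as a small lookup. The sketch below is hypothetical: it assumes the configuration values are available as a Python dict (the real configuration file may be organised differently), and the OUTPUTS_DIR value and .hdf5 file names are placeholders. Only the variable names, the MODE values and the weights directory layout come from the list above.

```python
import os

VALID_MODES = {
    "train_G1", "train_cDCGAN", "evaluate_G1", "evaluate_GAN", "tsne_GAN",
    "inference_G1", "inference_GAN", "plot_history_G1", "plot_history_GAN",
}

# For each weights variable: the MODE values that read it, and the
# sub-directory of OUTPUTS_DIR/weights where its .hdf5 file is looked up.
WEIGHTS_RULES = {
    "G1_NAME_WEIGHTS_FILE": ({"inference_G1", "evaluate_G1", "train_cDCGAN",
                              "evaluate_GAN", "tsne_GAN", "inference_GAN"}, "G1"),
    "G2_NAME_WEIGHTS_FILE": ({"evaluate_GAN", "inference_GAN", "tsne_GAN"}, "GAN"),
}


def resolve_weights(config):
    """Return {variable: path} for the weights files read in config["MODE"]."""
    mode = config["MODE"]
    if mode not in VALID_MODES:
        raise ValueError(f"unknown MODE {mode!r}")
    paths = {}
    for variable, (modes, subdir) in WEIGHTS_RULES.items():
        if mode in modes:
            paths[variable] = os.path.join(config["OUTPUTS_DIR"], "weights",
                                           subdir, config[variable])
    return paths


# Hypothetical example values (OUTPUTS_DIR and the file names are placeholders).
example_config = {
    "MODE": "evaluate_GAN",
    "DATASET": "Syntetich_complete",
    "DATASET_CONFIGURATION": "negative_no_flip_camp_5_keypoints_2_mask_1",
    "ARCHITECTURE": "bibranch",
    "OUTPUTS_DIR": "results",
    "G1_NAME_WEIGHTS_FILE": "g1_weights.hdf5",
    "G2_NAME_WEIGHTS_FILE": "g2_weights.hdf5",
}

if __name__ == "__main__":
    print(resolve_weights(example_config))
```

With MODE set to train_G1 or to one of the plot_history_* modes, neither weights variable is read, so the returned dict is empty; train_cDCGAN reads only the G1 weights.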

Once the configuration file is set, start the framework with:

```bash
python src/main.py
```

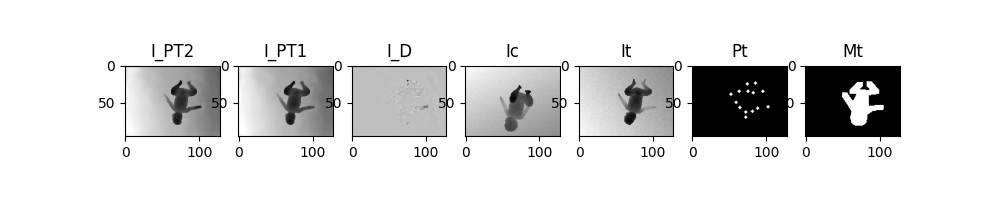

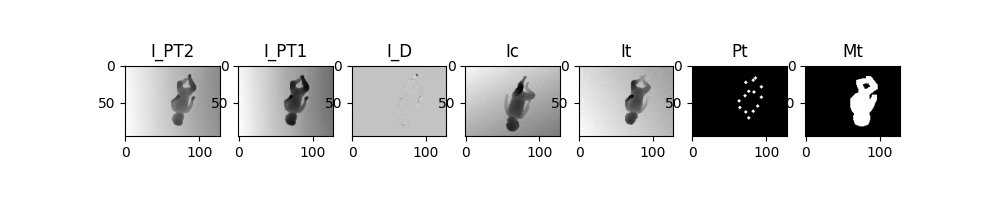

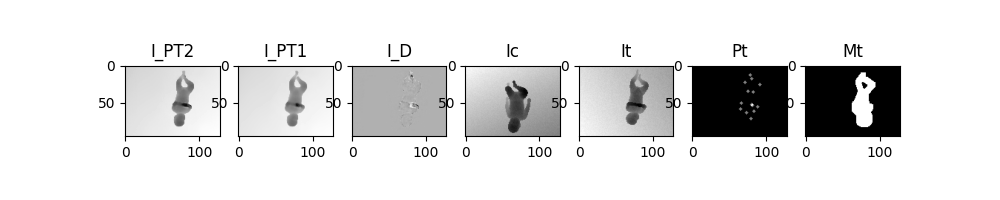

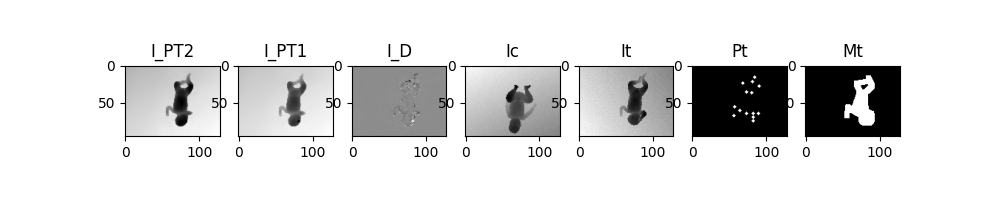

This section presents some qualitative results obtained using the bibranch architecture and the Syntetich_complete dataset (configuration negative_no_flip_camp_5_keypoints_2_mask_1).

- I_PT2 = I_PT1 + I_D

- I_PT1 = output of the G1 generator

- I_D = output of the G2 generator

- Ic = condition image

- It = target image

- Pt = target pose

- Mt = target binary mask
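The relation I_PT2 = I_PT1 + I_D is a pixel-wise sum of the G1 output and the G2 output. The toy sketch below only illustrates that arithmetic on small nested lists with made-up integer values; in the framework the images are tensors, and any normalisation or clipping applied there is not modelled here.

```python
from typing import List

Image = List[List[int]]


def compose_output(i_pt1: Image, i_d: Image) -> Image:
    """Compute I_PT2 = I_PT1 + I_D pixel by pixel."""
    if len(i_pt1) != len(i_d) or any(len(a) != len(b) for a, b in zip(i_pt1, i_d)):
        raise ValueError("I_PT1 and I_D must have the same shape")
    return [[p + d for p, d in zip(row_p, row_d)]
            for row_p, row_d in zip(i_pt1, i_d)]


i_pt1 = [[10, 20], [30, 40]]  # made-up G1 output
i_d = [[1, -2], [0, 5]]       # made-up G2 output
print(compose_output(i_pt1, i_d))  # [[11, 18], [30, 45]]
```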
