ChatSim: Editable Scene Simulation for Autonomous Driving via LLM-Agent Collaboration

02/09/2024 update: We have released the preprint on arXiv, and the code is coming soon!

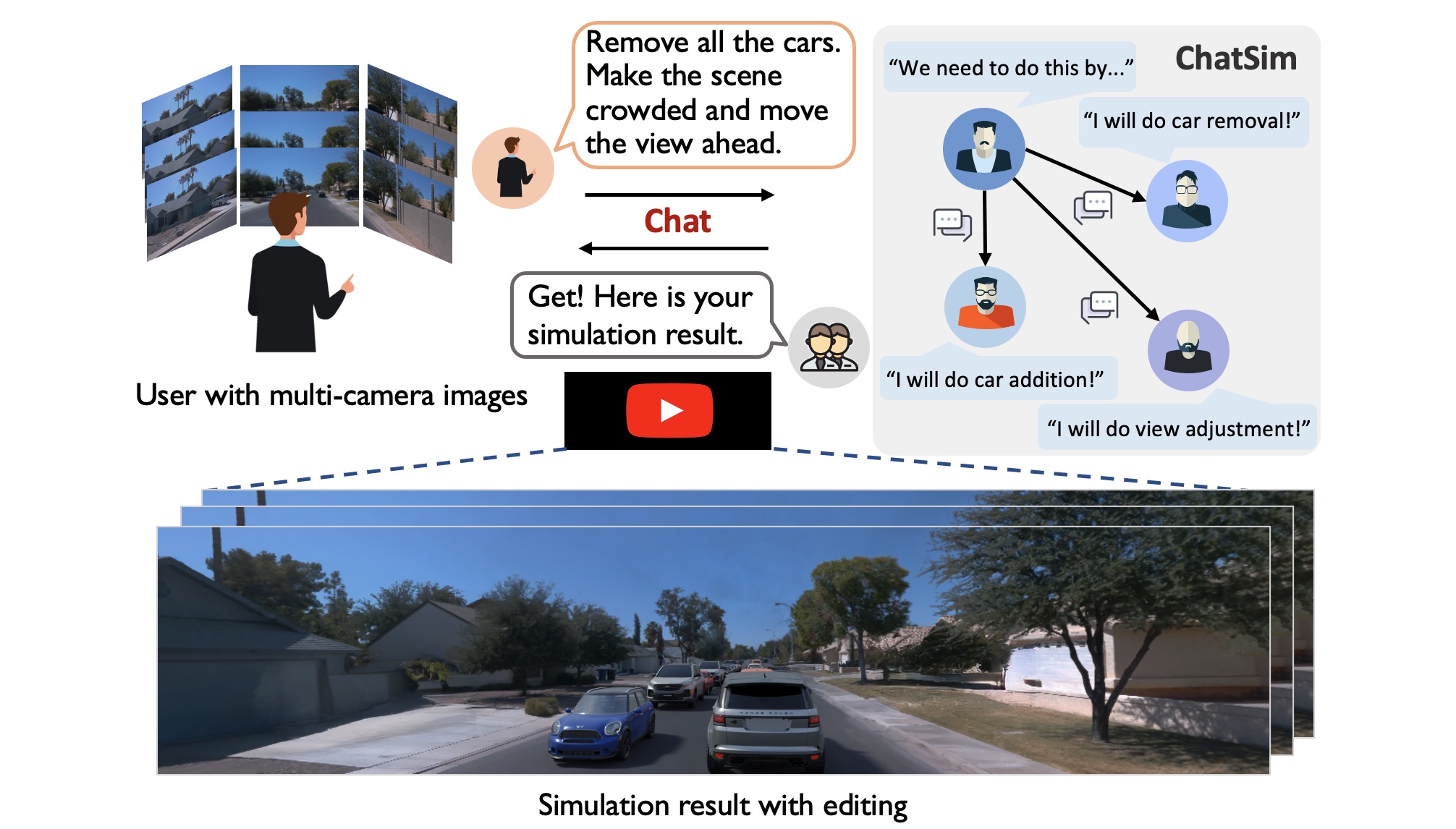

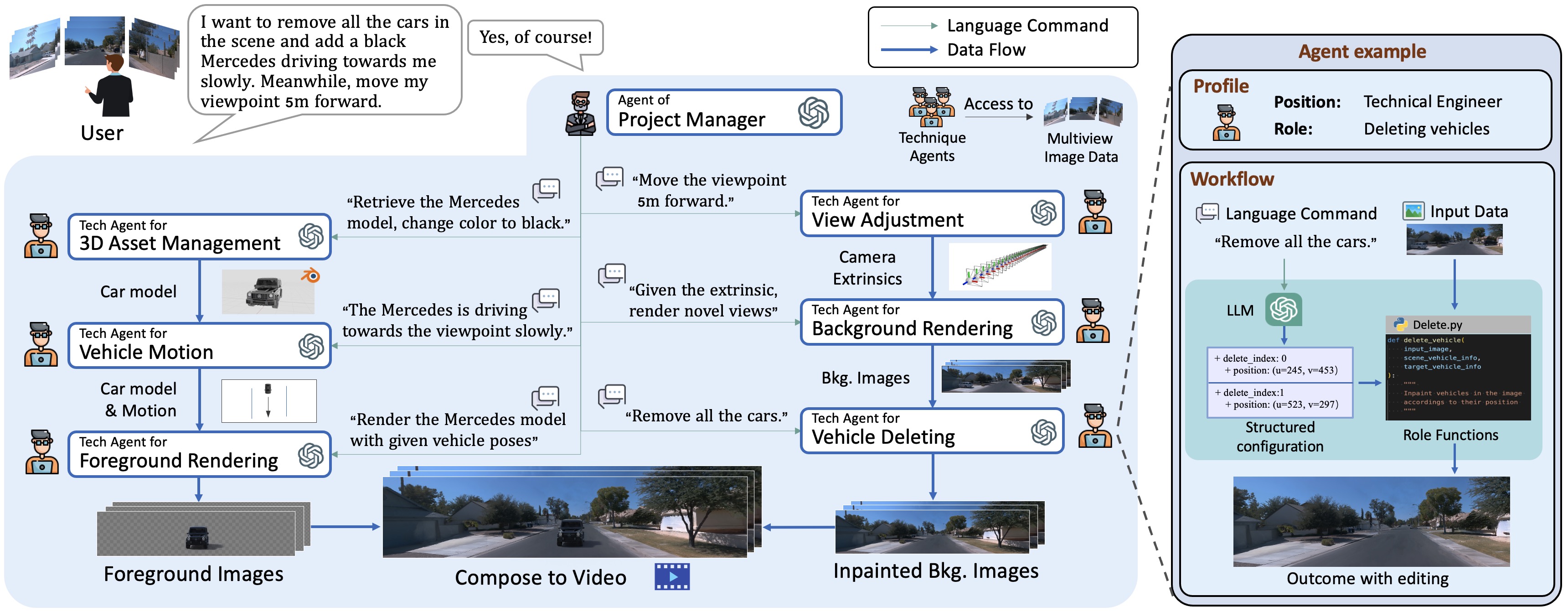

Scene simulation in autonomous driving has gained significant attention because of its huge potential for generating customized data. However, existing editable scene simulation approaches face limitations in user interaction efficiency, multi-camera photo-realistic rendering, and integration of external digital assets. To address these challenges, this paper introduces ChatSim, the first system that enables editable photo-realistic 3D driving scene simulations via natural language commands with external digital assets. To enable editing with high command flexibility, ChatSim leverages a large language model (LLM) agent collaboration framework. To generate photo-realistic outcomes, ChatSim employs a novel multi-camera neural radiance field method. Furthermore, to unleash the potential of extensive high-quality digital assets, ChatSim employs a novel multi-camera lighting estimation method to achieve scene-consistent asset rendering. Our experiments on the Waymo Open Dataset demonstrate that ChatSim can handle complex language commands and generate corresponding photo-realistic scene videos.

To address complex or abstract user commands effectively, ChatSim adopts a large language model (LLM)-based multi-agent collaboration framework. The key idea is to exploit multiple LLM agents, each with a specialized role, to decouple an overall simulation demand into specific editing tasks, thereby mirroring the task division and execution typically found in the workflow of a human-operated company. This workflow offers two key advantages for scene simulation. First, LLM agents' ability to process human language commands allows for intuitive and dynamic editing of complex driving scenes, enabling precise adjustments and feedback. Second, the collaboration framework enhances simulation efficiency and accuracy by distributing specific editing tasks among the agents, which improves task completion rates.
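The idea of decoupling one command into role-specific editing tasks can be illustrated with a small sketch. This is not ChatSim's code (which has not been released yet): the agent names (`deletion`, `addition`, `view_adjustment`), the `EditTask` type, and the keyword-based `decompose` function are all hypothetical, and simple keyword matching stands in for the LLM that would interpret the command in the real system.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class EditTask:
    agent: str        # hypothetical name of the specialized agent
    instruction: str  # the sentence handed to that agent


# Hypothetical keyword -> agent routing table; a real system would ask an LLM.
ROUTES = [("remove", "deletion"), ("add", "addition"), ("view", "view_adjustment")]


def decompose(command: str) -> List[EditTask]:
    """Split a command into sentences and route each one by keyword."""
    tasks: List[EditTask] = []
    # Split after sentence-ending punctuation followed by whitespace,
    # so decimals such as "0.5" are left intact.
    for sentence in re.split(r"(?<=[.!?])\s+", command.strip()):
        lowered = sentence.lower()
        for keyword, agent in ROUTES:
            if re.search(rf"\b{keyword}\b", lowered):
                tasks.append(EditTask(agent, sentence))
    return tasks


def run(command: str, agents: Dict[str, Callable[[str], str]]) -> List[str]:
    """Dispatch every task to its agent and collect the agents' reports."""
    return [agents[task.agent](task.instruction) for task in decompose(command)]


if __name__ == "__main__":
    command = (
        "Remove all cars in the scene and add a Porsche driving the wrong way "
        "toward me fast. The view should be moved 5 meters ahead and 0.5 meters above."
    )
    # Placeholder agents that only report which task they received.
    agents = {name: (lambda text, n=name: f"[{n}] {text}") for _, name in ROUTES}
    for report in run(command, agents):
        print(report)
```

In this sketch a sentence that mentions several operations is handed to several agents, each of which would extract only the part relevant to its role.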

User command: "Create a traffic jam."

traffic_jam_note.mp4

User command: "Remove all cars in the scene and add a Porsche driving the wrong way toward me fast. Additionally, add a police car also driving the wrong way and chasing behind the Porsche. The view should be moved 5 meters ahead and 0.5 meters above."

complex_input_note.mp4

User command (round 1): "Ego vehicle drives ahead slowly. Add a car to the close front that is moving ahead."

multiround_input_note_1.mp4

User command (round 2): "Modify the added car to turn left. Add another Chevrolet to the front of the added one."

multiround_input_note_2.mp4

User command (round 3): "Add another vehicle to the left of the Mini driving toward me."

multiround_input_note_3.mp4

ChatSim adopts a novel multi-camera lighting estimation method. With the predicted environment lighting, we use Blender to render foreground objects so that they appear consistent with the scene.

foreground_rendering.mp4

ChatSim introduces an innovative multi-camera radiance field approach to tackle the challenges of inaccurate poses and inconsistent exposures among surrounding cameras in autonomous vehicles. This method enables the rendering of ultra-wide-angle images that exhibit consistent brightness across the entire image.

background_rendering1.mp4
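To make the exposure inconsistency problem concrete, here is a toy sketch of per-camera gain normalization. It is not the radiance field method described above: it only rescales each camera's image so that all cameras share the same mean brightness. The function names, the camera names in the test data, and the grayscale list-of-lists image format are assumptions made for illustration.

```python
from statistics import fmean
from typing import Dict, List

Image = List[List[float]]  # grayscale image, pixel values in [0, 1]


def mean_brightness(image: Image) -> float:
    """Average pixel value over the whole image."""
    return fmean(pixel for row in image for pixel in row)


def exposure_gains(images: Dict[str, Image]) -> Dict[str, float]:
    """Per-camera gain that maps each camera's mean brightness to the shared mean."""
    means = {name: mean_brightness(img) for name, img in images.items()}
    target = fmean(means.values())
    # A completely black image cannot be rescaled, so its gain is left at 1.0.
    return {name: (target / m if m > 0 else 1.0) for name, m in means.items()}


def apply_gain(image: Image, gain: float) -> Image:
    """Scale every pixel by the gain, clipping to the valid range."""
    return [[min(1.0, pixel * gain) for pixel in row] for row in image]


if __name__ == "__main__":
    cameras = {"front": [[0.2, 0.4]], "front_left": [[0.4, 0.8]]}
    gains = exposure_gains(cameras)
    for name, image in cameras.items():
        print(name, round(gains[name], 3), apply_gain(image, gains[name]))
```

A global gain like this cannot fix pose errors or spatially varying exposure, which is part of why the problem is addressed inside the radiance field itself.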

- arXiv paper release

- Code release

- Data and model release