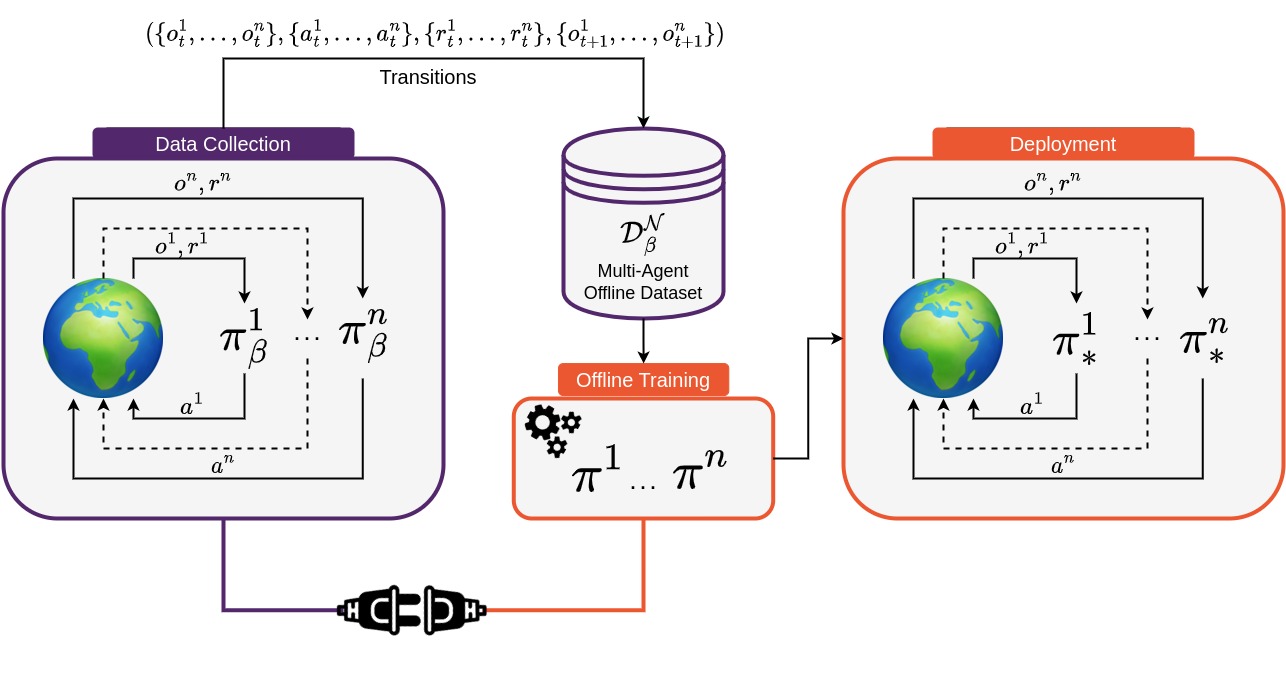

Offline MARL holds great promise for real-world applications by utilising static datasets to build decentralised controllers of complex multi-agent systems. However, currently offline MARL lacks a standardised benchmark for measuring meaningful research progress. Off-the-Grid MARL (OG-MARL) fills this gap by providing a diverse suite of datasets with baselines on popular MARL benchmark environments in one place, with a unified API and an easy-to-use set of tools.

OG-MARL forms part of the InstaDeep MARL ecosystem, developed jointly with the open-source community. To join us in these efforts, reach out, raise issues or just 🌟 to stay up to date with the latest developments!

OG-MARL is a research tool under active development and is therefore evolving quickly. We have several exciting features on the roadmap, but introducing a new feature may sometimes abruptly change how things work in OG-MARL. In the interest of moving quickly, we believe this is an acceptable trade-off and ask our users to kindly be aware of it.

The following is a list of the latest updates to OG-MARL:

✅ We have removed several cumbersome dependencies from OG-MARL, including reverb and launchpad. This means that it is significantly easier to install and use OG-MARL.

✅ We added functionality to pre-load the TF Record datasets into a Cpprb replay buffer. This speeds up sampling from the replay buffer by several orders of magnitude.

✅ We have implemented our first set of JAX-based systems in OG-MARL. Our JAX systems use Flashbax as the replay buffer backend. Flashbax buffers are completely jit-able, which means that our JAX systems have fully integrated and jitted training and data sampling.

✅ We have integrated MARL-eval into OG-MARL to standardise and simplify the reporting of experimental results.

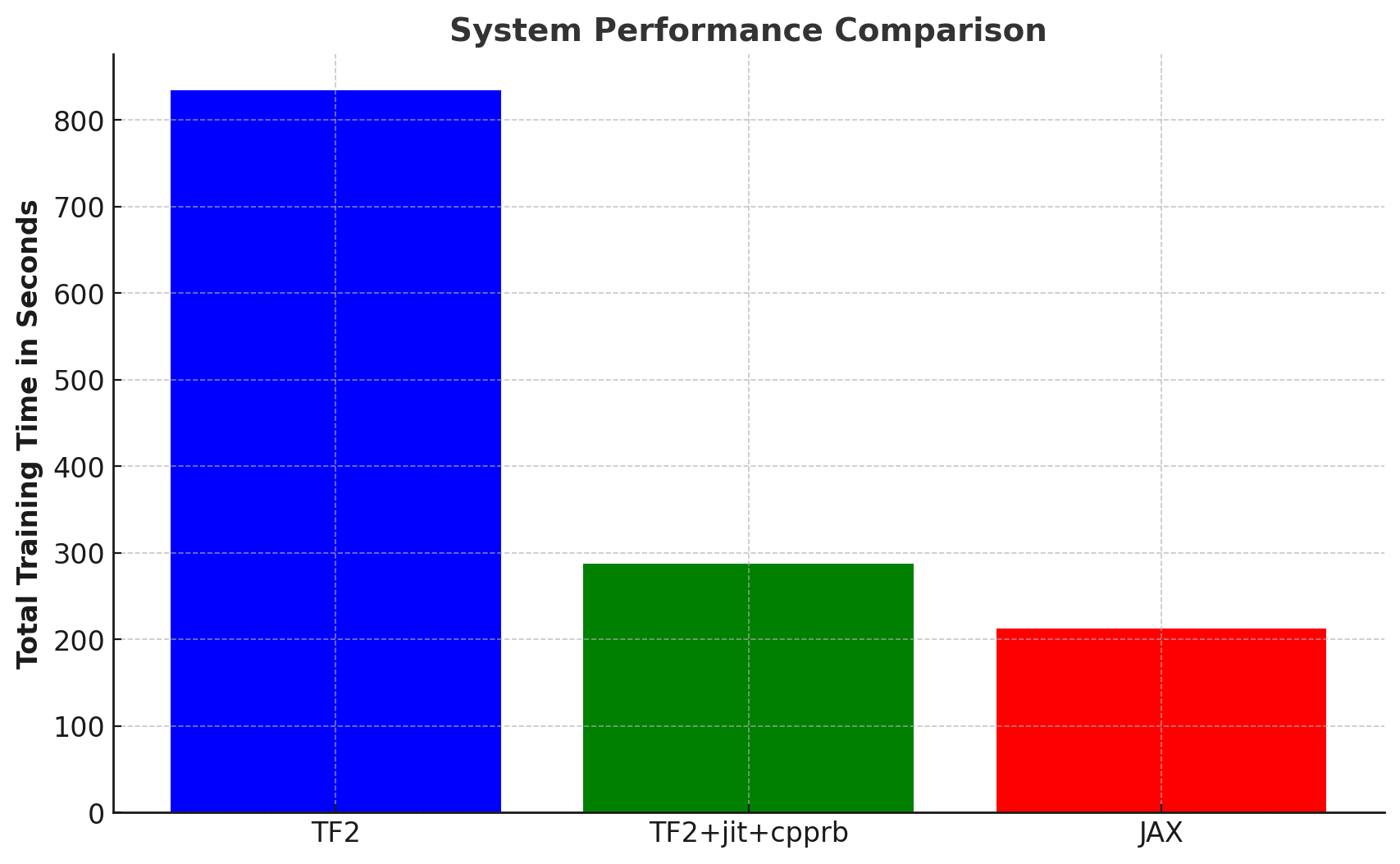

We have made our TF2 systems compatible with jit compilation. This, combined with our new cpprb replay buffers, has made our systems significantly faster. Furthermore, our JAX systems, which use Flashbax to tightly integrate replay sampling and training, are even faster.

Speed Comparison: for each setup, we trained MAICQ on the 8m Good dataset for 10k training steps and evaluated every 1k training steps for 4 episodes using a batch size of 256.

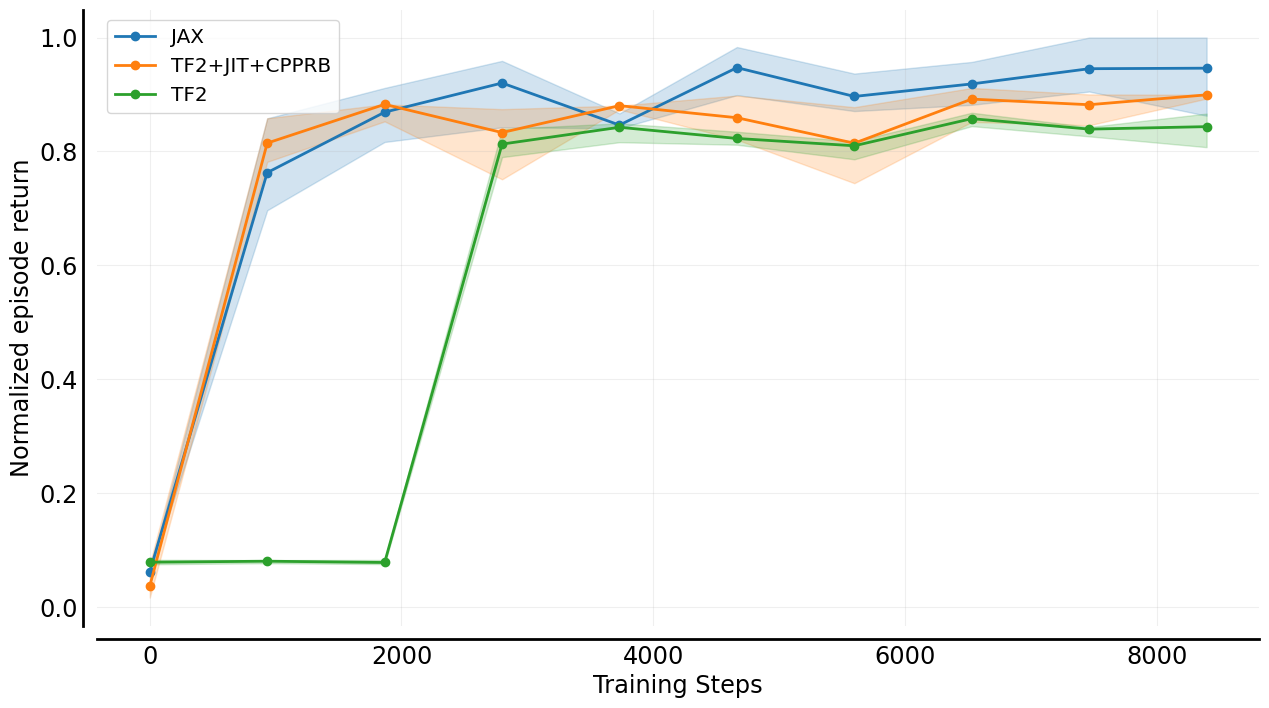

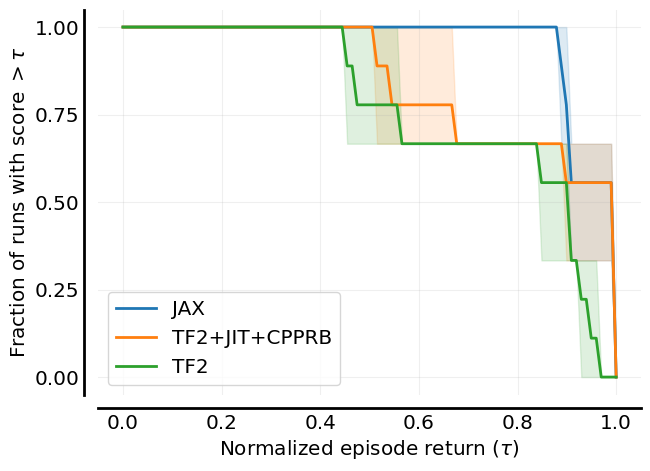

Performance Comparison: To verify that the TF2 system and the JAX system perform equivalently, we trained both variants on each of the three 8m datasets (Good, Medium and Poor). We then normalised the scores and aggregated the results using MARL-eval. The sample efficiency curves and the performance profiles are given below.
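As a rough illustration of what normalising and aggregating scores across datasets can look like, here is a minimal sketch in plain Python. It assumes min-max normalisation against fixed reference bounds followed by a simple mean; the exact procedure used by MARL-eval is not described here, so treat the function name `min_max_normalise`, the bounds and the returns as hypothetical placeholders rather than OG-MARL results.

```python
from statistics import mean


def min_max_normalise(scores, low, high):
    """Map raw episode returns to [0, 1] using reference bounds low and high."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return [(s - low) / (high - low) for s in scores]


# Made-up returns for illustration only; these are NOT OG-MARL results.
raw_returns = {
    "Good": [10.0, 15.0, 20.0],
    "Medium": [5.0, 10.0, 15.0],
    "Poor": [0.0, 5.0, 10.0],
}
low, high = 0.0, 20.0  # hypothetical reference bounds for the task

# Normalise each dataset's returns, then average the per-dataset means.
normalised = {
    name: min_max_normalise(values, low, high)
    for name, values in raw_returns.items()
}
aggregate = mean(mean(values) for values in normalised.values())
print(f"aggregate normalised score: {aggregate:.3f}")
```

Normalising before aggregating keeps tasks with large raw returns from dominating the average.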

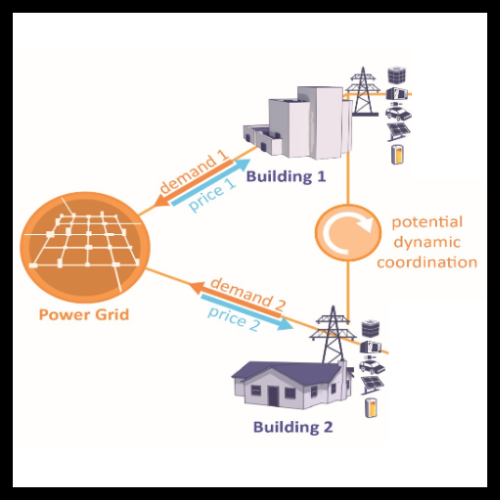

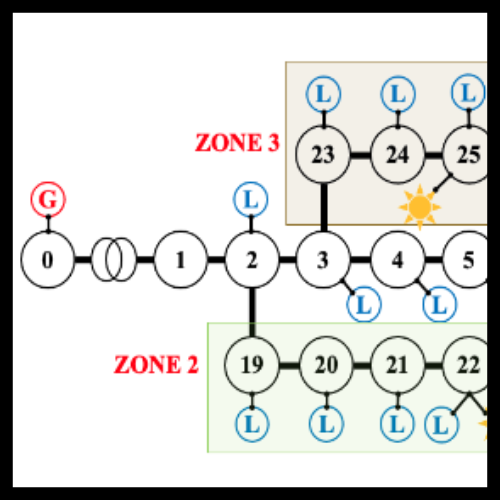

We have generated datasets on a diverse set of popular MARL environments. A list of currently supported environments is included in the table below. It is well known from the single-agent offline RL literature that the quality of experience in offline datasets can play a large role in the final performance of offline RL algorithms. Therefore in OG-MARL, for each environment and scenario, we include a range of dataset distributions including Good, Medium, Poor and Replay datasets in order to benchmark offline MARL algorithms on a range of different dataset qualities. For more information on why we chose to include each environment and its task properties, please read our accompanying paper.

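| Environment | Scenario | Agents | Act | Obs | Reward | Types | Repo |
|---|---|---|---|---|---|---|---|
| 🔫SMAC v1 | 3m | 3 | Discrete | Vector | Dense | Homog | source |
| 🔫SMAC v1 | 8m | 8 | Discrete | Vector | Dense | Homog | source |
| 🔫SMAC v1 | 2s3z | 5 | Discrete | Vector | Dense | Heterog | source |
| 🔫SMAC v1 | 5m_vs_6m | 5 | Discrete | Vector | Dense | Homog | source |
| 🔫SMAC v1 | 27m_vs_30m | 27 | Discrete | Vector | Dense | Homog | source |
| 🔫SMAC v1 | 3s5z_vs_3s6z | 8 | Discrete | Vector | Dense | Heterog | source |
| 🔫SMAC v1 | 2c_vs_64zg | 2 | Discrete | Vector | Dense | Homog | source |
| 💣SMAC v2 | terran_5_vs_5 | 5 | Discrete | Vector | Dense | Heterog | source |
| 💣SMAC v2 | zerg_5_vs_5 | 5 | Discrete | Vector | Dense | Heterog | source |
| 💣SMAC v2 | terran_10_vs_10 | 10 | Discrete | Vector | Dense | Heterog | source |
| 🐻PettingZoo | Pursuit | 8 | Discrete | Pixels | Dense | Homog | source |
| 🐻PettingZoo | Co-op Pong | 2 | Discrete | Pixels | Dense | Heterog | source |
| 🐻PettingZoo | PistonBall | 15 | Cont. | Pixels | Dense | Homog | source |
| 🐻PettingZoo | KAZ | 2 | Discrete | Vector | Dense | Heterog | source |
| 🚅Flatland | 3 Trains | 3 | Discrete | Vector | Sparse | Homog | source |
| 🚅Flatland | 5 Trains | 5 | Discrete | Vector | Sparse | Homog | source |
| 🐜MAMuJoCo | 2-HalfCheetah | 2 | Cont. | Vector | Dense | Heterog | source |
| 🐜MAMuJoCo | 2-Ant | 2 | Cont. | Vector | Dense | Homog | source |
| 🐜MAMuJoCo | 4-Ant | 4 | Cont. | Vector | Dense | Homog | source |
| 🏙️CityLearn | 2022_all_phases | 17 | Cont. | Vector | Dense | Homog | source |
| 🔌Voltage Control | case33_3min_final | 6 | Cont. | Vector | Dense | Homog | source |
| 🔴MPE | simple_adversary | 3 | Discrete | Vector | Dense | Competitive | source |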

Note: The dataset on KAZ was generated by recording experience from human game players.

To install og-marl, run the following command.

    pip install -e .

To run the JAX-based systems, include the extra requirements.

    pip install -e ".[jax]"

Depending on the environment you want to use, you should install that environment's dependencies. We provide convenient shell scripts for this.

    bash install_environments/<environment_name>.sh

You should replace `<environment_name>` with the name of the environment you want to install.

Installing several different environments' dependencies in the same Python virtual environment (or conda environment) may work in some cases, but in others they may have conflicting requirements. We therefore recommend maintaining a separate virtual environment for each environment.

Next, download the dataset you want to use and add it to the correct file path. We provide a utility for easily downloading and extracting datasets. Below is an example of how to download the dataset for the "3m" map in SMACv1.

    from og_marl.offline_dataset import download_and_unzip_dataset

    download_and_unzip_dataset("smac_v1", "3m")

After running the download function, check that the datasets were extracted to the correct location, as shown below. Alternatively, go to the OG-MARL website and download the dataset manually. Once the zip file is downloaded, extract the datasets from it and add them to a directory called `datasets` on the same level as the `og_marl/` directory. The folder structure should look like this:

    examples/
        |_> ...
    og_marl/
        |_> ...
    datasets/
        |_> smac_v1/
            |_> 3m/
            |   |_> Good/
            |   |_> Medium/
            |   |_> Poor/
            |_> ...
        |_> smac_v2/
            |_> terran_5_vs_5/
            |   |_> Good/
            |   |_> Medium/
            |   |_> Poor/
            |_> ...
    ...
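To check the layout above programmatically, a small helper along these lines may be useful. This is only a sketch: `missing_dataset_dirs` is a hypothetical function, not part of OG-MARL, and it assumes the scenario ships with the Good, Medium and Poor folders shown in the tree (some scenarios may provide a different set, such as Replay).

```python
from pathlib import Path


def missing_dataset_dirs(base_dir, env_name, scenario_name,
                         qualities=("Good", "Medium", "Poor")):
    """Return the expected dataset folders that do not exist on disk."""
    root = Path(base_dir) / env_name / scenario_name
    return [str(root / quality) for quality in qualities
            if not (root / quality).is_dir()]


# Check the "3m" SMAC v1 datasets relative to the current working directory.
missing = missing_dataset_dirs("datasets", "smac_v1", "3m")
if missing:
    print("Missing dataset folders:")
    for path in missing:
        print(f"  {path}")
else:
    print("All expected dataset folders were found.")
```

Run it from the directory that contains `datasets/`, or pass an absolute path as `base_dir`.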

We include scripts (`examples/tf2/main.py` and `examples/jax/main.py`) for easily launching experiments using the command below:

    python examples/<backend>/main.py --system=<system_name> --env=<env_name> --scenario=<scenario_name>

Example options for each placeholder are given below:

- `<backend>`: {jax, tf2}

- `<system_name>`: {maicq, qmix, qmix+cql, qmix+bcq, idrqn, iddpg, ...}

- `<env_name>`: {smac_v1, smac_v2, mamujoco, ...}

- `<scenario_name>`: {3m, 8m, terran_5_vs_5, 2halfcheetah, ...}

Note: We have not implemented any checks to make sure the combination of env, scenario and system is valid. For example, certain algorithms can only be run on discrete action environments. We hope to implement more guard rails in the future. For now, please refer to the code and the paper for clarification. We are also still in the process of migrating all the experiments to this unified launcher. So if some configuration is not supported yet, please reach out in the issues and we will be happy to help.
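Until such checks exist in the launcher, a thin wrapper can catch the most obvious mismatch before a run starts. The sketch below is not part of OG-MARL: `build_launch_command` and both lookup tables are hypothetical. The environment action types are taken from the table of supported environments; the system action types are an assumption (Q-learning-style systems treated as discrete, `iddpg` as continuous) and should be verified against the code and the paper.

```python
import sys

# ASSUMPTION: action-space type each system supports; verify before relying on it.
SYSTEM_ACTIONS = {
    "maicq": "discrete",
    "qmix": "discrete",
    "qmix+cql": "discrete",
    "qmix+bcq": "discrete",
    "idrqn": "discrete",
    "iddpg": "continuous",
}

# Action-space type per environment, as listed in the environment table.
ENV_ACTIONS = {
    "smac_v1": "discrete",
    "smac_v2": "discrete",
    "mamujoco": "continuous",
}


def build_launch_command(backend, system, env, scenario):
    """Build the launcher argv list, rejecting known-incompatible combinations."""
    if backend not in ("jax", "tf2"):
        raise ValueError(f"unknown backend: {backend!r}")
    system_actions = SYSTEM_ACTIONS.get(system)
    env_actions = ENV_ACTIONS.get(env)
    # Only reject when both sides are known; unknown names pass through unchecked.
    if system_actions and env_actions and system_actions != env_actions:
        raise ValueError(
            f"{system!r} expects {system_actions} actions, "
            f"but {env!r} has {env_actions} actions"
        )
    return [
        sys.executable,
        f"examples/{backend}/main.py",
        f"--system={system}",
        f"--env={env}",
        f"--scenario={scenario}",
    ]


print(" ".join(build_launch_command("jax", "maicq", "smac_v1", "8m")))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root to start the experiment.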

The snippet below downloads a Flashbax dataset and trains the JAX MAICQ system on the 8m Good dataset:

    from og_marl.offline_dataset import download_flashbax_dataset
    from og_marl.environments.smacv1 import SMACv1
    from og_marl.jax.systems.maicq import train_maicq_system
    from og_marl.loggers import TerminalLogger

    # Download the dataset
    download_flashbax_dataset(
        env_name="smac_v1",
        scenario_name="8m",
        base_dir="datasets/flashbax"
    )
    dataset_path = "datasets/flashbax/smac_v1/8m/Good"

    # Instantiate environment for evaluation
    env = SMACv1("8m")

    # Setup a logger to write to terminal
    logger = TerminalLogger()

    # Train system
    train_maicq_system(env, logger, dataset_path)

InstaDeep's MARL ecosystem in JAX. In particular, we suggest users check out the following sister repositories:

- 🦁 Mava: a research-friendly codebase for distributed MARL in JAX.

- 🌴 Jumanji: a diverse suite of scalable reinforcement learning environments in JAX.

- 😎 Matrax: a collection of matrix games in JAX.

- 🔦 Flashbax: accelerated replay buffers in JAX.

- 📈 MARL-eval: standardised experiment data aggregation and visualisation for MARL.

Related. Other libraries related to accelerated MARL in JAX.

- 🦊 JaxMARL: accelerated MARL environments with baselines in JAX.

- ♟️ Pgx: JAX implementations of classic board games, such as Chess, Go and Shogi.

- 🔼 Minimax: JAX implementations of autocurricula baselines for RL.

If you use OG-MARL in your work, please cite the library using:

    @inproceedings{formanek2023ogmarl,
        author = {Formanek, Claude and Jeewa, Asad and Shock, Jonathan and Pretorius, Arnu},
        title = {Off-the-Grid MARL: Datasets and Baselines for Offline Multi-Agent Reinforcement Learning},
        year = {2023},
        publisher = {AAMAS},
        booktitle = {Extended Abstract at the 2023 International Conference on Autonomous Agents and Multiagent Systems},
    }

The development of this library was supported with Cloud TPUs from Google's TPU Research Cloud (TRC) 🌤.