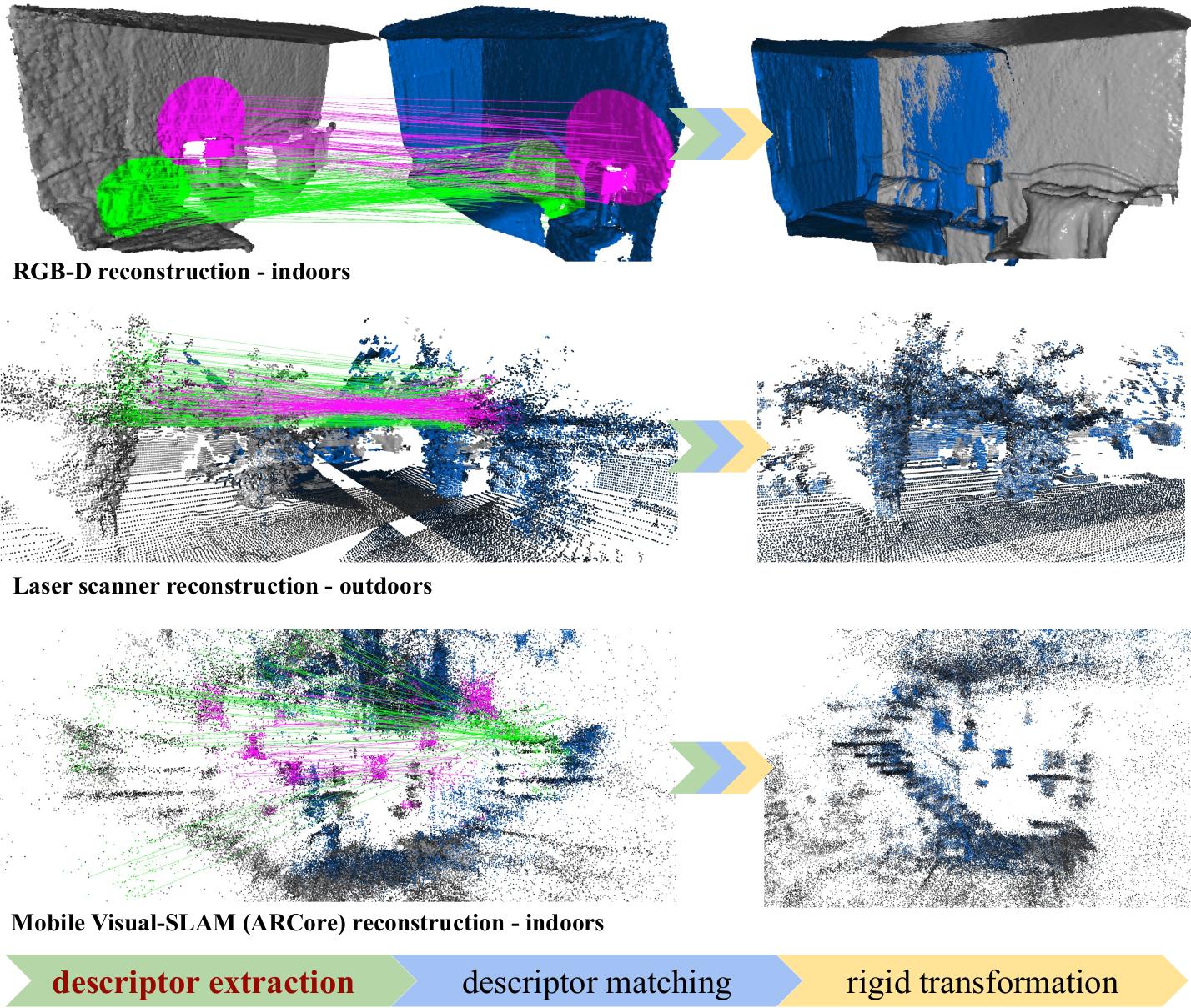

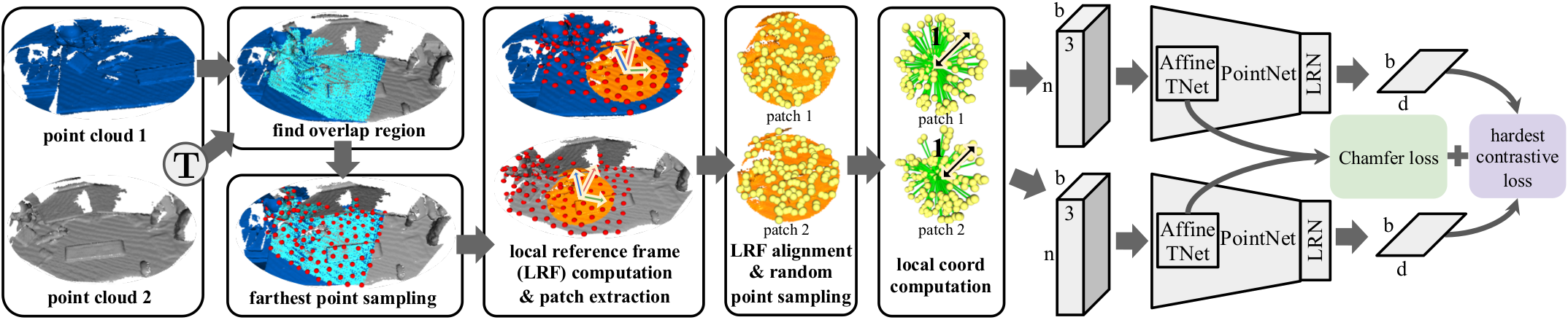

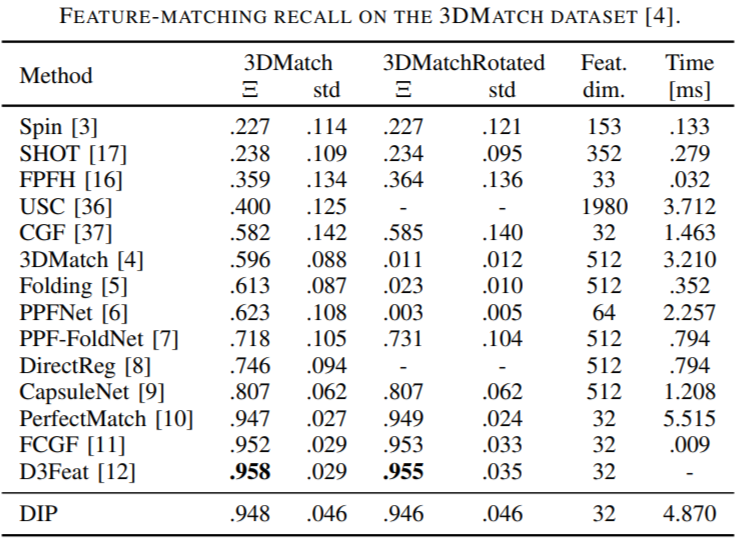

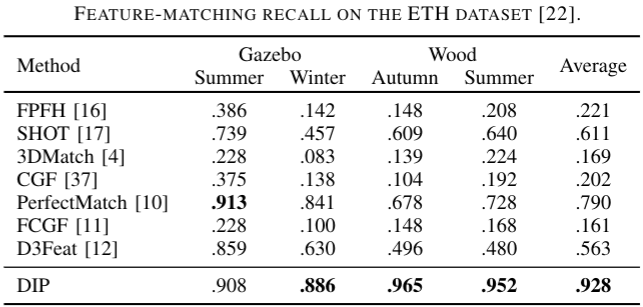

Distinctive 3D local deep descriptors (DIPs) are rotation-invariant compact 3D descriptors computed using a PointNet-based deep neural network. DIPs can be used to register point clouds without requiring an initial alignment. DIPs are generated from point-cloud patches that are canonicalised with respect to their estimated local reference frame (LRF). DIPs can effectively generalise across different sensor modalities because they are learnt end-to-end from locally and randomly sampled points. DIPs (i) achieve comparable results to the state-of-the-art on RGB-D indoor scenes (3DMatch dataset), (ii) outperform state-of-the-art by a large margin in terms of generalisation on laser-scanner outdoor scenes (ETH dataset), and (iii) generalise to indoor scenes reconstructed with the Visual-SLAM system of Android ARCore.

Descriptor quality is assessed using feature-matching recall [6]. See the paper for the references.

| 3DMatch dataset | Generalisation ability on ETH dataset |
|---|---|
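Feature-matching recall can be sketched as follows. This is a minimal illustration, not the evaluation code used for the paper: the function names are hypothetical, and the default thresholds (an inlier distance of 0.1 m and an inlier ratio of 0.05) are values commonly used with the 3DMatch benchmark, assumed here rather than taken from this repository.

```python
import math


def _dist(p, q):
    """Euclidean distance between two 3D points given as sequences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def inlier_ratio(matches, dist_thresh=0.1):
    """Fraction of matched point pairs that are closer than dist_thresh.

    `matches` is a list of (p, q) pairs of 3D points, where p is assumed to be
    already mapped into q's frame with the ground-truth transformation.
    """
    if not matches:
        return 0.0
    inliers = sum(1 for p, q in matches if _dist(p, q) < dist_thresh)
    return inliers / len(matches)


def feature_matching_recall(pairs, dist_thresh=0.1, ratio_thresh=0.05):
    """Fraction of point-cloud pairs whose inlier ratio exceeds ratio_thresh.

    `pairs` holds one list of matches per point-cloud pair.
    """
    if not pairs:
        return 0.0
    hits = sum(1 for m in pairs if inlier_ratio(m, dist_thresh) > ratio_thresh)
    return hits / len(pairs)
```

A point-cloud pair counts as correctly matched as soon as a small fraction of its descriptor matches are geometrically consistent, which is why the metric measures descriptor quality rather than the final registration accuracy.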

- Ubuntu 16.04

- CUDA 10.2

- Python 3.6

- PyTorch 1.4

- Open3D 0.8.0

- torch-cluster

- torch-nndistance

git clone https://github.com/fabiopoiesi/dip.git

cd dip

pip install -r requirements.txt

pip install torch-cluster==1.4.5 -f https://pytorch-geometric.com/whl/torch-1.4.0.html

cd torch-nndistance

python build.py install
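After installation, a quick check like the one below can confirm that the dependencies are importable. It is a sketch only: the import names (in particular torch_cluster and torch_nndistance) are assumed from the package names listed above, and the helper missing_modules is hypothetical, not part of this repository.

```python
import importlib.util
import sys

# Import names assumed from the package names in the requirements list.
REQUIRED_MODULES = ("torch", "open3d", "torch_cluster", "torch_nndistance")


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("Python", sys.version.split()[0])
    print("Missing modules:", ", ".join(missing) if missing else "none")
```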

The datasets used in the paper are listed below, along with links to their original project pages. For convenience and reproducibility, our preprocessed data<sup>1</sup> are available for download. The preprocessed data for the 3DMatchRotated dataset (an augmented version of 3DMatch) are not provided and must be generated through preprocessing (see below). After downloading the folders and unzipping the files, the dataset root directory should have the following structure.

.

├── 3DMatch_test

├── 3DMatch_test_pre

├── 3DMatch_train

├── 3DMatch_train_pre

├── ETH_test

├── ETH_test_pre

└── VigoHome
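The layout above can be verified before training or running the demos. The helper below is a hypothetical convenience, not part of the repository; it only checks that the folders listed above exist under a given root.

```python
from pathlib import Path

# Folder names taken from the directory structure listed above.
EXPECTED_DIRS = (
    "3DMatch_test",
    "3DMatch_test_pre",
    "3DMatch_train",
    "3DMatch_train_pre",
    "ETH_test",
    "ETH_test_pre",
    "VigoHome",
)


def missing_dataset_dirs(dataset_root):
    """Return the expected folders that are absent from dataset_root."""
    root = Path(dataset_root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]
```

An empty list means every expected folder is present; it says nothing about whether the files inside are complete.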

The original 3DMatch dataset can be found here. We used data from the RGB-D Reconstruction Datasets. Point cloud PLYs are generated using Multi-Frame Depth TSDF Fusion from here.

The original ETH dataset can be found here.

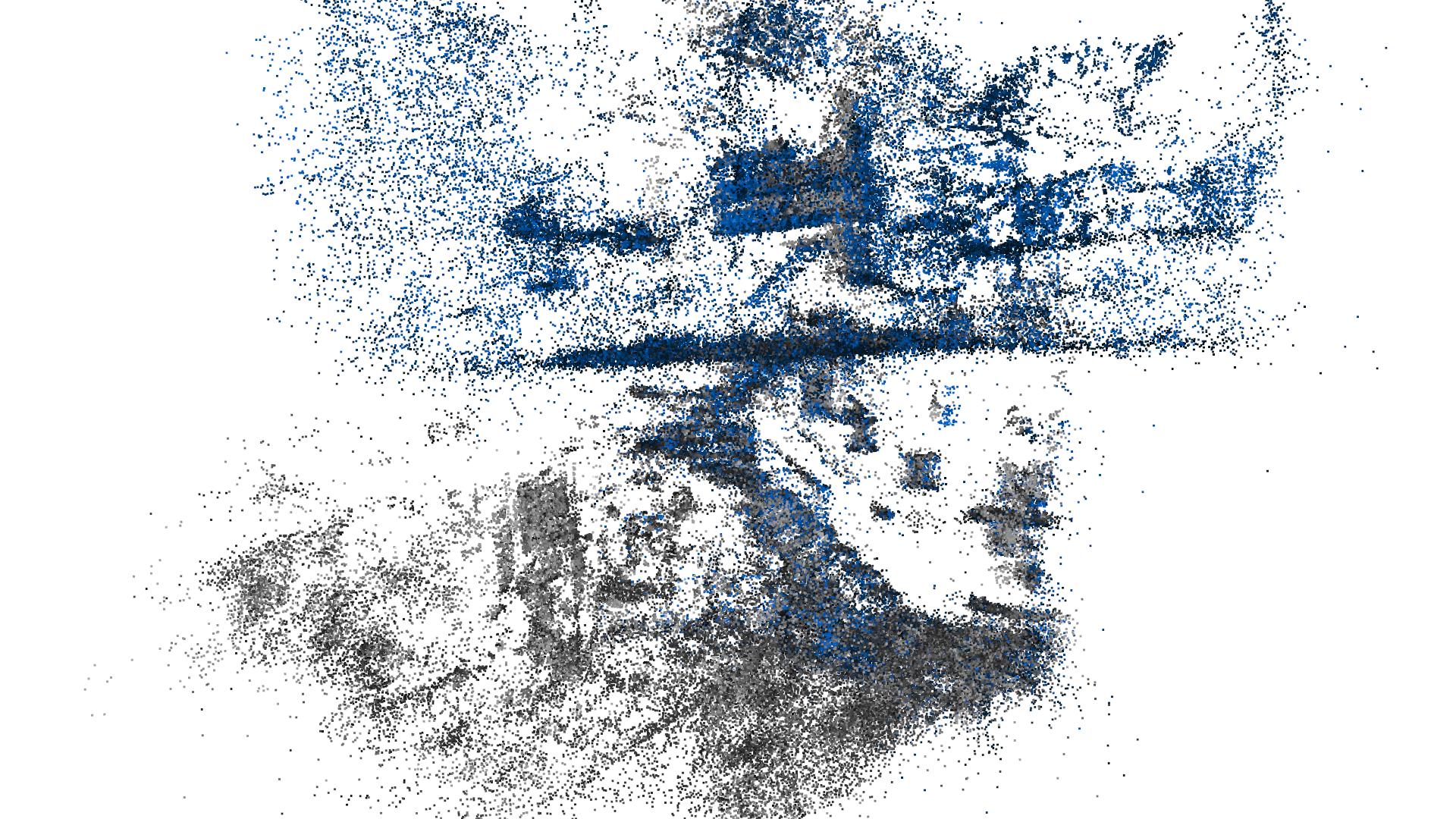

We collected VigoHome with our Android ARCore-based Visual-SLAM app. The dataset can be downloaded here, while the app's APK can be downloaded here (available soon).

Preprocessing generates the patches and LRFs used for training, which greatly reduces training time. It requires two steps: the first computes point correspondences between point-cloud pairs using the Iterative Closest Point algorithm; the second produces patches along with their LRFs. To preprocess the 3DMatch training data, run preprocess_3dmatch_correspondences_train.py and then preprocess_3dmatch_lrf_train.py (the same procedure applies to the test data). Make sure the datasets are downloaded and the paths in the code are set.
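The idea behind the first step can be illustrated with a brute-force sketch: once two overlapping point clouds are aligned by a rigid transformation, each source point is paired with its nearest target point, and the pair is kept only if the two points are close enough. This is not the code in the preprocessing scripts; the function names and the 0.05 distance threshold are assumptions made for illustration, and the transformation is taken as given rather than refined with ICP.

```python
import math


def apply_transform(T, p):
    """Apply a 4x4 rigid transformation (nested lists, row-major) to a 3D point."""
    return tuple(
        T[i][0] * p[0] + T[i][1] * p[1] + T[i][2] * p[2] + T[i][3]
        for i in range(3)
    )


def find_correspondences(source, target, T, max_dist=0.05):
    """Return (i, j) index pairs such that target[j] is the nearest neighbour
    of the transformed source[i] and lies within max_dist of it."""
    pairs = []
    if not target:
        return pairs
    for i, p in enumerate(source):
        p_t = apply_transform(T, p)
        # Brute-force nearest neighbour; a k-d tree would be used on real data.
        j = min(range(len(target)), key=lambda k: math.dist(p_t, target[k]))
        if math.dist(p_t, target[j]) < max_dist:
            pairs.append((i, j))
    return pairs
```

The resulting index pairs identify the points around which corresponding patches would be extracted in the second step.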

Training requires preprocessed data, i.e. patches and LRFs (extracting and computing them at each training iteration would be too slow). See preprocessing to create your own preprocessed data, or download ours. To train, set the variable dataset_root in train.py, then run

python train.py

Training generates checkpoints in the chkpts directory and training logs in the logs directory. Logs can be monitored through TensorBoard by running

tensorboard --logdir=logs

We included three demos, one for each dataset evaluated in the paper. The point clouds processed in the demos are in the assets directory, and the model trained on the 3DMatch dataset is in model. Run

python demo_3dmatch.py

python demo_eth.py

python demo_vigohome.py

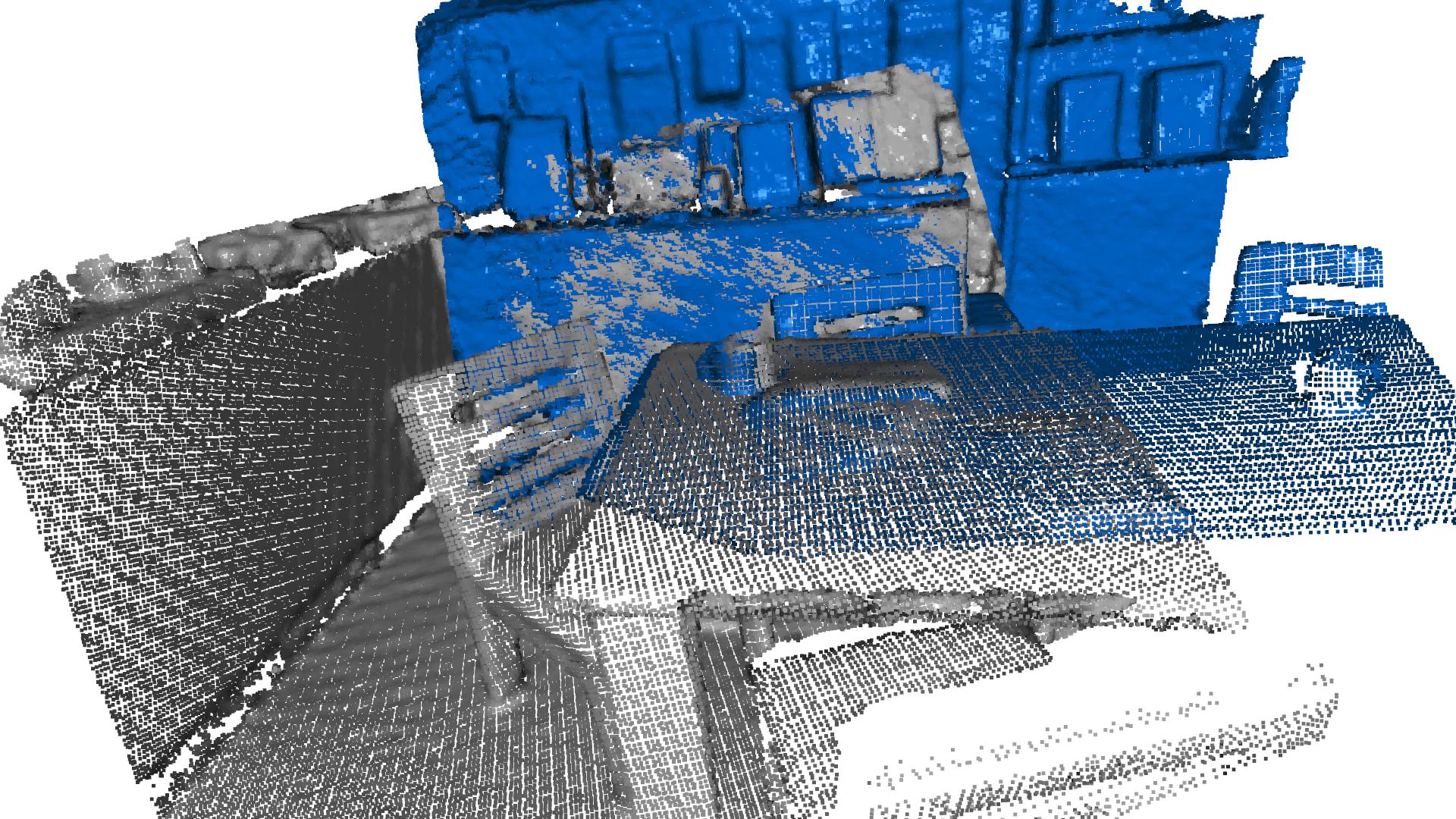

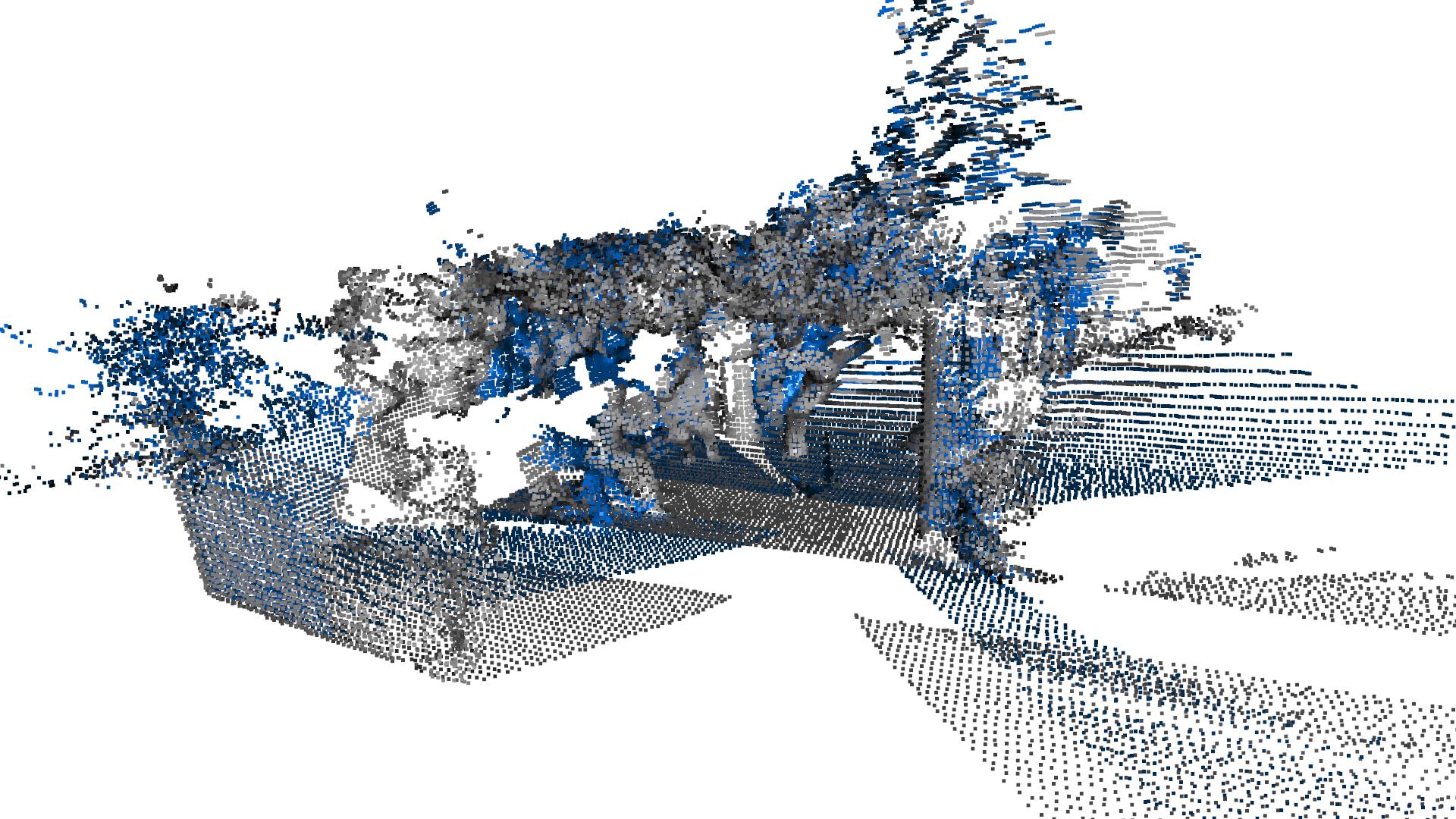

The results of each demo should look like the ones below. Because the registration is estimated with RANSAC, results may differ slightly between runs.

| 3DMatch dataset | ETH dataset | VigoHome dataset |
|---|---|---|

Graphs<sup>2,3</sup> of Fig. 6 can be generated by running

python graphs/viz_graphs.py

Please cite the following paper if you use our code

@inproceedings{Poiesi2021,

title = {Distinctive {3D} local deep descriptors},

author = {Poiesi, Fabio and Boscaini, Davide},

booktitle = {IEEE Proc. of Int'l Conference on Pattern Recognition},

address = {Milan, IT},

month = {Jan},

year = {2021}

}

This research has received funding from the Fondazione CARITRO - Ricerca e Sviluppo programme 2018-2020.

We also thank <sup>1</sup>Zan Gojcic, <sup>2</sup>Chris Choy and <sup>3</sup>Xuyang Bai for their support in collecting the data for the paper.