This repository contains a collection of my articles on various topics in AI (including DL/ML/DS) research in Python. Some articles contain code examples for demonstration purposes; links to the full version of the corresponding code can be found in each article.

- Exploring distributed training with Keras and TensorFlow data module

- Exploring GAN, WGAN and StyleGAN

- OpenCV basics in Python (Jupyter notebook)

- Face recognition using OpenFace

- Training and running YOLO on Jupyter notebook (TensorFlow)

- Exploring non-linear activation functions

- Preparing a customized image dataset from online sources

- Handling overfitting in CNN using Keras ImageDataGenerator

- Comparison studies (pros and cons) on various supervised machine learning models

- Model evaluation

- Defining custom loss and custom layers on TensorFlow 2.0 using GradientTape

- TensorFlow Lite for mobile/embedded deployment

- etc.

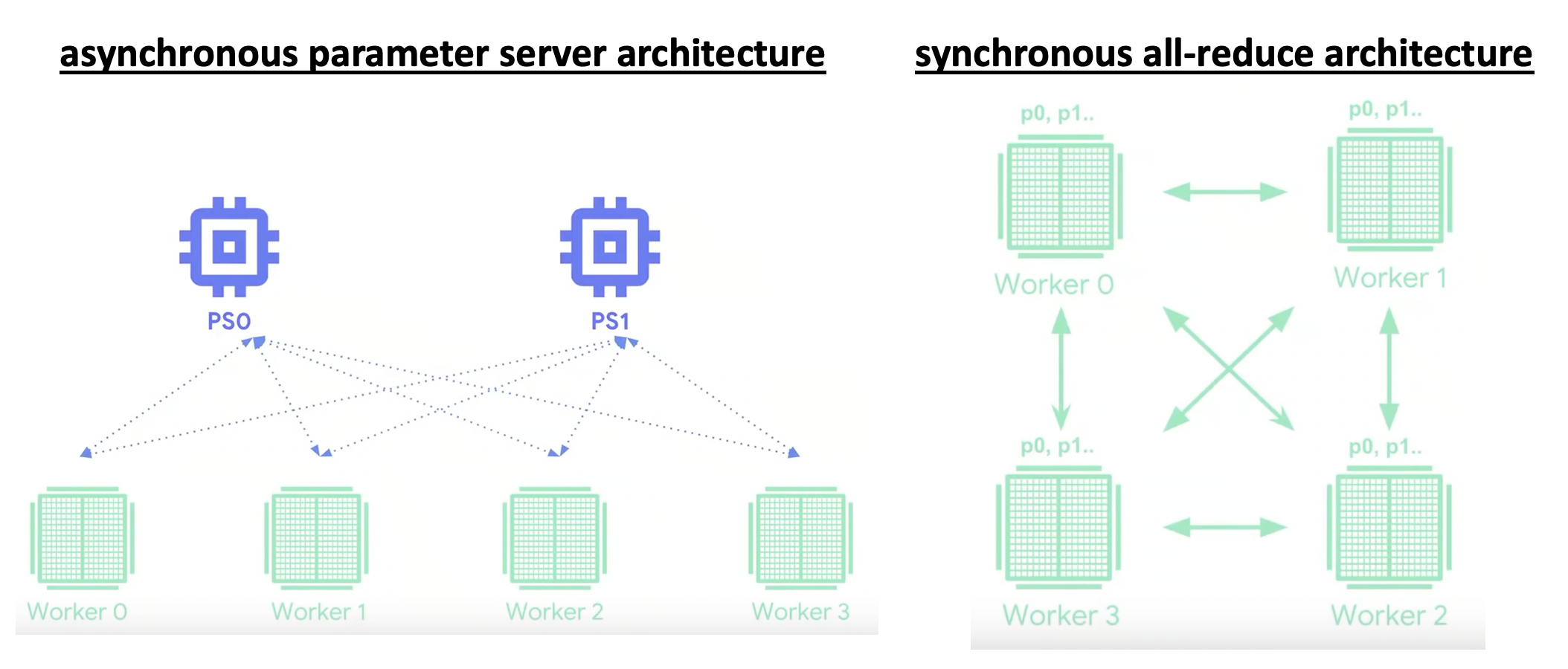

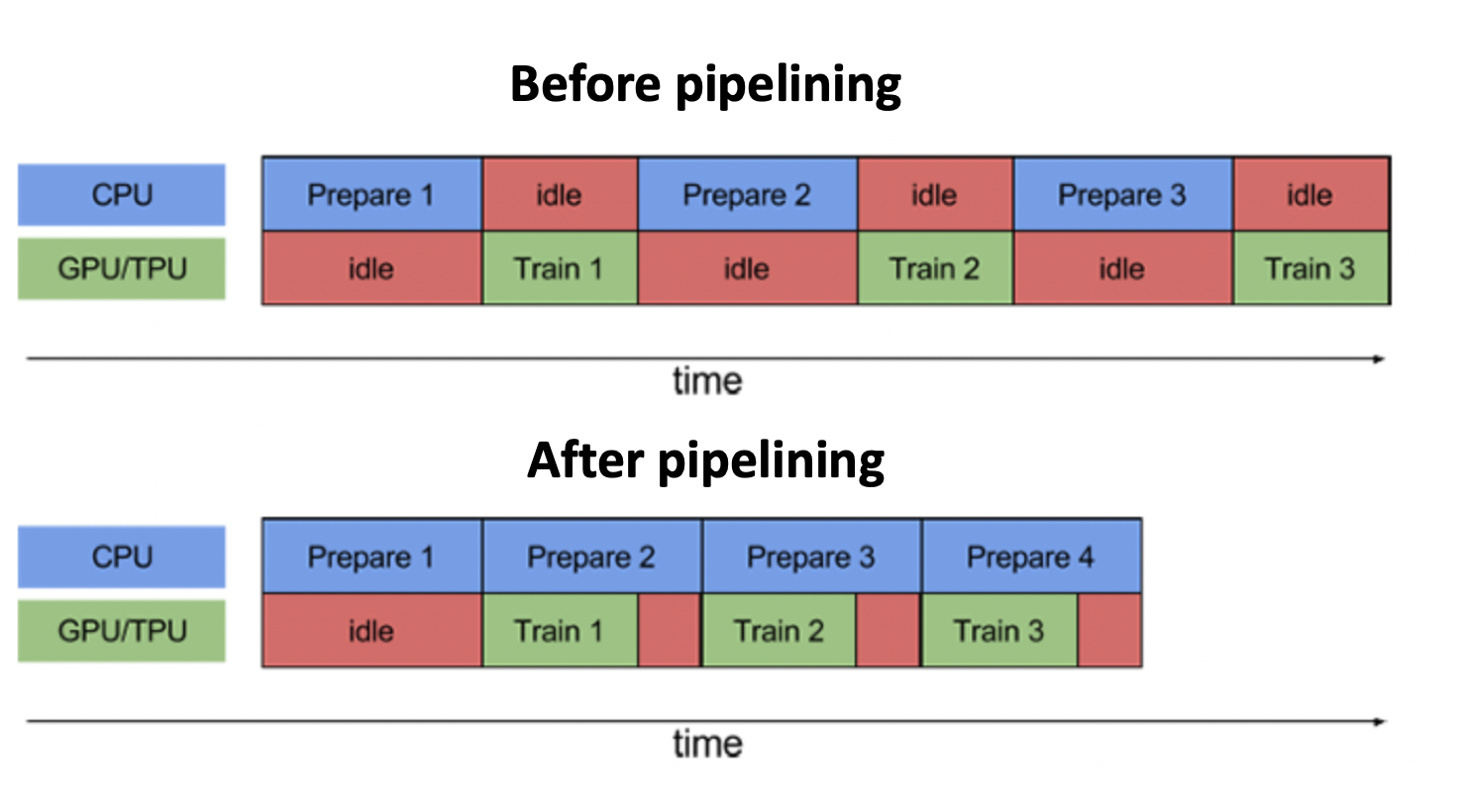

When only a relatively small amount of data is on hand to train a relatively simple model on a single processing unit (CPU, GPU or TPU), training does not take long and a practitioner can go through several rounds of trial and error to optimize the model. As the dataset grows or the model becomes more complex, training becomes computationally expensive and may take hours, days, or even weeks, which makes the overall model development process very inefficient. The solution is distributed training: going parallel with multiple processing units (or even multiple workers). This article introduces the need for and importance of distributed training, and explores the tf.distribute.Strategy and tf.data modules with actual code examples to show how TensorFlow's high-level API, tf.keras, can be adapted for distributed training with minimal changes to its user-friendly model code.
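The idea behind synchronous data-parallel training can be sketched without any framework. The snippet below is a minimal illustration and not the tf.distribute.Strategy API: it assumes a hypothetical one-parameter model `y = w * x` with a mean-squared-error loss, splits a batch into equal shards (one per simulated worker), and averages the per-shard gradients. All function names are made up for this example.

```python
def mse_gradient(w, xs, ys):
    """Gradient of mean((w * x - y)^2) with respect to w."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def shard(seq, num_workers):
    """Split seq into num_workers equal contiguous shards.

    Assumes len(seq) is divisible by num_workers.
    """
    size = len(seq) // num_workers
    return [seq[i * size:(i + 1) * size] for i in range(num_workers)]


def data_parallel_gradient(w, xs, ys, num_workers):
    """Each simulated worker computes a gradient on its shard; results are averaged."""
    x_shards = shard(xs, num_workers)
    y_shards = shard(ys, num_workers)
    grads = [mse_gradient(w, sx, sy) for sx, sy in zip(x_shards, y_shards)]
    return sum(grads) / num_workers


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w = 0.5

single = mse_gradient(w, xs, ys)                              # one device, full batch
parallel = data_parallel_gradient(w, xs, ys, num_workers=2)   # two simulated workers
print(single, parallel)
```

With equal shard sizes the averaged gradient matches the full-batch gradient, which is why a global batch can be split across replicas without changing the update.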

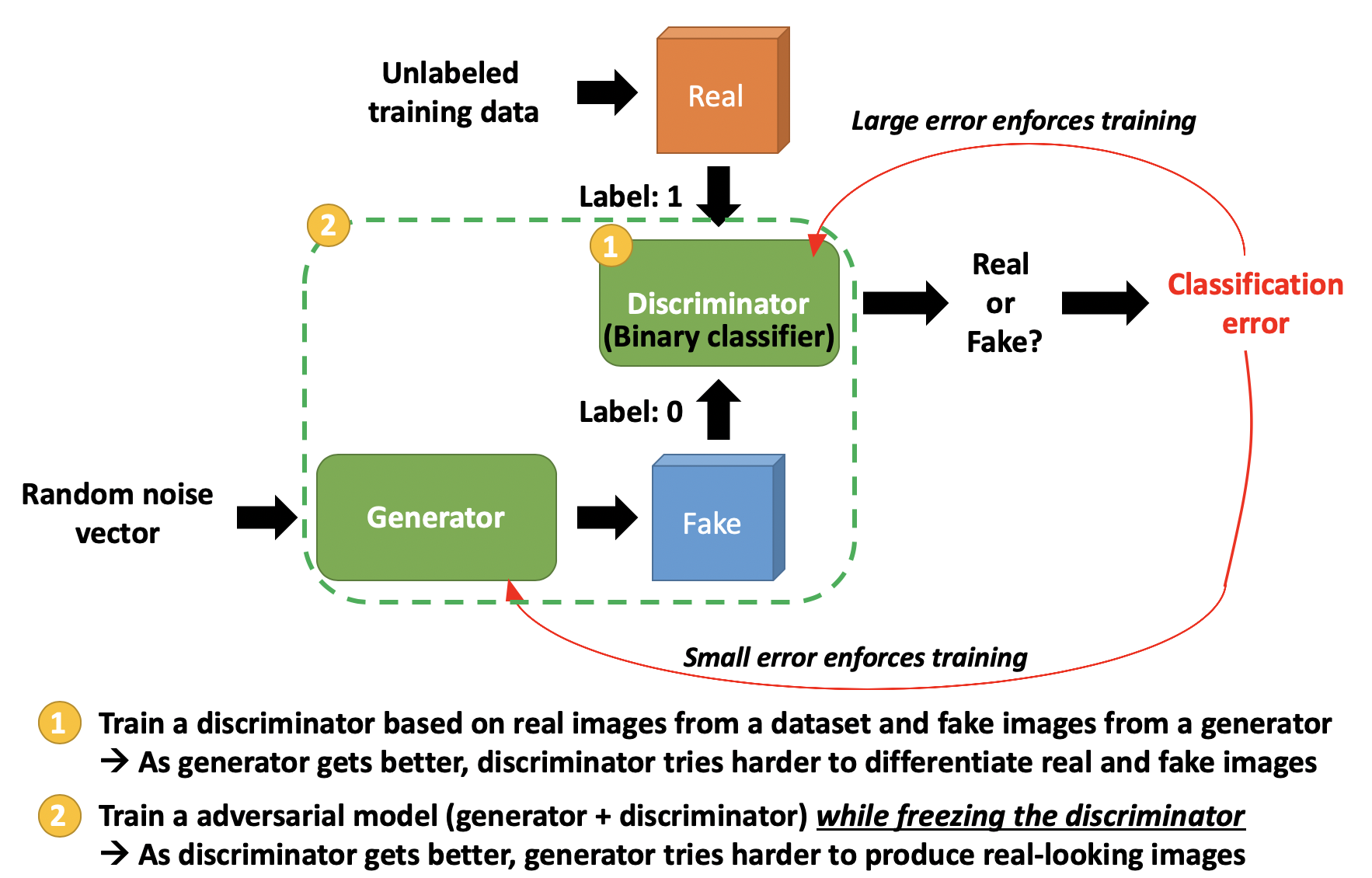

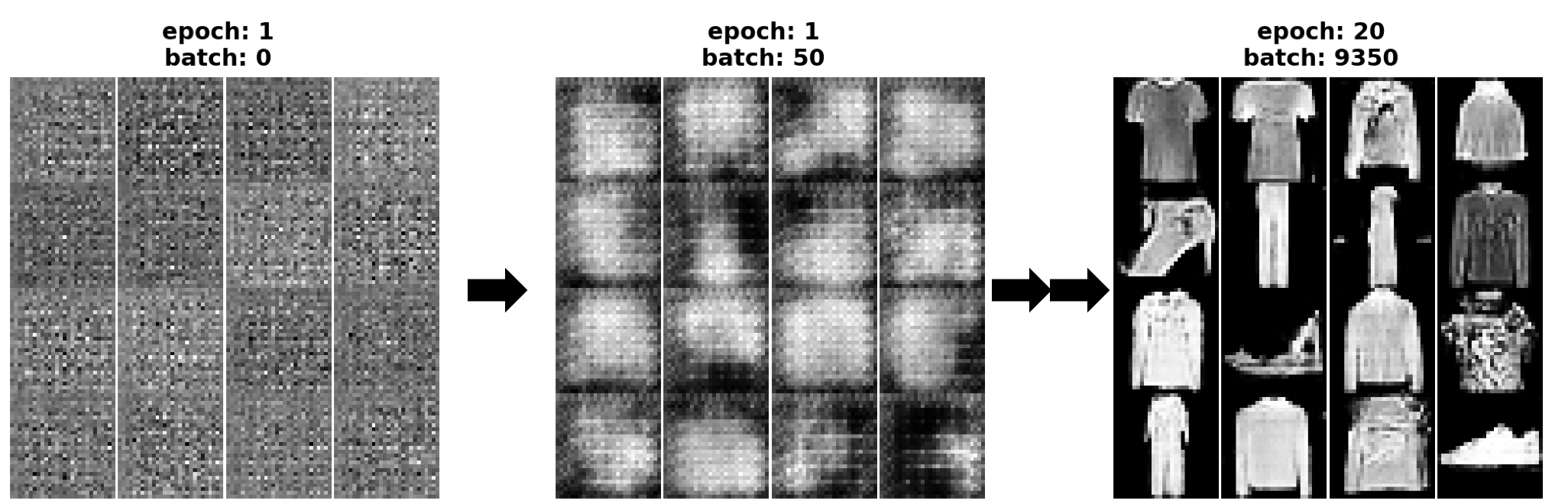

GAN (Generative Adversarial Network) is one of the recent technologies in deep learning that is pushing the limits of deep-learning-based generative models in many different domains. Since its introduction in 2014, a number of interesting variations and applications of GAN have appeared. This article explores the theoretical background of the basic GAN model (in terms of images) and introduces practical code examples that generate Fashion-MNIST-like images from scratch. At the end of the article, WGAN (Wasserstein Generative Adversarial Network) and NVIDIA's StyleGAN are briefly explored as well.
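As a rough illustration of the adversarial objective of the basic GAN, the sketch below computes the discriminator loss and the commonly used non-saturating generator loss from discriminator output probabilities. It is a plain-Python sketch under stated assumptions, not the code from the article: the function names and the clipping constant `EPS` are chosen for this example only.

```python
import math

EPS = 1e-12  # assumed clipping constant to avoid log(0)


def _mean_log(values):
    """Mean of log(v) over values, with clipping for numerical safety."""
    return sum(math.log(max(v, EPS)) for v in values) / len(values)


def discriminator_loss(d_real, d_fake):
    """-( E[log D(x)] + E[log(1 - D(G(z)))] ).

    d_real: discriminator probabilities on real samples.
    d_fake: discriminator probabilities on generated samples.
    """
    return -(_mean_log(d_real) + _mean_log([1.0 - d for d in d_fake]))


def generator_loss(d_fake):
    """Non-saturating generator loss: -E[log D(G(z))]."""
    return -_mean_log(d_fake)


# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
d_loss = discriminator_loss([0.5, 0.5], [0.5, 0.5])
g_loss = generator_loss([0.5, 0.5])
print(d_loss, g_loss)
```

When the discriminator outputs 0.5 for every sample, the discriminator loss equals 2·ln 2 and the generator loss equals ln 2.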

StyleGAN fake human face generation (or Code)

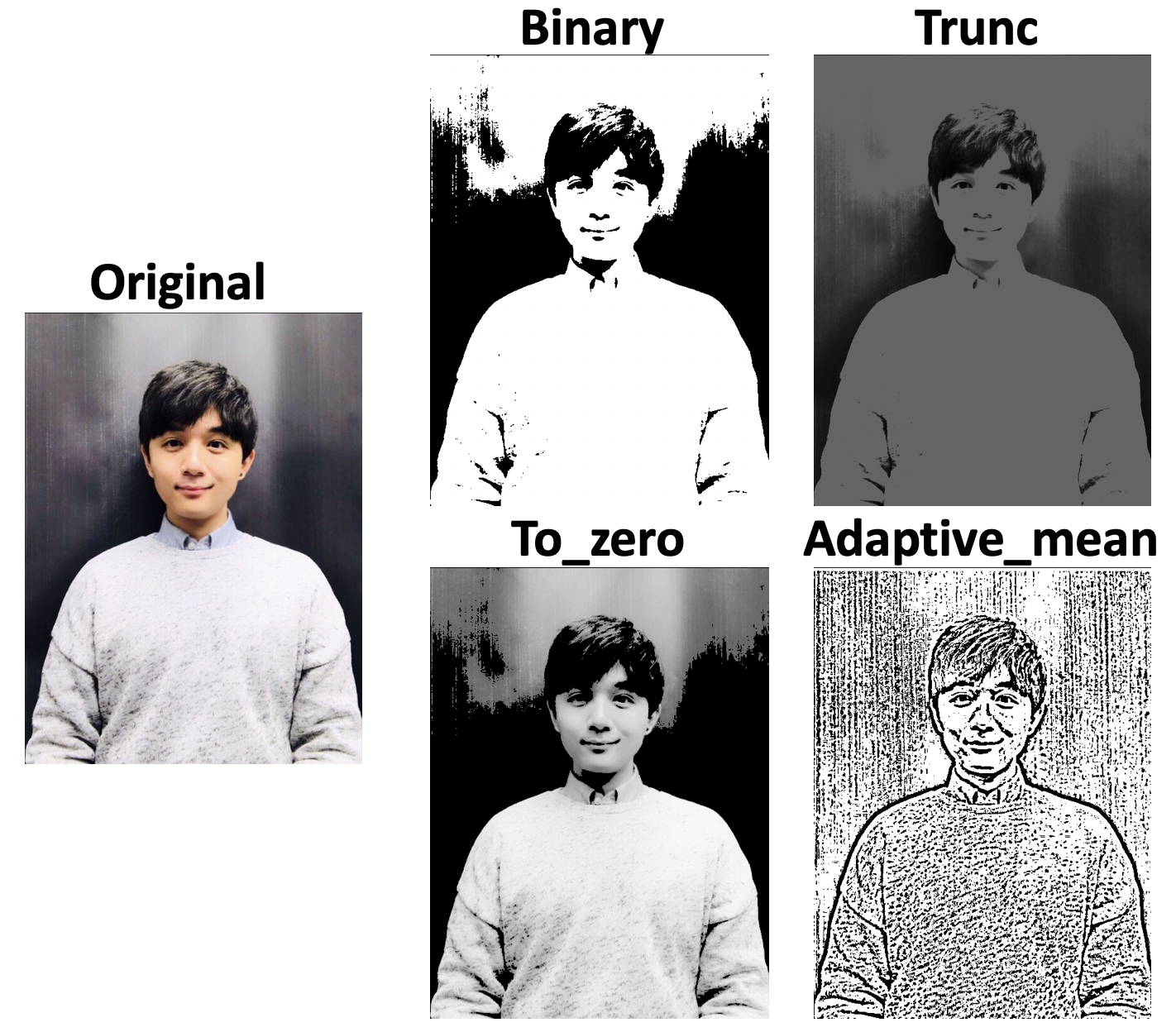

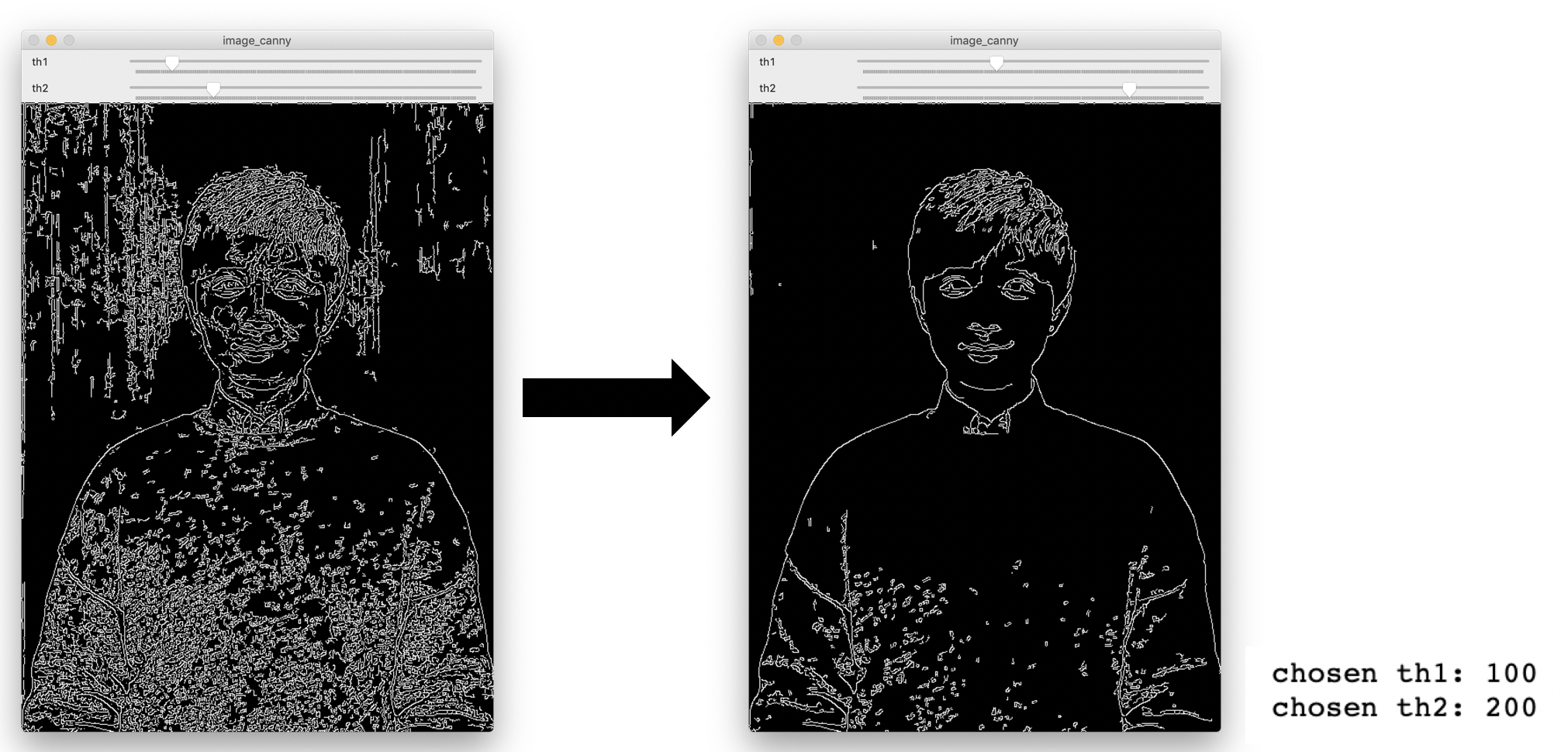

OpenCV is a powerful open-source library that can be used in a variety of deep learning projects involving images and videos (in the field of computer vision). This article explores the fundamental uses of this library in Python, especially in the Jupyter notebook environment. Its purpose is to provide practical examples of some basic OpenCV algorithms applied to images and videos, so that one can build custom projects on top of them more easily.

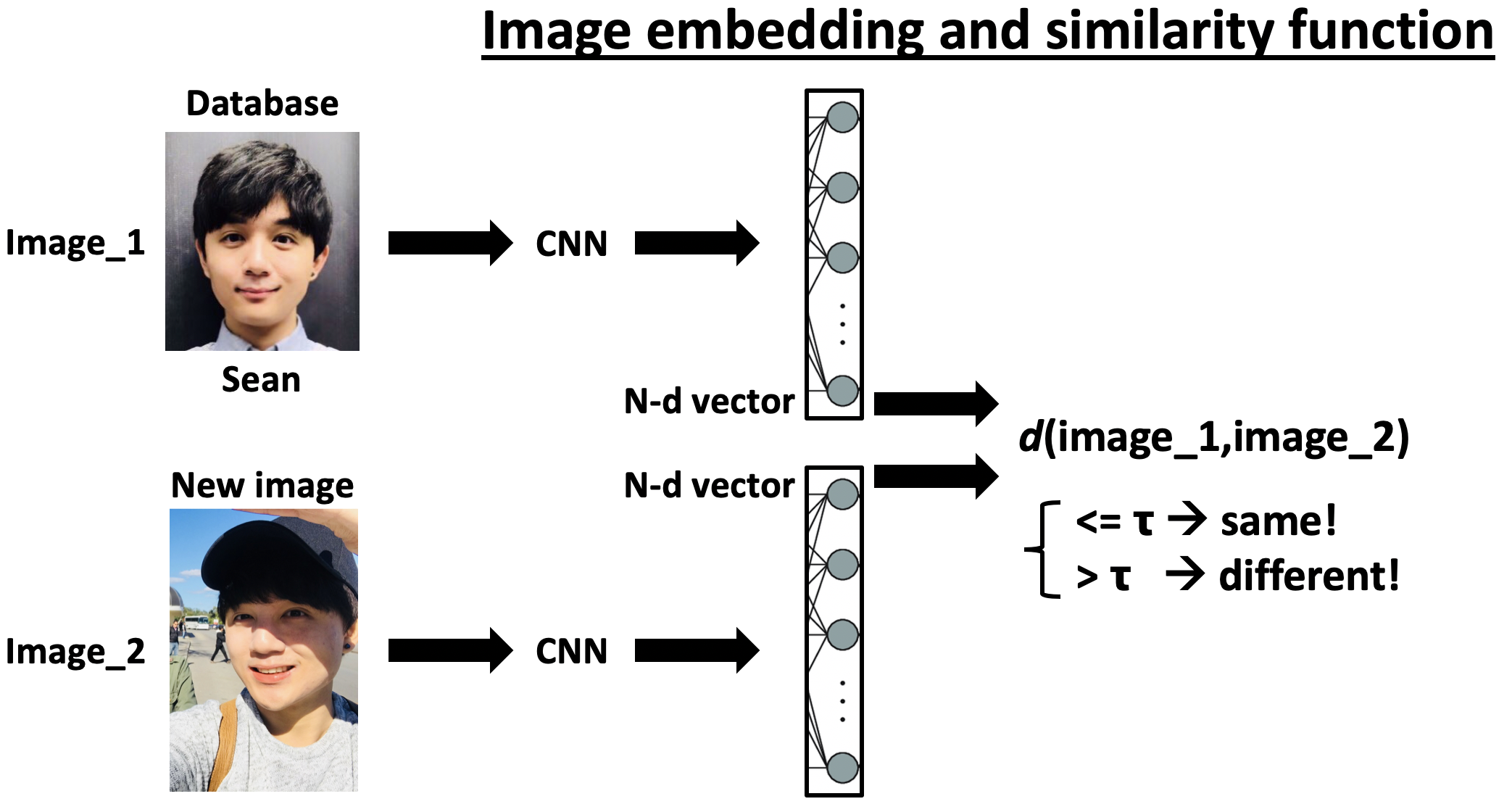

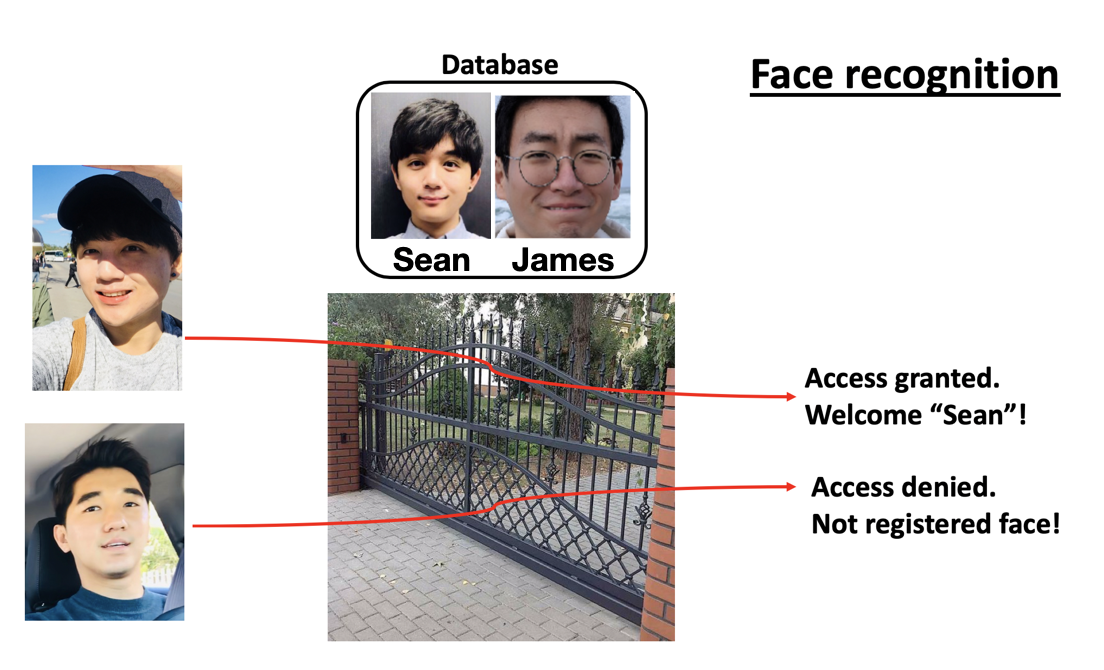

Face recognition is an interesting and very powerful application of a convolutional neural network (CNN). It is a ubiquitous technology that can be used for many different purposes (e.g. to verify identity at an entrance, to track the motion of specific individuals, etc.). This article explores the theoretical background of popular face recognition systems and also introduces a practical way of implementing the FaceNet model in Keras (with TensorFlow backend) on a custom dataset.
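FaceNet-style systems are trained so that embeddings of the same person lie close together and embeddings of different people lie far apart, typically through a triplet loss. The sketch below is a minimal plain-Python version of that loss on toy 2-D vectors; the function names, the example embeddings, and the margin value of 0.2 are assumptions for illustration, not values taken from the article.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two embedding vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """max(||a - p||^2 - ||a - n||^2 + margin, 0).

    anchor/positive: embeddings of the same identity.
    negative: embedding of a different identity.
    """
    d_pos = squared_distance(anchor, positive)
    d_neg = squared_distance(anchor, negative)
    return max(d_pos - d_neg + margin, 0.0)


anchor = [0.0, 0.0]
positive = [0.0, 1.0]

easy = triplet_loss(anchor, positive, negative=[3.0, 4.0])  # negative is far away
hard = triplet_loss(anchor, positive, negative=[1.0, 0.0])  # negative as close as positive
print(easy, hard)
```

An easy triplet contributes zero loss, while a triplet whose negative is as close as the positive is penalized by the margin.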

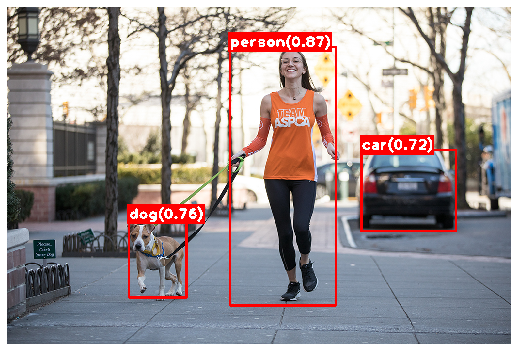

YOLO (You Only Look Once) is one of the most popular state-of-the-art one-stage object detectors out in the field. Unlike other typical two-stage object detectors like R-CNN, YOLO looks at the entire image once (with a single neural network) to localize/classify the objects that it was trained for.

The original YOLO algorithm is implemented in the darknet framework by Joseph Redmon. Because this open-source framework is written in C and CUDA, a separate framework called darkflow was introduced to implement YOLO on TensorFlow. This article describes the practical step-by-step process of configuring, running, and training a custom YOLO model with Jupyter notebook and TensorFlow.
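Object detectors such as YOLO are usually evaluated and post-processed using intersection over union (IoU) between predicted and ground-truth boxes. The snippet below is a small self-contained sketch of IoU; it assumes boxes are given as `(x_min, y_min, x_max, y_max)` tuples, which is a convention chosen for this example and not necessarily the format darkflow returns.

```python
def box_area(box):
    """Area of an axis-aligned box (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    return max(x_max - x_min, 0.0) * max(y_max - y_min, 0.0)


def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    intersection = box_area((ix_min, iy_min, ix_max, iy_max))
    union = box_area(box_a) + box_area(box_b) - intersection
    return intersection / union if union > 0 else 0.0


overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))    # partial overlap
disjoint = iou((0, 0, 1, 1), (2, 2, 3, 3))   # no overlap
same = iou((0, 0, 2, 2), (0, 0, 2, 2))       # identical boxes
print(overlap, disjoint, same)
```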

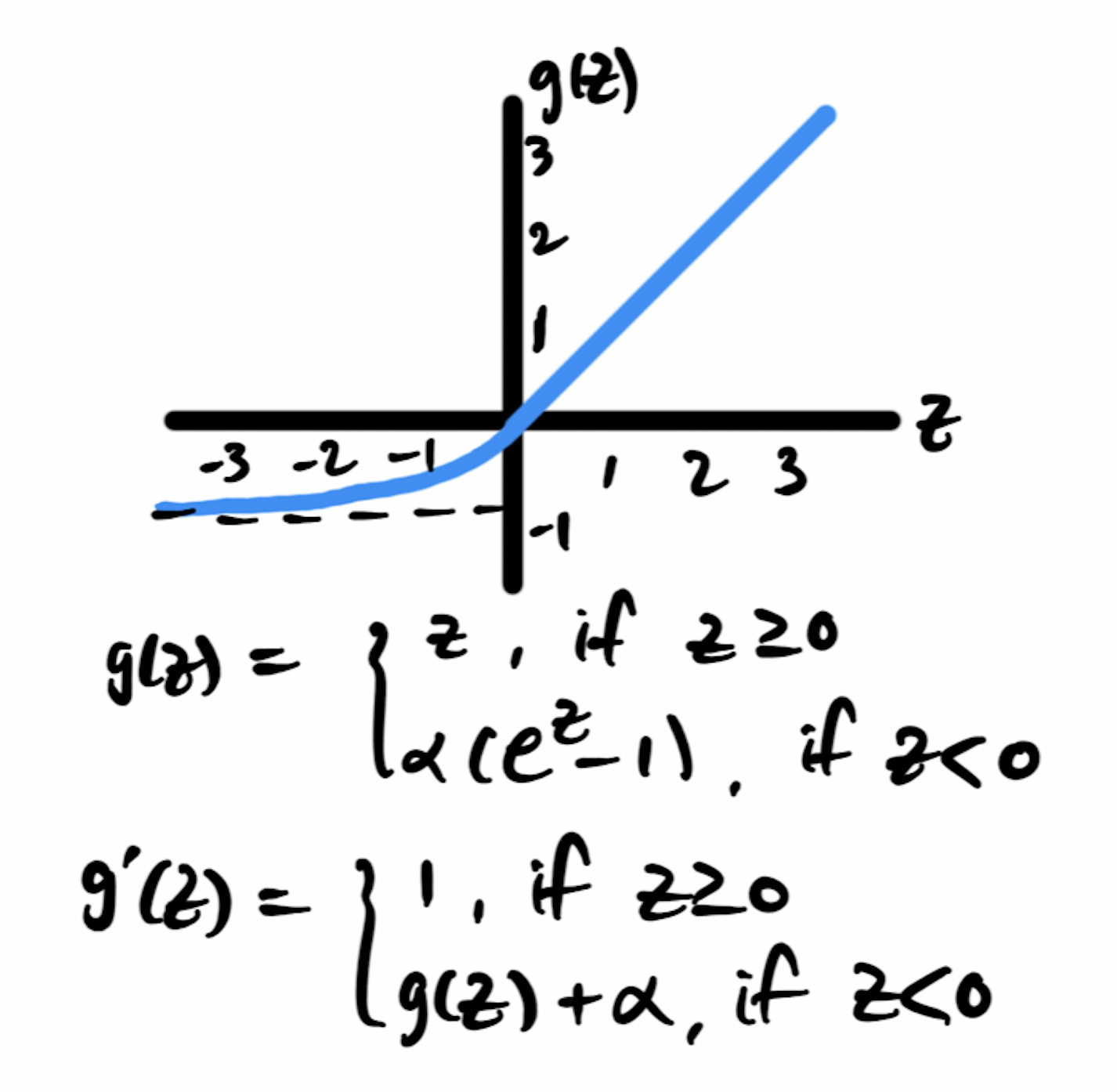

In a neural network, an activation function is an important transformation that enables a model to learn non-linearity. There are different types of activation functions, and their performance depends on many different factors. This article explores a few of today's most popular activation functions in neural networks: sigmoid, tanh, ReLU and ELU. Some pros and cons of each function are described along with their mathematical background. Lastly, experimental results are presented comparing the performance of models with different activation functions when fitting the simple MNIST dataset (on both arbitrarily built NN and CNN architectures).
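The four functions named above have short closed forms. The following is a minimal scalar sketch using only the standard library; the default `alpha=1.0` for ELU is an assumption made for this example.

```python
import math


def sigmoid(x):
    """1 / (1 + exp(-x)), written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh(x):
    """Hyperbolic tangent; output lies in (-1, 1) and is zero-centred."""
    return math.tanh(x)


def relu(x):
    """max(0, x): identity for positive inputs, zero otherwise."""
    return max(0.0, x)


def elu(x, alpha=1.0):
    """x for x > 0, alpha * (exp(x) - 1) otherwise (smooth negative tail)."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


for value in (-2.0, 0.0, 2.0):
    print(value, sigmoid(value), tanh(value), relu(value), elu(value))
```

Unlike ReLU, ELU returns small negative values for negative inputs, so its gradient there is not exactly zero.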

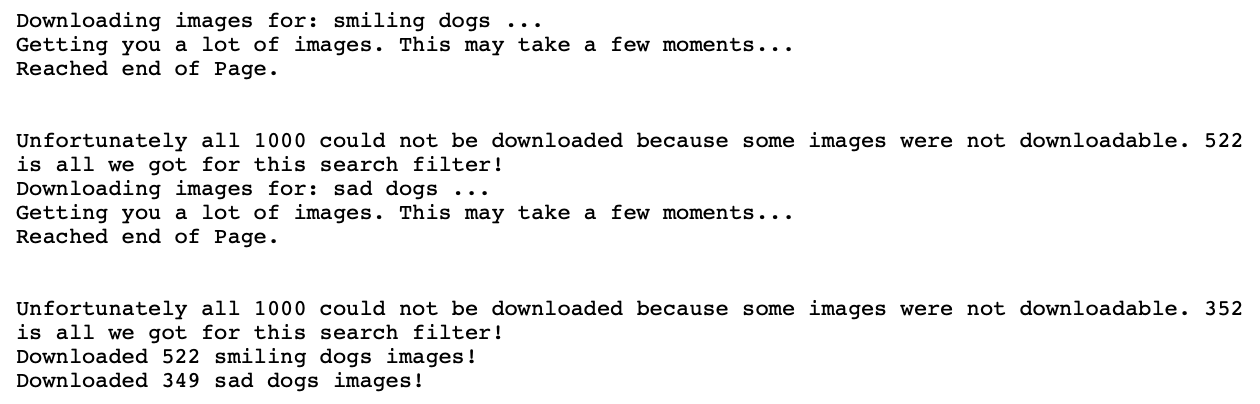

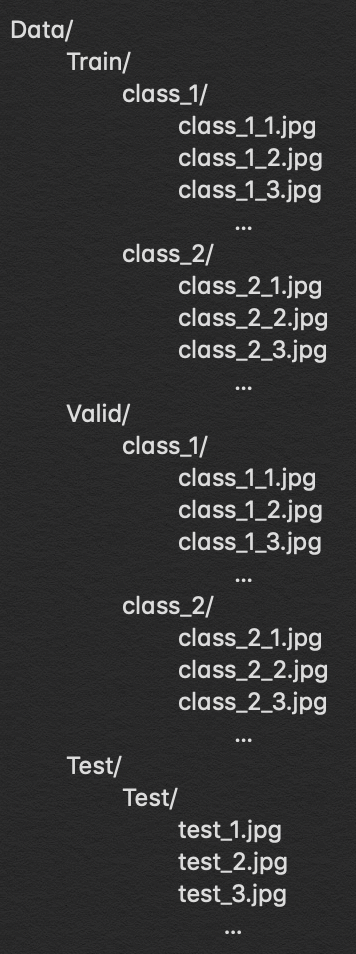

One of the most crucial parts of general CNN/computer vision modelling problems is having an image dataset of good quality and sufficient quantity. Depending on the amount of available training data and its quality (e.g. is it correctly labelled? does it represent each class well? does it contain different variations of each class?), very different models with different performance will be obtained. One of the easiest ways to prepare one's own dataset is to collect images online. This article describes one way of doing so using the google_images_download module. With a list of specific search queries, collecting tens of thousands of images to build a customized training dataset becomes straightforward.

When training a CNN model, one of the typical problems one may encounter is overfitting. It happens for several reasons and limits the performance of the model. Among the many ways to address this issue, this article describes how to implement data augmentation using Keras' ImageDataGenerator. A few different scenarios in which this class can be used are explored with actual code examples.

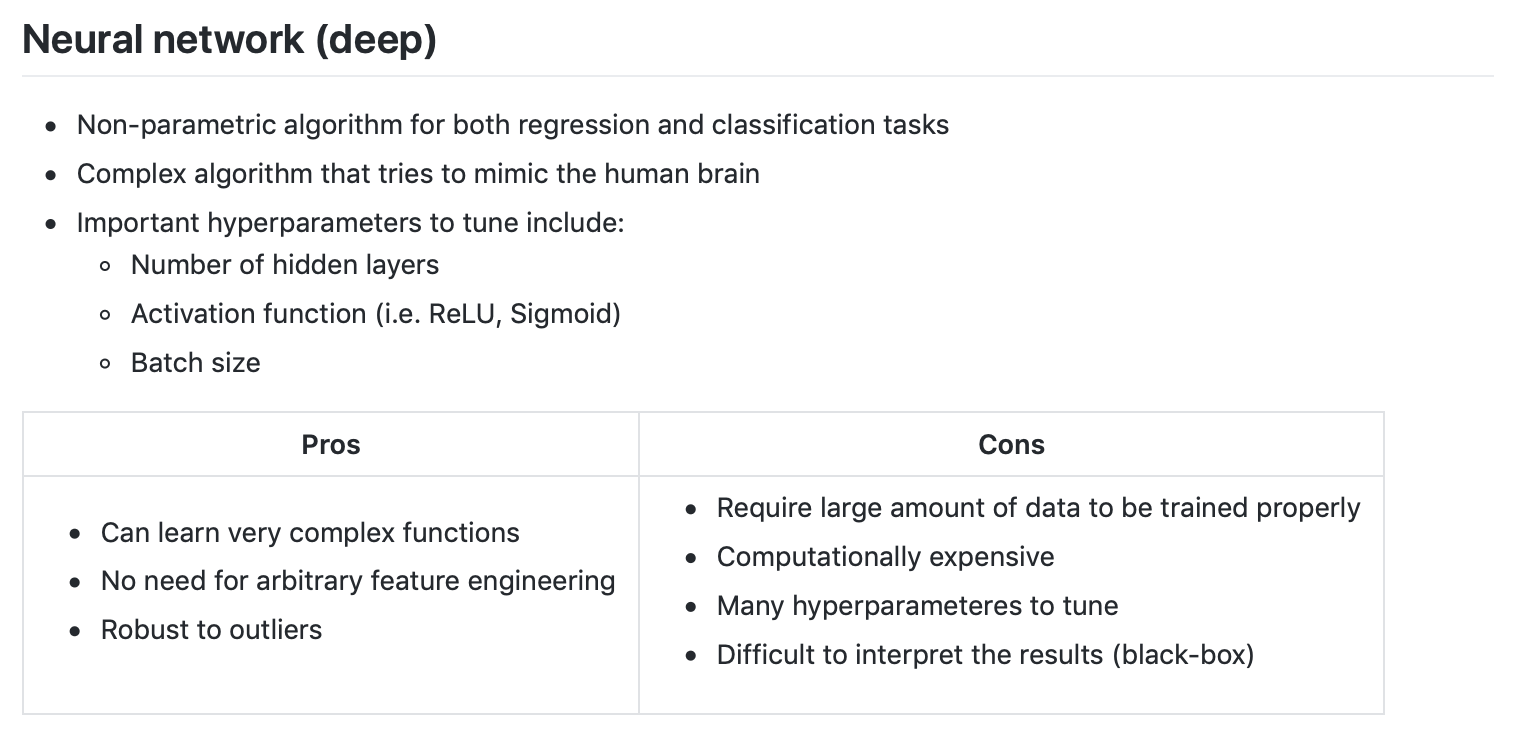

For typical supervised predictive modelling problems, including regression and classification, there are many different algorithms a practitioner can choose from. Depending on the type of problem, one algorithm tends to perform better than the others, but no single algorithm outperforms its counterparts in all situations. This article explores some pros and cons of different supervised machine learning algorithms with the least amount of maths involved. These days, many high-level modules such as scikit-learn and TensorFlow allow a practitioner to build and test different algorithms with only a few lines of code. One may need to test a few before choosing and optimizing a single model to work with.

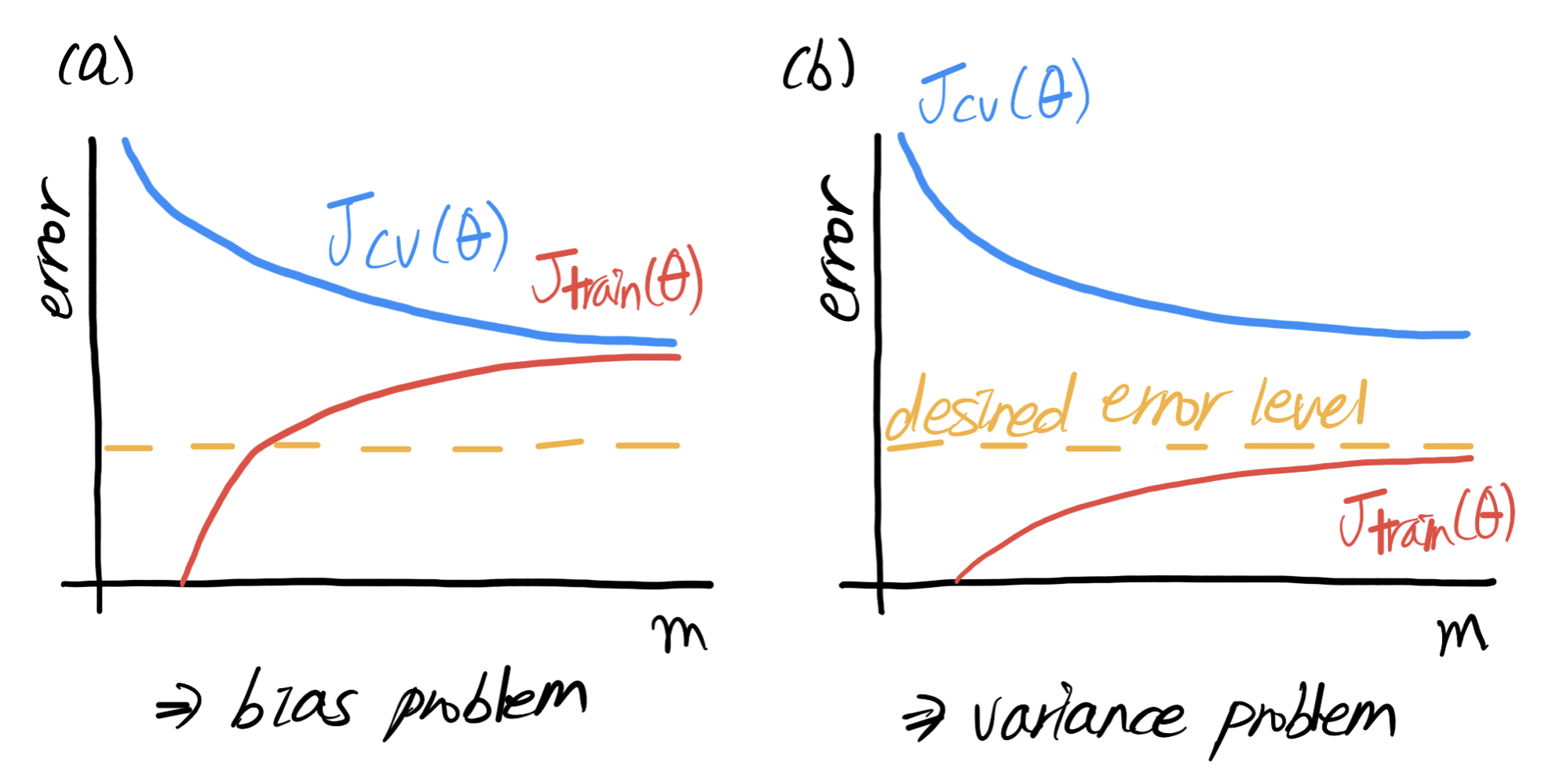

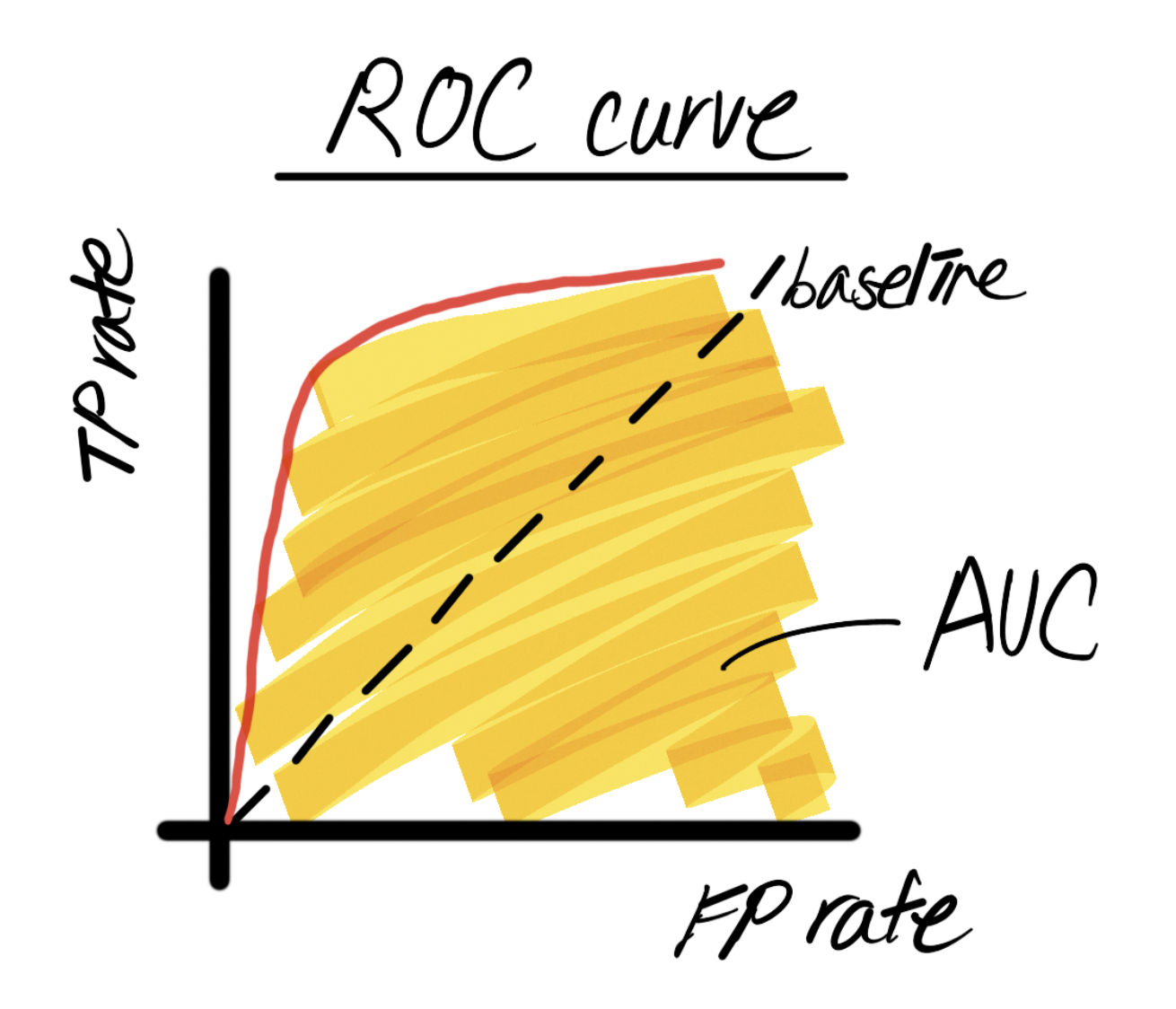

Regardless of the type of predictive modelling problem at hand, a model is optimized over time based on specific metrics. Usually, a single-number metric is preferred for evaluating the current model, but the metric needs to be chosen carefully depending on the problem one is trying to solve. With a properly divided dataset (training, validation and test), the metric can then be used to judge whether the current model is over- or under-fitting. This article describes how typical model evaluation is performed and suggests some methods to optimize the model in different scenarios. Popular metrics for typical supervised learning situations (regression and classification) are also explored.
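As a small illustration of single-number classification metrics, the sketch below computes precision, recall and F1 for binary labels in plain Python. The function name and the toy labels are hypothetical; in practice a library such as scikit-learn provides equivalent functions.

```python
def precision_recall_f1(y_true, y_pred):
    """Precision, recall and F1 for binary labels (1 = positive, 0 = negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    # Return 0.0 when a denominator is zero instead of raising an error.
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    if precision + recall > 0:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

Computing the same metric on the training and validation splits and comparing the two values is one simple way to spot over- or under-fitting.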