This is the project repo for the final project of the Udacity Self-Driving Car Nanodegree: Programming a Real Self-Driving Car. For more information about the project, see the project introduction here.

- Team: GiantCar

| Name | Udacity account | Slack Handle | Time Zone |
|---|---|---|---|
| Kim HyunKoo | studian@gmail.com | @hkkim | UTC+9 (KST) |
| Duong Hai Long | dhlong.hp@gmail.com | @3x3eyes | UTC+8 (Singapore) |
| Lee Wonjun | mymamyma@gmail.com | @mymamyma | UTC-8 (PST) |
| Xing Jin | jin_xing@hotmail.com | @xing2017 | UTC-8 (PST) |
| Zhao Minming | minmingzhao@gmail.com | @minmingzhao | UTC-8 (PST) |

- This is the final capstone project in Udacity’s Self-Driving Car (SDC) Nanodegree.

- In this project, we write code that autonomously drives Carla, Udacity’s self-driving car, an actual car equipped with the necessary sensors and actuators, around a small test track.

- We develop and test the code on a simulated car using a simulator provided by Udacity.

- The project brings together several modules taught in the Nanodegree: Perception, Motion Planning, Control, etc. The code is written using the well-known Robot Operating System (ROS) framework, which works on both the simulator and the actual car.

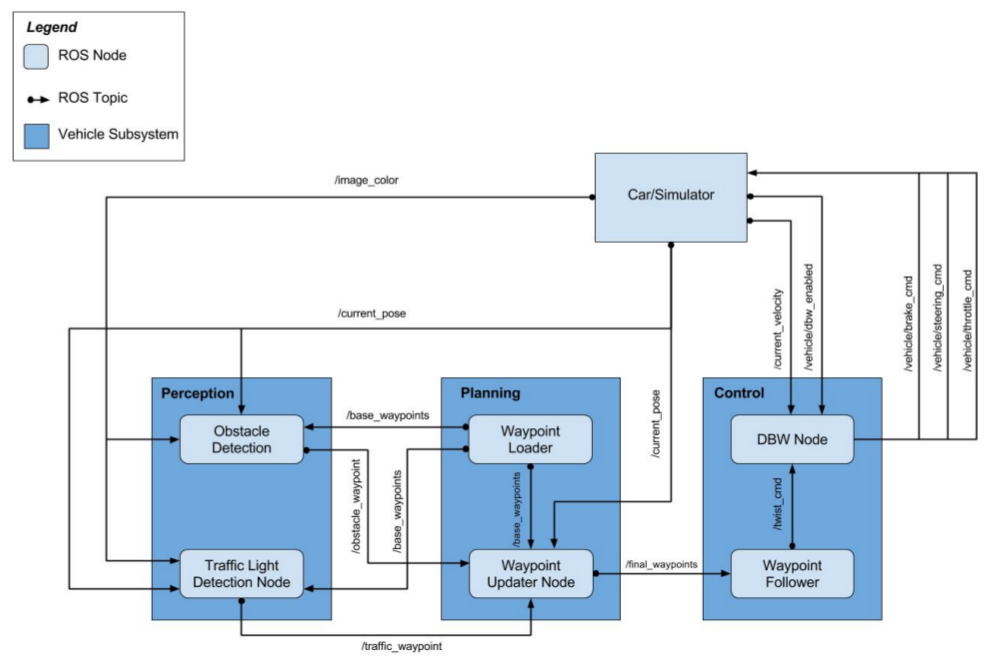

- The architecture of the system is depicted below.

- The system consists of three key modules: Perception, Planning, and Control.

- Each module consists of one or more ROS nodes, which use publish/subscribe (pub-sub) and request/response patterns to communicate critical information with each other while processing information that helps to drive the car.

- At a high level, the Perception module includes functionality that perceives the environment (lane lines, traffic lights and their state, obstacles on the road, etc.) using the attached sensors (cameras, lidars, radars, etc.).

- This information is passed to the Planning module, which ingests the perceived environment and uses it to publish a series of waypoints ahead of the car along with target velocities. The waypoints constitute a projected trajectory for the car.

- The Control module takes the waypoints and executes the target velocities on the car’s controller.

- The car is equipped with drive-by-wire (DBW) functionality that allows the controller to set its throttle (acceleration and deceleration), brake, and steering angle commands.

- When the commands are executed, the car drives itself on the calculated trajectory.

- The entire cycle repeats itself continually so that the car continues driving.
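The perception, planning, and control cycle described above can be illustrated with a toy publish/subscribe sketch. This is not ROS code: the `Bus` class, the `run_cycle` function, and the simplified message payloads (a boolean and a single target velocity) are hypothetical stand-ins for ROS topics and messages. In a real node the same wiring would go through `rospy.Publisher` and `rospy.Subscriber`.

```python
from collections import defaultdict


class Bus:
    """Toy in-process message bus; a stand-in for ROS topics, not ROS itself."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Deliver the message to every callback registered for this topic.
        for callback in self._subscribers[topic]:
            callback(message)


def run_cycle(camera_sees_red, cruise_speed=10.0):
    """Run one perception -> planning -> control cycle and return the command."""
    bus = Bus()
    commands = []

    # Control: turn the planned target velocity into a (hypothetical) command.
    def control(target_velocity):
        commands.append("brake" if target_velocity == 0.0 else "throttle")

    # Planning: choose a target velocity from the perceived light state.
    def plan(light_is_red):
        bus.publish("/final_waypoints", 0.0 if light_is_red else cruise_speed)

    bus.subscribe("/traffic_waypoint", plan)
    bus.subscribe("/final_waypoints", control)

    # Perception: publish what the camera saw, which triggers the chain above.
    bus.publish("/traffic_waypoint", camera_sees_red)
    return commands[-1]
```

Each stage only knows the topic names it reads and writes, which is what lets the same nodes run against either the simulator or the car.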

- The modules are implemented as a set of ROS Nodes.

- Some nodes are implemented by Udacity, while other nodes are implemented by myself.

- As Figure 1 above shows, there are six ROS nodes in three different modules.

- Of these, I implement three ROS nodes: Traffic Light Detector, Waypoint Updater, and Drive-by-Wire (DBW).

- Of the other three nodes, two are implemented by Udacity: Waypoint Loader and Waypoint Follower.

- The last one (Obstacle Detector) is implemented as part of Traffic Light Detector.

- In this project, the Perception module has two ROS nodes: Obstacle Detector and Traffic Light Detector.

- The Obstacle Detector is not required by the project rubric.

- The discussion below is for the Traffic Light Detector node.

- subscribed topics:
  - `/base_waypoints`: provides a complete list of waypoints on the course
  - `/current_pose`: provides the car’s current position on the course
  - `/image_color`: provides an image stream from the car’s camera

- published topics:
  - `/traffic_waypoint`

- helper topics:
  - `/vehicle/traffic_lights`: the simulator publishes the location and current color state of all traffic lights in the simulator. It can be used for training data collection or as a stub for testing other components.

- node files:
  - `tl_detector/tl_detector.py`: node file
  - `tl_detector/light_classification/tl_classifier.py`: classifier file
  - `tl_detector/light_classification/optimized_graph.pb`: TensorFlow model and weight file
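As a rough illustration of how a traffic light detector can turn a pose, a waypoint list, and a classified light color into a value for `/traffic_waypoint`, here is a minimal pure-Python sketch. It is not the code in `tl_detector.py`. The function and class names, the string state labels, the three-frame threshold, and the convention of returning `-1` when no red light is confirmed are all assumptions made for illustration.

```python
import math


def closest_waypoint_index(waypoints, x, y):
    """Return the index of the (x, y) waypoint nearest to the given point."""
    return min(
        range(len(waypoints)),
        key=lambda i: math.hypot(waypoints[i][0] - x, waypoints[i][1] - y),
    )


class LightStateFilter:
    """Accept a new light state only after `threshold` consecutive sightings.

    This damps single-frame misclassifications (assumed behavior, not taken
    from the project source).
    """

    def __init__(self, threshold=3):
        self.threshold = threshold
        self.candidate = None  # state currently being counted
        self.count = 0         # consecutive frames showing `candidate`
        self.stable = None     # last confirmed state

    def update(self, state):
        if state == self.candidate:
            self.count += 1
        else:
            self.candidate = state
            self.count = 1
        if self.count >= self.threshold:
            self.stable = state
        return self.stable


def traffic_waypoint(waypoints, stop_line_xy, stable_state):
    """Index to publish: the stop-line waypoint for a red light, else -1."""
    if stable_state != "red":
        return -1
    return closest_waypoint_index(waypoints, stop_line_xy[0], stop_line_xy[1])
```

The linear scan is fine for a sketch; with thousands of waypoints a spatial index such as a k-d tree would be the usual replacement.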

- Obstacle Detector node: not implemented.

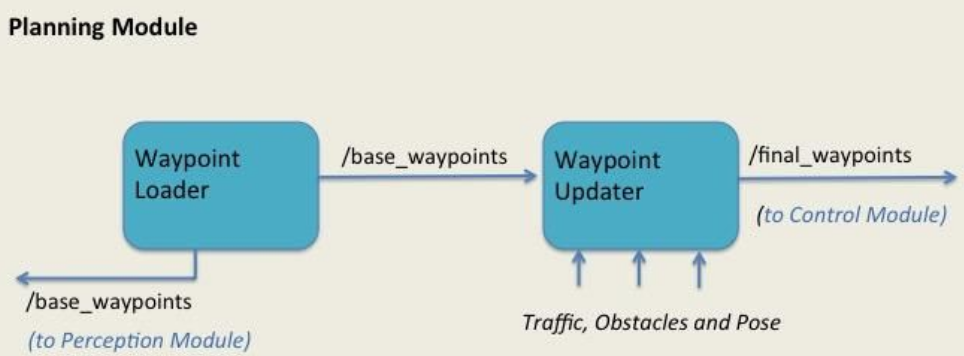

- This project implements the Waypoint Updater node.

- The purpose of the Waypoint Updater node is to update the target velocities of the final waypoints based on traffic lights, the state of the lights, and other obstacles.

- subscribed topics:
  - `/base_waypoints`: contains all the waypoints in front of, and behind, the vehicle
  - `/obstacle_waypoints`: the waypoints that are obstacles (not used). This information comes from the Perception module
  - `/traffic_waypoint`: the waypoint of the red traffic light ahead of the car. This information comes from the Perception module
  - `/current_pose`: the current position of the vehicle, as obtained by the actual car or the simulator

- published topics:
  - `/final_waypoints`: a list of waypoints ahead of the car (in order from closest to farthest) and the target velocities for those waypoints

- node files:
  - `waypoint_updater/waypoint_updater.py`: node file
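One common way to lower the target velocities ahead of a red light is to cap each waypoint's speed at the value from which the car can still stop at the stop line under constant deceleration, using v = sqrt(2·a·d). The sketch below shows that idea only; it is not taken from `waypoint_updater.py`. The function name, the `max_decel` default, and the use of cumulative path distances are assumptions.

```python
import math


def decelerate(velocities, positions, stop_index, max_decel=1.0):
    """Cap target velocities so the car can stop at waypoint `stop_index`.

    velocities: target speed at each waypoint (m/s)
    positions:  cumulative distance of each waypoint along the path (m)
    max_decel:  assumed comfortable deceleration (m/s^2), hypothetical value
    """
    result = []
    for i, v in enumerate(velocities):
        if i >= stop_index:
            # At or beyond the stop line: the car should be stationary.
            result.append(0.0)
            continue
        dist = positions[stop_index] - positions[i]
        # Highest speed from which a constant-deceleration stop is possible.
        v_stop = math.sqrt(2.0 * max_decel * dist)
        # Never raise a waypoint's speed above its original target.
        result.append(min(v, v_stop))
    return result
```

For example, with waypoints at 0, 8, 16 and 18 m and the stop line at the last one, a 10 m/s target is reduced to 6 m/s at the first waypoint and 2 m/s at the third.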

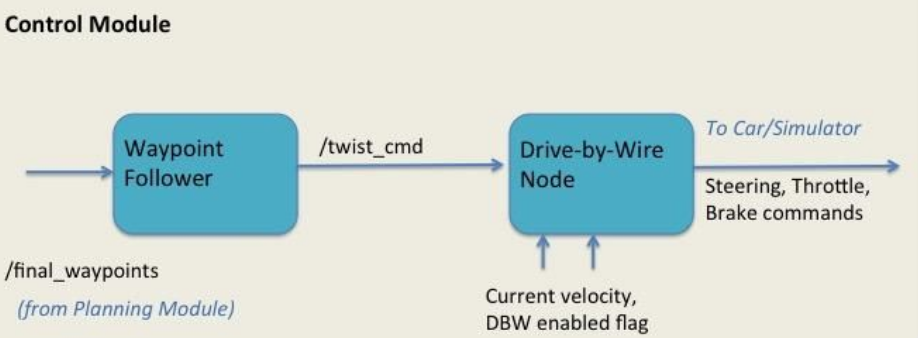

- Our project implements the Drive-by-Wire (DBW) node.

- The DBW node commands the car or the simulator by providing it with appropriate commands to control the throttle (acceleration/deceleration), brake, and steering angle.

- The DBW node instantiates two controllers (a longitudinal controller and a lateral controller).

- Longitudinal controller
  - takes as input the target speed (as given in `/twist_cmd`), the current speed, and the time delta.
  - controls throttle and brake using a PID control algorithm.

- Lateral controller
  - takes a target yaw rate, the current speed, the current position, and the following waypoints.
  - calculates the required steering angle, while attempting to stay within the required limits for minimum and maximum steering angles.
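The longitudinal controller described above can be sketched as a textbook PID loop on the speed error, with positive output mapped to throttle and negative output mapped to brake. This is a minimal sketch under assumptions, not the project's controller: the class and function names, the gains, the normalized output range of -1 to 1, and the simple anti-windup rule are all illustrative. On the real car the brake command may be expressed in different units.

```python
class PID:
    """Basic PID controller with output clamping (illustrative sketch)."""

    def __init__(self, kp, ki, kd, out_min=-1.0, out_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.last_error = 0.0

    def reset(self):
        """Clear accumulated state, e.g. when a human driver takes over."""
        self.integral = 0.0
        self.last_error = 0.0

    def step(self, error, dt):
        """Return the clamped control output for this error; dt must be > 0."""
        integral = self.integral + error * dt
        derivative = (error - self.last_error) / dt
        output = self.kp * error + self.ki * integral + self.kd * derivative
        clamped = max(self.out_min, min(self.out_max, output))
        # Anti-windup: keep the new integral only while the output is unsaturated.
        if clamped == output:
            self.integral = integral
        self.last_error = error
        return clamped


def longitudinal_command(pid, target_speed, current_speed, dt):
    """Return (throttle, brake), both non-negative and never both active."""
    u = pid.step(target_speed - current_speed, dt)
    if u >= 0.0:
        return u, 0.0
    return 0.0, -u
```

Calling `reset()` whenever `/vehicle/dbw_enabled` reports driver control is one way to stop the integral term from accumulating while the controller's output is being ignored.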

- subscribed topics:
  - `/twist_cmd`: contains commands for proposed linear and angular velocities
  - `/vehicle/dbw_enabled`: a flag that shows whether the car is under DBW control or driver control
  - `/current_pose`: contains the current position of the car
  - `/current_velocity`: contains the current velocity of the car
  - `/final_waypoints`: contains the list of final waypoints

- published topics:
  - `/vehicle/throttle_cmd`: the throttle value
  - `/vehicle/steer_cmd`: the steering angle value
  - `/vehicle/brake_cmd`: the brake value

- node files:
  - `twist_controller/dbw_node.py`
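For the steering value published on `/vehicle/steer_cmd`, a simple option is a kinematic bicycle model: the linear and angular velocities proposed in `/twist_cmd` imply a path curvature, which maps to a steering angle that is then clamped to the allowed range. The sketch below is a simplification that ignores the current pose and the final waypoints, so it is not the lateral controller described earlier. The function name and the default values for `wheel_base`, `steer_ratio`, and `max_steer` are placeholder assumptions.

```python
import math


def steering_angle(target_linear, target_angular,
                   wheel_base=2.85, steer_ratio=14.8, max_steer=8.0,
                   min_speed=0.1):
    """Steering wheel angle (rad) for a proposed linear/angular velocity.

    wheel_base (m), steer_ratio, and max_steer (rad) are placeholder
    vehicle parameters, not values confirmed by the project.
    """
    # Curvature is undefined near standstill; command a neutral wheel.
    if abs(target_linear) < min_speed:
        return 0.0
    curvature = target_angular / target_linear  # 1 / turning radius
    # Bicycle model: road-wheel angle = atan(wheel_base * curvature),
    # scaled by the steering ratio to get the steering wheel angle.
    angle = math.atan(wheel_base * curvature) * steer_ratio
    # Respect the minimum and maximum steering limits.
    return max(-max_steer, min(max_steer, angle))
```

Because the clamp is symmetric, a left turn and a right turn of the same curvature yield angles of equal magnitude and opposite sign.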

- This project runs on Ubuntu 16.04.

- Follow these instructions to install ROS
  - ROS Kinetic if you have Ubuntu 16.04.

- Dataspeed DBW
  - Use this option to install the SDK on a workstation that already has ROS installed: One Line SDK Install (binary)

- Download the Udacity Simulator.

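- Clone the repository, install the Python dependencies (replacing `tensorflow` with `tensorflow-gpu` 1.0.0), then build and launch the ROS nodes:

      git clone https://github.com/udacity/CarND-Capstone.git
      cd CarND-Capstone
      pip install -r requirements.txt
      pip uninstall tensorflow
      pip install tensorflow-gpu==1.0.0
      cd ros
      catkin_make
      source devel/setup.sh
      roslaunch launch/styx.launch

- Run the simulator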

- Download the training bag that was recorded on the Udacity self-driving car (a bag demonstrating the correct predictions in autonomous mode can be found here)

- Unzip the file

      unzip traffic_light_bag_files.zip

- Play the bag file

      rosbag play -l traffic_light_bag_files/loop_with_traffic_light.bag

- Launch your project in site mode

      cd CarND-Capstone/ros
      roslaunch launch/site.launch

- Confirm that traffic light detection works on real-life images