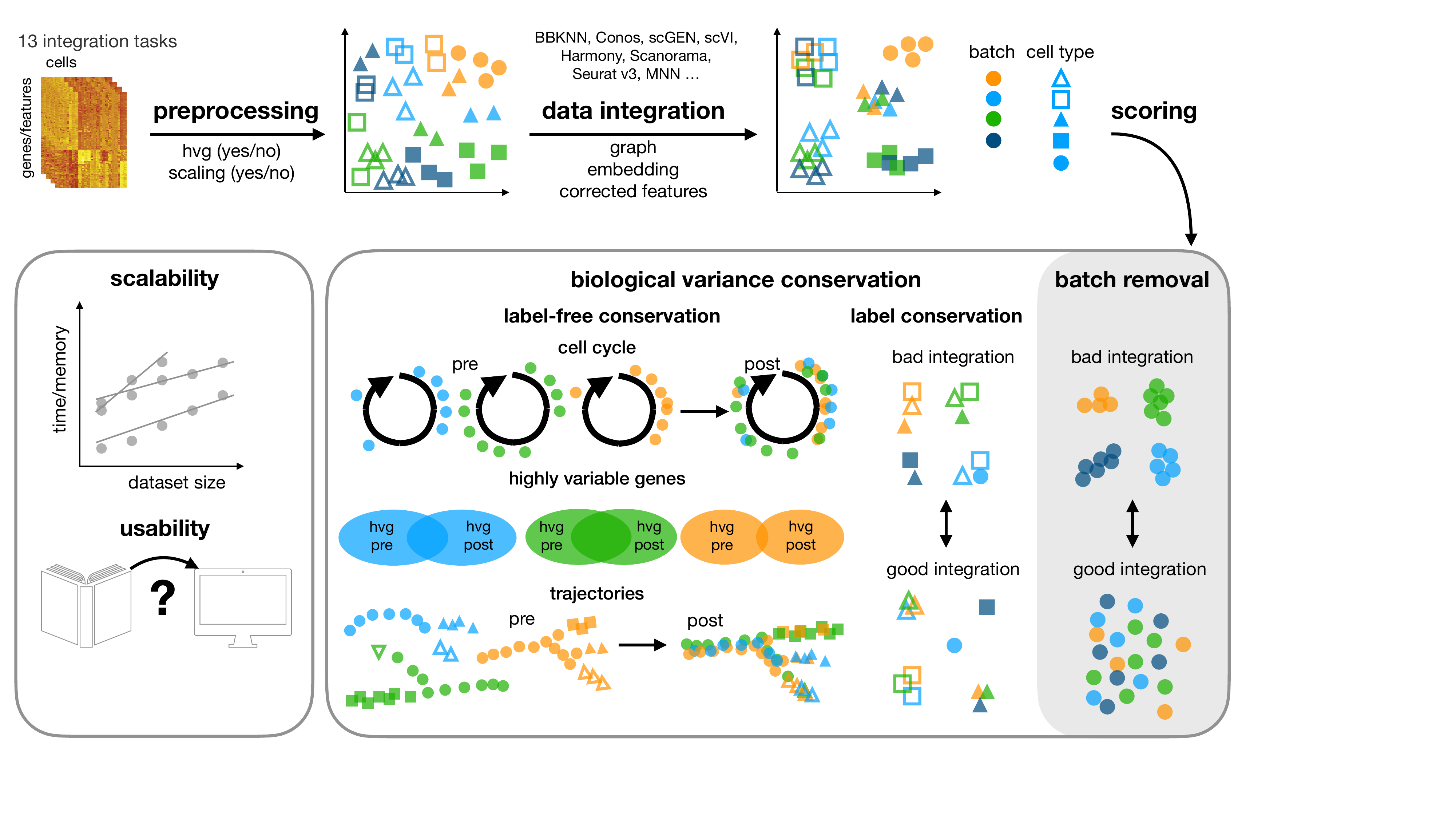

This repository contains the snakemake pipeline for our benchmarking study of data integration tools.
In this study, we benchmark 16 methods (see here) with 4 combinations of preprocessing steps, leading to 68 method combinations on 85 batches of gene expression and chromatin accessibility data.

The pipeline uses the scib package and allows for reproducible and automated analysis of the different steps and combinations of preprocessing and integration methods.

- On our website we visualise the results of the study.
- The scib package that is used in this pipeline can be found here.
- For reproducibility and visualisation we have a dedicated repository: scib-reproducibility.
- The data used in the study is available on figshare.

Luecken, M.D., Büttner, M., Chaichoompu, K. et al. Benchmarking atlas-level data integration in single-cell genomics. Nat Methods 19, 41–50 (2022). https://doi.org/10.1038/s41592-021-01336-8

To reproduce the results from this study, two separate conda environments are needed for Python and R operations.
Please make sure you have either mambaforge or conda installed on your system to be able to use the pipeline.
We recommend using mamba, which is also available for conda, for faster package installations with a smaller memory footprint.

We provide Python and R environment YAML files in `envs/`, together with an installation script for setting up the correct environments in a single command, based on the R version you want to use.
The pipeline currently supports R 3.6 and R 4.0, and we generally recommend using R 4.0.
Call the script as follows, e.g. for R 4.0:

    bash envs/create_conda_environments.sh -r 4.0

Check the script's help output to get the full list of arguments it uses.

    bash envs/create_conda_environments.sh -h

Once installation is successful, you will have the Python environment `scib-pipeline-R<version>` and the R environment `scib-R<version>` that you must specify in the config file.

| R version | Python environment name | R environment name | Test data config YAML file |
|---|---|---|---|
| 4.0 | scib-pipeline-R4.0 | scib-R4.0 | configs/test_data-R4.0.yml |
| 3.6 | scib-pipeline-R3.6 | scib-R3.6 | configs/test_data-R3.6.yml |

Note: The installation script only works for the environments listed in the table above. The environments used in our study are included for reproducibility purposes and are described in `envs/`.

For a more detailed description of the environment files and how to install the different environments manually, please refer to the README in `envs/`.
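As a quick sanity check after installation, the environment names described above could be verified programmatically. The following is a minimal sketch and not part of the pipeline: the function names are hypothetical, and it assumes that `conda env list --json` reports environment prefixes under an `envs` key and that each environment's directory name equals its environment name.

```python
import json
import os
import subprocess


def expected_env_names(r_version: str) -> list[str]:
    """Names the installation script is described as creating for an R version."""
    return [f"scib-pipeline-R{r_version}", f"scib-R{r_version}"]


def missing_envs(r_version: str, env_paths: list[str]) -> list[str]:
    """Return the expected environment names that are absent from env_paths."""
    # Assumption: the last path component of each prefix is the environment name.
    present = {os.path.basename(p.rstrip("/\\")) for p in env_paths}
    return [name for name in expected_env_names(r_version) if name not in present]


def list_conda_env_paths() -> list[str]:
    """Ask conda for all environment prefixes (requires conda on PATH)."""
    result = subprocess.run(
        ["conda", "env", "list", "--json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)["envs"]


# Example call on a machine with conda installed:
#     print(missing_envs("4.0", list_conda_env_paths()))
```

An empty list means both environments were found. Keeping `missing_envs` free of any conda call makes it easy to test with a plain list of paths.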

This repository contains a snakemake pipeline to run integration methods and metrics reproducibly for different data scenarios and preprocessing setups.

A script in `data/` can be used to generate test data.
This is useful for verifying that the installation was successful before moving on to a larger dataset.
The pipeline expects an anndata object with normalised and log-transformed counts in `adata.X` and counts in `adata.layers['counts']`.
More information on how to use the data generation script can be found in `data/README.md`.

The parameters and input files are specified in config files.
A description of the config formats and example files can be found in `configs/`.
You can use the example config that uses the test data to get the pipeline running quickly, and then modify a copy of it to work with your own data.

To call the pipeline on the test data, e.g. using R 4.0:

    snakemake --configfile configs/test_data-R4.0.yaml -n

The `-n` flag performs a dry run, which gives you an overview of the jobs that will be run without executing them. To execute these jobs with up to 10 cores, call

    snakemake --configfile configs/test_data-R4.0.yaml --cores 10

More snakemake commands can be found in the documentation.

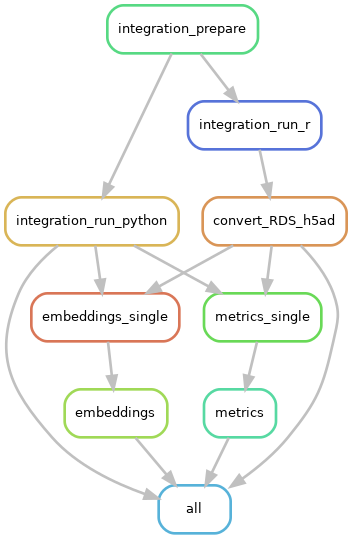

A dependency graph of the workflow can be created at any time and is useful to gain a general understanding of the workflow.
Snakemake can create a graphviz representation of the rules, which can be piped into an image file.

    snakemake --configfile configs/test_data-R3.6.yaml --rulegraph | dot -Tpng -Grankdir=TB > dependency.png

Tools that are compared include: