Extract information from images of herbarium specimen label sheets. This is the automated portion of a full solution that includes humans-in-the-loop.

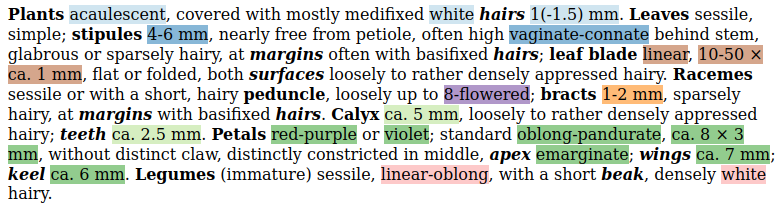

Given images like:

We want to extract as much text information as possible from the labels. We are mostly targeting labels with typewritten information and not handwritten labels or barcodes, although we may extract some text from barcode labels and some clearly written handwritten text.

You will need Git to clone this repository. You will also need Python 3.11+ and pip, a package manager for Python. You can install the requirements into your Python environment like so:

    git clone https://github.com/rafelafrance/digi-leap.git
    cd /path/to/digi-leap
    make install

There are many moving parts to this project, and I have broken it into several smaller repositories. Some of these smaller repositories may have heavy requirements (like PyTorch) which can take a while to install.

Note that this repository doesn't contain any code itself; it is an umbrella repository for the many parts and functions. If you don't want all the functionality of the entire pipeline, you may be better off installing only the parts you actually want from their own repositories.

- Find all the labels on the images.

- OCR the cleaned up label images.

- Use Traiter to extract information from the clean OCR text.

- Use a large language model (LLM) to get traits from OCR text.

- Reconcile trait output from the Traiter and the LLM.

- Other repositories

Note that all scripts are available in this repository.

Repository: https://github.com/rafelafrance/label_finder

You also need to clone the YOLOv7 repository separately: https://github.com/WongKinYiu/yolov7

This repository contains scripts for training the

We find labels with a custom trained YOLOv7 model (https://github.com/WongKinYiu/yolov7).

- Labels that the model classified as typewritten are outlined in orange.

- Other identified labels are outlined in teal.

- All labels are extracted into their own image files.

Scripts:

- fix-herbarium-sheet-names: I had a problem where herbarium sheet file names were given as URLs, which confused the Pillow (PIL) module, so I renamed the files to remove problem characters. You may not need this script.

- yolo-training: If you are training your own YOLOv7 model then you may want to use this script to prepare the images of herbarium sheets for training. The herbarium images may come in all sorts of sizes, and model training requires that they are all uniformly sized.

  - The YOLO scripts also require a CSV file containing the paths to the herbarium sheets and the class and location of the labels on each sheet.

- yolo-inference: Prepare herbarium sheet images for inference, i.e. finding labels. The images must be the same size as the training data.

- yolo-results-to-labels: This takes the output of the YOLO model and creates label images. The label name contains information about the YOLO results. The label name format is:

      <sheet name>_<label class>_<left pixel>_<top pixel>_<right pixel>_<bottom pixel>.jpg

  For example:

      my-herbarium-sheet_typewritten_2261_3580_3397_4611.jpg

- filter_labels.py: Move typewritten label images into a separate directory where they are available for further processing.
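The label name format described above can be built and parsed with a few lines of Python. This is a minimal sketch, not the code used by the scripts: the names `LabelName`, `build_label_name`, and `parse_label_name` are hypothetical, and it assumes the label class contains no underscores (the sheet name may).

```python
import re
from typing import NamedTuple


class LabelName(NamedTuple):
    """The fields encoded in a label image file name."""
    sheet: str
    label_class: str
    left: int
    top: int
    right: int
    bottom: int


# <sheet name>_<label class>_<left>_<top>_<right>_<bottom>.jpg
# The sheet name may itself contain underscores, so the pattern is
# anchored on the class and the four trailing pixel coordinates.
LABEL_NAME = re.compile(
    r"^(?P<sheet>.+)_(?P<label_class>[^_]+)"
    r"_(?P<left>\d+)_(?P<top>\d+)_(?P<right>\d+)_(?P<bottom>\d+)\.jpg$"
)


def build_label_name(label: LabelName) -> str:
    """Format the fields into a label image file name."""
    return (
        f"{label.sheet}_{label.label_class}"
        f"_{label.left}_{label.top}_{label.right}_{label.bottom}.jpg"
    )


def parse_label_name(name: str) -> LabelName:
    """Recover the sheet name, class, and bounding box from a file name."""
    match = LABEL_NAME.match(name)
    if match is None:
        raise ValueError(f"Not a label image name: {name}")
    return LabelName(
        sheet=match["sheet"],
        label_class=match["label_class"],
        left=int(match["left"]),
        top=int(match["top"]),
        right=int(match["right"]),
        bottom=int(match["bottom"]),
    )
```

Parsing the example name above would give the sheet `my-herbarium-sheet`, the class `typewritten`, and the box `(2261, 3580, 3397, 4611)`, which is enough to locate the label on the original sheet image.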

You will notice that there are no scripts for running the YOLO models directly. You will need to download that repository separately and run its scripts. Example invocations of YOLO scripts are below:

    python train.py \
      --weights yolov7.pt \
      --data data/custom.yaml \
      --workers 4 \
      --batch-size 4 \
      --cfg cfg/training/yolov7.yaml \
      --name yolov7 \
      --hyp data/hyp.scratch.p5.yaml \
      --epochs 100 \
      --exist-ok

    python detect.py \
      --weights runs/train/yolov7_e100/weights/best.pt \
      --source inference-640/ \
      --save-txt \
      --save-conf \
      --project runs/inference-640/yolov7_e100/ \
      --exist-ok \
      --nosave \
      --name rom2_2022-10-03 \
      --conf-thres 0.1

Repository: https://github.com/rafelafrance/ocr_ensemble

After you have each label in its own image file, you can extract the text from the labels using OCR. We have found that using an ensemble of OCR engines and image processing techniques works better in most cases than just OCR alone.

Image processing techniques:

1. Do nothing to the image. This works best with clean new herbarium sheets.

2. Slightly blur the image, scale it to a size that works with many OCR engines, orient the image to get it right-side up, and then deskew the image to fine-tune its orientation.

3. Perform all the steps in #2 and additionally perform a Sauvola (Sauvola & Pietikainen, 2000) binarization of the image, which often helps improve OCR results.

4. Perform all the steps in #3, then remove “snow” (image speckles) and fill in any small “holes” in the binarized image.

OCR engines:

- Tesseract OCR (Smith 2007).

- EasyOCR (https://github.com/JaidedAI/EasyOCR).

With 4 image processing techniques and 2 OCR engines, there are 8 possible combinations. We found, by scoring against a gold standard, that using all 8 combinations does not always yield the best results. Currently, we use 6 of the 8 combinations; binarize/EasyOCR and denoise/EasyOCR were deemed unhelpful.
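The arithmetic behind the ensemble can be sketched in a few lines. The short names used here for the image processing techniques and the engines are hypothetical labels chosen for this illustration, not identifiers from the ocr_ensemble code.

```python
from itertools import product

# Hypothetical short names for the four image processing techniques
# and the two OCR engines listed above.
PIPELINES = ["none", "deskew", "binarize", "denoise"]
ENGINES = ["tesseract", "easyocr"]

# The two combinations that scored poorly against the gold standard.
EXCLUDED = {("binarize", "easyocr"), ("denoise", "easyocr")}

ALL_COMBOS = list(product(PIPELINES, ENGINES))
USED_COMBOS = [combo for combo in ALL_COMBOS if combo not in EXCLUDED]

if __name__ == "__main__":
    for pipeline, engine in USED_COMBOS:
        print(f"{pipeline:>8} -> {engine}")
```

Each remaining pair produces one candidate text for a label, and those candidates are what the later steps compare and merge.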

After the image processing & OCR combinations we then:

- Perform minor edits to fix some common OCR errors like the addition of spaces before punctuation or common character substitutions.

- Next we find the Levenshtein distance for all pairs of text sequences and remove any sequence that has a Levenshtein score greater than a predetermined cutoff (128) from the best Levenshtein score.

- The next step in the workflow is to use a Multiple Sequence Alignment (MSA) algorithm that is directly analogous to the ones used for biological sequences but instead of using a PAM or BLOSUM substitution matrix we use a visual similarity matrix. Exact visual similarity depends on the font so an exact distance is not feasible. Instead, we use a rough similarity score that ranges from +2, for characters that are identical, to -2, where the characters are wildly different like a period and a W. We also used a gap penalty of -3 and a gap extension penalty of -0.5.

- Finally, we edit the MSA consensus sequence with a spell checker, add or remove spaces within words, and fix common character substitutions.
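The Levenshtein filtering step in the list above can be sketched as follows. The text does not say how a per-sequence score is derived from the pairwise distances, so this sketch assumes each sequence is scored by its total distance to all the others and dropped when that total exceeds the best total by more than the cutoff. The function names are hypothetical.

```python
from itertools import combinations


def levenshtein(a: str, b: str) -> int:
    """Edit distance via dynamic programming, keeping one row at a time."""
    if len(a) < len(b):
        a, b = b, a
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(
                min(
                    previous[j] + 1,         # delete char_a
                    current[j - 1] + 1,      # insert char_b
                    previous[j - 1] + cost,  # substitute or keep
                )
            )
        previous = current
    return previous[-1]


def filter_outliers(texts: list[str], cutoff: int = 128) -> list[str]:
    """Drop OCR results that are far from the rest of the ensemble.

    Assumption: a text's score is the sum of its distances to every
    other text, and the "best" score is the smallest such sum.
    """
    if len(texts) < 2:
        return list(texts)
    totals = [0] * len(texts)
    for (i, text_i), (j, text_j) in combinations(enumerate(texts), 2):
        distance = levenshtein(text_i, text_j)
        totals[i] += distance
        totals[j] += distance
    best = min(totals)
    return [t for t, total in zip(texts, totals) if total - best <= cutoff]
```

Filtering before alignment keeps a single badly garbled OCR result from dragging down the consensus sequence.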

Scripts:

- ocr-labels: You feed this label images, and it returns the OCRed text.

- char-sub-matrix: You probably won't need this, but it is a utility script for building the substitution matrix for the multiple sequence alignment. The matrix is analogous to a PAM or BLOSUM matrix.

Repository: https://github.com/rafelafrance/LabelTraiter

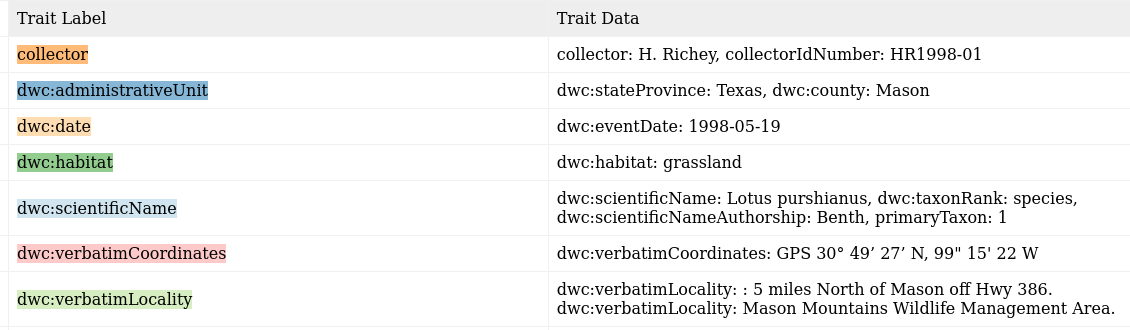

We are currently using a hierarchy of spaCy (https://spacy.io/) rule-based parsers to extract information from the labels. One level of parsers finds anchor words, another level of parsers finds common phrases around the anchor words, etc. until we have a final set of Darwin Core terms from that label.

I should be able to extract: (Colors correspond to the text above.)

The task is to take text like this:

    Herbarium of
    San Diego State College
    Erysimum capitatum (Dougl.) Greene.
    Growing on bank beside Calif. Riding and
    Hiking Trail north of Descanso.
    13 May 1967 San Diego Co., Calif.
    Coll: R.M. Beauchamp No. 484

And convert it into a machine-readable Darwin Core format like:

    {
        "dwc:eventDate": "1967-05-13",
        "dwc:verbatimEventDate": "13 May 1967",
        "dwc:country": "United States",
        "dwc:stateProvince": "California",
        "dwc:county": "San Diego",
        "dwc:recordNumber": "484",
        "dwc:verbatimLocality": "Bank beside California, Riding and Hiking Trail north of Descanso",
        "dwc:recordedBy": "R.M. Beauchamp",
        "dwc:scientificNameAuthorship": "Dougl Greene",
        "dwc:scientificName": "Erysimum capitatum (Dougl.) Greene",
        "dwc:taxonRank": "species"
    }

Of course, the OCRed input text and the resulting JSON are not always this clean.
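To give a feel for the structured fields in this example, here is a rough sketch that pulls the date, record number, and collector out of the label text. It uses plain regular expressions from the standard library rather than the spaCy rules LabelTraiter actually uses, and every pattern and function name in it is a hypothetical illustration.

```python
import re

LABEL_TEXT = """Herbarium of
San Diego State College
Erysimum capitatum (Dougl.) Greene.
Growing on bank beside Calif. Riding and
Hiking Trail north of Descanso.
13 May 1967 San Diego Co., Calif.
Coll: R.M. Beauchamp No. 484"""

MONTHS = "Jan|Feb|Mar|Apr|May|Jun|Jul|Aug|Sep|Oct|Nov|Dec"
MONTH_NUMBERS = {name: i for i, name in enumerate(MONTHS.split("|"), start=1)}

# Day, month name (full or abbreviated), four-digit year: "13 May 1967"
DATE = re.compile(rf"\b(\d{{1,2}})\s+({MONTHS})[a-z]*\.?\s+(\d{{4}})\b")
# A collector number with a leading label: "No. 484"
RECORD_NUMBER = re.compile(r"\bNo\.?\s*(\d+)\b")
# A collector name between a "Coll:" label and the record number
COLLECTOR = re.compile(r"\bColl(?:ector)?[:.]\s*(.+?)\s+No\.")


def parse_label(text: str) -> dict[str, str]:
    """Extract a few Darwin Core terms from OCRed label text."""
    traits: dict[str, str] = {}
    if match := DATE.search(text):
        day, month, year = match.groups()
        traits["dwc:verbatimEventDate"] = match.group(0)
        traits["dwc:eventDate"] = (
            f"{year}-{MONTH_NUMBERS[month]:02d}-{int(day):02d}"
        )
    if match := RECORD_NUMBER.search(text):
        traits["dwc:recordNumber"] = match.group(1)
    if match := COLLECTOR.search(text):
        traits["dwc:recordedBy"] = match.group(1)
    return traits


if __name__ == "__main__":
    print(parse_label(LABEL_TEXT))
```

Fields like these have a recognizable shape, which is why simple patterns get this far. Terms such as the locality or the scientific name need vocabularies and context, which is where the layered parsers come in.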

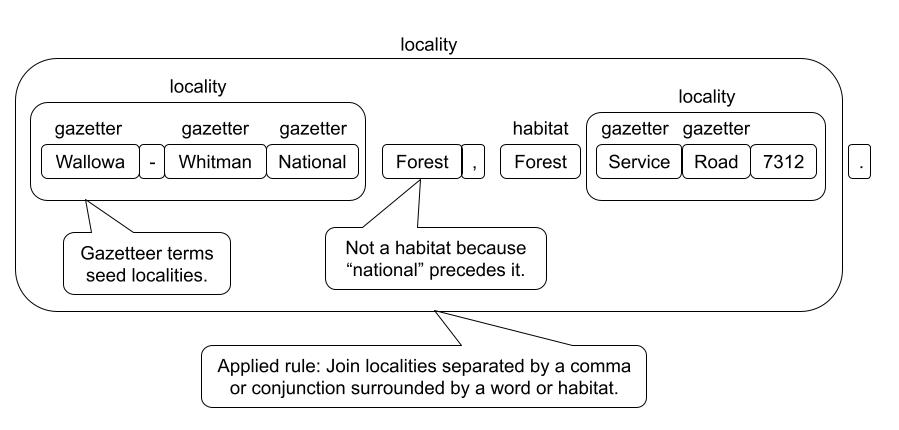

LabelTraiter uses a multistep approach to parse text into traits. The rules themselves are written using spaCy, with enhancements we developed to streamline the rule building process. The general outline of the rule building process follows:

- Have experts identify relevant terms and target traits.

- We use expert identified terms to label terms using spaCy's phrase matchers. These are sometimes traits themselves, but are more often used as anchors for more complex patterns of traits.

- We then build up more complex terms from simpler terms using spaCy's rule-based matchers repeatedly until there is a recognizable trait. See the image below.

- Depending on the trait, we may then link traits to each other (entity relationships), also using spaCy rules.

- Typically, a trait gets linked to a higher level entity like SPECIES <--- FLOWER <--- {COLOR, SIZE, etc.} and not peer to peer like PERSON <---> ORG.

An example of parsing a locality is shown below:

The rules can become complex and the vocabularies for things like taxa, or a gazetteer can be huge, but you should get the idea of what is involved in label parsing.

LabelTraiter was originally developed to parse plant treatments and was later adapted to parse label text. As such, it does have some issues with parsing label text. When dealing with treatments the identification of traits/terms is fairly easy and the linking of traits to their proper plant part is only slightly more difficult.

With labels, both the recognition of terms and linking them is difficult. There is often an elision of terms, museums or collectors may have their own abbreviations, and the formatting of labels is inconsistent. Rule-based parsers are best at terms like dates, elevations, and latitudes/longitudes where the terms have recognizable structures, like numbers followed by units with a possible leading label. They are weakest with vague terms like habitat, locality, or even names that require some sort of analysis of the context and meaning of the words.

Repository: https://github.com/rafelafrance/traiter_llm.git

Writing a rule-based parser is error-prone and labor-intensive. If we can, we try to use a model to parse the labels. This repository sends label text to GPT-4 for parsing.

Scripts:

- get-gpt-output: This takes the OCR text files and returns the traits associated with them, only this time from a large language model (LLM), ChatGPT.

- clean-gpt-output: Clean the text returned from the LLM. This script only does so much; ChatGPT can get really creative in how it messes up JSON output. Expect that you will also need to manually update the ChatGPT output.
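A few of the more common ways an LLM mangles JSON can be repaired mechanically. The sketch below is not the clean-gpt-output script; it is a hypothetical `clean_llm_json` helper that assumes the reply contains a single JSON object, possibly wrapped in chatter or a Markdown code fence, with curly quotes or trailing commas.

```python
import json
import re

# Map typographic double quotes to the plain ASCII quote JSON requires.
CURLY_QUOTES = str.maketrans({"\u201c": '"', "\u201d": '"'})


def clean_llm_json(raw: str) -> dict:
    """Best-effort repair of a JSON object embedded in LLM output."""
    # Keep only the outermost {...}, which drops any surrounding
    # commentary and Markdown code fences.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("No JSON object found in the LLM output")
    text = raw[start : end + 1].translate(CURLY_QUOTES)
    # Remove trailing commas before a closing brace or bracket.
    # Caveat: this would also alter a string value containing ",}".
    text = re.sub(r",\s*([}\]])", r"\1", text)
    return json.loads(text)
```

Anything this cannot repair raises an exception from `json.loads`, which is a reasonable signal that the output needs a manual fix.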

Repository: https://github.com/rafelafrance/reconcile_traits.git

Both the rule-based parser and ChatGPT have their strengths and weaknesses. The scripts here try to combine their outputs to improve the overall results.

Scripts:

reconcile-traits: This takes the JSON output from both the rule-based parser and ChatGPT and does its best to improve the results.
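The actual reconciliation rules live in the repository and are not described here. Purely as an illustration of the idea, the sketch below uses one assumed policy: keep values the two sources agree on, take whichever source has a value when the other is missing, and set aside disagreements for review. The `reconcile` function and its policy are hypothetical.

```python
def is_missing(value) -> bool:
    """Treat absent and empty values the same way."""
    return value is None or value == ""


def reconcile(rule_traits: dict, llm_traits: dict) -> tuple[dict, dict]:
    """Merge two trait dictionaries and report the fields that disagree.

    Assumed policy, for illustration only:
    - both sources agree, or only one has a value: keep that value
    - the sources disagree: leave the field out and record the conflict
    """
    merged: dict = {}
    conflicts: dict = {}
    for key in sorted(rule_traits.keys() | llm_traits.keys()):
        rule_value = rule_traits.get(key)
        llm_value = llm_traits.get(key)
        if is_missing(rule_value) and is_missing(llm_value):
            continue
        if is_missing(rule_value):
            merged[key] = llm_value
        elif is_missing(llm_value) or rule_value == llm_value:
            merged[key] = rule_value
        else:
            conflicts[key] = {"rule": rule_value, "llm": llm_value}
    return merged, conflicts
```

Returning the conflicts separately fits a humans-in-the-loop workflow, since those are the fields most worth a person's attention.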

The old digi_leap repository was taking too long to clone, but I wanted to keep its history, so I created this fresh repository for the project.

This is the base repository for all rule-based parsers: for plants, insects, mammals, etc.

This is the base repository for all plant-related rule-based parsers.

This holds utilities used by a lot of repositories. I got tired of changing the same code in several places.

See the following publication in Applications in Plant Sciences:

Humans in the Loop: Community science and machine learning synergies for overcoming herbarium digitization bottlenecks

Robert Guralnick, Raphael LaFrance, Michael Denslow, Samantha Blickhan, Mark Bouslog, Sean Miller, Jenn Yost, Jason Best, Deborah L Paul, Elizabeth Ellwood, Edward Gilbert, Julie Allen