This project explores how well scene geometry can be reconstructed from NeRF networks when color depends only on position (direction-agnostic) versus when color depends on both position and viewing direction (direction-dependent).

The implementation is mostly based on https://github.com/bmild/nerf and https://github.com/yenchenlin/nerf-pytorch.
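To make the two variants concrete, here is a minimal NumPy sketch of a radiance field whose color head either ignores or consumes the viewing direction. It is an illustration only and not code from either repository above: the class name `TinyRadianceField`, the layer sizes, the frequency counts, and the random untrained weights are all assumptions chosen for brevity. In both variants the density is computed from position alone; only the color head changes.

```python
import numpy as np


def positional_encoding(x, num_freqs):
    """Encode x as [x, sin(2^k x), cos(2^k x)] for k = 0 .. num_freqs - 1."""
    feats = [x]
    for k in range(num_freqs):
        feats.append(np.sin(2.0 ** k * x))
        feats.append(np.cos(2.0 ** k * x))
    return np.concatenate(feats, axis=-1)


class TinyRadianceField:
    """Toy one-layer field with random weights (hypothetical, untrained)."""

    def __init__(self, use_direction, hidden=32, pos_freqs=4, dir_freqs=2, seed=0):
        rng = np.random.default_rng(seed)
        self.use_direction = use_direction
        self.pos_freqs = pos_freqs
        self.dir_freqs = dir_freqs
        pos_dim = 3 * (1 + 2 * pos_freqs)
        # The direction features are only wired in for the direction-dependent variant.
        dir_dim = 3 * (1 + 2 * dir_freqs) if use_direction else 0
        self.w_trunk = rng.normal(0.0, 0.1, (pos_dim, hidden))
        self.w_sigma = rng.normal(0.0, 0.1, (hidden, 1))
        self.w_rgb = rng.normal(0.0, 0.1, (hidden + dir_dim, 3))

    def __call__(self, positions, directions):
        # Shared trunk: features of the 3D position only.
        h = np.maximum(positional_encoding(positions, self.pos_freqs) @ self.w_trunk, 0.0)
        # Density never sees the viewing direction in either variant.
        sigma = np.maximum(h @ self.w_sigma, 0.0)
        if self.use_direction:
            # Direction-dependent: append encoded view direction before the color head.
            h = np.concatenate([h, positional_encoding(directions, self.dir_freqs)], axis=-1)
        rgb = 1.0 / (1.0 + np.exp(-(h @ self.w_rgb)))  # sigmoid keeps colors in (0, 1)
        return rgb, sigma


# Query the same 3D point from two different viewing directions.
point = np.array([[0.3, -0.2, 0.5]])
view_a = np.array([[0.0, 0.0, 1.0]])
view_b = np.array([[1.0, 0.0, 0.0]])

agnostic = TinyRadianceField(use_direction=False)
dependent = TinyRadianceField(use_direction=True)

rgb_agnostic_a, _ = agnostic(point, view_a)
rgb_agnostic_b, _ = agnostic(point, view_b)
rgb_dependent_a, sigma_a = dependent(point, view_a)
rgb_dependent_b, sigma_b = dependent(point, view_b)
```

In this sketch the direction-agnostic field returns the same color for a point no matter where it is viewed from, so any view-dependent appearance in the training images has to be explained some other way. The direction-dependent field can change color with the view while keeping the density fixed.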

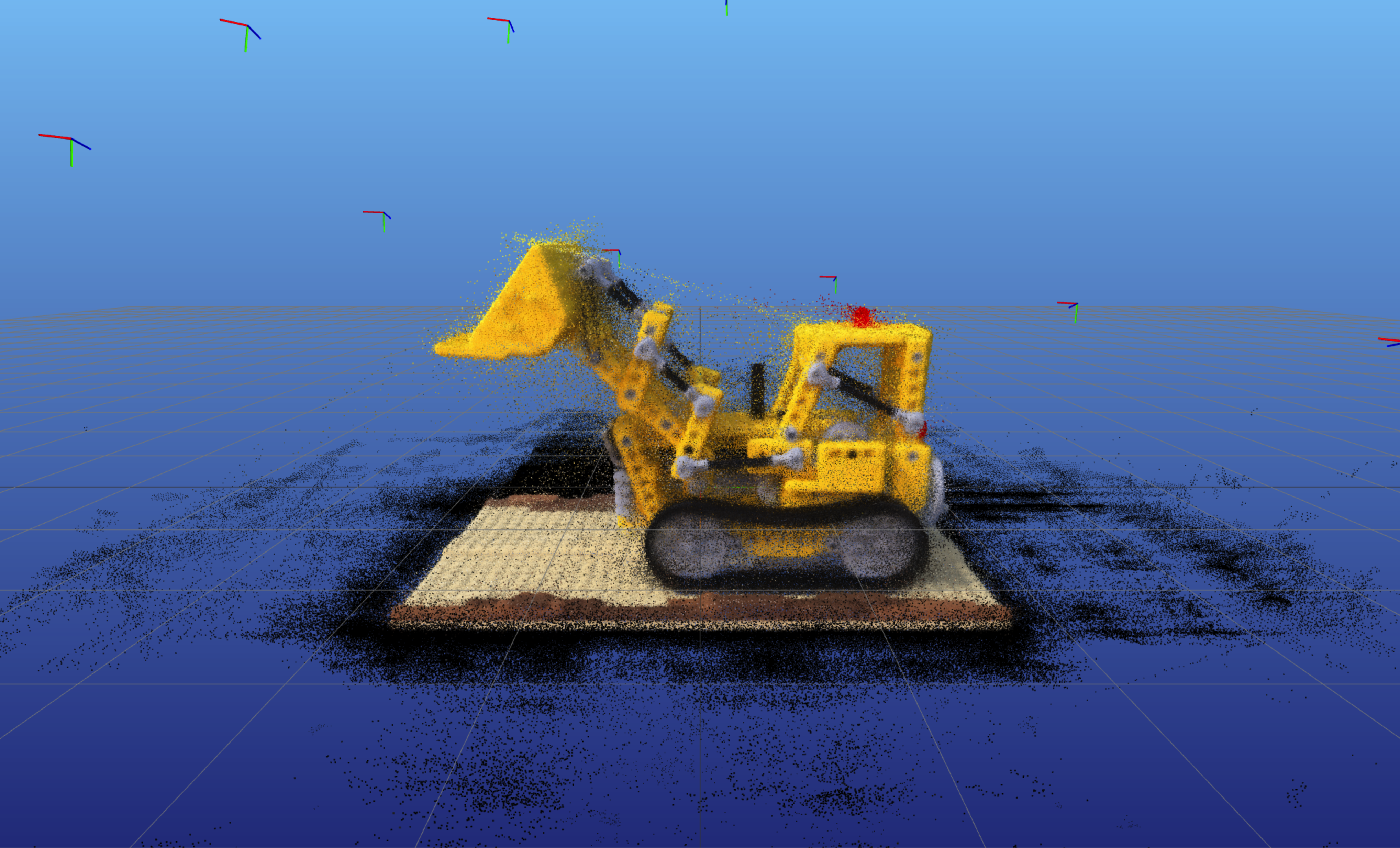

When synthesizing images from new views, a direction-agnostic NeRF network appears to miss only the specular reflection effect compared with a direction-dependent NeRF network.

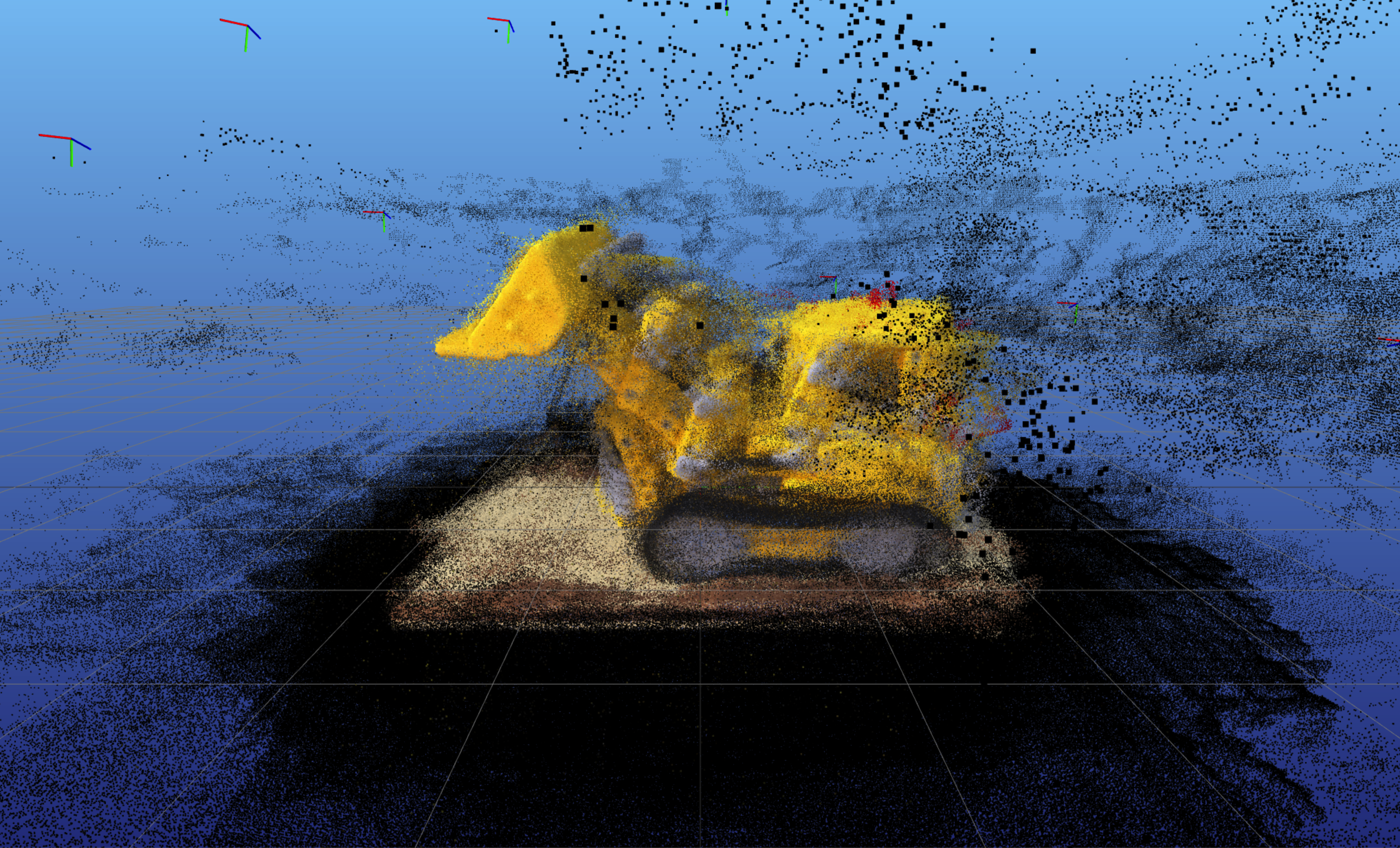

However, a direction-agnostic NeRF may imitate direction-dependent color by adding extraneous geometry to the scene, which makes the reconstructed geometry inaccurate, as shown in the figures below.

Click on the images to view the full point cloud in 3D, which takes a while to load but looks cool!