PyTorch implementation of the paper "PIZZA: A Powerful Image-only Zero-Shot Zero-CAD Approach to 6 DoF Tracking"

Van Nguyen Nguyen*, Yuming Du*, Yang Xiao, Michaël Ramamonjisoa and Vincent Lepetit

Check out our paper for more details!

If our project is helpful for your research, please consider citing:

@inproceedings{nguyen2022pizza,
  title={PIZZA: A Powerful Image-only Zero-Shot Zero-CAD Approach to 6 DoF Tracking},
  author={Nguyen, Van Nguyen and Du, Yuming and Xiao, Yang and Ramamonjisoa, Michael and Lepetit, Vincent},
  booktitle={{International Conference on 3D Vision (3DV)}},
  year={2022}
}

We recommend creating a new Anaconda environment to use pizza. Use the following commands to set up a new environment:

conda env create -f environment.yml

conda activate pizza

pip install mmcv-full==1.3.8 -f https://download.openmmlab.com/mmcv/dist/cu102/torch1.7.0/index.html

# Assuming that you are in the root folder of "pizza"

cd mmsegmentation

pip install -e . # or "python setup.py develop"

# Optional: only needed if you don't have initial boxes

cd ../mmdetection  # go back to the root folder of "pizza" first

pip install -r requirements/build.txt

pip install -v -e .
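To confirm the setup, a small Python check can help. This is a sketch, not part of the repo: it assumes the pip distribution names are `mmcv-full`, `mmsegmentation` and `mmdet` (the name mmdetection installs under), and it only compares package versions; it does not verify CUDA or PyTorch compatibility.

```python
"""Sanity-check the packages installed by the setup commands (sketch).

Assumption: the distribution names below match what pip installed.
"""
from importlib import metadata
from typing import Dict, List, Optional

# Distribution name -> required version (None means "any version is fine").
EXPECTED: Dict[str, Optional[str]] = {
    "mmcv-full": "1.3.8",
    "mmsegmentation": None,
    "mmdet": None,  # optional: only needed when initial boxes are not provided
}


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_environment(expected: Dict[str, Optional[str]]) -> List[str]:
    """Return human-readable problems; an empty list means everything matched."""
    problems = []
    for name, wanted in expected.items():
        found = installed_version(name)
        if found is None:
            problems.append(f"{name} is not installed")
        elif wanted is not None and found != wanted:
            problems.append(f"{name}=={found} found, expected {wanted}")
    return problems


if __name__ == "__main__":
    issues = check_environment(EXPECTED)
    print("\n".join(issues) if issues else "environment looks OK")
```

Run it inside the activated `pizza` environment; a missing `mmdet` is expected if you skipped the optional mmdetection step.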

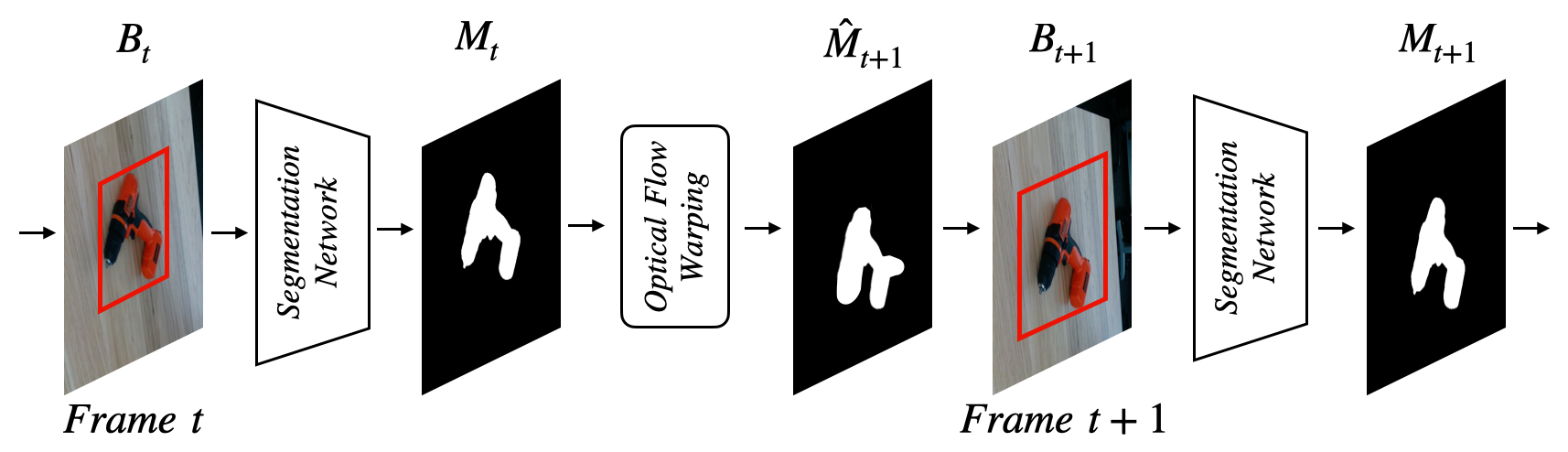

2D object tracking in the wild with 1st Place Solution for the UVO Challenge

Note: We track objects not seen during training using the 1st Place Solution for the UVO Challenge. This implementation is slightly modified compared to the original repo so that it is easy to run on the different datasets of the BOP challenge:

- Download pretrained weights:

  - Pretrained weights of the segmentation model (2.81GB)

  - Pretrained weights of RAFT things (21MB)

  - (Optional) Pretrained weights of the detection model (2.54GB), needed only when initial boxes are not provided

export WEIGHT_ROOT=$YOUR_WEIGHT_ROOT

# for example export WEIGHT_ROOT=/home/nguyen/Documents/results/UVO

bash ./scripts/download_uvo_weights.sh

Optional: You can also download the weights manually from the Google Drive links above.
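The download script relies on `WEIGHT_ROOT` being exported. The sketch below shows one way to read that variable from Python and list what ended up in the folder. `resolve_weight_root` and `list_weight_files` are hypothetical helpers, not part of the repo, and the sketch makes no assumption about the file names the script writes.

```python
"""Resolve the weight folder from WEIGHT_ROOT and list its contents (sketch).

Hypothetical helpers: they only mirror the `export WEIGHT_ROOT=...` step and
do not know which files download_uvo_weights.sh produces.
"""
import os
from pathlib import Path
from typing import List


def resolve_weight_root(env_var: str = "WEIGHT_ROOT") -> Path:
    """Return the folder named by env_var as an absolute path, creating it if needed."""
    value = os.environ.get(env_var)
    if not value:
        raise RuntimeError(f"{env_var} is not set; run `export {env_var}=...` first")
    root = Path(value).expanduser().resolve()
    root.mkdir(parents=True, exist_ok=True)
    return root


def list_weight_files(root: Path) -> List[str]:
    """Return every file under root as a sorted list of relative paths."""
    return sorted(str(p.relative_to(root)) for p in root.rglob("*") if p.is_file())
```

For example, once the script has finished, `list_weight_files(resolve_weight_root())` gives a quick view of the downloaded checkpoints.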

- Organize the dataset as in the BOP challenge, for example with the YCB-V dataset:

$INPUT_DATA (ycb-v folder)
├── video1
│   ├── rgb
│   └── ...
├── video2
│   ├── rgb
│   └── ...
└── ...

- Run detection and segmentation

Update the "input_dir", "output_dir" and "weight_dir" fields in configs/config_path.json.
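Before running the demo, the dataset layout and the config can be sanity-checked from Python. This is a sketch, not part of the repo: `find_sequences` and `update_config` are hypothetical helpers, and it assumes configs/config_path.json is a flat JSON object in which only the "input_dir", "output_dir" and "weight_dir" keys need to change (any other keys are kept as they are).

```python
"""Check the BOP-style layout and fill in configs/config_path.json (sketch).

Assumptions: every sequence folder directly under INPUT_DATA contains an
`rgb` subfolder, and the config is a flat JSON object.
"""
import json
from pathlib import Path
from typing import Dict, List


def find_sequences(input_dir: Path) -> List[str]:
    """Return the names of sequence folders that contain an `rgb` subfolder."""
    return sorted(
        d.name for d in Path(input_dir).iterdir() if d.is_dir() and (d / "rgb").is_dir()
    )


def update_config(config_file: Path, input_dir: str, output_dir: str, weight_dir: str) -> Dict:
    """Set the three path fields, keeping any other keys already in the file."""
    config_file = Path(config_file)
    config = json.loads(config_file.read_text()) if config_file.exists() else {}
    config.update(input_dir=input_dir, output_dir=output_dir, weight_dir=weight_dir)
    config_file.parent.mkdir(parents=True, exist_ok=True)
    config_file.write_text(json.dumps(config, indent=2))
    return config
```

An empty result from `find_sequences` usually means the folders are one level too deep or the `rgb` subfolders are missing.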

python -m lib.track.demo

- Download the testing datasets. We provide the pre-generated synthetic images for the ModelNet experiments (check out the DeepIM paper for more details):

bash ./scripts/download_modelNet.sh

- To launch training:

python -m train --exp_name modelNet

- To reproduce the quantitative results:

  - Download the checkpoint:

bash ./scripts/download_modelNet_checkpoint.sh

python -m test --exp_name modelNet --checkpoint $CHECKPOINT_PATH

The code is based on mmdetection, mmsegmentation, videowalk, RAFT and template-pose. Many thanks to them!