Official code repository for the papers:

- Tree Detection and Diameter Estimation Based on Deep Learning, published in Forestry: An International Journal of Forest Research. Preprint version (soon).

- Training Deep Learning Algorithms on Synthetic Forest Images for Tree Detection, presented at the ICRA 2022 IFRRIA Workshop. The video presentation is available.

All our datasets are made available to increase the adoption of deep learning for many precision forestry problems.

| Dataset name | Description | Download |

|---|---|---|

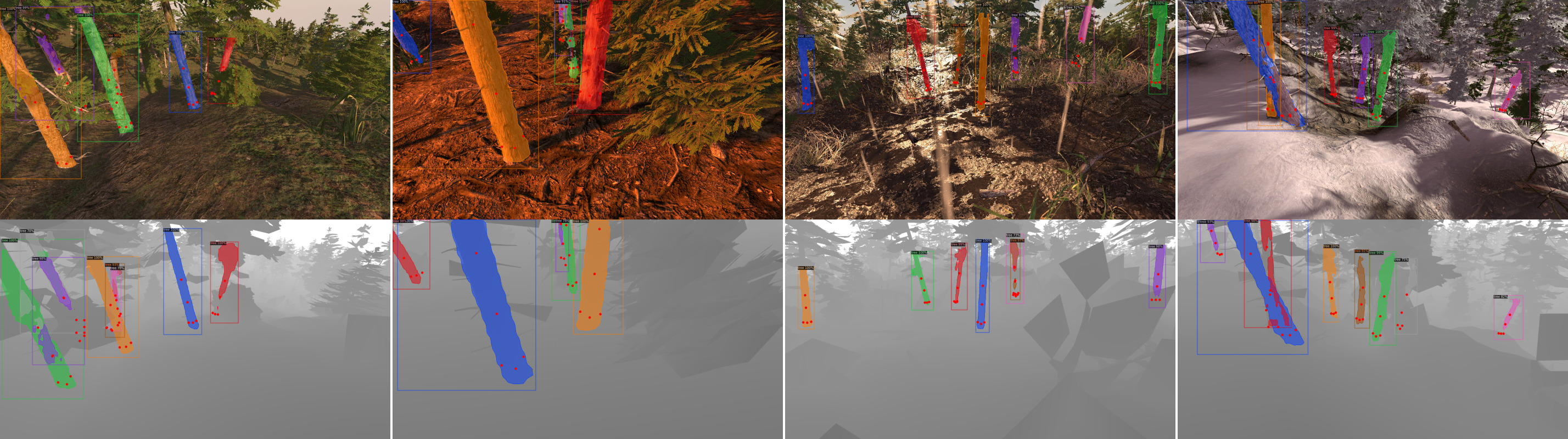

| SynthTree43k | A dataset containing 43 000 synthetic images and over 190 000 annotated trees. Includes images, train, test, and validation splits. (84.6 GB) | S3 storage |

| SynthTree43k | Depth images. | OneDrive |

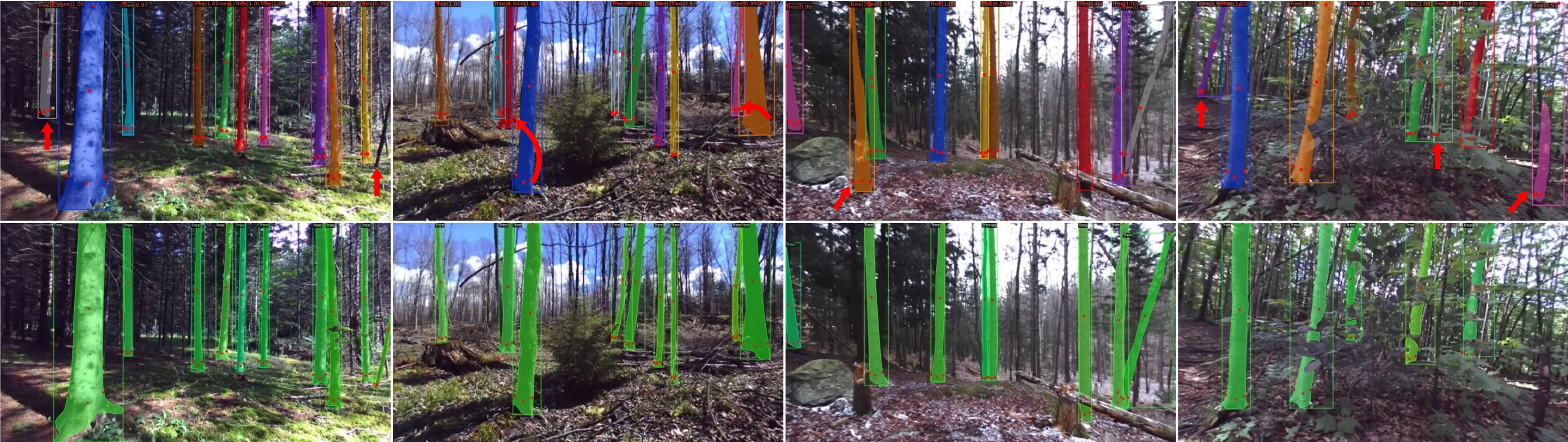

| CanaTree100 | A dataset containing 100 real images and over 920 annotated trees collected in Canadian forests. Includes images, train, test, and validation splits for all five folds. | OneDrive |

The annotation files are already included in the download link, but some users requested annotations for entire trees: `train_RGB_entire_tree.json`, `val_RGB_entire_tree.json`, `test_RGB_entire_tree.json`. Beware that using them can result in worse detection performance (in my experience), but they may be worth exploring with models that are not based on an RPN (square ROIs), such as Mask2Former.
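Before training on the entire-tree annotations, it can help to check how many images and trees each file contains. The sketch below assumes the files follow the COCO JSON layout (top-level `images` and `annotations` lists, with an `image_id` on each annotation), which is not stated above. The helper names `summarize_annotations` and `summarize_file` are hypothetical.

```python
import json
from collections import Counter


def summarize_annotations(coco: dict) -> dict:
    """Count images and annotated trees in a COCO-style dictionary.

    Assumption: the data uses the COCO layout with top-level "images"
    and "annotations" lists; this is not confirmed by the README.
    """
    images = coco.get("images", [])
    annotations = coco.get("annotations", [])
    # Number of annotations attached to each image id.
    per_image = Counter(ann["image_id"] for ann in annotations)
    return {
        "num_images": len(images),
        "num_trees": len(annotations),
        "max_trees_per_image": max(per_image.values(), default=0),
    }


def summarize_file(path: str) -> dict:
    """Load one annotation file from disk and summarize it."""
    with open(path, "r", encoding="utf-8") as f:
        return summarize_annotations(json.load(f))
```

For example, `summarize_file("train_RGB_entire_tree.json")` would return the counts for the training split, provided the file is in the current directory and matches the assumed layout.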

Pre-trained model weights are compatible with Detectron2 config files. All models are trained on our synthetic dataset, SynthTree43k. We provide a demo file to try them out.

| Backbone | Modality | box AP50 | mask AP50 | Download |
|---|---|---|---|---|
| R-50-FPN | RGB | 87.74 | 69.36 | model |
| R-101-FPN | RGB | 88.51 | 70.53 | model |
| X-101-FPN | RGB | 88.91 | 71.07 | model |
| R-50-FPN | Depth | 89.67 | 70.66 | model |
| R-101-FPN | Depth | 89.89 | 71.65 | model |
| X-101-FPN | Depth | 87.41 | 68.19 | model |

| Backbone | Description | Download |
|---|---|---|
| X-101-FPN | Trained on fold 01, good for inference. | model |

Once you have a working Detectron2 and OpenCV installation, running the demo is easy.

To run the demo on a single image:

- Download the pre-trained model weights and save them in the `/output` folder of your local PercepTreeV1 repository.
- Open `demo_single_frame.py`, uncomment the model config corresponding to the pre-trained model weights you downloaded, and comment out the others. The default is X-101. Set `model_name` to the name of your downloaded model, e.g. `'X-101_RGB_60k.pth'`.
- In `demo_single_frame.py`, specify the path to the image you want to try it on by setting the `image_path` variable.
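The single-image steps can be sketched as follows. This is not the content of `demo_single_frame.py`. It is a minimal sketch assuming the standard Detectron2 `get_cfg` / `DefaultPredictor` workflow and OpenCV's `imread`. The function names, the `config_file` argument, and the 0.5 score threshold are illustrative assumptions, and the config passed in must be the one that matches the downloaded weights.

```python
import os


def weights_path(repo_root: str, model_name: str) -> str:
    """Build the path of a downloaded weight file inside the output folder."""
    return os.path.join(repo_root, "output", model_name)


def run_single_frame(config_file: str, repo_root: str, model_name: str,
                     image_path: str, score_thresh: float = 0.5):
    """Run a pre-trained model on one image and return predicted instances.

    Assumption: config_file is a Detectron2 YAML config that fully
    describes the architecture the weights were trained with.
    """
    # Imported lazily so weights_path works without Detectron2/OpenCV.
    import cv2
    from detectron2.config import get_cfg
    from detectron2.engine import DefaultPredictor

    cfg = get_cfg()
    cfg.merge_from_file(config_file)
    cfg.MODEL.WEIGHTS = weights_path(repo_root, model_name)
    cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = score_thresh  # assumed threshold

    image = cv2.imread(image_path)  # BGR array, or None if unreadable
    if image is None:
        raise FileNotFoundError(image_path)

    predictor = DefaultPredictor(cfg)
    return predictor(image)["instances"].to("cpu")
```

Keeping the path construction in its own helper makes it easy to confirm that `model_name` points to the file you actually saved before loading the model.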

To run the demo on a video:

- Download the pre-trained model weights and save them in the `/output` folder of your local PercepTreeV1 repository.
- Open `demo_video.py`, uncomment the model config corresponding to the pre-trained model weights you downloaded, and comment out the others. The default is X-101.
- In `demo_video.py`, specify the path to the video you want to try it on by setting the `video_path` variable.
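For video, a predictor can be applied frame by frame. The sketch below assumes OpenCV's `VideoCapture` for decoding and takes the predictor as a plain callable (for example a Detectron2 `DefaultPredictor`). The names `every_nth`, `read_frames`, `predict_video`, and the `stride` parameter are hypothetical and are not taken from `demo_video.py`.

```python
from typing import Callable, Iterable, Iterator, Tuple


def every_nth(frames: Iterable, stride: int = 1) -> Iterator[Tuple[int, object]]:
    """Yield (index, frame) for every stride-th frame of an iterable."""
    if stride < 1:
        raise ValueError("stride must be >= 1")
    for index, frame in enumerate(frames):
        if index % stride == 0:
            yield index, frame


def read_frames(video_path: str) -> Iterator[object]:
    """Decode a video with OpenCV and yield BGR frames until the stream ends."""
    import cv2  # imported lazily so every_nth works without OpenCV

    capture = cv2.VideoCapture(video_path)
    if not capture.isOpened():
        raise FileNotFoundError(video_path)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break
            yield frame
    finally:
        capture.release()


def predict_video(video_path: str, predictor: Callable, stride: int = 1):
    """Apply predictor to every stride-th frame and collect (index, output)."""
    return [(i, predictor(f)) for i, f in every_nth(read_frames(video_path), stride)]
```

Skipping frames with `stride` is one way to trade temporal resolution for speed on long videos; with `stride=1` every frame is processed.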

If you find our work helpful for your research, please consider citing the following BibTeX entries.

    @article{grondin2022tree,
        author = {Grondin, Vincent and Fortin, Jean-Michel and Pomerleau, François and Giguère, Philippe},
        title = {Tree detection and diameter estimation based on deep learning},
        journal = {Forestry: An International Journal of Forest Research},
        year = {2022},
        month = {10},
    }

    @inproceedings{grondin2022training,
        title = {Training Deep Learning Algorithms on Synthetic Forest Images for Tree Detection},
        author = {Grondin, Vincent and Pomerleau, Fran{\c{c}}ois and Gigu{\`e}re, Philippe},
        booktitle = {ICRA 2022 Workshop in Innovation in Forestry Robotics: Research and Industry Adoption},
        year = {2022}
    }