We experiment with GAN-based text-to-image generation models on the CUB and COCO datasets and evaluate them with the Inception Score (IS) [4] and the Fréchet Inception Distance (FID) [5] to compare the images produced by different architectures. The models are implemented in PyTorch 1.11.0. Save the datasets in `data/` and follow the steps given in each model's folder to replicate our results.
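For reference, the two metrics can be sketched in NumPy as below. This is a minimal illustration, not the IS [4] and FID [5] implementations used for the results here. It assumes you have already run an Inception-v3 network over the images to get softmax outputs (for IS) and pool features (for FID). The function names are hypothetical. The 10-split averaging follows the common IS convention, and the FID matrix square root uses an eigendecomposition rather than `scipy.linalg.sqrtm`.

```python
import numpy as np


def inception_score(probs, splits=10, eps=1e-12):
    """IS = exp(E_x[KL(p(y|x) || p(y))]), averaged over `splits` chunks.

    `probs` is an (N, C) array of Inception softmax outputs p(y|x),
    one row per generated image. Returns (mean, std) across chunks.
    """
    scores = []
    for chunk in np.array_split(probs, splits):
        p_y = chunk.mean(axis=0, keepdims=True)  # marginal p(y) over the chunk
        kl = (chunk * (np.log(chunk + eps) - np.log(p_y + eps))).sum(axis=1)
        scores.append(np.exp(kl.mean()))
    return float(np.mean(scores)), float(np.std(scores))


def _sqrt_psd(mat):
    # Square root of a symmetric PSD matrix via eigendecomposition.
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T


def feature_stats(features):
    # Mean and covariance of an (N, D) array of Inception pool features.
    return features.mean(axis=0), np.cov(features, rowvar=False)


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """FID = ||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^{1/2})."""
    diff = mu1 - mu2
    # Tr((S1 S2)^{1/2}) equals Tr((S1^{1/2} S2 S1^{1/2})^{1/2}); the inner
    # matrix is symmetric PSD, so its eigenvalues are real and non-negative.
    root1 = _sqrt_psd(sigma1)
    middle = root1 @ sigma2 @ root1
    eigvals = np.clip(np.linalg.eigvalsh((middle + middle.T) / 2), 0.0, None)
    tr_covmean = np.sqrt(eigvals).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)
```

A confident, diverse generator drives IS up toward the number of classes, while FID is 0 only when the real and generated feature statistics match exactly, so lower FID is better.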

Training settings

- Learning rate: 0.0002 for ManiGAN and Lightweight ManiGAN; 0.0001 for DF-GAN

- Optimizer: Adam

- Output image size: 256x256

- Epochs: 350
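The settings above can be collected into a small config object, sketched below. The names `TrainConfig`, `make_config`, and `LEARNING_RATES` are hypothetical. Because this README does not give Adam's betas, PyTorch's default `(0.9, 0.999)` is assumed; the original DF-GAN and ManiGAN code may use different values, so check each model's folder.

```python
from dataclasses import dataclass

# Hypothetical per-model learning rates, taken from the list above.
LEARNING_RATES = {
    "ManiGAN": 2e-4,
    "Lightweight ManiGAN": 2e-4,
    "DF-GAN": 1e-4,
}


@dataclass(frozen=True)
class TrainConfig:
    model: str
    lr: float
    epochs: int = 350
    image_size: int = 256  # output images are 256x256
    betas: tuple = (0.9, 0.999)  # assumption: torch.optim.Adam default betas

    def adam_kwargs(self):
        # Keyword arguments accepted by torch.optim.Adam(params, **kwargs).
        return {"lr": self.lr, "betas": self.betas}


def make_config(model):
    # Look up the learning rate for a known model; reject unknown names.
    if model not in LEARNING_RATES:
        raise KeyError(f"unknown model: {model!r}")
    return TrainConfig(model=model, lr=LEARNING_RATES[model])
```

With PyTorch installed, `torch.optim.Adam(generator.parameters(), **make_config("DF-GAN").adam_kwargs())` would build the optimizer, where `generator` stands in for whichever network is being trained.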

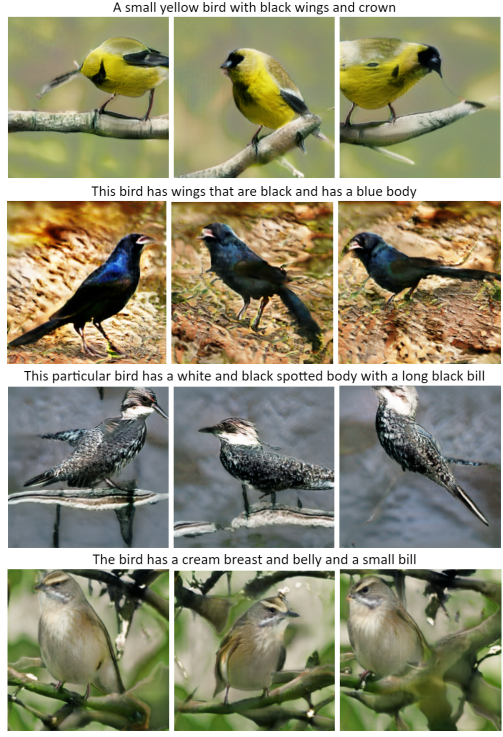

Synthesized images

Experimental Results

- DF-GAN

- ManiGAN

- Lightweight ManiGAN

Download the pretrained model for each architecture and save it to `models/` to reproduce these results.
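Before running evaluation, it can help to confirm the downloaded weights are where the scripts expect them. The helper below is a hypothetical sketch; it assumes the pretrained weights are PyTorch files ending in `.pth` or `.pt`, which may not match the actual file names, so adjust `suffixes` as needed.

```python
from pathlib import Path


def find_checkpoints(models_dir="models", suffixes=(".pth", ".pt")):
    """Return checkpoint files in `models_dir`, sorted by name.

    Raises FileNotFoundError if the directory is missing or holds no
    files with one of the given suffixes.
    """
    root = Path(models_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"{root}/ not found; download the pretrained models first")
    found = sorted(p for p in root.iterdir() if p.is_file() and p.suffix in suffixes)
    if not found:
        raise FileNotFoundError(f"no files with suffix {suffixes} in {root}/")
    return found
```

Each returned path can then be passed to `torch.load` by the corresponding model's evaluation script.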

[1] Deep Fusion GAN - DF-GAN

[2] Text-Guided Image Manipulation - ManiGAN

[3] Lightweight Architecture for Text-Guided Image Manipulation - Lightweight ManiGAN

[4] PyTorch Implementation for Inception Score (IS) - IS

[5] PyTorch Implementation for Fréchet Inception Distance (FID) - FID