Segment and Track Anything is an open-source project for segmenting and tracking any object in videos, using both automatic and interactive methods. The primary algorithms are SAM (Segment Anything Model) for automatic or interactive key-frame segmentation and DeAOT (Decoupling features in Associating Objects with Transformers, NeurIPS 2022) for efficient multi-object tracking and propagation. In the SAM-Track pipeline, SAM dynamically and automatically detects and segments new objects, while DeAOT tracks all identified objects.
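The division of labor described above can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not the project's implementation: `detect_new_objects` and `propagate` are hypothetical stand-ins for SAM and DeAOT, a "video" is reduced to sets of visible object names, and running the detector on frames where `idx % sam_gap == 0` is an assumed schedule (`sam_gap` is the key-frame interval described in the Parameters list of this README).

```python
from typing import List, Set

# Toy stand-in for a video: one set of visible object names per frame.
Video = List[Set[str]]


def detect_new_objects(visible: Set[str], tracked: Set[str]) -> Set[str]:
    """Hypothetical stand-in for SAM: report visible objects not yet tracked."""
    return visible - tracked


def propagate(visible: Set[str], tracked: Set[str]) -> Set[str]:
    """Hypothetical stand-in for DeAOT: output only tracked objects still visible."""
    return visible & tracked


def run_pipeline(video: Video, sam_gap: int) -> List[Set[str]]:
    """Detect new objects on key frames, track known objects on every frame."""
    if sam_gap < 1:
        raise ValueError("sam_gap must be at least 1")
    tracked: Set[str] = set()
    results: List[Set[str]] = []
    for idx, visible in enumerate(video):
        # Assumed schedule: the detector runs once every sam_gap frames.
        if idx % sam_gap == 0:
            tracked |= detect_new_objects(visible, tracked)
        results.append(propagate(visible, tracked))
    return results


if __name__ == "__main__":
    video = [{"car"}, {"car"}, {"car", "dog"}, {"car", "dog"}, {"car", "dog"}, {"dog"}]
    for gap in (1, 4):
        print(gap, run_pipeline(video, gap))
```

In this toy run, the dog that enters at frame 2 is picked up immediately when the detector runs on every frame, but only at frame 4 when it runs every fourth frame. That is the trade-off between discovery latency and speed that the key-frame interval controls.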

This video showcases the segmentation and tracking capabilities of SAM-Track in various scenarios, such as street views, AR, cells, animations, aerial shots, and more.

Bilibili Video Link: Versatile Demo

- Colab notebook

- 1.0-Version Interactive WebUI

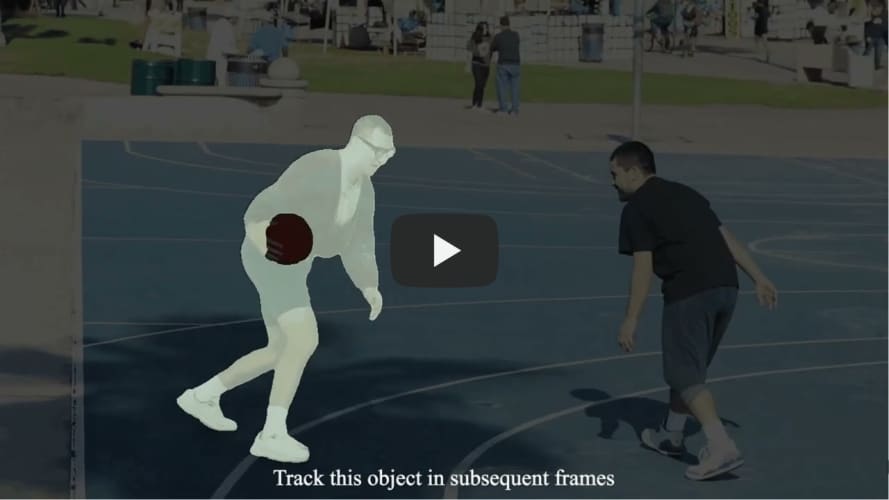

Demo1 showcases SAM-Track's ability to interactively segment and track individual objects. Here the user directs SAM-Track to track a man playing street basketball.

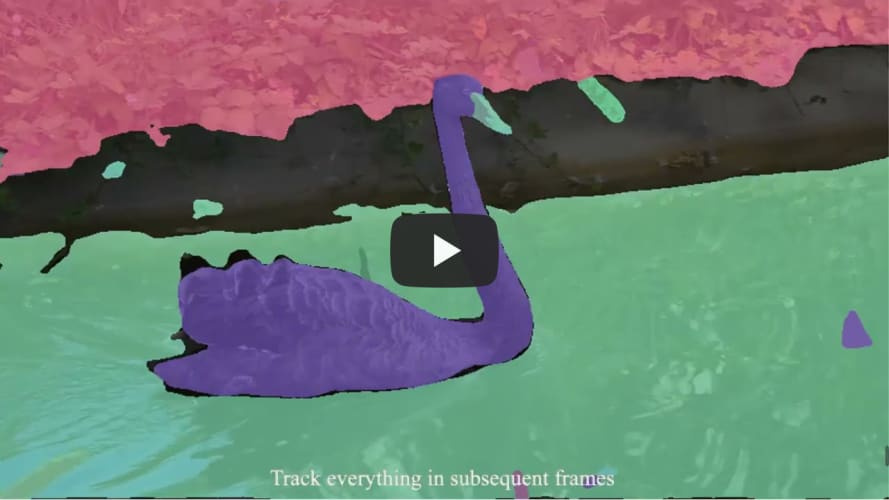

Demo2 showcases SAM-Track's ability to interactively add specified objects for tracking. Here the user adds custom objects to track on top of SAM-Track's segmentation of everything in the scene.

The Segment-Anything repository has been cloned and renamed as sam, and the aot-benchmark repository has been cloned and renamed as aot.

Please check the dependency requirements in SAM and DeAOT.

The implementation is tested under Python 3.9 with PyTorch 1.10 and torchvision 0.11. We recommend an equivalent or higher PyTorch version.

Use install.sh to install the libraries required by SAM-Track:

bash script/install.sh

Download the SAM model to ckpt. The default model is SAM-VIT-B (sam_vit_b_01ec64.pth).

Download the DeAOT/AOT model to ckpt. The default model is R50-DeAOT-L (R50_DeAOTL_PRE_YTB_DAV.pth).

You can download the default weights using the command line as shown below.

bash script/download_ckpt.sh
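After downloading, it can be useful to confirm that both default weight files are where the demo expects them. The snippet below is a minimal sketch, not part of the repository: the helper name `missing_checkpoints` is hypothetical, and it assumes the `ckpt` directory is resolved relative to the current working directory. The file names are the defaults listed above.

```python
from pathlib import Path
from typing import List

# Default weight file names as listed in this README.
DEFAULT_CHECKPOINTS = ("sam_vit_b_01ec64.pth", "R50_DeAOTL_PRE_YTB_DAV.pth")


def missing_checkpoints(ckpt_dir: str = "ckpt") -> List[str]:
    """Return the default checkpoint file names that are not present in ckpt_dir."""
    root = Path(ckpt_dir)
    return [name for name in DEFAULT_CHECKPOINTS if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoints: " + ", ".join(missing))
    else:
        print("All default checkpoints found.")
```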

- The video to be processed can be put in ./assets.

- Then run demo.ipynb step by step to generate results.

- The results will be saved as masks for each frame and a gif file for visualization.

The arguments for SAM-Track, DeAOT and SAM can be modified manually in model_args.py to use other models or to control the behavior of each model.

Our user-friendly visual interface allows you to easily obtain the results of your experiments. Simply initiate it using the command line.

python app.py

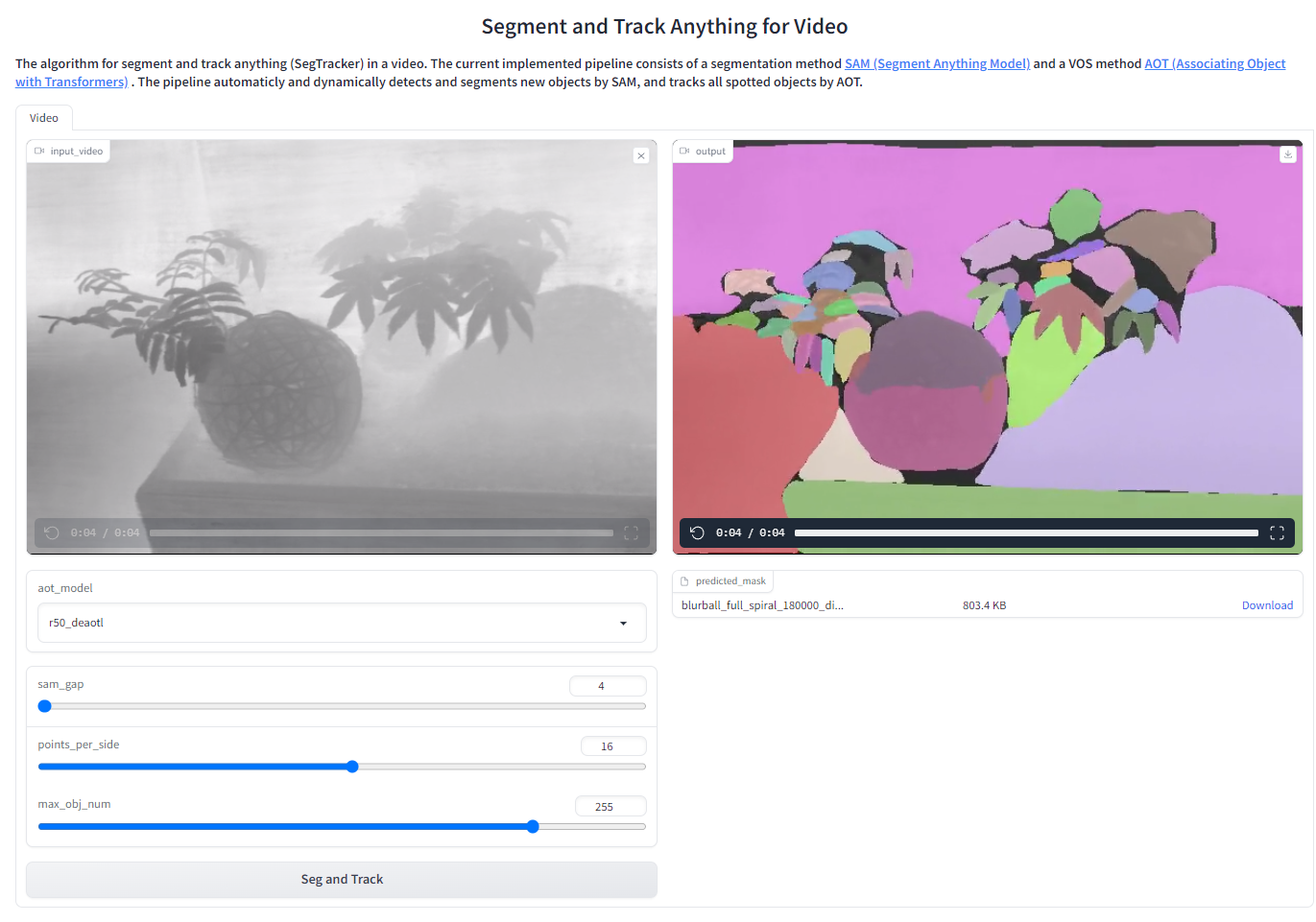

Users can upload a video directly in the UI and use Segtracker to track all objects within that video. We use a depth-map video as an example.

Parameters:

- aot_model: used to select which version of DeAOT/AOT to use for tracking and propagation.

- sam_gap: the frame interval at which SAM is run to add newly appearing objects. Increasing it makes new targets be discovered less often, but significantly improves inference speed.

- points_per_side: the number of points per side of the grid sampled over the image for generating masks. Increasing this value improves the detection of small objects, but larger targets may be segmented at a finer granularity.

- max_obj_num: the maximum number of objects that SAM-Track can detect and track. Tracking more objects requires more memory; approximately 16 GB of memory can handle up to 255 objects.
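To make the effect of points_per_side concrete, the sketch below builds a regular grid of prompt points in normalized image coordinates and checks whether a small object receives at least one point. It is an illustration under assumptions rather than the project's code: the cell-centered grid layout and the helper names `build_point_grid` and `is_prompted` are chosen here for clarity, and an object is reduced to a bounding box.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1), normalized to [0, 1]


def build_point_grid(points_per_side: int) -> List[Point]:
    """Return points_per_side**2 evenly spaced points, one at the center of each grid cell."""
    if points_per_side < 1:
        raise ValueError("points_per_side must be at least 1")
    coords = [(i + 0.5) / points_per_side for i in range(points_per_side)]
    return [(x, y) for y in coords for x in coords]


def is_prompted(box: Box, points_per_side: int) -> bool:
    """True if at least one grid point falls inside the box."""
    x0, y0, x1, y1 = box
    return any(
        x0 <= x <= x1 and y0 <= y <= y1
        for x, y in build_point_grid(points_per_side)
    )


if __name__ == "__main__":
    small_object: Box = (0.30, 0.30, 0.36, 0.36)
    for n in (4, 16):
        print(n, len(build_point_grid(n)), is_prompted(small_object, n))
```

With a 4 x 4 grid no point lands on the small box, while a 16 x 16 grid places one inside it. The number of prompts grows quadratically with points_per_side, which is why a denser grid also costs more time per key frame.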

Usage:

- Start the app and open the web link in your browser.

- Click on the input-video window to upload a video.

- Adjust SAM-Track parameters as needed.

- Click Seg and Track to get the experiment results.

Licenses for borrowed code can be found in the licenses.md file.

- DeAOT/AOT - https://github.com/yoxu515/aot-benchmark

- SAM - https://github.com/facebookresearch/segment-anything

- Gradio (for building WebUI) - https://github.com/gradio-app/gradio

Thank you for your interest in this project. The project is supervised by the ReLER Lab at Zhejiang University’s College of Computer Science and Technology. ReLER was established by Yang Yi, a Qiu Shi Distinguished Professor at Zhejiang University. Our dedicated team of contributors includes Yuanyou Xu, Yangming Cheng, Liulei Li, Zongxin Yang, Wenguan Wang and Yi Yang.