Northwestern Polytechnical University, Mohamed bin Zayed University of AI, TianJin University, Linköping University

- 2024/06/14: We released our trained models.
- 2024/06/12: We released our code.
- 2024/06/11: We released our technical report on arXiv. Our code and models are coming soon!

Abstract

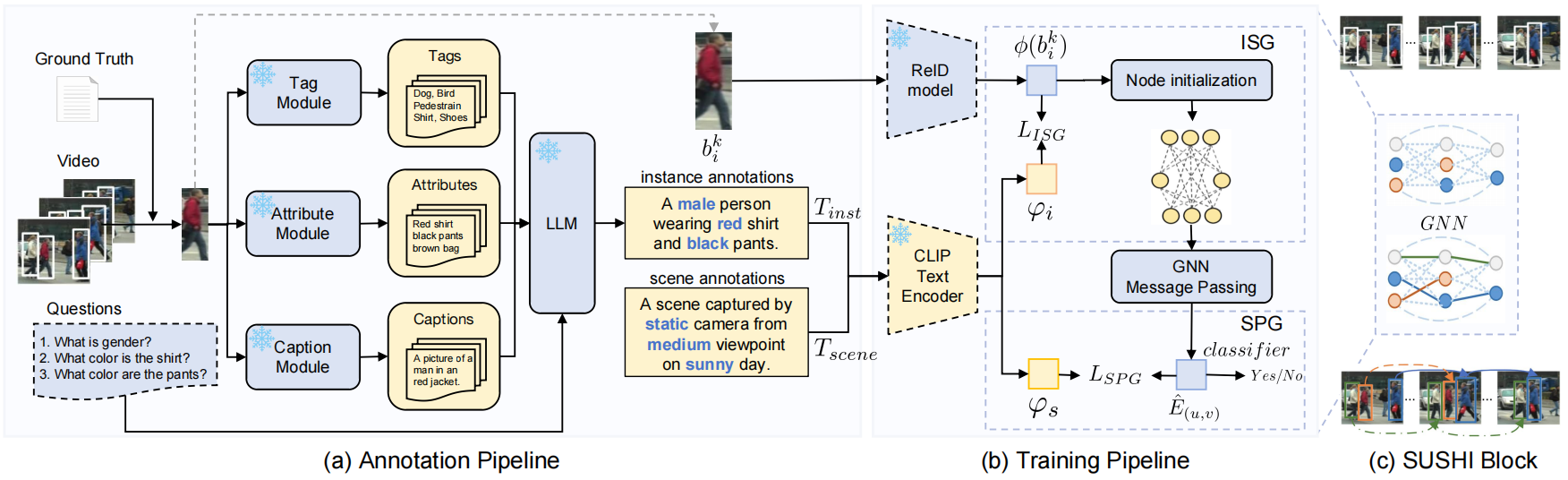

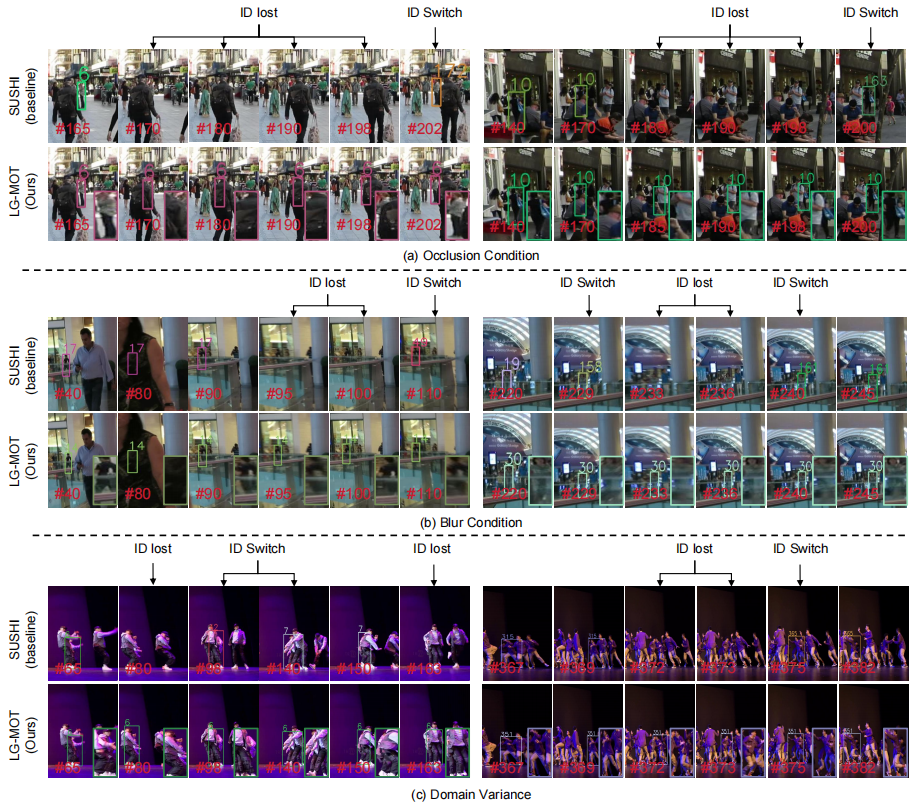

Most existing multi-object tracking methods learn visual tracking features by maximizing dissimilarities between different instances and minimizing dissimilarities of the same instance. While such a feature learning scheme achieves promising performance, learning discriminative features solely from visual information is challenging, especially in the presence of environmental interference such as occlusion, blur and domain variance. In this work, we argue that multi-modal language-driven features provide complementary information to classical visual features, thereby improving robustness to such environmental interference. To this end, we propose a new multi-object tracking framework, named LG-MOT, that explicitly leverages language information at different levels of granularity (scene- and instance-level) and combines it with standard visual features to obtain discriminative representations. To develop LG-MOT, we annotate existing MOT datasets with scene- and instance-level language descriptions. We then encode both instance- and scene-level language information into high-dimensional embeddings, which are used to guide the visual features during training. At inference, our LG-MOT uses the standard visual features without relying on annotated language descriptions. Extensive experiments on three benchmarks, MOT17, DanceTrack and SportsMOT, reveal the merits of the proposed contributions, leading to state-of-the-art performance. On the DanceTrack test set, our LG-MOT achieves an absolute gain of 2.2% in terms of target object association (IDF1 score) compared to the baseline using only visual features. Further, our LG-MOT exhibits strong cross-domain generalizability.

- LG-MOT first annotates the training and validation sets of commonly used MOT datasets, including MOT17, DanceTrack and SportsMOT, with language descriptions at both the scene and instance levels.

| Dataset | Videos (Scenes) | Annotated Scenes | Tracks (Instances) | Annotated Instances | Annotated Boxes | Frames |
|---|---|---|---|---|---|---|
| MOT17-L | 7 | 7 | 796 | 796 | 614,103 | 110,407 |
| DanceTrack-L | 65 | 65 | 682 | 682 | 576,078 | 67,304 |
| SportsMOT-L | 90 | 90 | 1,280 | 1,280 | 608,152 | 55,544 |
| Total | 162 | 162 | 2,758 | 2,758 | 1,798,333 | 233,255 |

- LG-MOT is a new multi-object tracking framework that leverages language information at different granularities during training to enhance object association. During training, our ISG module aligns each node embedding $\phi(b_i^k)$ with the instance-level description embeddings $\varphi_i$, while our SPG module aligns the edge embeddings $\hat{E}_{(u,v)}$ with the scene-level description embeddings $\varphi_s$ to guide correlation estimation after message passing. Our approach does not require language descriptions during inference.

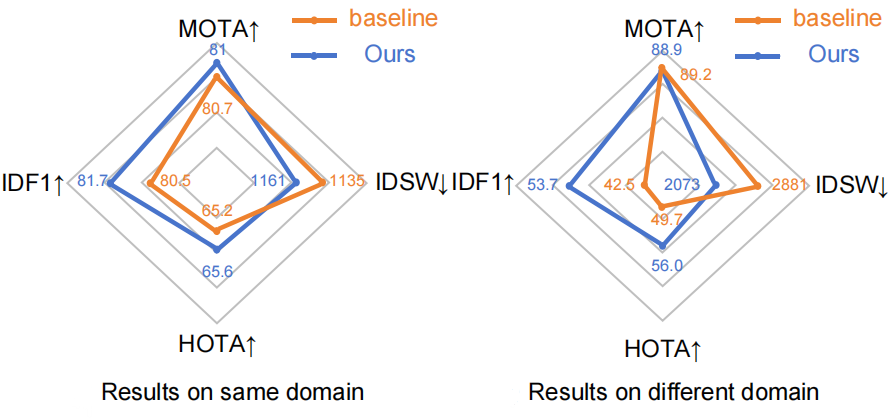
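The description above only states that the ISG and SPG modules "align" visual embeddings with language embeddings; the training flags `--inst_KL_loss` and `--KL_loss` used later in this README suggest a KL-divergence-style objective. Below is a minimal sketch of such an alignment term, assuming both embeddings are turned into distributions with a softmax. It is not the repository's implementation: the function names and the toy vectors are hypothetical.

```python
import math


def softmax(values, temperature=1.0):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp((v - peak) / temperature) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def alignment_loss(visual_embedding, language_embedding):
    """Assumed alignment term: KL(softmax(language) || softmax(visual)).

    The language embedding acts as the target distribution, so minimising
    this value pulls the visual embedding towards the language embedding.
    """
    target = softmax(language_embedding)
    predicted = softmax(visual_embedding)
    return kl_divergence(target, predicted)


# Toy vectors standing in for a node embedding and an instance-level
# description embedding (hypothetical values, not real model outputs).
node_embedding = [0.2, 1.5, -0.3, 0.9]
text_embedding = [0.1, 1.4, -0.2, 1.0]

print(alignment_loss(node_embedding, text_embedding))  # small positive value
print(alignment_loss(text_embedding, text_embedding))  # zero for identical inputs
```

Because the term is only computed during training, a model trained this way can drop the language branch entirely at inference, which matches the statement that no language descriptions are needed at test time.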

- LG-MOT improves IDF1 by 1.2% over the SUSHI baseline in the intra-domain setting, and by a significant 11.2% in the cross-domain setting.

| Dataset | IDF1 | HOTA | MOTA | ID Sw. | Model |
|---|---|---|---|---|---|
| MOT17 | 81.7 | 65.6 | 81.0 | 1161 | checkpoint.pth |

| Dataset | IDF1 | HOTA | MOTA | AssA | DetA | Model |
|---|---|---|---|---|---|---|
| DanceTrack | 60.5 | 61.8 | 89.0 | 80.0 | 47.8 | checkpoint.pth |

| Dataset | IDF1 | HOTA | MOTA | ID Sw. | Model |
|---|---|---|---|---|---|
| SportsMOT | 77.1 | 75.0 | 91.0 | 2847 | checkpoint.pth |
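As a quick sanity check on the DanceTrack row: at a single localisation threshold, HOTA is defined as the geometric mean of DetA and AssA. The reported scores are averaged over several thresholds, so the relation holds only approximately for the final numbers. The short sketch below (the helper name is hypothetical) applies it to the values in the table.

```python
import math


def hota_from_components(det_a, ass_a):
    """Geometric mean of detection and association accuracy."""
    return math.sqrt(det_a * ass_a)


# Values copied from the DanceTrack row of the table above.
ass_a, det_a, reported_hota = 80.0, 47.8, 61.8

approx_hota = hota_from_components(det_a, ass_a)
print(f"{approx_hota:.1f}")  # prints 61.8, matching the reported HOTA
```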

- Clone and enter this repository:

      git clone https://github.com/WesLee88524/LG-MOT.git
      cd LG-MOT

- Create an Anaconda environment for this project:

      conda env create -f environment.yml
      conda activate LG-MOT

- Clone fast-reid (the latest version should be compatible, but we use this version) and install its dependencies. The `fast-reid` repo should be inside the `LG-MOT` root:

      LG-MOT
      ├── src
      ├── fast-reid
      └── ...

- Download the re-identification model we use from fast-reid and move it inside `LG-MOT/fastreid-models/model_weights/`.

- Download the MOT17, SportsMOT and DanceTrack datasets. In addition, prepare seqmaps to run evaluation (for details see TrackEval). We provide an example seqmap. Overall, the expected folder structure is:

      DATA_PATH
      ├── DANCETRACK
      │   └── ...
      ├── SPORTSMOT
      │   └── ...
      └── MOT17
          ├── seqmaps
          │   ├── seqmap_file_name_matching_split_name.txt
          │   └── ...
          ├── train
          │   ├── MOT17-02
          │   │   ├── det
          │   │   │   └── det.txt
          │   │   ├── gt
          │   │   │   └── gt.txt
          │   │   ├── img1
          │   │   │   └── ...
          │   │   └── seqinfo.ini
          │   └── ...
          └── test
              └── ...
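Before launching a run, it can help to confirm that a dataset folder matches the layout above. The following is a minimal sketch using only the standard library. The helper names (`missing_entries`, `check_split`, `write_seqmap`) are hypothetical and not part of this repository, and the seqmap format (a `name` header line followed by one sequence name per line) is our understanding of what TrackEval expects, so compare it against the provided example seqmap.

```python
from pathlib import Path

# Entries each training sequence folder should contain, per the tree above.
EXPECTED_ENTRIES = ("det/det.txt", "gt/gt.txt", "img1", "seqinfo.ini")


def missing_entries(seq_dir):
    """Return the expected entries that are absent from one sequence folder."""
    seq_dir = Path(seq_dir)
    return [rel for rel in EXPECTED_ENTRIES if not (seq_dir / rel).exists()]


def check_split(data_path, dataset="MOT17", split="train"):
    """Map each incomplete sequence name to its list of missing entries."""
    split_dir = Path(data_path) / dataset / split
    if not split_dir.is_dir():
        raise FileNotFoundError(f"split folder not found: {split_dir}")
    report = {}
    for seq_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
        missing = missing_entries(seq_dir)
        if missing:
            report[seq_dir.name] = missing
    return report


def write_seqmap(data_path, seqmap_name, dataset="MOT17", split="train"):
    """Write seqmaps/<seqmap_name>.txt listing every sequence in the split.

    Assumed format: a 'name' header line, then one sequence name per line.
    """
    root = Path(data_path) / dataset
    sequences = sorted(p.name for p in (root / split).iterdir() if p.is_dir())
    seqmap_dir = root / "seqmaps"
    seqmap_dir.mkdir(parents=True, exist_ok=True)
    seqmap_file = seqmap_dir / f"{seqmap_name}.txt"
    seqmap_file.write_text("\n".join(["name", *sequences]) + "\n")
    return seqmap_file


if __name__ == "__main__":
    import tempfile

    # Build a deliberately incomplete toy layout to show the report format.
    with tempfile.TemporaryDirectory() as tmp:
        seq = Path(tmp) / "MOT17" / "train" / "MOT17-02"
        (seq / "det").mkdir(parents=True)
        (seq / "det" / "det.txt").touch()
        print(check_split(tmp))
```

An empty dictionary from `check_split` means every sequence folder in the split has the four expected entries. The check is written for the `train` split, since the tree above does not spell out the contents of `test`.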

You can launch training from the command line. An example command for MOT17 training:

    RUN=example_mot17_training
    REID_ARCH='fastreid_msmt_BOT_R50_ibn'
    DATA_PATH=YOUR_DATA_PATH
    python scripts/main.py --experiment_mode train --cuda \
        --train_splits MOT17-train-all --val_splits MOT17-train-all \
        --run_id ${RUN}_${REID_ARCH} --interpolate_motion --linear_center_only \
        --det_file byte065 --data_path ${DATA_PATH} \
        --reid_embeddings_dir reid_${REID_ARCH} --node_embeddings_dir node_${REID_ARCH} \
        --zero_nodes --reid_arch $REID_ARCH --edge_level_embed --save_cp \
        --inst_KL_loss 1 --KL_loss 1 --num_epoch 200 \
        --mpn_use_prompt_edge 1 1 1 1 --use_instance_prompt
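If you prefer to launch runs from a Python script (for example, to sweep over run names), the same command can be assembled as an argument list. This is a sketch under the assumption that it is executed from the `LG-MOT` root; `build_train_command` is a hypothetical helper, and every flag is copied verbatim from the shell command above.

```python
import shlex


def build_train_command(run, reid_arch, data_path):
    """Return the MOT17 training command above as an argument list."""
    return [
        "python", "scripts/main.py",
        "--experiment_mode", "train",
        "--cuda",
        "--train_splits", "MOT17-train-all",
        "--val_splits", "MOT17-train-all",
        "--run_id", f"{run}_{reid_arch}",
        "--interpolate_motion",
        "--linear_center_only",
        "--det_file", "byte065",
        "--data_path", data_path,
        "--reid_embeddings_dir", f"reid_{reid_arch}",
        "--node_embeddings_dir", f"node_{reid_arch}",
        "--zero_nodes",
        "--reid_arch", reid_arch,
        "--edge_level_embed",
        "--save_cp",
        "--inst_KL_loss", "1",
        "--KL_loss", "1",
        "--num_epoch", "200",
        "--mpn_use_prompt_edge", "1", "1", "1", "1",
        "--use_instance_prompt",
    ]


command = build_train_command(
    run="example_mot17_training",
    reid_arch="fastreid_msmt_BOT_R50_ibn",
    data_path="YOUR_DATA_PATH",  # placeholder, as in the shell example
)

# Print the command for inspection. To actually launch it, pass the list to
# subprocess.run(command, check=True) from the repository root.
print(shlex.join(command))
```

Passing the arguments as a list avoids shell quoting issues if the data path contains spaces.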

You can test a trained model from the command line. An example command for MOT17, using the `MOT17-test-all` split:

    RUN=example_mot17_test
    REID_ARCH='fastreid_msmt_BOT_R50_ibn'
    DATA_PATH=your_data_path
    PRETRAINED_MODEL_PATH=your_pretrained_model_path
    python scripts/main.py --experiment_mode test --cuda \
        --test_splits MOT17-test-all \
        --run_id ${RUN}_${REID_ARCH} --interpolate_motion --linear_center_only \
        --det_file byte065 --data_path ${DATA_PATH} \
        --reid_embeddings_dir reid_${REID_ARCH} --node_embeddings_dir node_${REID_ARCH} \
        --zero_nodes --reid_arch $REID_ARCH --edge_level_embed --save_cp \
        --hicl_model_path ${PRETRAINED_MODEL_PATH} \
        --inst_KL_loss 1 --KL_loss 1 --mpn_use_prompt_edge 1 1 1 1

If you use our work, please consider citing us:

    @misc{li2024multigranularity,
        title={Multi-Granularity Language-Guided Multi-Object Tracking},
        author={Yuhao Li and Muzammal Naseer and Jiale Cao and Yu Zhu and Jinqiu Sun and Yanning Zhang and Fahad Shahbaz Khan},
        year={2024},
        eprint={2406.04844},
        archivePrefix={arXiv},
        primaryClass={cs.CV}
    }

This project is released under the Apache license. See LICENSE for additional details.

The code is mainly based on SUSHI. Thanks to its authors for their wonderful work.