Mini Project - Learning from Human Feedback

Assistant Professor: Silvia Tulli

Course of Social Robotics Coordinated by Mohamed Chetouani

Team

Table of Contents

1. Minigrid

The Minigrid library contains a collection of discrete grid-world environments to conduct research on Reinforcement Learning. To install the Minigrid library use pip install minigrid.

The included environments can be divided into two groups: the original Minigrid environments and the BabyAI environments. For this project the first group was chosen. These environments share a triangle-like agent with a discrete action space that has to navigate a 2D map with different obstacles (walls, lava, dynamic obstacles) depending on the environment. The task to be accomplished is described by a mission string returned in the agent's observation.

1.1 Reinforcement Learning: MiniGrid with Policy Gradient

This section presents a PyTorch implementation of Policy Gradient in the Gym-MiniGrid environment. It shows how to implement Vanilla Policy Gradient (also known as REINFORCE) and test it on an open-source RL environment.
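As a rough illustration of the core of REINFORCE (a minimal sketch, not the notebook's actual code), each action's log-probability is weighted by the discounted return that followed it. The function names `discounted_returns` and `reinforce_loss` and the default discount factor are assumptions made for this example; in the notebook the log-probabilities would come from the PyTorch policy network.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * r_{t+1} + ... for every step t of one episode."""
    returns = []
    running = 0.0
    # Walk the episode backwards so each return reuses the one after it.
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def reinforce_loss(log_probs, returns):
    """REINFORCE objective written as a loss: -sum_t log pi(a_t | s_t) * G_t."""
    return -sum(lp * g for lp, g in zip(log_probs, returns))
```

Minimizing this loss with gradient descent increases the probability of actions that were followed by high returns.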

1.1.1 Run the project

The notebook is available here. As presented in the first section, the following commands have to be run to install the packages:

pip install gym==0.22

pip install gym-minigrid==1.0.0

Since the code is implemented in Google Colab, no other installations are needed.

1.1.2 Results and code link

| Learning Curve | Total reward: 0.802 Total length: 11 | Total reward: 0.604 Total length: 22 |
|---|---|---|
| | minigrid.mp4 | minigrid2.mp4 |

1.2 Inverse Reinforcement Learning on Minigrid

The aim of this project is to provide a tool to train an agent on Minigrid. The human player can make game demonstrations and then the agent is trained from these demonstrations using Inverse Reinforcement Learning techniques. The code is taken from https://github.com/francidellungo/Minigrid_HCI-project

1.2.1 Run the project

Compared with the original project, the `view` function in the files `./policy_nets/emb_conv1x1_mlp_policy.py` and `./policy_nets/one_hot_conv_policy.py` has been replaced with `reshape` because of an error.

In the provided folder the functions have already been replaced, so the code is ready to run. To run the code:

- go to the directory in which you have downloaded the project

- go inside the Minigrid_HCI-project folder with the command `cd Minigrid_HCI-project`

- install the requirements with the command `pip install -r requirements.txt`

- run the application with the command `python agents_window.py`

1.2.2 Results and code link

| Total reward: 0.946 Total length: 6 |
|---|
| minigrid3.mp4 |

2. Mountain Car

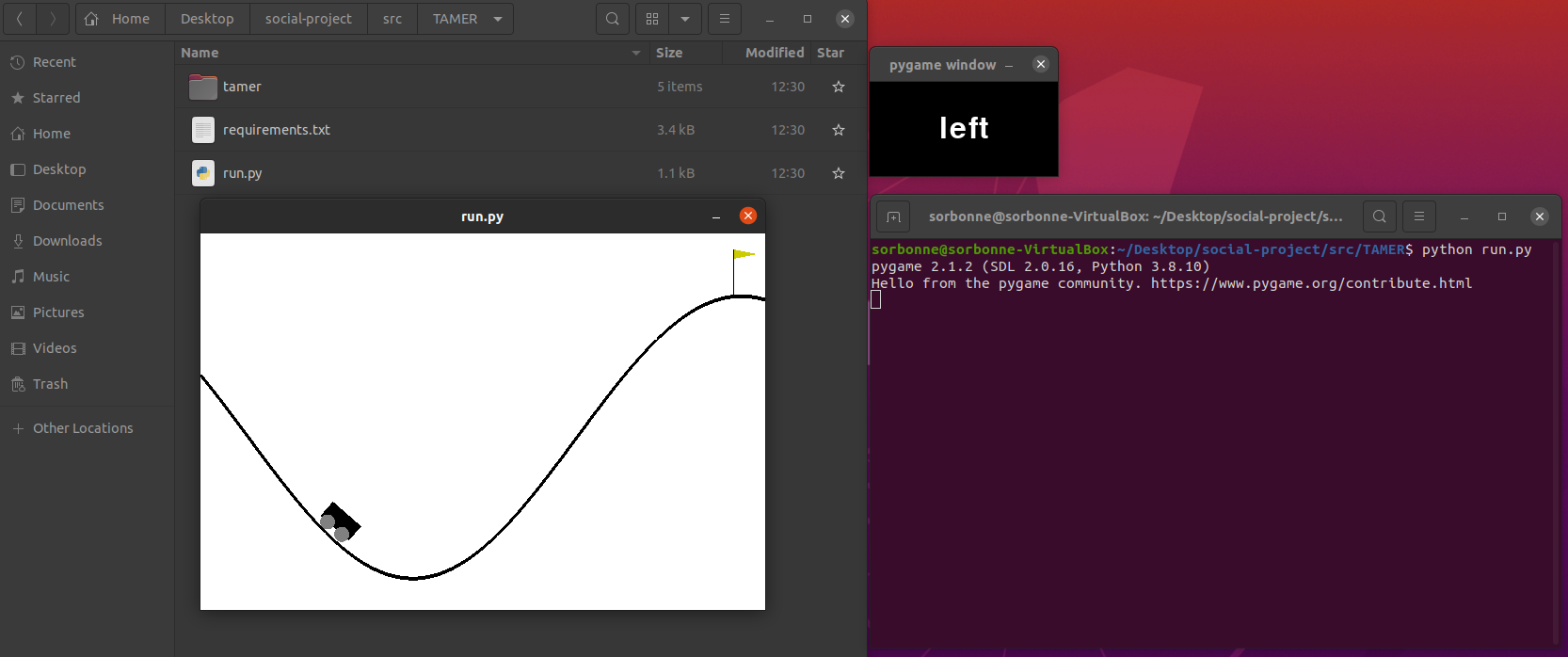

The Mountain Car MDP is a deterministic MDP that consists of a car placed stochastically at the bottom of a sinusoidal valley, with the only possible actions being the accelerations that can be applied to the car in either direction. The goal of the MDP is to strategically accelerate the car to reach the goal state on top of the right hill.

2.1 Mountain Car using Q-learning

In this project, Deep Q-Network (DQN), Dueling DQN and Dueling Double DQN (D3QN) are implemented for the Mountain Car environment. To describe the three algorithms, Q-learning needs to be introduced first. Q-learning is a model-free, off-policy reinforcement learning algorithm that learns a value Q(s, a) for taking each action in each state; given the current state of the agent, the best next action is the one with the highest Q-value. During training the agent sometimes chooses actions at random in order to explore, while still aiming to maximize the cumulative reward. It is called off-policy because the update always bootstraps from the greedy (highest-valued) next action, regardless of the exploratory policy that actually generated the experience.

The only difference between Q-learning and DQN is the agent's brain: in Q-learning it is the Q-table, whereas in DQN it is a deep neural network. Double DQN uses two identical neural network models. One learns during experience replay, just like in DQN, and the other (the target network) is a copy of the first model as it was at the end of the last episode. Dueling DQN differs in the structure of the model: the network splits into a state-value stream V(s) and an advantage stream A(s, a), which are recombined into Q-values as Q(s, a) = V(s) + A(s, a) - mean(A(s, ·)).
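The ideas above can be sketched in a few lines of plain Python. This is an illustrative tabular version under stated assumptions, not the code of the linked repository: states are assumed to be discretized into hashable keys, and the helper names (`make_q_table`, `epsilon_greedy`, `q_learning_update`, `dueling_q_values`) and hyperparameter defaults are hypothetical.

```python
import random
from collections import defaultdict


def make_q_table(n_actions):
    """Q-table mapping a (discretized) state to one value per action."""
    return defaultdict(lambda: [0.0] * n_actions)


def epsilon_greedy(q_table, state, n_actions, epsilon, rng=random):
    """Behaviour policy: explore with probability epsilon, else act greedily."""
    if rng.random() < epsilon:
        return rng.randrange(n_actions)
    values = q_table[state]
    return max(range(n_actions), key=lambda a: values[a])


def q_learning_update(q_table, state, action, reward, next_state, done,
                      alpha=0.1, gamma=0.99):
    """Off-policy update: bootstrap from the greedy value of the next state."""
    best_next = 0.0 if done else max(q_table[next_state])
    td_target = reward + gamma * best_next
    q_table[state][action] += alpha * (td_target - q_table[state][action])


def dueling_q_values(state_value, advantages):
    """Dueling aggregation: Q(s, a) = V(s) + A(s, a) - mean(A(s, .))."""
    mean_adv = sum(advantages) / len(advantages)
    return [state_value + a - mean_adv for a in advantages]
```

In DQN the table is replaced by a neural network that outputs one Q-value per action, and the same temporal-difference target is used as the regression target.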

The code is taken from https://github.com/DanielPalaio/MountainCar-v0_DeepRL.

2.1.1 Run the project

To run the code, you need Python 3.8 and the packages listed in the requirements.txt of the folder for the desired algorithm.

2.1.2 Results and code link

2.2 Mountain Car with Tamer

TAMER (Training an Agent Manually via Evaluative Reinforcement) is a framework for human-in-the-loop Reinforcement Learning. Differing from traditional approaches to interactive shaping, a TAMER agent models the human's reinforcement and exploits its model by choosing the actions expected to be most highly reinforced. Results from two domains demonstrate that lay users can train TAMER agents without defining an environmental reward function (as in an MDP) and indicate that human training within the TAMER framework can reduce sample complexity over autonomous learning algorithms (Knox and Stone).
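The two steps described above, modelling the human's reinforcement and acting greedily on that model, can be illustrated with a toy learner. This is a minimal sketch assuming a linear model over hand-made state features; the class `LinearTamerAgent` and its parameters are hypothetical and do not reproduce the linked implementation.

```python
class LinearTamerAgent:
    """Toy TAMER-style learner with a linear model of human reinforcement."""

    def __init__(self, n_features, n_actions, learning_rate=0.1):
        self.learning_rate = learning_rate
        # One weight vector per action: H(s, a) = weights[a] . features(s)
        self.weights = [[0.0] * n_features for _ in range(n_actions)]

    def predict(self, features, action):
        """Predicted human reinforcement for taking `action` in this state."""
        return sum(w * x for w, x in zip(self.weights[action], features))

    def act(self, features):
        """Exploit the model: pick the action expected to be most reinforced."""
        return max(range(len(self.weights)),
                   key=lambda a: self.predict(features, a))

    def update(self, features, action, human_reward):
        """One gradient step on the squared error between H(s, a) and the signal."""
        error = human_reward - self.predict(features, action)
        for i, x in enumerate(features):
            self.weights[action][i] += self.learning_rate * error * x
```

Note that no environment reward appears anywhere: the only learning signal is the scalar feedback supplied by the human trainer.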

The code is taken from https://github.com/benibienz/TAMER.

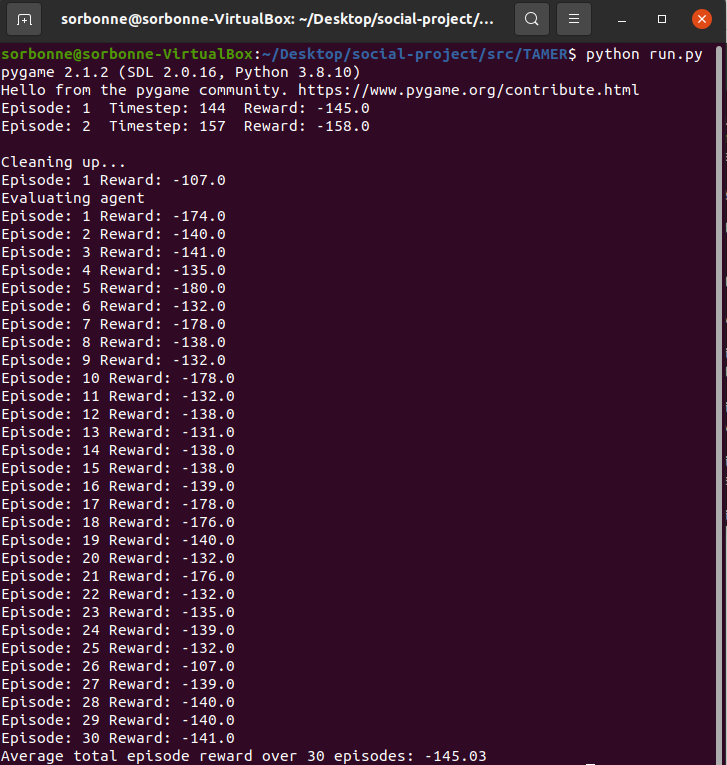

2.2.1 Run the project

- You need python 3.7+

- The version of gym has to be 0.15.4; install it by running the command `pip install gym==0.15.4`

- numpy, sklearn, pygame and gym

- go inside the TAMER folder with the command `cd TAMER`

- to run the code: `python run.py`

2.2.2 Results and code link

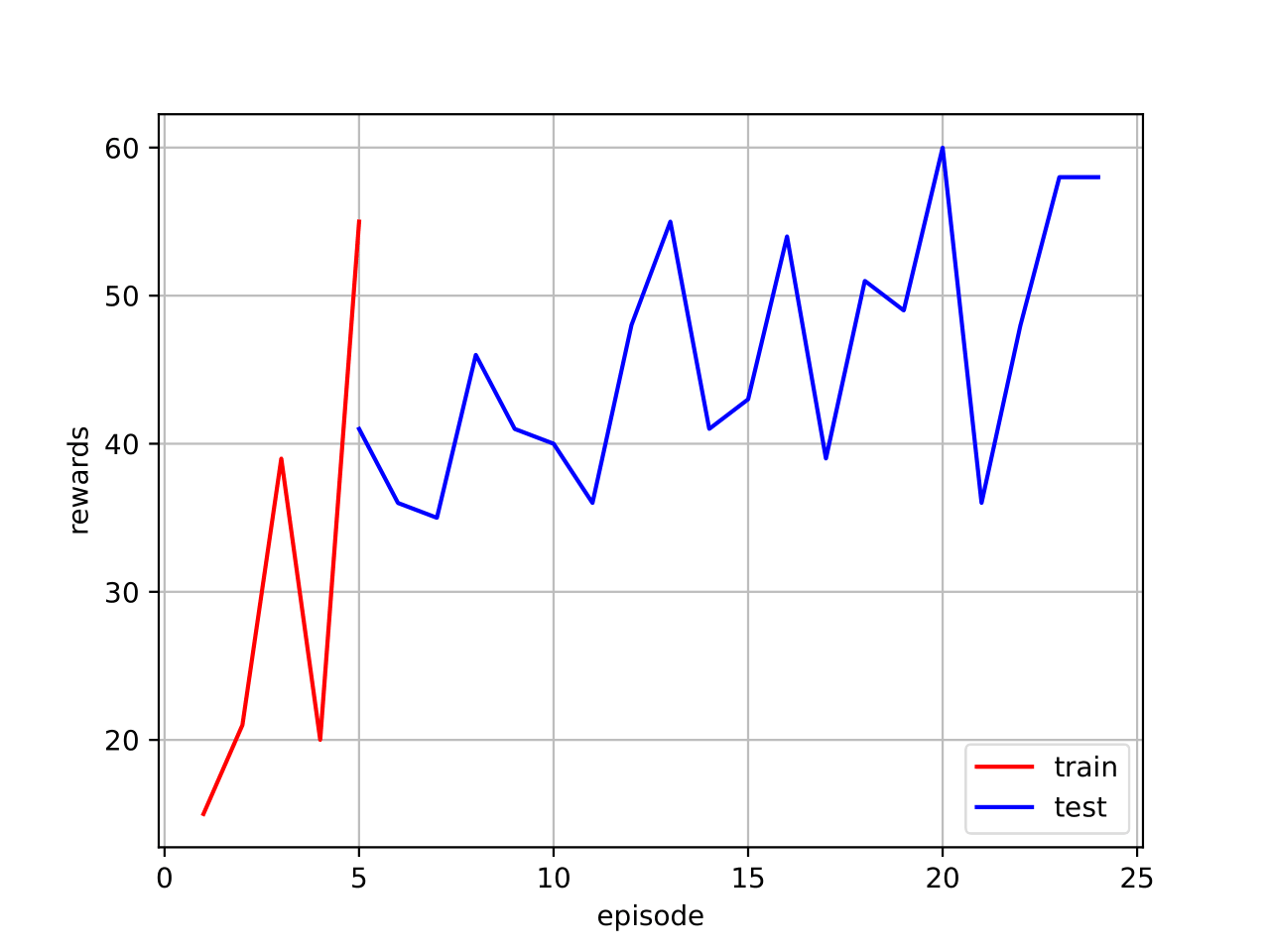

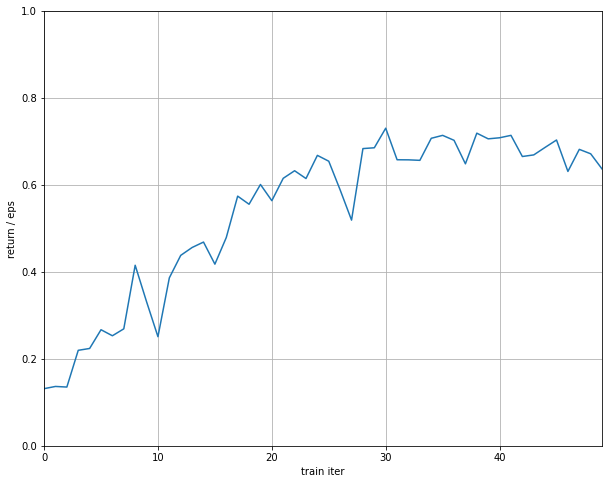

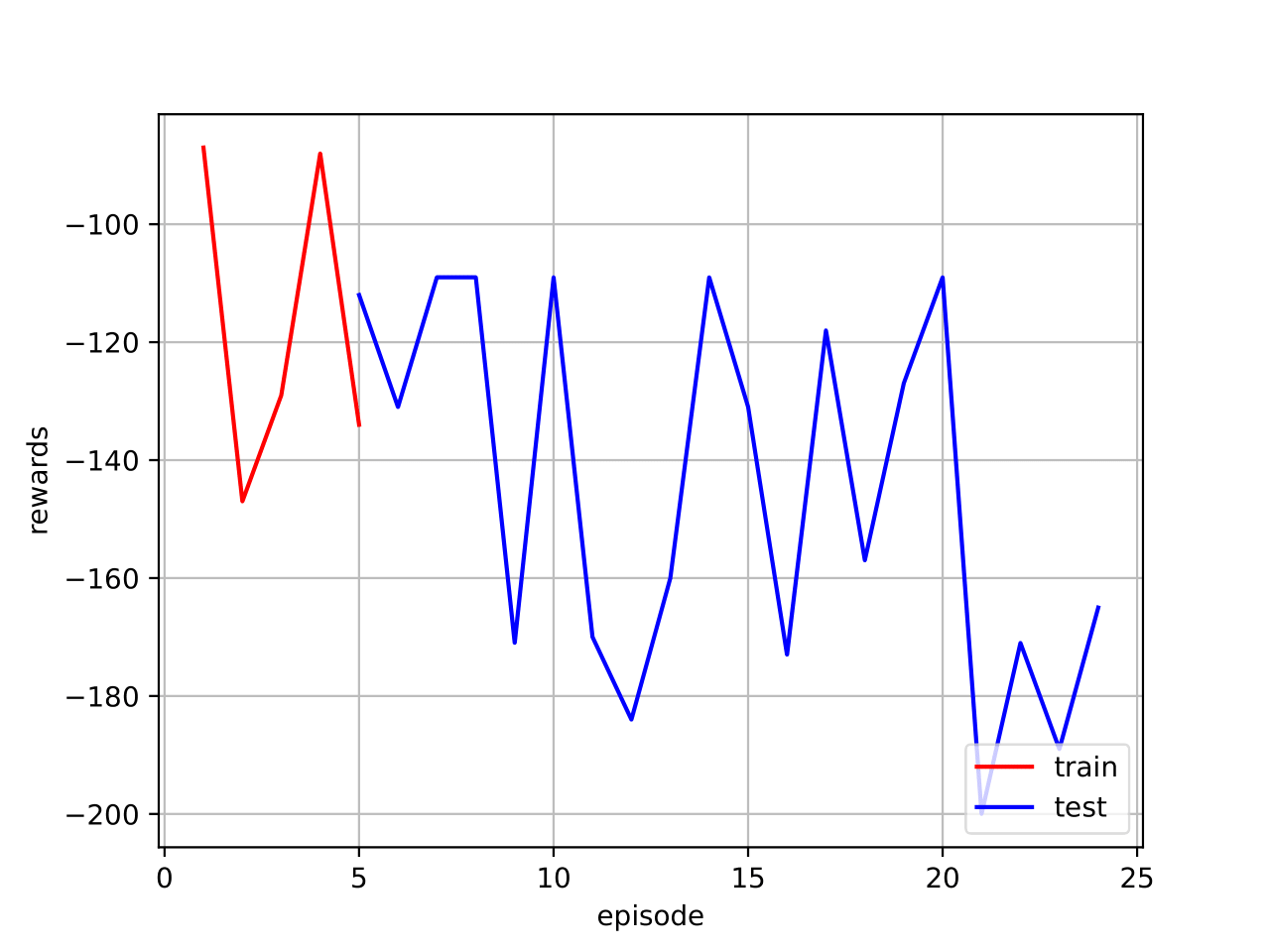

| Results reward | Interface game |
|---|---|
| | |

2.3 Mountain Car (Tamer) with vocal commands

2.3.1 Run the project

To implement a TAMER agent that learns from vocal commands, install the speech recognition package by running pip install SpeechRecognition==3.8.1.

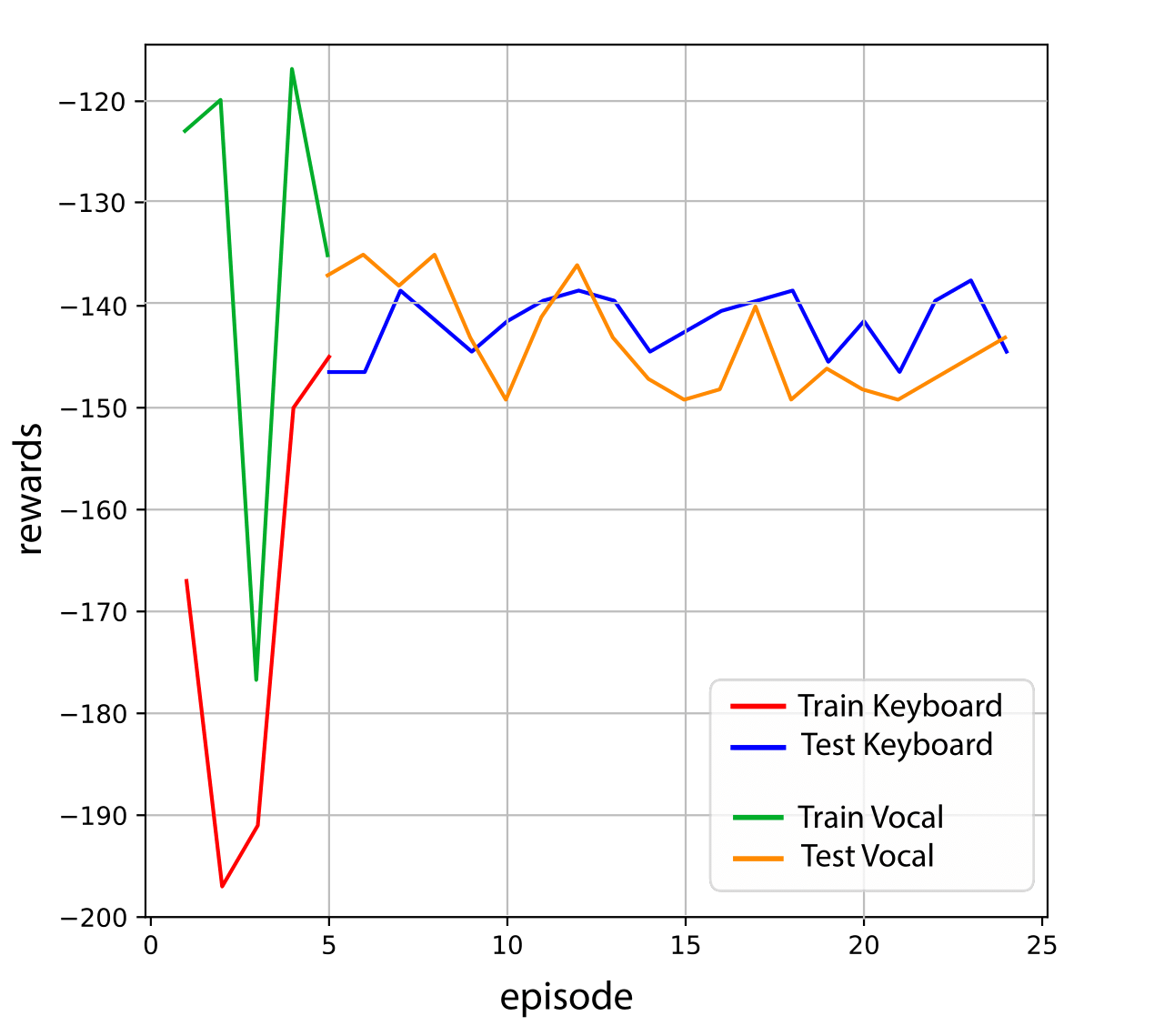

The code is the same as in "Mountain Car with Tamer", with only small modifications to the training process. To give feedback, the human teacher only has to press "w" on the keyboard; a voice recording then starts and the speech content is recognized. If "yes" is recognized, a positive reward is provided to the agent; if "no" is recognized, a negative one is provided.
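The mapping from recognized speech to reward can be sketched as a small pure function. This is an illustrative example only: the function name `reward_from_transcript` and the reward magnitudes of +1 and -1 are assumptions, and the transcript is assumed to be the text string returned by the speech recognizer.

```python
def reward_from_transcript(transcript, positive=1.0, negative=-1.0):
    """Map recognized speech to a scalar reward; None means no usable feedback."""
    if not transcript:
        return None
    # Normalize case and strip common punctuation around each word.
    words = [w.strip(".,!?") for w in transcript.lower().split()]
    if "yes" in words:
        return positive
    if "no" in words:
        return negative
    return None
```

Returning `None` for anything other than "yes" or "no" lets the training loop skip the update when the recognizer misheard or nothing was said.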

2.3.2 Results and code link

2.4 Mountain Car (Tamer) with gestures

2.4.1 Run the project

To implement a Tamer agent learning from gestures, you need to install:

- opencv, by running `pip install opencv-python==4.6.0.66`

- MediaPipe, by running `pip install mediapipe`

At the beginning of training, press the "w" key to switch on the camera (only needed the first time you run the code); after that, the code works in the same way as "Mountain Car with Tamer". To give feedback to the agent, the human teacher presses "w" on the keyboard: after 3 seconds, a photo is captured. A different reward is provided depending on the recognized gesture. In particular:

- thumb up: positive reward

- thumb down: negative reward

The gesture is recognized through the MediaPipe framework, which localizes 21 3D hand-knuckle keypoints inside the detected hand via regression. For detection to succeed, the wrist has to be visible to the camera; otherwise no hand will be detected.
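How the 21 keypoints are turned into a thumb-up or thumb-down decision is not described here, so the following is only a hypothetical heuristic, not the project's classifier. It assumes the landmarks are given as a list of 21 `(x, y, z)` tuples in normalized image coordinates (y grows downward), with index 0 for the wrist and index 4 for the thumb tip as in MediaPipe's hand model; the function name, margin and reward values are illustrative.

```python
WRIST, THUMB_TIP = 0, 4  # indices in MediaPipe's 21-landmark hand model


def reward_from_hand(landmarks, margin=0.1, positive=1.0, negative=-1.0):
    """Heuristic: thumb tip clearly above the wrist -> positive, below -> negative."""
    if landmarks is None or len(landmarks) != 21:
        return None  # no hand detected (e.g. wrist not visible)
    wrist_y = landmarks[WRIST][1]
    thumb_y = landmarks[THUMB_TIP][1]
    # Image y grows downward, so a smaller y means higher in the frame.
    if thumb_y < wrist_y - margin:
        return positive
    if thumb_y > wrist_y + margin:
        return negative
    return None  # ambiguous gesture: give no feedback
```

A more robust classifier would also check that the other fingers are folded, but the thumb-versus-wrist comparison is enough to show where the reward signal comes from.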

2.4.2 Results and code link

2.5 Mountain Car with Inverse Reinforcement learning

Generally, expert policies are demonstrated by a human or machine that is an expert in the activity. Here, a different approach was used to obtain the expert policy: an RL algorithm (Q-learning with linear function approximation) trains an agent to become an expert in the Mountain Car environment. The trained agent is then treated as the expert and generates the expert trajectories. Finally, the IRL algorithm learns the reward function from the expert policy/trajectories.

2.5.1 Run the project

2.5.2 Results and code link

3. CartPole

The CartPole environment consists of a pole attached to a cart that moves along a frictionless track. The system is controlled by applying a force of +1 or -1 to the cart. The pendulum starts upright, and the goal is to prevent it from falling over. The state space is represented by four values: cart position, cart velocity, pole angle, and the velocity of the tip of the pole. The action space consists of two actions: moving left or moving right. A reward of +1 is provided for every timestep that the pole remains upright. The episode ends when the pole is more than 12 degrees from vertical (the threshold used in the Gym implementation), or the cart moves more than 2.4 units from the center.

3.1 CartPole with Reinforcement Learning DQN

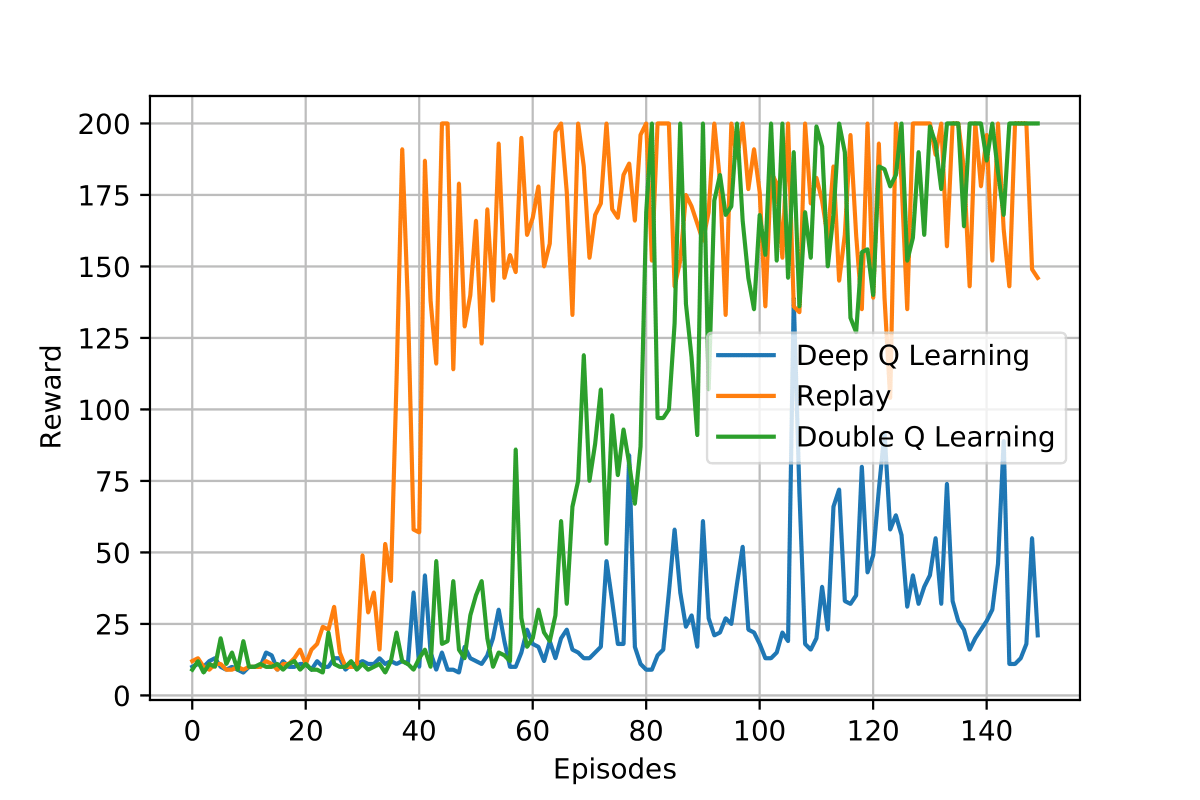

In this project, Deep Q-Learning (DQL) for the CartPole environment is implemented. DQL combines reinforcement learning (RL) and deep learning; in particular, a neural network is used to approximate the Q function from the most recent observation. Because approximating Q from one sample at a time is not very effective, experience replay has been implemented to improve network stability and to make sure previous experiences are not discarded but reused in training. Finally, it has been shown that traditional Deep Q-Learning tends to overestimate the reward, which leads to unstable training and a lower-quality policy. Consequently, Double Q-Learning has been implemented; it uses two separate Q-value estimators, each of which is used to update the other. With these independent estimators, the value of an action selected by one estimator is evaluated by the other, which gives unbiased Q-value estimates.
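Experience replay and the Double Q-Learning target can be illustrated without any deep learning library. The sketch below makes several assumptions: the names `ReplayBuffer` and `double_q_target`, the buffer capacity and the discount factor are hypothetical, and the Q-values are passed in as plain lists where the notebook would use network outputs.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size memory of past transitions, sampled uniformly for training."""

    def __init__(self, capacity=10_000):
        self.memory = deque(maxlen=capacity)  # oldest transitions drop out first

    def push(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        return rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


def double_q_target(reward, done, next_q_select, next_q_evaluate, gamma=0.99):
    """One estimator selects the next action, the other evaluates it."""
    if done:
        return reward
    best_action = max(range(len(next_q_select)), key=lambda a: next_q_select[a])
    return reward + gamma * next_q_evaluate[best_action]
```

Decoupling action selection from evaluation is what counters the overestimation: a value that one estimator happens to overrate is unlikely to be overrated by the other as well.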

3.1.1 Run the project

The notebook is available here. To run it, the following package installation is needed:

pip install gym[classic_control]

Since the code is implemented in Google Colab, no other installations are needed.

3.1.2 Results and Code link

3.2 Cartpole with Human in the loop Evaluative Feedback

In this project, a human in the loop has been added to the CartPole environment. The implemented algorithm is TAMER (Training an Agent Manually via Evaluative Reinforcement), already described above.

3.2.1 Run the project

- You need python 3.7+

- The version of gym has to be 0.15.4; install it by running the command `pip install gym==0.15.4`

- numpy, sklearn, pygame and gym

- go inside the TAMER folder with the command `cd TAMER`

- to run the code: `python run.py`

3.2.2 Results and Code link