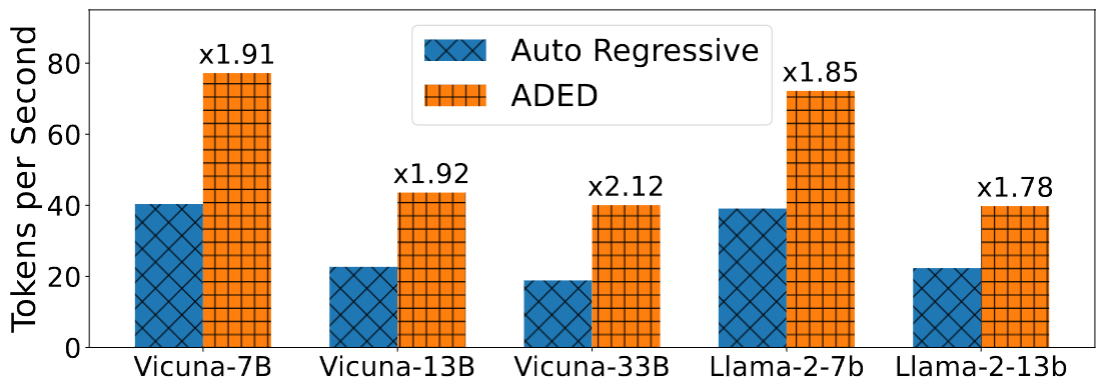

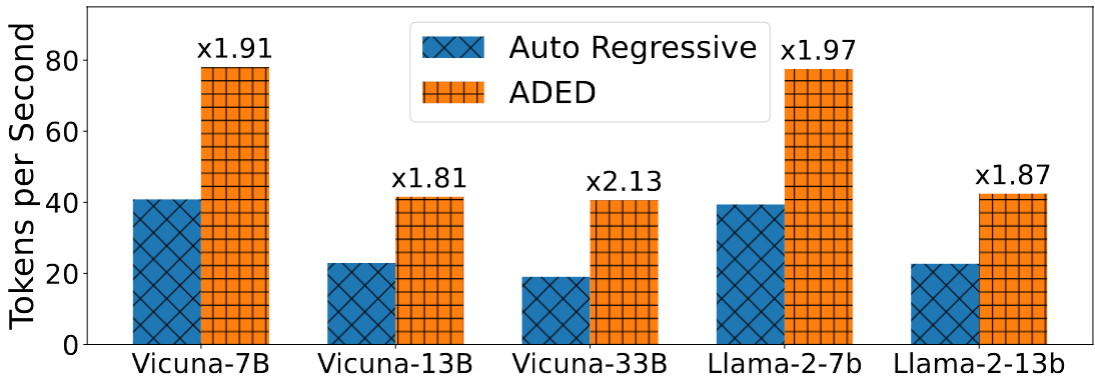

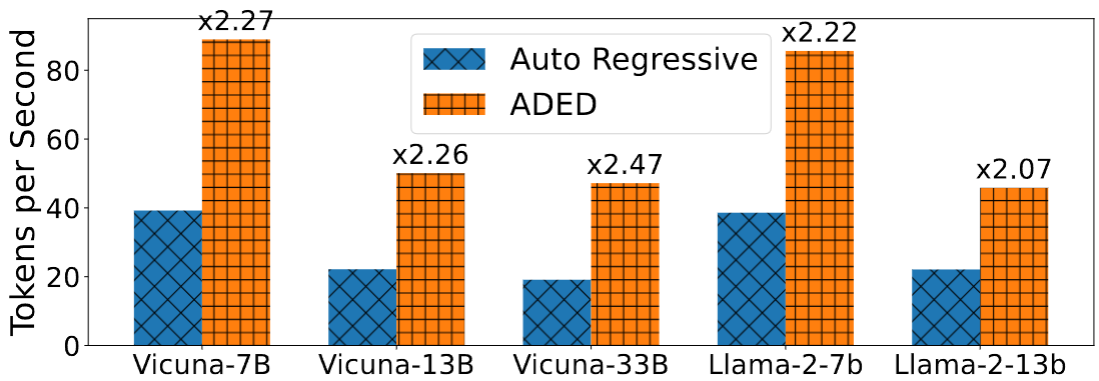

ADED is a retrieval-based speculative decoding method that accelerates large language model (LLM) decoding without fine-tuning, using an adaptive draft-verification process. It adapts dynamically to token probabilities through a tri-gram matrix representation and uses Monte Carlo Tree Search (MCTS) to balance exploration and exploitation, producing accurate drafts quickly. ADED significantly speeds up decoding while maintaining high accuracy, which makes it well suited to practical applications.
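To illustrate the tri-gram idea, the sketch below shows one minimal way to store tri-gram statistics and draft from them. It assumes a simple count table that maps a two-token prefix to next-token counts. `TrigramTable`, `update`, `probs`, and `greedy_draft` are hypothetical names, not ADED's API. ADED chooses among candidate continuations with MCTS. To keep the sketch short, it substitutes a plain greedy choice.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class TrigramTable:
    """Hypothetical tri-gram store: (t1, t2) -> {next_token: count}."""

    def __init__(self) -> None:
        self.counts: Dict[Tuple[int, int], Dict[int, int]] = defaultdict(
            lambda: defaultdict(int)
        )

    def update(self, tokens: List[int]) -> None:
        # Adaptive step: fold newly observed tokens (e.g. accepted output) into the counts.
        for a, b, c in zip(tokens, tokens[1:], tokens[2:]):
            self.counts[(a, b)][c] += 1

    def probs(self, a: int, b: int) -> Dict[int, float]:
        # Normalize the counts stored for prefix (a, b) into probabilities.
        nxt = self.counts.get((a, b), {})
        total = sum(nxt.values())
        return {tok: n / total for tok, n in nxt.items()} if total else {}

    def greedy_draft(self, context: List[int], length: int) -> List[int]:
        # Extend the context (at least 2 tokens) with the most probable next token,
        # stopping early when the current prefix has never been seen.
        # Simplification: ADED uses MCTS here instead of a greedy choice.
        seq = list(context)
        draft: List[int] = []
        for _ in range(length):
            p = self.probs(seq[-2], seq[-1])
            if not p:
                break
            nxt = max(p, key=p.get)
            draft.append(nxt)
            seq.append(nxt)
        return draft


if __name__ == "__main__":
    table = TrigramTable()
    table.update([1, 2, 3, 1, 2, 3, 1, 2, 4])
    print(table.greedy_draft([1, 2], 4))  # [3, 1, 2, 3]
```

Because `update` can be called on tokens produced during decoding, the probabilities shift toward the current context. This is the kind of adaptivity the paragraph above describes, but the specific update rule here is an assumption.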

Large language model (LLM) decoding involves generating a sequence of tokens based on a given context, where each token is predicted one at a time using the model's learned probabilities. The typical autoregressive decoding method requires a separate forward pass through the model for each token generated, which is computationally inefficient and poses challenges for deploying LLMs in latency-sensitive scenarios.
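Speculative decoding avoids this per-token cost. The toy sketch below shows generic greedy draft verification and does not claim to reproduce ADED's exact acceptance rule. A single forward pass of the target model scores every draft position at once. The sketch accepts the longest prefix of the draft that matches the model's predictions, plus one token taken from the model itself. `verify_draft` and `toy_forward` are hypothetical names. `toy_forward` stands in for an LLM forward pass that returns the greedy next-token prediction at every position.

```python
from typing import Callable, List

# A "forward pass": maps a token sequence to the greedy next-token prediction
# at every position, i.e. preds[i] is the prediction after seq[: i + 1].
Forward = Callable[[List[int]], List[int]]


def verify_draft(prefix: List[int], draft: List[int], forward: Forward) -> List[int]:
    """Return the tokens accepted after ONE forward pass (prefix must be non-empty)."""
    preds = forward(prefix + draft)
    start = len(prefix) - 1  # index of the prediction for the first draft position
    accepted: List[int] = []
    for i, tok in enumerate(draft):
        target = preds[start + i]
        if target != tok:
            # First mismatch: keep the model's own token and discard the rest of the draft.
            accepted.append(target)
            return accepted
        accepted.append(tok)
    # Every draft token matched, so the final prediction is a free extra token.
    accepted.append(preds[start + len(draft)])
    return accepted


def toy_forward(seq: List[int]) -> List[int]:
    # Stand-in model: always predicts "previous token + 1 (mod 10)".
    return [(t + 1) % 10 for t in seq]


if __name__ == "__main__":
    print(verify_draft([1, 2, 3], [4, 5, 9], toy_forward))  # [4, 5, 6]
    print(verify_draft([1, 2, 3], [4, 5, 6], toy_forward))  # [4, 5, 6, 7]
```

With a good draft, one pass yields several tokens, whereas autoregressive decoding needs one pass per token. A poor draft still yields at least one token. The output therefore matches plain greedy decoding, and draft quality only affects speed.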

Current acceleration methods are limited by inefficiency and resource demands. They either require fine-tuning a smaller draft model, which is resource-intensive, or rely on fixed retrieval schemes to construct drafts of the next tokens, which lack adaptability and generalize poorly across models and contexts.

```bash
pip install -r requirements.txt
```

Then follow the README.md in DraftRetriever.

Build a chat datastore using data from UltraChat

```bash
cd datastore
python3 get_datastore_chat.py --model-path lmsys/vicuna-7b-v1.5 --large-datastore True
```

Build a Python code generation datastore from The Stack

```bash
cd datastore
python3 get_datastore_code.py --model-path codellama/CodeLlama-7b-instruct-hf --large-datastore True
```

Build a chat datastore using data from ShareGPT

```bash
cd datastore
python3 get_datastore_chat.py --model-path lmsys/vicuna-7b-v1.5
```

The corpus generated by the commands above is larger than the data reported in our paper. To make it easier to test the impact of corpus pruning, the generated corpus retains all 3-grams (it is unpruned). The corpus is pruned when it is read, keeping only the top-12 entries with the highest probabilities (this number can be changed as needed).
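The read-time pruning described above can be sketched as follows. The sketch makes two assumptions. First, the unpruned corpus is held in memory as a mapping from a two-token prefix to next-token counts. Second, "top-12 entries" means the 12 most frequent continuations of each prefix. `prune_trigrams` and this data layout are hypothetical and do not describe the DraftRetriever on-disk format.

```python
import heapq
from typing import Dict, Tuple

Counts = Dict[Tuple[int, int], Dict[int, int]]


def prune_trigrams(counts: Counts, top_k: int = 12) -> Dict[Tuple[int, int], Dict[int, float]]:
    """Keep the top_k most probable continuations for every two-token prefix."""
    pruned: Dict[Tuple[int, int], Dict[int, float]] = {}
    for prefix, nxt in counts.items():
        total = sum(nxt.values())
        # heapq.nlargest avoids fully sorting prefixes with many continuations.
        best = heapq.nlargest(top_k, nxt.items(), key=lambda kv: kv[1])
        # Probabilities are relative to the unpruned total, so they may sum to < 1.
        pruned[prefix] = {tok: n / total for tok, n in best}
    return pruned


if __name__ == "__main__":
    counts = {(1, 2): {3: 5, 4: 3, 5: 2}}
    print(prune_trigrams(counts, top_k=2))  # {(1, 2): {3: 0.5, 4: 0.3}}
```

Storing the full counts and pruning only at load time means `top_k` can be changed without rebuilding the datastore, which matches the motivation given above.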

Inference on MT-Bench

```bash
cd llm_judge
CUDA_VISIBLE_DEVICES=0 python gen_model_answer_aded.py --model-path lmsys/vicuna-7b-v1.5 --model-id vicuna-7b-v1.5 --datastore-path ../datastore/datastore_chat_large.idx
```

Inference on HumanEval

```bash
cd human_eval
CUDA_VISIBLE_DEVICES=0 python aded_test.py --model-path lmsys/vicuna-7b-v1.5 --datastore-path ../datastore/datastore_stack_large.idx
```

The codebase is mainly built on REST, with some code from Medusa. It is also influenced by remarkable projects from the LLM community, including FastChat, TinyChat, vLLM, and many others.