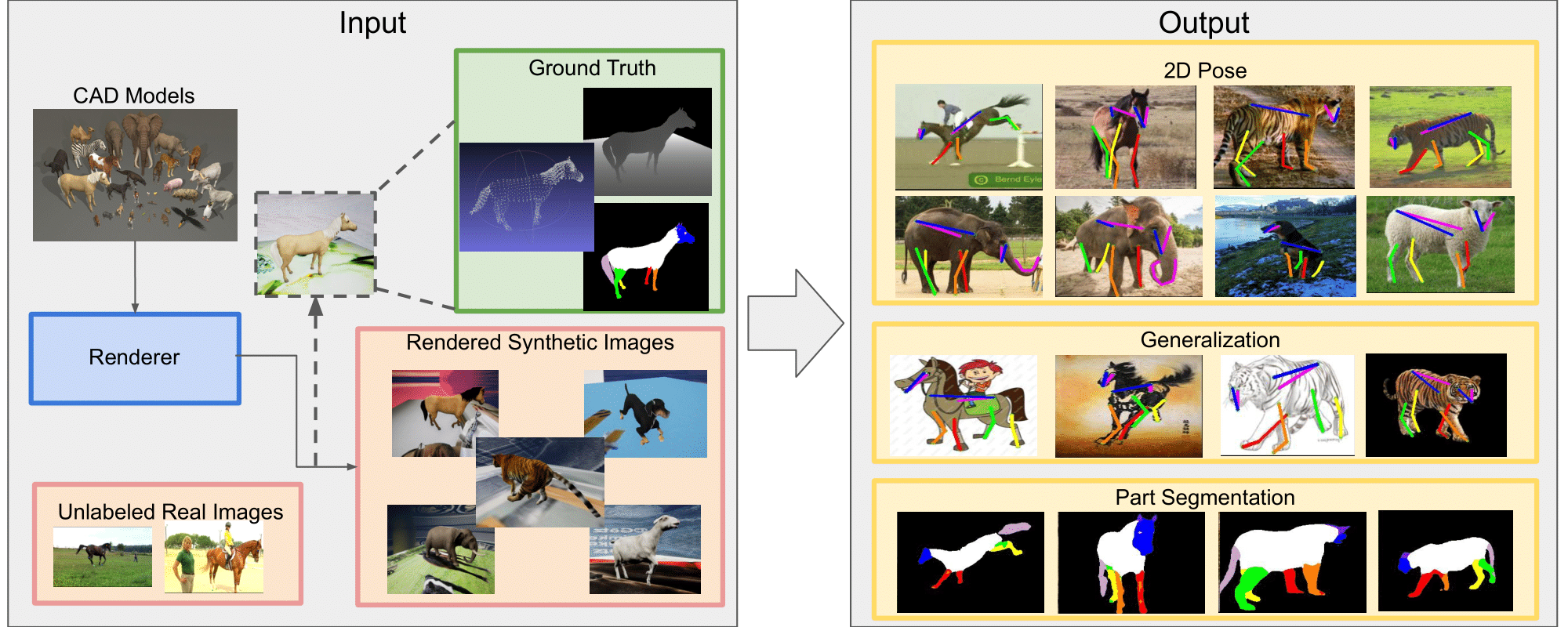

Learning from Synthetic Animals

Code for Learning from Synthetic Animals (CVPR 2020, oral). The code is built on the PyTorch framework (1.1.0) with Python 3.7.3. This repo includes training code for consistency-constrained semi-supervised learning and a synthetic animal dataset.

Citation

If you find our code or method helpful, please cite it with the following BibTeX entry.

@InProceedings{Mu_2020_CVPR,
  author = {Mu, Jiteng and Qiu, Weichao and Hager, Gregory D. and Yuille, Alan L.},
  title = {Learning From Synthetic Animals},
  booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2020}
}

Requirements

- PyTorch (tested with 1.1.0): Please follow the PyTorch installation instructions.

- Other packages, e.g. imgaug 0.3.0, opencv 4.1.1, scipy 1.2.1
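To compare an environment against the versions listed above, a minimal sketch follows. The helper names and the use of import names as dictionary keys (`torch`, `cv2`) are assumptions of this example and are not part of the repository. Only the major and minor components are compared.

```python
# Versions the requirements list reports as tested. Keys are import names,
# which is an assumption of this sketch (the list names PyTorch, imgaug,
# opencv, and scipy).
TESTED = {"torch": "1.1.0", "imgaug": "0.3.0", "cv2": "4.1.1", "scipy": "1.2.1"}


def major_minor(version):
    """Return (major, minor) as ints, e.g. '1.1.0' -> (1, 1)."""
    parts = (version.split(".") + ["0"])[:2]
    return int(parts[0]), int(parts[1])


def compare_versions(installed, tested=TESTED):
    """Label each tested package as 'ok', 'differs', or 'missing'."""
    report = {}
    for name, wanted in tested.items():
        found = installed.get(name)
        if found is None:
            report[name] = "missing"
        elif major_minor(found) == major_minor(wanted):
            report[name] = "ok"
        else:
            report[name] = "differs"
    return report
```

The `installed` dictionary can be filled from each module's `__version__` attribute, for example `{"torch": torch.__version__, "scipy": scipy.__version__}`. A result of `differs` does not mean the code will fail, only that the version was not the one tested.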

Installation

- Clone the repository with submodule.

git clone https://github.com/JitengMu/Learning-from-Synthetic-Animals

- Go to the directory Learning-from-Synthetic-Animals/ and create a symbolic link to the images directory of the animal dataset:

ln -s PATH_TO_IMAGES_DIR ./animal_data

- Download and pre-process the datasets:

  - Download the TigDog Dataset and move the folder behaviorDiscovery2.0 to ./animal_data.

  - Run python get_cropped_TigDog.py to get the cropped TigDog Dataset.

  - Download the Synthetic Animal Dataset with the script bash get_dataset.sh.
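Before running the pre-processing script, it can help to confirm that the symbolic link and the TigDog folder are where the steps above put them. The following is a minimal sketch; `missing_paths` and `EXPECTED` are hypothetical names introduced here, and the two paths are taken from the installation steps.

```python
from pathlib import Path

# Paths the installation steps are expected to produce, relative to the
# repository root. Extend the tuple if your setup needs more entries.
EXPECTED = ("animal_data", "animal_data/behaviorDiscovery2.0")


def missing_paths(repo_root, expected=EXPECTED):
    """Return the expected paths that do not exist under repo_root.

    Path.exists() follows symbolic links, so a dangling animal_data link
    is reported as missing.
    """
    root = Path(repo_root)
    return [rel for rel in expected if not (root / rel).exists()]
```

Calling `missing_paths(".")` from the repository root returns an empty list when both paths are present.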

High level organization

- ./data for train/val split and dataset statistics (mean/std).

- ./train for training scripts.

- ./evaluation for inference scripts.

- ./CC-SSL for consistency-constrained semi-supervised learning.

- ./pose for stacked hourglass model.

- ./data_generation for synthetic animal dataset generation.

Demo

- Download the checkpoint with the script bash get_checkpoint.sh. The structure looks like:

checkpoint
│
└───real_animal
│   │   horse
│   └   tiger
│
└───synthetic_animal
    │   horse
    │   tiger
    └   others

- Run the demo.ipynb to visualize predictions.

- Evaluate the accuracy on the TigDog Dataset. (The 18 per-joint accuracies are in the order of left-eye, right-eye, chin, left-front-hoof, right-front-hoof, left-back-hoof, right-back-hoof, left-front-knee, right-front-knee, left-back-knee, right-back-knee, left-shoulder, right-shoulder, left-front-elbow, right-front-elbow, left-back-elbow, right-back-elbow)

CUDA_VISIBLE_DEVICES=0 python ./evaluation/test.py --dataset1 synthetic_animal_sp --dataset2 real_animal_sp --arch hg --resume ./checkpoint/synthetic_animal/horse/horse_ccssl/synthetic_animal_sp.pth.tar --evaluate --animal horse

Train

- Training on synthetic animal dataset.

CUDA_VISIBLE_DEVICES=0 python train/train.py --dataset synthetic_animal_sp -a hg --stacks 4 --blocks 1 --image-path ./animal_data/ --checkpoint ./checkpoint/horse/syn --animal horse

- Generate pseudo-labels for TigDog dataset and jointly train on synthetic animal and TigDog datasets.

CUDA_VISIBLE_DEVICES=0 python CCSSL/CCSSL.py --num-epochs 60 --checkpoint ./checkpoint/horse/ssl/ --resume ./checkpoint/horse/syn/model_best.pth.tar --animal horse

- Evaluate the accuracy on the TigDog Dataset using the PCK@0.05 metric.

CUDA_VISIBLE_DEVICES=0 python ./evaluation/test.py --dataset1 synthetic_animal_sp --dataset2 real_animal_sp --arch hg --resume ./checkpoint/horse/ssl/synthetic_animal_sp.pth.tar --evaluate --animal horse

Please refer to TRAINING.md for detailed training recipes!
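For readers unfamiliar with the PCK@0.05 metric used in the evaluation step, here is a minimal sketch of the general idea: a predicted keypoint counts as correct when it lies within 0.05 times a normalization length of the ground truth. The function name `pck`, the `visible` mask, and the choice of `norm_size` (for example a bounding-box side length) are assumptions of this example. The normalization used by ./evaluation/test.py may differ.

```python
import math


def pck(pred, gt, visible, norm_size, alpha=0.05):
    """Fraction of visible keypoints predicted within alpha * norm_size.

    pred, gt: sequences of (x, y) pairs in the same pixel coordinates.
    visible:  sequence of booleans; invisible keypoints are skipped.
    """
    threshold = alpha * norm_size
    hits = 0
    total = 0
    for (px, py), (gx, gy), vis in zip(pred, gt, visible):
        if not vis:
            continue
        total += 1
        if math.hypot(px - gx, py - gy) <= threshold:
            hits += 1
    # No visible keypoints means the score is undefined.
    return hits / total if total else float("nan")
```

With `norm_size=100` the threshold is 5 pixels, so a prediction 2 pixels off counts as correct and one 20 pixels off does not.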

Generate synthetic animal dataset using Unreal Engine

- Download and install the unrealcv_binary for Linux (tested in Ubuntu 16.04) with bash get_unrealcv_binary.sh

- Run Unreal Engine.

DISPLAY= ./data_generation/unrealcv_binary/LinuxNoEditor/AnimalParsing/Binaries/Linux/AnimalParsing -cvport 9900

- Run the following script to generate images and ground truth (images, depths, keypoints)

python data_generation/unrealdb/example/animal_example/animal_data.py --animal horse --random-texture-path ./data_generation/val2017/ --use-random-texture --num-imgs 10

Generated data is saved in ./data_generation/generated_data/ by default.

- Run the following script to generate part segmentations (supports horse and tiger)

python data_generation/generate_partseg.py --animal horse --dataset-path ./data_generation/generated_data/
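When generating data for more than one animal, the two commands above can be assembled programmatically. The sketch below only builds the argument lists and does not run anything. `generation_commands` is a hypothetical helper, the flags are copied from the commands above, and it assumes the image generation script accepts the same animal names as the part segmentation step.

```python
def generation_commands(animals, num_imgs=10,
                        texture_dir="./data_generation/val2017/",
                        out_dir="./data_generation/generated_data/"):
    """Return one (render, partseg) pair of argument lists per animal."""
    commands = []
    for animal in animals:
        # Images and ground truth (images, depths, keypoints).
        render = [
            "python",
            "data_generation/unrealdb/example/animal_example/animal_data.py",
            "--animal", animal,
            "--random-texture-path", texture_dir,
            "--use-random-texture",
            "--num-imgs", str(num_imgs),
        ]
        # Part segmentations for the generated data.
        partseg = [
            "python", "data_generation/generate_partseg.py",
            "--animal", animal,
            "--dataset-path", out_dir,
        ]
        commands.append((render, partseg))
    return commands
```

Each list can be passed to `subprocess.run(cmd, check=True)` from the repository root while the Unreal Engine binary is running.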

Acknowledgement

- Wei Yang's Stacked Hourglass Model

- Yunsheng Li's Bidirectional Learning for Domain Adaptation of Semantic Segmentation