Keno Moenck · Duc Trung Thieu · Julian Koch · Thorsten Schüppstuhl

Hamburg University of Technology (TUHH), Institute of Aircraft Production Technology (IFPT), Germany

We present the Industrial Language-Image Dataset (ILID), a small, web-crawled dataset of language-image samples from various web catalogs, representing parts and components from the industrial domain.

Currently, the dataset has 12,537 valid samples from five different web catalogs, covering a diverse range of products: from small standard elements, such as hinges, linear motion elements, bearings, or clamps, to larger ones, such as scissor lifts and pallet trucks (see Samples for an excerpt).

In initial studies, we applied different transfer learning approaches to CLIP (Contrastive Language-Image Pretraining) to enable a variety of downstream tasks, ranging from object- and material-level classification to language-guided segmentation.

The generation of the dataset followed six steps: selecting suitable sources, web crawling, pre-filtering, processing, post-filtering, and a download stage.

With ILID, we aim to take a step towards using vision foundation models (VFMs) in machine vision applications by bringing the industrial context into CLIP; our work also demonstrates effective self-supervised transfer learning from the dataset.

To date, our studies have only used three of the five natural-language labels, but we encourage you to use or extend the dataset in your own studies for further tasks and evaluation. You can find our training and evaluation code at kenomo/industrial-clip.

On request, we provide the final post-processed metadata of the dataset to recreate it. Send an email to 📧 Keno Moenck.

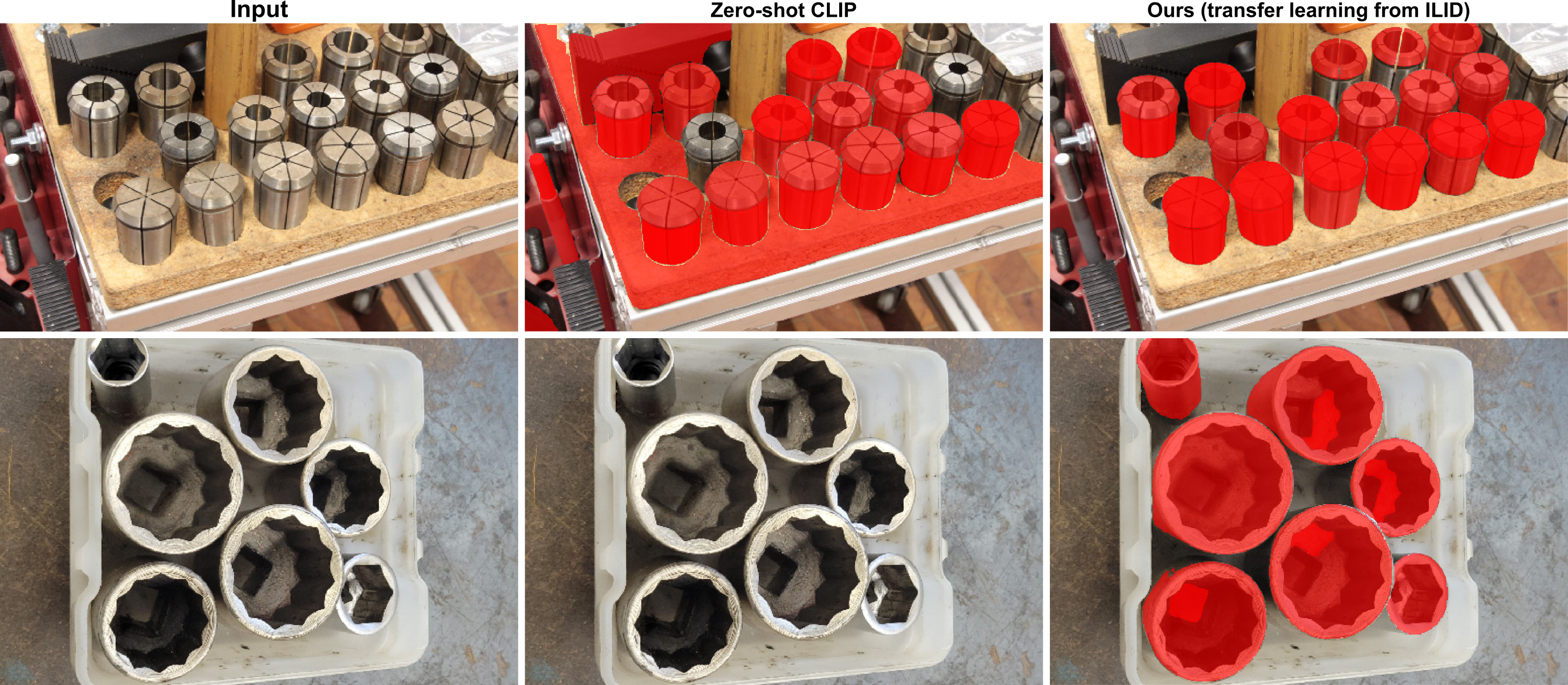

Language-guided segmentation results for the prompts "collet" and "socket", compared to zero-shot CLIP under the same settings (see the publication for more model and training details).

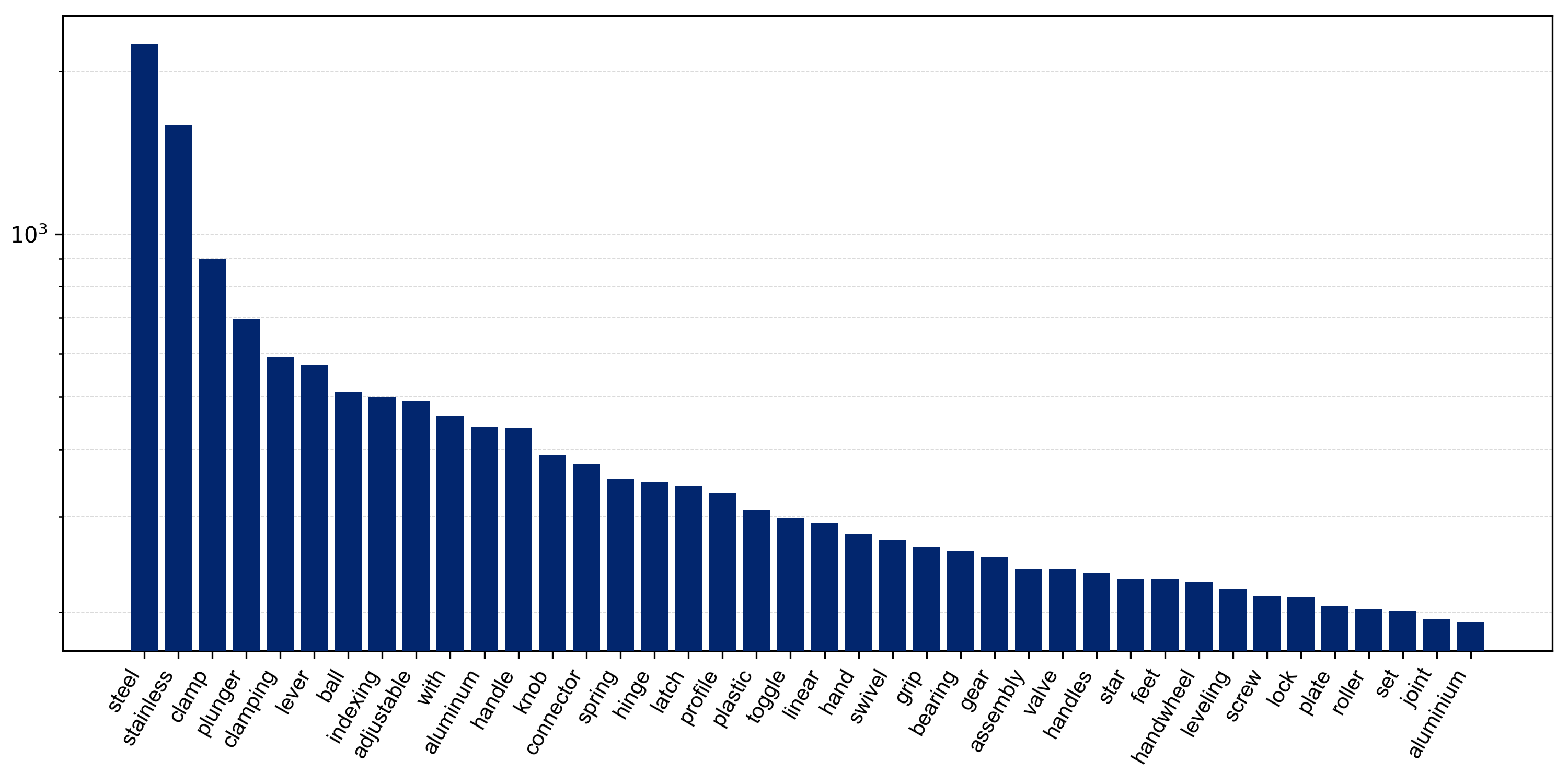

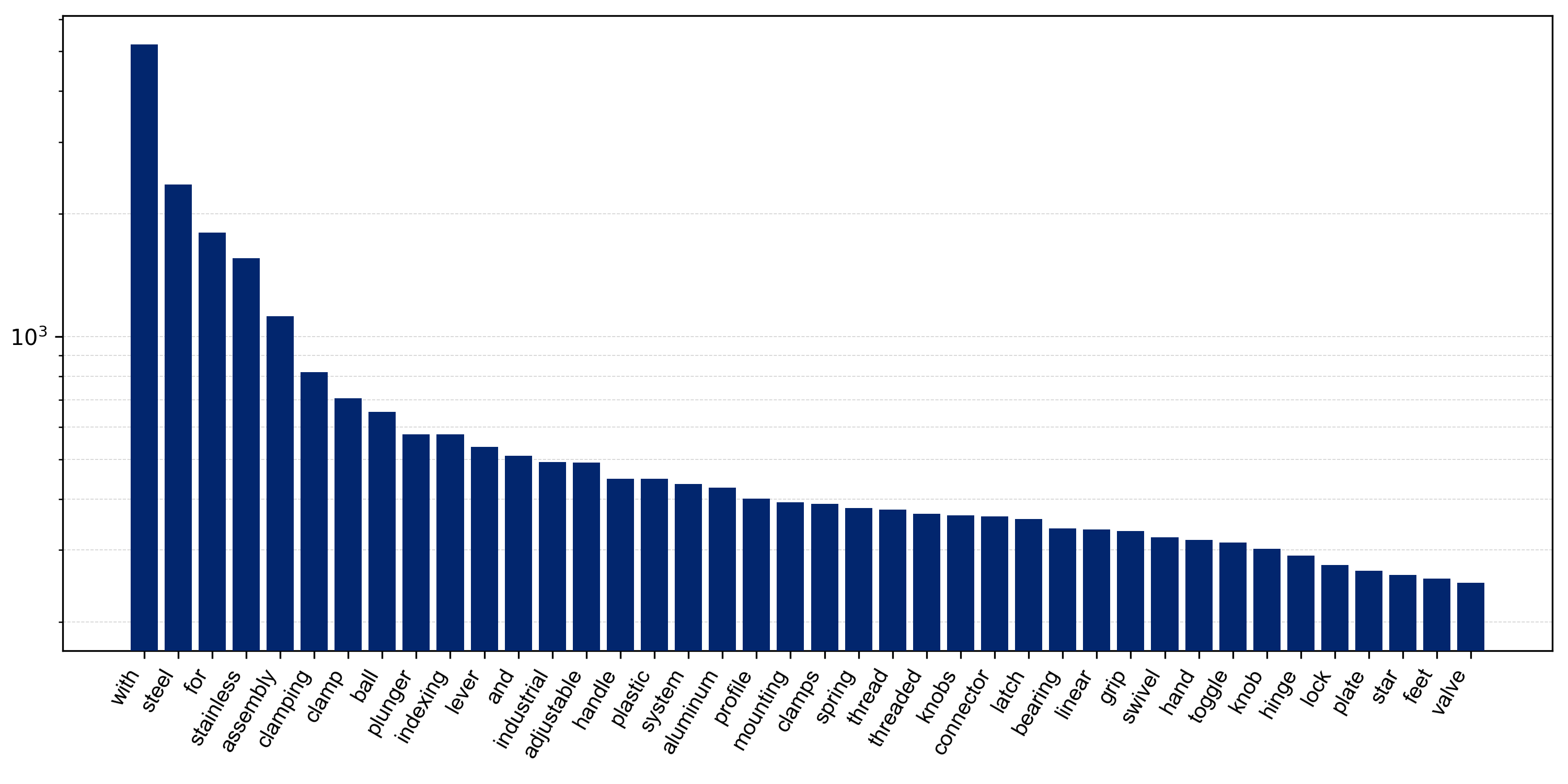

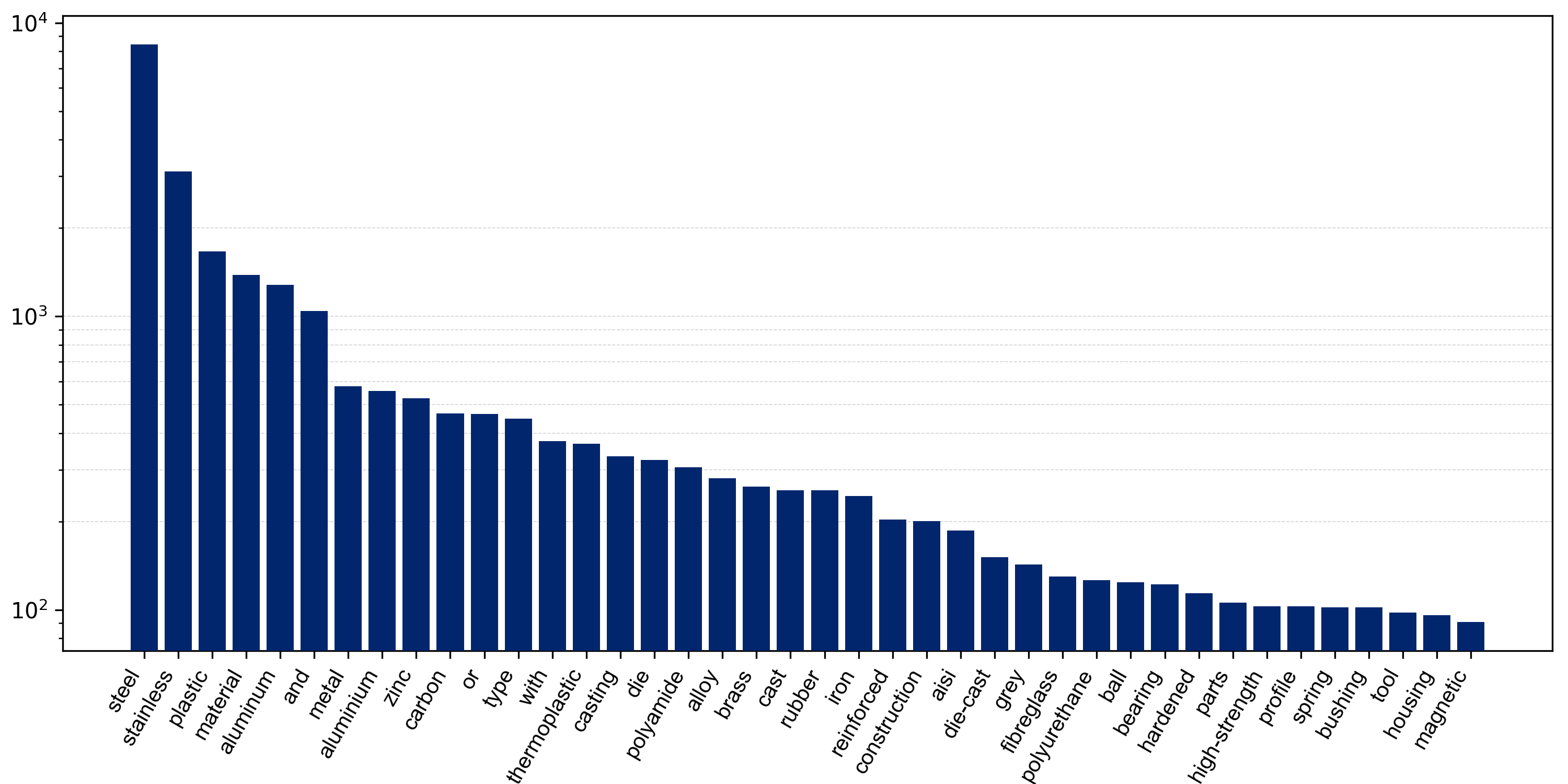

| Top-40 word frequencies | ||

|---|---|---|

| label_short | label_long | material |

|

|

|

Each sample has five natural-language labels (label_short, label_long, description, material, and material_finish) and follows this structure:

```json
{
  "id": "<<uuid>>",
  "image": "<<label_short>>/<<uuid>>.png",
  "url": "<<original image url>>",
  "label_short": "<<a short label describing the product>>",
  "label_long": "<<a longer label, longer than the short label>>",
  "description": "<<a longer description>>",
  "material": "<<the product's material>>",
  "material_finish": "<<the finish or color of the product>>",
  "source": "<<the source of the sample>>"
}
```

On request, we provide ILID's metadata, which you can use to download the images; the language labels are already included in the provided JSON file. If you want to extend the dataset (i.e., add data from additional stores), follow the sections starting from Writing a spider. Otherwise, continue by downloading the images using the provided ILID JSON file.
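For orientation, here is a minimal Python sketch for reading the metadata into typed records. It assumes that `ilid.json` is a JSON array of objects with the keys shown above; `IlidSample`, `parse_samples`, and `load_samples` are hypothetical helpers, not part of the repository.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, fields
from pathlib import Path


@dataclass(frozen=True)
class IlidSample:
    """One ILID record; field names follow the structure shown above."""
    id: str
    image: str
    url: str
    label_short: str
    label_long: str
    description: str
    material: str
    material_finish: str
    source: str


# All keys a record must provide to be converted into an IlidSample.
REQUIRED_KEYS = {f.name for f in fields(IlidSample)}


def parse_samples(records: list[dict]) -> list[IlidSample]:
    """Convert raw JSON objects to IlidSample, skipping records with missing keys."""
    samples = []
    for record in records:
        if REQUIRED_KEYS.issubset(record):
            # Extra keys are ignored so the sketch tolerates additional metadata.
            samples.append(IlidSample(**{key: record[key] for key in REQUIRED_KEYS}))
    return samples


def load_samples(path: str | Path) -> list[IlidSample]:
    """Read the metadata file (assumed to be a JSON array of objects)."""
    with open(path, encoding="utf-8") as f:
        return parse_samples(json.load(f))
```

For example, `load_samples("./data/ilid.json")` would return one `IlidSample` per complete record. Skipping incomplete records instead of raising is a design choice of this sketch; a stricter loader could raise on the first malformed entry.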

The repository contains a `.devcontainer/devcontainer.json` that provides all necessary dependencies.

```bash
python download.py \
  --dataset ./data/ilid.json \
  --folder ./data/images \
  --image_width 512 \
  --image_height 512
```

First, identify a suitable web source (and its product sitemap.xml) from which to crawl data. Using the browser's developer console, identify the relevant DOM elements from which to yield data. Then use the Scrapy shell to access a store and test whether you have found the correct elements.

```bash
scrapy shell https://uk.rs-online.com/web/p/tubing-and-profile-struts/7613313
```
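Before writing a spider, it can help to check which product URLs a store's sitemap exposes. The following standard-library sketch assumes a plain `<urlset>` sitemap in the sitemaps.org namespace and that product pages share a recognizable path segment such as `/web/p/`; the default pattern, the function name `product_urls`, and the second (category) URL in the example are illustrative assumptions.

```python
import re
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def product_urls(sitemap_xml: str, pattern: str = r"/web/p/") -> list[str]:
    """Return all <loc> URLs of a <urlset> sitemap that match `pattern`."""
    root = ET.fromstring(sitemap_xml)
    locs = (el.text.strip() for el in root.findall("sm:url/sm:loc", SITEMAP_NS) if el.text)
    return [url for url in locs if re.search(pattern, url)]


# Hypothetical sitemap: one product page and one made-up category page.
example = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://uk.rs-online.com/web/p/tubing-and-profile-struts/7613313</loc></url>
  <url><loc>https://uk.rs-online.com/web/c/tools/</loc></url>
</urlset>"""

print(product_urls(example))
```

Large stores often publish a `<sitemapindex>` that points to several child sitemaps; this sketch does not follow such indices.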

Write a spider following the example given in example/spider.py. Then, run the spider using:

```bash
scrapy runspider <<shop>>/spider.py -O data/<<shop>>_raw.json
```
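Your spider should follow `example/spider.py`, which is not reproduced here. Purely to illustrate what a parsing callback does with the DOM elements identified earlier, the sketch below uses Python's standard-library `html.parser` as a stand-in for Scrapy's selectors. The chosen elements (the first `<h1>` and an `og:image` meta tag), the class `ProductPageParser`, the function `extract_item`, and the output keys are all hypothetical; real stores need their own selectors.

```python
from html.parser import HTMLParser


class ProductPageParser(HTMLParser):
    """Collect the text of the first <h1> and the og:image URL of a product page."""

    def __init__(self):
        super().__init__()
        self.image_url = None
        self._title_parts = []
        self._in_h1 = False
        self._h1_done = False

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "h1" and not self._h1_done:
            self._in_h1 = True
        elif tag == "meta" and attr_map.get("property") == "og:image" and self.image_url is None:
            self.image_url = attr_map.get("content")

    def handle_endtag(self, tag):
        if tag == "h1" and self._in_h1:
            # Only the first <h1> is used as the product title.
            self._in_h1 = False
            self._h1_done = True

    def handle_data(self, data):
        if self._in_h1 and data.strip():
            self._title_parts.append(data.strip())

    @property
    def title(self):
        return " ".join(self._title_parts) or None


def extract_item(url, html):
    """Return a raw item dict similar to what a spider might yield."""
    parser = ProductPageParser()
    parser.feed(html)
    parser.close()
    return {"url": url, "title": parser.title, "image_url": parser.image_url}
```

In an actual Scrapy spider, the equivalent extraction would typically use selectors on the response object, e.g., `response.css("h1::text").get()`.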

- Pre-filter the data, e.g., remove trade names using the `--regex` argument:

  ```bash
  python pre-filtering.py \
    --file ./data/<<shop>>_raw.json \
    --output ./data/<<shop>>_prefiltered.json \
    --regex "(ameise|proline|basic)"
  ```

- Process the data. You need a Hugging Face access token to access the model passed as `--model "meta-llama/Meta-Llama-3-8B-Instruct"`. To process only a subset of the data, use the `--debug` argument; e.g., `--debug 0.1` processes only a tenth of the data:

  ```bash
  python processing.py \
    --file ./data/<<shop>>_prefiltered.json \
    --output ./data/<<shop>>_processed.json \
    --access_token "<<huggingface access token>>"
  ```

- Apply post-filtering and assemble a combined dataset file from all processed shop data:

  ```bash
  python post-filtering.py \
    --file ./data/<<shop>>_processed.json \
    --output ./data/<<shop>>_postfiltered.json

  python assemble.py \
    --file ./data/<<shop>>_postfiltered.json \
    --dataset ./data/dataset.json \
    --source_tag "<<shop>>"
  ```

- Finally, run `download.py` as described above.
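The internals of `pre-filtering.py` and `assemble.py` are not described here. As a rough illustration only, the two steps could look like the following sketch. It assumes each shop file is a JSON array of objects, that pre-filtering deletes case-insensitive regex matches from selected text fields (the default keys `title` and `description` are hypothetical raw-crawl fields), and that assembly adds a UUID, the `source` tag, and the `<<label_short>>/<<uuid>>.png` image path from the structure above. `strip_pattern` and `assemble` are hypothetical names, not the repository's API.

```python
import re
import uuid


def strip_pattern(items, pattern, keys=("title", "description")):
    """Remove case-insensitive regex matches (e.g., trade names) from text fields."""
    regex = re.compile(pattern, re.IGNORECASE)
    cleaned = []
    for item in items:
        item = dict(item)  # copy so the input records are not mutated
        for key in keys:
            if isinstance(item.get(key), str):
                # Delete the matches, then collapse the leftover whitespace.
                item[key] = " ".join(regex.sub("", item[key]).split())
        cleaned.append(item)
    return cleaned


def assemble(dataset, items, source_tag):
    """Append processed shop items to a combined dataset list."""
    for item in items:
        sample_id = str(uuid.uuid4())
        dataset.append({
            **item,
            "id": sample_id,
            # Image path layout taken from the dataset structure: <label_short>/<uuid>.png
            "image": f"{item['label_short']}/{sample_id}.png",
            "source": source_tag,
        })
    return dataset
```

Note that a pattern like `(ameise|proline|basic)` has no word boundaries, so it also matches inside longer words (e.g., "basically"); `\b(ameise|proline|basic)\b` avoids that.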

Some samples from the dataset (source: MÄDLER GmbH):

You are welcome to submit issues, send pull requests, or share ideas with us. For any other questions, please contact 📧 Keno Moenck.

If you find ILID useful for your work or research, please cite:

```bibtex
@misc{Moenck.14.06.2024,
  author = {Moenck, Keno and Thieu, Duc Trung and Koch, Julian and Sch{\"u}ppstuhl, Thorsten},
  title  = {Industrial Language-Image Dataset (ILID): Adapting Vision Foundation Models for Industrial Settings},
  date   = {2024-06-14},
  year   = {2024},
  url    = {http://arxiv.org/pdf/2406.09637},
  doi    = {10.48550/arXiv.2406.09637}
}
```