The goal of this project is to recognize the DVD covers in a given image and retrieve them from a database. The recognition scheme is based on the paper by Nistér and Stewénius, Scalable Recognition with a Vocabulary Tree [1]. The paper presents a recognition scheme that scales efficiently to a large number of objects.

Organize the project files in the following directory structure.

    +-- src
    |   +-- database.py
    |   +-- feature.py
    |   +-- homography.py
    +-- data
    |   +-- DVDcovers
    |   |   +-- DVD_name.jpg
    |   |   +-- .
    |   |   +-- .
    |   |   +-- .
    |   +-- test
    |   |   +-- image_01.jpg
    |   |   +-- image_02.jpg
    |   |   +-- .
    |   |   +-- .
    |   |   +-- .
    +-- docs

To build the database and run a query:

    cd src
    python database.py

Process of Building the database

Initial the Database

Loading the images from ../data/DVDcovers, use SIFT for features

Building Vocabulary Tree, with 5 clusters, 5 levels

Building Histgram for each images

Building BoW for each images

Saving the database to data_sift.txt

Process of querying

Loading the database

0: scores: -2.795583265523189, image: ../data/DVDcovers/antz.jpg

1: scores: -1.9157748210404475, image: ../data/DVDcovers/o_brother_where_art_thou.jpg

2: scores: -1.9093178618880344, image: ../data/DVDcovers/mystic_river.jpg

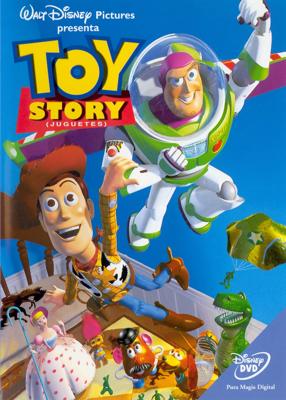

3: scores: -1.7939076605071755, image: ../data/DVDcovers/toy_story.jpg

4: scores: -1.7509253310436343, image: ../data/DVDcovers/world_war_Z.jpg

5: scores: -1.746503545605861, image: ../data/DVDcovers/anastasia.jpg

6: scores: -1.7339252689292404, image: ../data/DVDcovers/wanted.jpg

7: scores: -1.7081165120755246, image: ../data/DVDcovers/once.jpg

8: scores: -1.6730278848379108, image: ../data/DVDcovers/indiana_jones_and_the_raiders_of_the_lost_ark.jpg

9: scores: -1.6599345737901579, image: ../data/DVDcovers/coyote_ugly.jpg

Running RANSAC... Image: ../data/DVDcovers/antz.jpg Inliers: 15

Running RANSAC... Image: ../data/DVDcovers/o_brother_where_art_thou.jpg Inliers: 16

Running RANSAC... Image: ../data/DVDcovers/mystic_river.jpg Inliers: 8

Running RANSAC... Image: ../data/DVDcovers/toy_story.jpg Inliers: 61

Running RANSAC... Image: ../data/DVDcovers/world_war_Z.jpg Inliers: 3

Running RANSAC... Image: ../data/DVDcovers/anastasia.jpg Inliers: 4

Running RANSAC... Image: ../data/DVDcovers/wanted.jpg Inliers: 4

Running RANSAC... Image: ../data/DVDcovers/once.jpg Inliers: 3

Running RANSAC... Image: ../data/DVDcovers/indiana_jones_and_the_raiders_of_the_lost_ark.jpg Inliers: 3

Running RANSAC... Image: ../data/DVDcovers/coyote_ugly.jpg Inliers: 4

The best match image: ../data/DVDcovers/toy_story.jpg

Homography: [[ 6.54908715e-06 1.12769156e-03 6.50655466e-01]

[-1.14684312e-03 2.29689828e-05 7.59370040e-01]

[-5.25944125e-08 4.10266206e-09 1.42116071e-03]]

I tried both SIFT and ORB and found that SIFT is more accurate, whereas ORB is faster. You can select either SIFT or ORB in the code.

The first step is to build the database using a vocabulary tree. I first use SIFT to extract the feature descriptors for each DVD cover image and then build a vocabulary tree from those descriptors.
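As an illustration of the tree-building step, here is a minimal sketch of hierarchical k-means using only NumPy. It is not the code in `database.py`: the function names (`kmeans`, `build_tree`, `quantize`), the dictionary-based node layout, and the random vectors standing in for SIFT descriptors are all assumptions made for the example.

```python
import numpy as np

def assign(points, centers):
    """Index of the nearest center for every point."""
    dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
    return dists.argmin(axis=1)

def kmeans(points, k, rng, iters=10):
    """Plain Lloyd's k-means; returns one cluster label per point."""
    centers = points[rng.choice(len(points), size=k, replace=False)].astype(float)
    for _ in range(iters):
        labels = assign(points, centers)
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:
                centers[j] = members.mean(axis=0)
    return assign(points, centers)

def build_tree(points, k, depth, rng, leaves):
    """Recursively split the descriptors; every leaf becomes one visual word."""
    node = {"center": points.mean(axis=0), "children": [], "word": None}
    if depth == 0 or len(points) < k:
        node["word"] = len(leaves)  # leaf index doubles as the visual-word id
        leaves.append(node)
        return node
    labels = kmeans(points, k, rng)
    for j in range(k):
        members = points[labels == j]
        if len(members) > 0:
            node["children"].append(build_tree(members, k, depth - 1, rng, leaves))
    return node

def quantize(tree, descriptor):
    """Walk down the tree, following the nearest child at each level."""
    node = tree
    while node["children"]:
        node = min(node["children"],
                   key=lambda child: np.linalg.norm(descriptor - child["center"]))
    return node["word"]

# Random vectors stand in for real 128-D SIFT descriptors (assumption).
rng = np.random.default_rng(0)
descriptors = rng.random((300, 128))
leaves = []
tree = build_tree(descriptors, k=3, depth=2, rng=rng, leaves=leaves)
word = quantize(tree, descriptors[0])
```

The run shown in the log above uses 5 clusters and 5 levels; the smaller values here only keep the example fast.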

For each image in the dataset, the bag of visual words (BoW) is a descriptor that summarizes the entire image by its distribution of visual word occurrences. In my implementation, I keep a dictionary that maps each image to its BoW. This dictionary can be built while building the tree. Using the BoW saves a lot of time compared with robustly matching every feature descriptor.
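The image-to-BoW dictionary can be pictured with a short sketch. The visual-word ids below are made up for illustration, and `bag_of_words` is a hypothetical helper, not a function from this repository.

```python
from collections import Counter

def bag_of_words(word_ids):
    """Count how often each visual word occurs in one image."""
    return Counter(word_ids)

# Hypothetical quantizer output: one visual-word id per feature descriptor.
words_per_image = {
    "toy_story.jpg": [3, 3, 7, 12],
    "antz.jpg": [7, 7, 9],
}

# Dictionary that maps each image to its BoW.
image_to_bow = {name: bag_of_words(ids) for name, ids in words_per_image.items()}
```

Each BoW is sparse: only the visual words that actually occur in an image are stored.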

When querying an image, I first calculate the BoW of the query image. While doing that, I obtain a list of target images from the database that share at least one visual word with the query. Then I score each pair of query image and target image to find the top K most similar images.
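One way to collect the target images that share a visual word with the query is an inverted index from visual word to images. The sketch below assumes that layout; `build_inverted_index` and `candidates` are illustrative names, and the actual implementation may store this information differently.

```python
from collections import defaultdict

def build_inverted_index(image_to_bow):
    """Map every visual word to the set of images that contain it."""
    index = defaultdict(set)
    for image, bow in image_to_bow.items():
        for word in bow:
            index[word].add(image)
    return index

def candidates(index, query_bow):
    """Images sharing at least one visual word with the query."""
    found = set()
    for word in query_bow:
        found |= index.get(word, set())
    return found

# Made-up BoWs: visual-word id -> occurrence count.
image_to_bow = {"a.jpg": {1: 2, 2: 1}, "b.jpg": {3: 1}}
index = build_inverted_index(image_to_bow)
targets = candidates(index, {2: 1, 5: 4})
```

Only the images in `targets` need to be scored, so images with no word in common with the query are never touched.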

I use the scoring method defined in the paper [1], which compares two descriptor vectors: one for the query image and one for the database image.
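For reference, the paper weights each visual word by an inverse-document-frequency term, normalizes the query and database vectors, and takes the norm of their difference. The sketch below follows that description with the L1 norm, so it returns a distance between 0 and 2 where lower means more similar. It is an assumption that this mirrors the repository code; the scores in the log above are negative, so the implementation evidently uses a different sign or form. All function names are illustrative.

```python
import math

def idf_weights(image_to_bow):
    """w_i = ln(N / N_i): N images in total, N_i of them contain word i."""
    n_images = len(image_to_bow)
    doc_freq = {}
    for bow in image_to_bow.values():
        for word in bow:
            doc_freq[word] = doc_freq.get(word, 0) + 1
    return {word: math.log(n_images / df) for word, df in doc_freq.items()}

def weighted(bow, weights):
    """Scale counts by the word weights, then L1-normalize the vector."""
    vec = {word: count * weights.get(word, 0.0) for word, count in bow.items()}
    norm = sum(abs(value) for value in vec.values())
    return {word: value / norm for word, value in vec.items()} if norm > 0 else vec

def score(query_bow, db_bow, weights):
    """L1 distance between the normalized, weighted vectors."""
    q = weighted(query_bow, weights)
    d = weighted(db_bow, weights)
    return sum(abs(q.get(w, 0.0) - d.get(w, 0.0)) for w in set(q) | set(d))

# Made-up BoWs: visual-word id -> occurrence count.
image_to_bow = {"a": {1: 2, 2: 1}, "b": {2: 1, 3: 4}, "c": {4: 1}}
weights = idf_weights(image_to_bow)
distance = score(image_to_bow["a"], image_to_bow["b"], weights)
```

Words that appear in every image get weight ln(1) = 0 and therefore do not influence the score.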

Since the top K images may all have many visual words in common with the query, I use RANSAC (2000 iterations) to estimate the homography matrix for each pair, and then pick the image whose homography yields the largest number of inliers.
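The verification step can be sketched as follows, assuming matched keypoint coordinates are already available as two `(n, 2)` NumPy arrays. This is a plain NumPy illustration of RANSAC with a direct linear transform, not the code in `homography.py`; the 3-pixel inlier threshold and the function names are assumptions.

```python
import numpy as np

def fit_homography(src, dst):
    """Direct linear transform: H maps src points to dst points (n >= 4)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)

def project(H, pts):
    """Apply H to (n, 2) points using homogeneous coordinates."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, iters=2000, threshold=3.0, seed=0):
    """Return the homography with the most inliers and that inlier count."""
    rng = np.random.default_rng(seed)
    best_H, best_inliers = None, 0
    for _ in range(iters):
        sample = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[sample], dst[sample])
        # Degenerate samples can divide by zero; those errors become inf/nan
        # and simply never count as inliers.
        with np.errstate(divide="ignore", invalid="ignore"):
            errors = np.linalg.norm(project(H, src) - dst, axis=1)
        inliers = int(np.sum(errors < threshold))
        if inliers > best_inliers:
            best_H, best_inliers = H, inliers
    return best_H, best_inliers
```

Running `ransac_homography` once per candidate and keeping the candidate with the highest inlier count corresponds to the selection shown in the log, where `toy_story.jpg` wins with 61 inliers.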

[1] David Nistér and Henrik Stewénius, Scalable Recognition with a Vocabulary Tree. CVPR 2006.