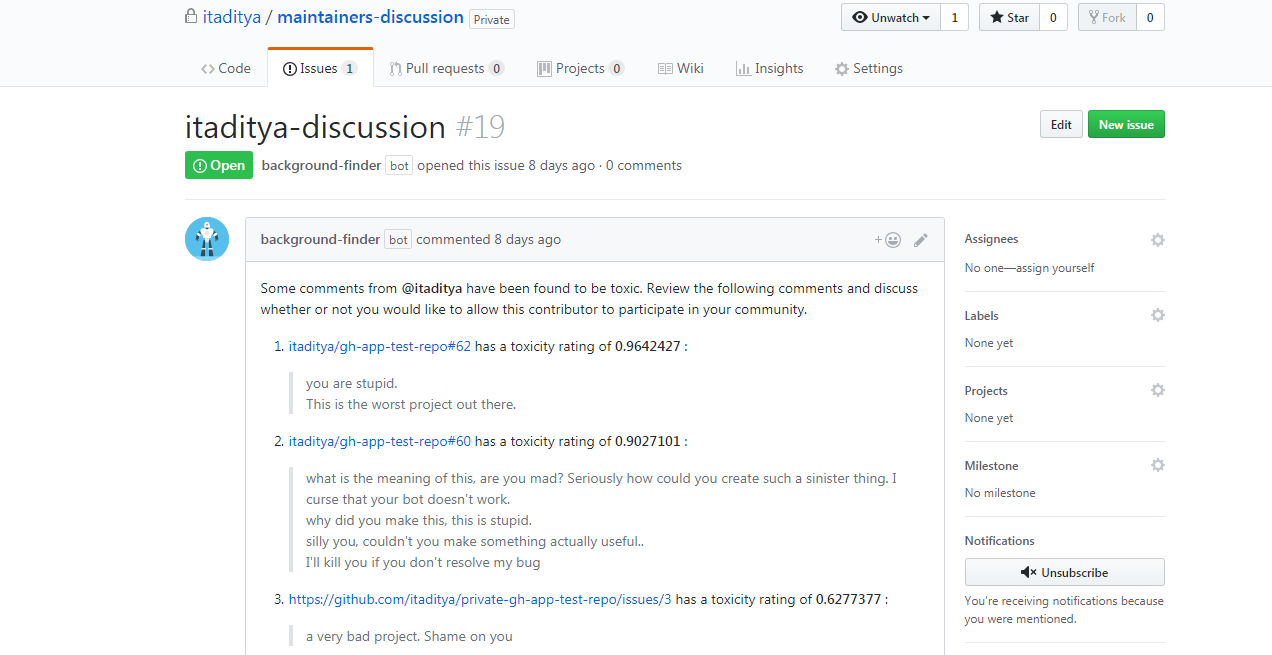

A GitHub App built with Probot that performs a "background check" to identify users who have been toxic in the past, and shares their toxic activity in the maintainer’s discussion repo.

- Go to the GitHub App page.

- Install the GitHub App on your repos.

- You'll get an invitation to a private repo. Accept it and add the other maintainers to the repo as well.

The bot listens for comments on the repos where it is installed. When a new user comments, the bot fetches that user's public comments and runs a sentiment analyzer on them. If 5 or more comments stand out as toxic, the bot concludes that the user has a hostile background and opens an issue for this user in the private `probot-background-check/{your-name}-discussions` repo, so that the maintainers can review the toxic comments and discuss whether they would like to allow this user to participate in their community.
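The decision step described above can be sketched as a small pure function. This is a minimal illustration, not the project's actual code: the function names, the shape of the comment objects, and the 0.8 toxicity cutoff are assumptions. Only the minimum of 5 toxic comments comes from the description.

```javascript
// The minimum of 5 toxic comments is taken from the description above.
const MIN_TOXIC_COMMENTS = 5;
// Hypothetical cutoff: scores are assumed to lie in [0, 1], higher = more toxic.
const TOXICITY_CUTOFF = 0.8;

// comments: array of { body: string, toxicity: number } (assumed shape)
function findToxicComments(comments, cutoff = TOXICITY_CUTOFF) {
  return comments.filter((comment) => comment.toxicity >= cutoff);
}

// Returns whether the user counts as hostile, plus the comments that
// triggered the decision so they can be shown to the maintainers.
function checkBackground(comments, minToxic = MIN_TOXIC_COMMENTS) {
  const toxicComments = findToxicComments(comments);
  return {
    hostile: toxicComments.length >= minToxic,
    toxicComments,
  };
}
```

Keeping the scoring (a network call) separate from the threshold check makes the decision easy to unit test without calling the sentiment analysis service.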

False positives are possible: the sentiment analysis may flag comments as toxic when they are not, and the discussion issue is still created. Because the bot posts the flagged comments in the issue description, the maintainers can verify the toxicity themselves and close the issue if they find the analysis incorrect.
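To make that review possible, the issue description has to list every flagged comment. A minimal sketch of how such a description could be assembled follows. The function name, the title format, and the comment object shape are hypothetical and not taken from the project.

```javascript
// toxicComments: array of { body: string, toxicity: number } (assumed shape)
function buildDiscussionIssue(login, toxicComments) {
  // One numbered line per flagged comment, with its score for context.
  const lines = toxicComments.map(
    (comment, index) =>
      `${index + 1}. ${comment.body} (toxicity: ${comment.toxicity.toFixed(2)})`
  );
  return {
    title: `Background check: ${login}`, // hypothetical title format
    body: [`Comments by ${login} flagged as toxic:`, '', ...lines].join('\n'),
  };
}
```

Including the score next to each comment gives maintainers a quick way to spot borderline cases that are likely false positives.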

The discussion about a user who has been hostile in the past must be kept private, so that only maintainers can see it. Because not every account (individual or org) has access to private repos, the app uses its own org instead. Whenever the app is installed, a private repo for the maintainer's account is created in that org and the installer is added as a collaborator. This way, discussions can be held privately.
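The discussion repo follows the `probot-background-check/{your-name}-discussions` naming pattern. A small helper can derive it from the installing account, as in the sketch below. It assumes `{your-name}` is the login of the account that installed the app. The helper name is hypothetical.

```javascript
// Org that hosts the private discussion repos.
const DISCUSSION_ORG = 'probot-background-check';

// accountLogin: login of the account that installed the app (assumption).
function discussionRepoFor(accountLogin) {
  return {
    owner: DISCUSSION_ORG,
    repo: `${accountLogin}-discussions`,
  };
}
```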

- Fork this repo.

- Clone the forked repo on your development machine.

- `cd` into the repo directory (probably `cd background-check`).

- Run `npm i` to set up the project.

- Run `cp .env.example .env`.

- Open the `.env` file.

- Generate an API key for the Perspective API.

- Paste this API key against `PERSPECTIVE_API_KEY` in the `.env` file.

- Create an org for the app.

- Create a personal access token and paste it against `GITHUB_ACCESS_TOKEN` in the `.env` file.

- Create a GitHub App and follow these instructions.
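Before starting the app, it can help to confirm that the two keys from the steps above are actually set. The check below is a minimal sketch with a hypothetical function name. It covers only the two variables named in this list, and the GitHub App setup may require further variables.

```javascript
// Only the two keys mentioned in the setup steps; others may be needed.
const REQUIRED_KEYS = ['PERSPECTIVE_API_KEY', 'GITHUB_ACCESS_TOKEN'];

// Returns the names of required keys that are missing or blank in `env`.
function missingEnvKeys(env = process.env) {
  return REQUIRED_KEYS.filter(
    (key) => typeof env[key] !== 'string' || env[key].trim() === ''
  );
}
```

Calling `missingEnvKeys()` after the `.env` file has been loaded returns an empty array when both values are present.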

Run `npm start` to check that the GitHub App runs correctly on your development machine. After that, create a branch, make your changes, run the tests, commit the changes, and open a PR.

To speed up development, the following CLI commands are available.

- `npm install`: install dependencies.

- `npm start`: run the bot.

- `npm run dev`: run the bot in dev mode, which watches files for changes.

- `npm test`: run the unit tests.

- `npm run test:watch`: run the unit tests in watch mode.

- `npm run lint`: run the linter and fix the issues.

- `npm run docs:serve`: serve the documentation locally.

- `npm run sandbox -- --sandboxName`: run a sandbox, for example `npm run sandbox -- --getCommentsOnIssue`.