UMC

UMC: A Unified Bandwidth-efficient and Multi-resolution based Collaborative Perception Framework

Tianhang Wang, Guang Chen†, Kai Chen, Zhengfa Liu, Bo Zhang, Alois Knoll, Changjun Jiang

Paper (arXiv) | Paper (ICCV) | Project Page | Video | Talk | Slides | Poster

Changelog

- 2023-8-10: We released the project page.

- 2023-7-14: The paper was accepted to ICCV 2023 🎉🎉.

Introduction

- This repository is the PyTorch implementation for UMC.

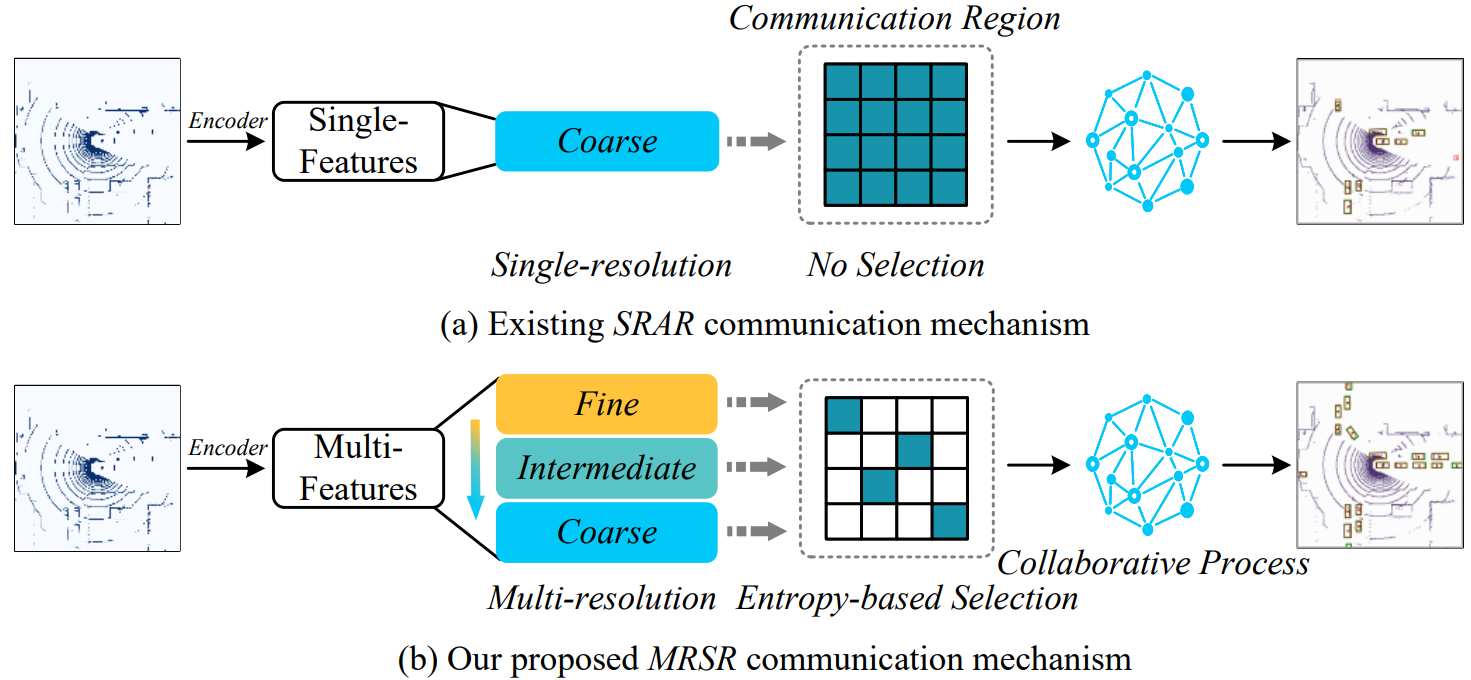

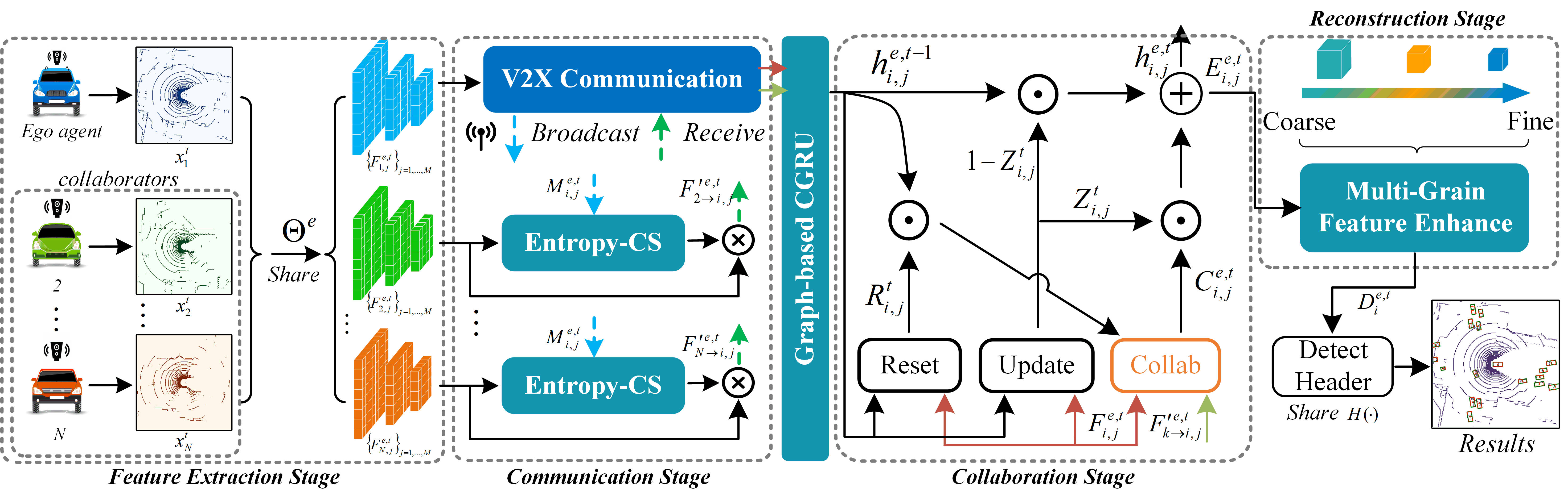

We propose UMC, a unified collaborative perception framework that optimizes the communication, collaboration, and reconstruction processes with a multi-resolution technique.

- The communication stage introduces a novel trainable multi-resolution and selective-region (MRSR) mechanism, which achieves higher quality at lower bandwidth. A graph-based collaboration is then conducted at each resolution to adapt to the MRSR. Finally, the reconstruction stage integrates the multi-resolution collaborative features for downstream tasks.
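
The idea of selecting regions at more than one resolution can be illustrated with a small NumPy sketch. This is not the repository's code: the function names (`cell_entropy`, `select_regions`, `avg_pool2`), the `keep_ratio` parameter, and the use of plain Shannon entropy over a channel softmax are all assumptions made for illustration. The entropy score is only a stand-in for the matrix-valued entropy used by UMC, the choice to keep the highest-scoring cells is likewise an assumption, and the trainable part of MRSR is omitted.

```python
import numpy as np


def cell_entropy(feat):
    """Shannon entropy of the channel softmax at each spatial cell.

    feat has shape (C, H, W); the result has shape (H, W).
    Illustrative score only, not the paper's matrix-valued entropy.
    """
    z = feat - feat.max(axis=0, keepdims=True)  # stabilise the softmax
    p = np.exp(z)
    p /= p.sum(axis=0, keepdims=True)
    return -(p * np.log(p + 1e-12)).sum(axis=0)


def select_regions(feat, keep_ratio):
    """Keep the `keep_ratio` fraction of cells with the highest score, zero the rest."""
    _, h, w = feat.shape
    score = cell_entropy(feat).ravel()
    k = max(1, int(round(keep_ratio * h * w)))
    top = np.argpartition(score, -k)[-k:]  # indices of the k largest scores
    mask = np.zeros(h * w, dtype=bool)
    mask[top] = True
    mask = mask.reshape(h, w)
    return feat * mask, mask  # mask broadcasts over the channel axis


def avg_pool2(feat):
    """2x2 average pooling to obtain a coarser-resolution feature map."""
    c, h, w = feat.shape
    return feat.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


rng = np.random.default_rng(0)
fine = rng.standard_normal((8, 16, 16))  # hypothetical fine-grained feature map
coarse = avg_pool2(fine)                 # coarse-grained counterpart, shape (8, 8, 8)

messages = {}
for name, feat in (("fine", fine), ("coarse", coarse)):
    messages[name] = select_regions(feat, keep_ratio=0.25)

# Count the feature values that would be transmitted versus the dense maps.
sent = sum(int(mask.sum()) * sparse.shape[0] for sparse, mask in messages.values())
dense = fine.size + coarse.size
ratio = sent / dense
```

With `keep_ratio=0.25`, each resolution transmits a quarter of its cells, so `ratio` is 0.25 of the dense payload. In a real system the mask (or the indices of the selected cells) would also need to be sent so the receiver can place the features correctly.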

Get Started

- Our code is built on DiscoNet; please refer to it for more details.

Datasets

Pretrained Model

V2X-Sim dataset

- UMC: Pretrained Model: BaiduYun [Code: erhg] | Google Drive; Detection Results: BaiduYun | Google Drive

- GCGRU: Pretrained Model: BaiduYun [Code: jinj] | Google Drive; Detection Results: BaiduYun | Google Drive

- EntropyCS_GCGRU: Pretrained Model: BaiduYun [Code: ssye] | Google Drive; Detection Results: BaiduYun | Google Drive

- MGFE_GCGRU: Pretrained Model: BaiduYun [Code: x6b7] | Google Drive; Detection Results: BaiduYun | Google Drive

- UMC_GrainSelection_1_3: Pretrained Model: BaiduYun [Code: 5ngb] | Google Drive; Detection Results: BaiduYun | Google Drive

- UMC_GrainSelection_2_3: Pretrained Model: BaiduYun [Code: mya8] | Google Drive; Detection Results: BaiduYun | Google Drive

OPV2V dataset

- UMC: Pretrained Model: BaiduYun [Code: x2y2] | Google Drive; Detection Results: BaiduYun | Google Drive

Visualization

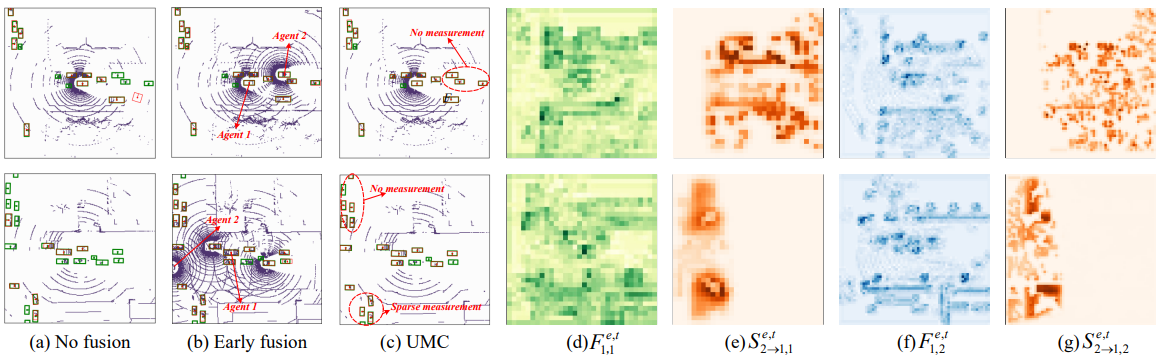

- Detection and communication selection for Agent 1. The green and red boxes represent the ground truth (GT) and predictions, respectively. (a-c) Results of no fusion, early fusion, and UMC compared to GT. (d) The coarse-grained collaborative feature of Agent 1. (e) The coarse-grained feature map from Agent 2 selected for communication by matrix-valued entropy. (f) The fine-grained collaborative feature of Agent 1. (g) The fine-grained feature map from Agent 2 selected for communication by matrix-valued entropy.

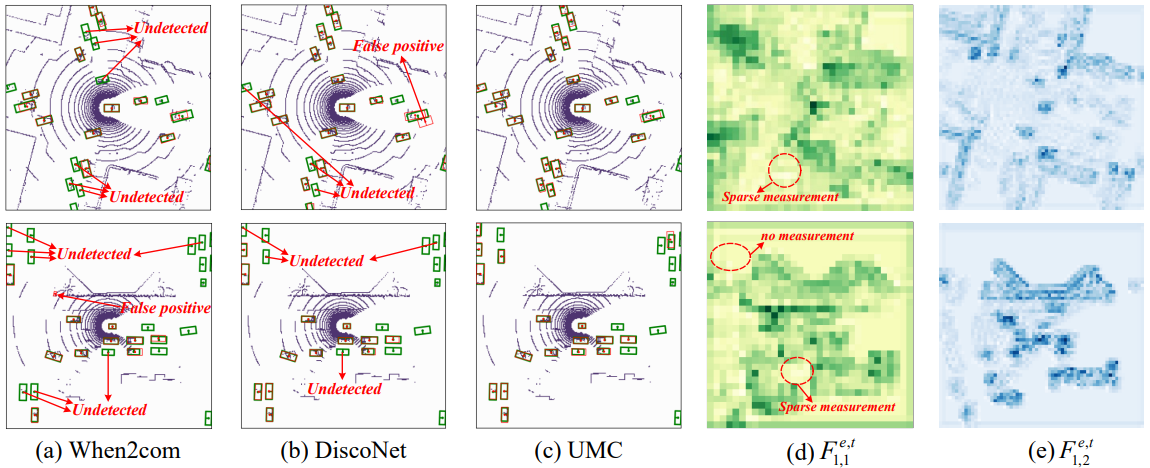

- UMC qualitatively outperforms the state-of-the-art methods. The green and red boxes denote ground truth and detection, respectively. (a) Results of When2com. (b) Results of DiscoNet. (c) Results of UMC. (d)-(e) Agent 1's coarse-grained and fine-grained collaborative feature maps, respectively.

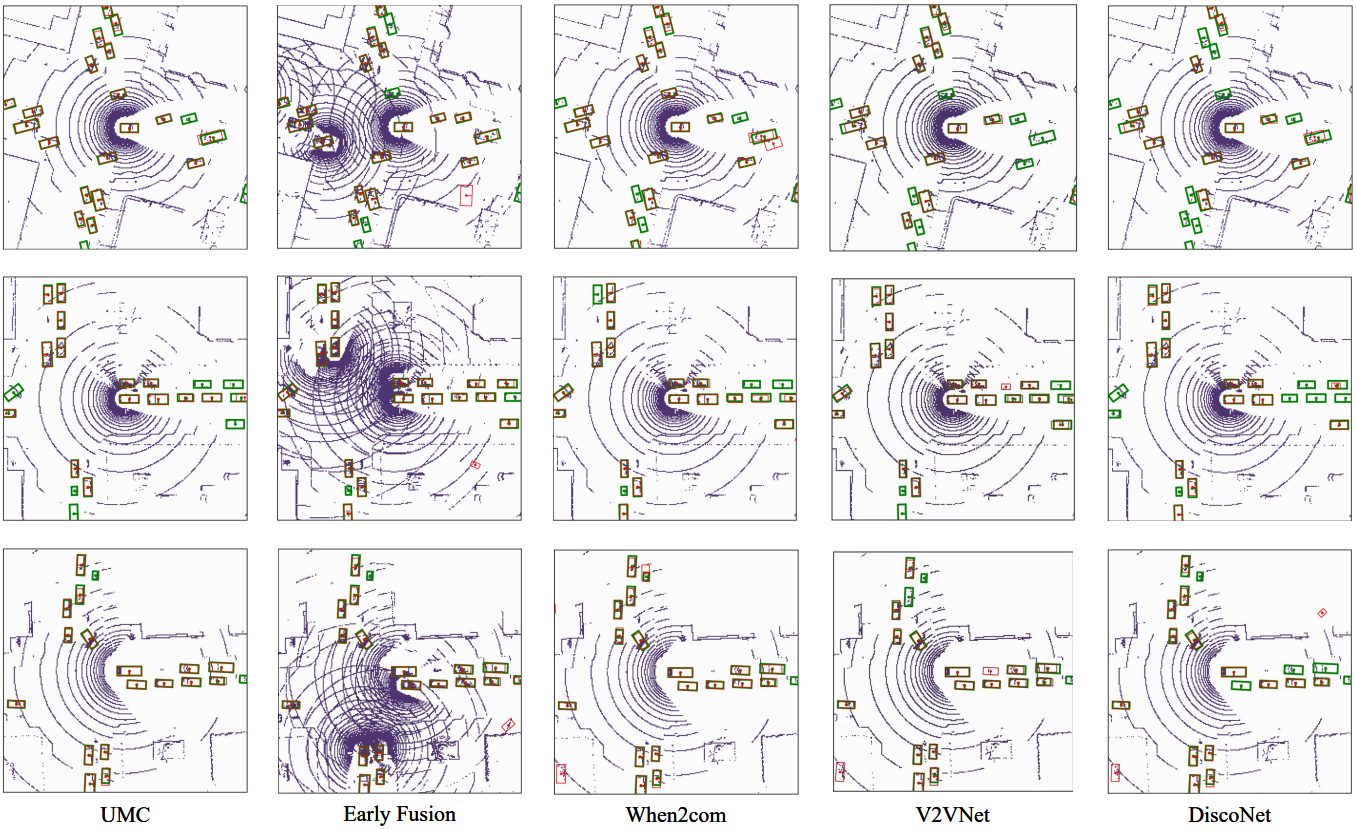

- Detection results of UMC, Early Fusion, When2com, V2VNet, and DiscoNet on the V2X-Sim dataset.

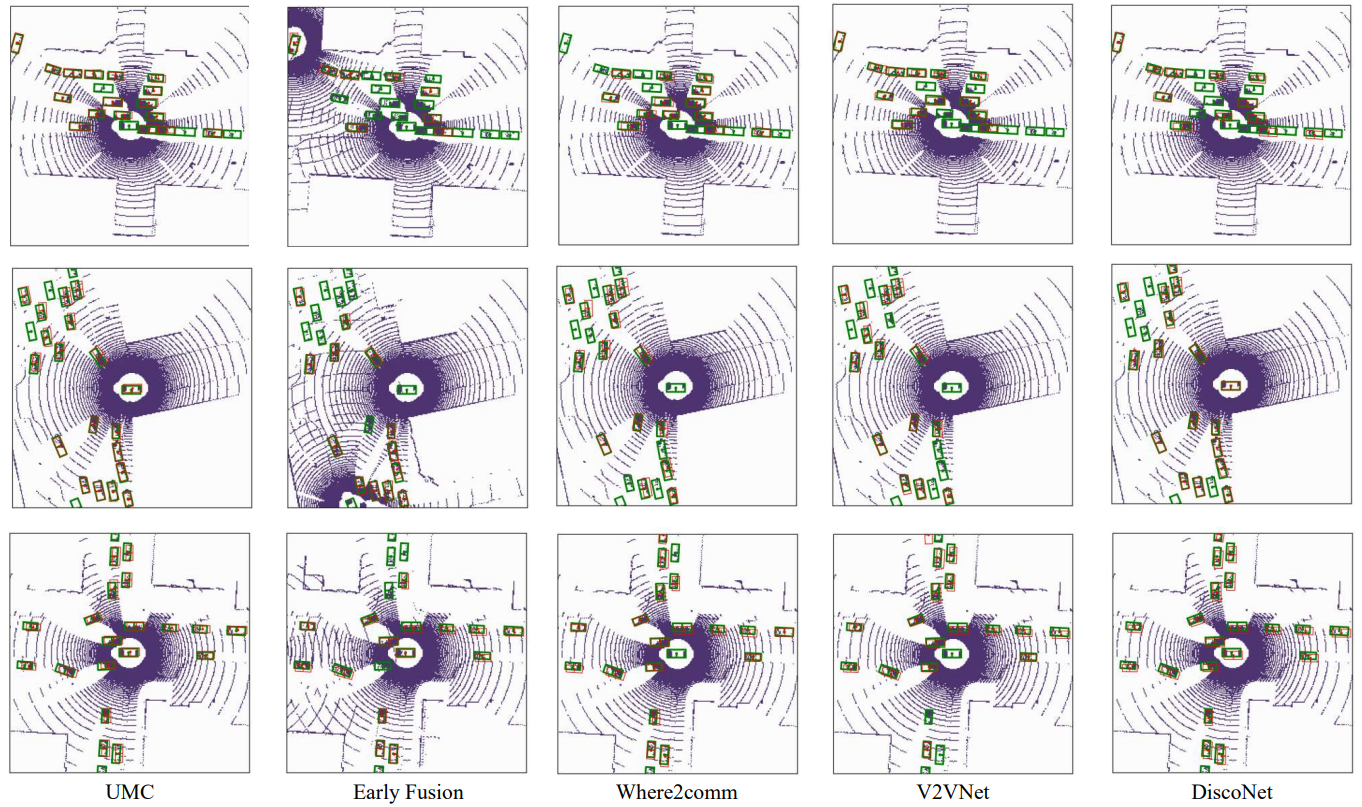

- Detection results of UMC, Early Fusion, Where2comm, V2VNet, and DiscoNet on the OPV2V dataset.

Citation

If you find our code or paper useful, please cite

@inproceedings{wang2023umc,
  title = {UMC: A Unified Bandwidth-efficient and Multi-resolution based Collaborative Perception Framework},
  author = {Wang, Tianhang and Chen, Guang and Chen, Kai and Liu, Zhengfa and Zhang, Bo and Knoll, Alois and Jiang, Changjun},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  year = {2023}
}