AcurusTrack is a highly predictable multiple object tracker. It is based on a custom data association approach.

This component does not depend on objects' nature - we work with arbitrary ones. You can serve any metadata as input. Here we display the examples of the metadata derived by face and pose estimators. You can use any of the estimators you prefer to create the data for input.

This repository is a work of AIHunters.

We got inspired by the article describing the Markov chain Monte Carlo data association. For fairly simple cases we're releasing this slightly shorter version. But if you are interested in a full version, or have business cases for any of the two versions, feel free to contact us at oss@aihunters.com for more information.

-

Complicated multi-occlusion movement

-

Medical face masks

-

Identically-dressed people with faces fully covered

-

People in identical military uniform

-

Visitor analytics for physical locations

-

Feel free to suggest yours

git clone https://github.com/AIHunters/AcurusTrack.git

cd AcurusTrack

sudo apt-get install python-virtualenv

virtualenv venv --system-site-packages

source venv/bin/activate

pip install -r requirements.txt

source venv/bin/activate

deactivate

Input data should have the following form:

{"frame_no": [{"x1": top_right_x, "y1": top_right_y, "x2": bottom_left_x, "y2": bottom_left_y}], "frame_no":[],...}in case of face processing (for face meta extraction we used RetinaFace)

{"frame_no": [{"body_part_name_x": x_coord, "body_part_name_y": y_coord, "body_part_name_x": x_coord, "body_part_name_y": y_coord}], "frame_no":[],...}in case of body processing (for pose meta extraction we used OpenPose, and you can use any pose estimator you want). More information about the parameters you can find in config.py

To enhance accuracy, we recommend using the EvenVizion component which allows transfer to a fixed coordinate system. If you choose to use it:

- navigate to the FCS;

- create a separate environment for the EvenVizion setup;

- use run_EvenVizion.py to get json with with homography matrices;

- run AcurusTrack, setting fixed_coordinate_system = True beforehand.

- run.py - main file for running algorithm

- pipeline.py - pipeline, including splitting video on chunks, processing them individually, merging results, running final merge algorithm

- config.py - parameters that define overall pipeline

- FCS

- run_EvenVizion.py - moving to fixed coordinate system

- fixed_coordinate_system.py

- processing

- track

- tracker.py - contain abstract class AbstractTracker, realizing full pipeline.

- tracker_merger.py - contain class TrackerMerger for simplified pipeline.

- initialisation - meta preprocessing based on some similarity metric

- initialisation.py - universal preprocessing scheme

- face.py - preprocessing scheme for face meta

- pose.py - preprocessing scheme for pose meta

- additional - auxiliary file with modified Kalman filter.

- utils - custom useful functions

- examples - demo scripts

- visualization - folder containing all visualization functions

Please NOTE that we have slightly changed a souce code of the filterpy library, you can find it at repository.

$python3 run.py --detections [json containing detections] --video_path --video_name --exp_name --path_to_homography_dict optionally --start_frame optionlly --end_frame optionally

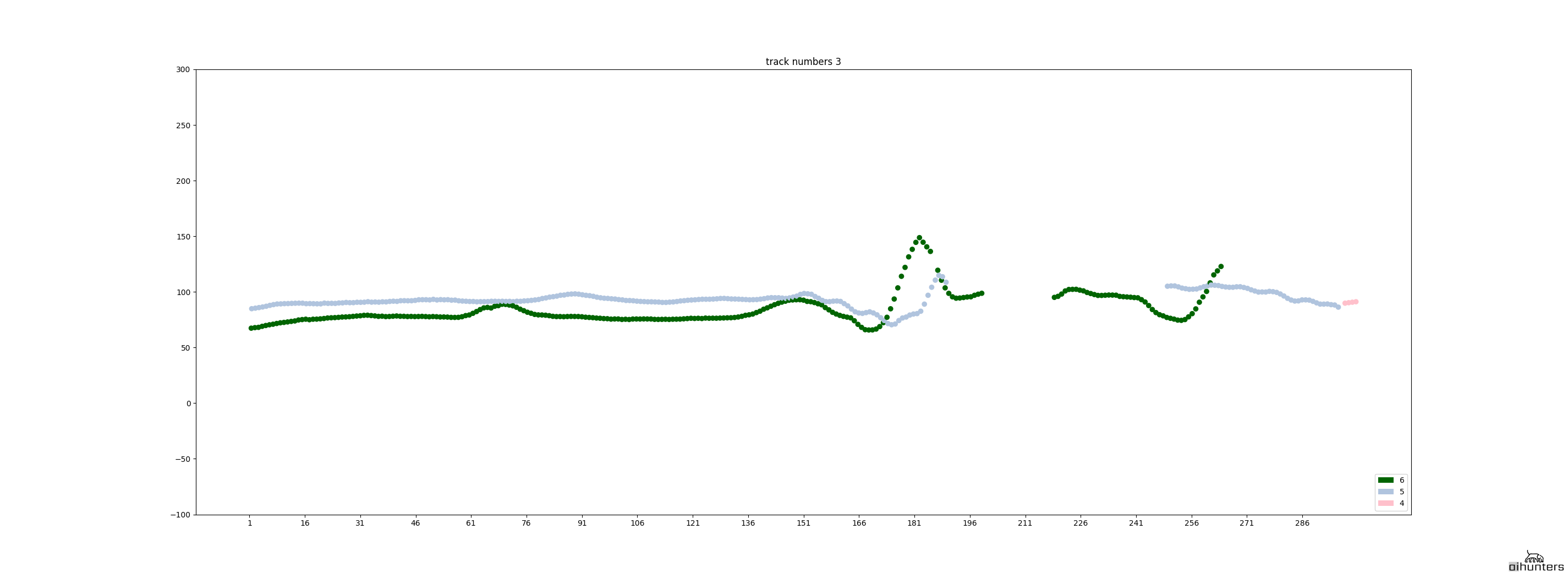

We decided to compare our AcurusTrack component (right) with one of the popular trackers - Re3 (left).

As you can see, the accuracy is higher when using AcurusTrack (especially in the presence of occlusions and camera movement).

AcurusTrack is licensed under the GNU General Public License, either version 3 of the License, or (at your option) any later version. All the third-party open-source software used in this project is listed in Third-Party Open Source Software Notice together with the according licenses.

https://github.com/AIHunters/AcurusTrack

We're open to any kind of communication, whether it is your feedback, failure cases, possible improvements or cooperation possibilities. Contact us at oss@aihunters.com.

Please adhere to our CODE_OF_CONDUCT to keep positive and constructive environment here.