English-Vietnamese Bilingual Translation Using a Transformer Model with Separated Positional Embedding (SPETModel)

- Continues development from the NeuralMachineTranslation repo

@github{Translation,

author = {The Ho Sy},

title = {English-Vietnamese Bilingual Translation with Transformer},

year = {2023},

url = {https://github.com/hsthe29/Translation},

}

- Modified from the vanilla Transformer architecture

- See PhoMT.

- Target Masked Translation Modeling (Target MTM)

- Target MTM:
  - Training:
    - input: ["en<s>", "How", "are", "you?", "</s>"]
    - target in: ["vi<s>", "Bạn", "có", "<mask>", "không?", "</s>"]
    - target out: ["Bạn", "có", "khỏe", "không?", "</s>", "<pad>"]
  - Inference:
    - input: ["en<s>", "How", "are", "you?", "</s>"]
    - target in: ["vi<s>"]
    - autoregressive decoding -> full target out: ["vi<s>", "Bạn", "có", "khỏe", "không?", "</s>"]
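The training half of the example above can be sketched in Python as follows. This is a minimal illustration, not the repository's implementation: the function name `make_target_mtm`, the masking probability `mask_prob`, and the rule that the start and end tokens are never masked are all assumptions.

```python
import random

MASK, PAD = "<mask>", "<pad>"


def make_target_mtm(target_tokens, mask_prob=0.15, rng=None):
    """Build (target_in, target_out) from a full target token sequence.

    target_in  : the sequence with some inner tokens replaced by <mask>.
    target_out : the original sequence shifted left by one position and
                 padded with <pad> so both lists have the same length.
    """
    if rng is None:
        rng = random.Random()
    last = len(target_tokens) - 1
    # Assumption: the start token (index 0) and end token (last index)
    # are never masked; every other token is masked with prob. mask_prob.
    target_in = [
        MASK if 0 < i < last and rng.random() < mask_prob else tok
        for i, tok in enumerate(target_tokens)
    ]
    # The labels are always the unmasked tokens, shifted by one.
    target_out = target_tokens[1:] + [PAD]
    return target_in, target_out


full_target = ["vi<s>", "Bạn", "có", "khỏe", "không?", "</s>"]
target_in, target_out = make_target_mtm(full_target, rng=random.Random(0))
print(target_in)
print(target_out)
```

Because `target_out` always holds the original tokens, the decoder is asked to predict the true next token even at positions where its own input was masked. At inference no masking is applied and decoding starts from the target start token alone.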


- English sentence start token: `en<s>`

- Vietnamese sentence start token: `vi<s>`

- End-of-sentence token: `</s>`

- Mask token: `<mask>`, for the MLM task (training only)

- Natural English "Hello, how are you?", target start token "vi<s>":
  - Transform to "en<s> Hello, how are you? </s>"
  - Target: "vi<s> Xin chào, bạn có khỏe không? </s>"

- Natural Vietnamese "Xin chào, bạn có khỏe không?", target start token "en<s>":
  - Transform to "vi<s> Xin chào, bạn có khỏe không? </s>"
  - Target: "en<s> Hello, how are you? </s>"
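The sentence transformation described above amounts to wrapping the raw text in a language-specific start token and the shared end token. A minimal sketch, assuming whitespace-joined strings as shown in the examples; the names `START_TOKENS`, `wrap_sentence`, and `make_pair` are hypothetical and do not come from the repository:

```python
START_TOKENS = {"en": "en<s>", "vi": "vi<s>"}
END_TOKEN = "</s>"


def wrap_sentence(text, lang):
    """Add the start token for `lang` and the end token around `text`."""
    return f"{START_TOKENS[lang]} {text} {END_TOKEN}"


def make_pair(src_text, src_lang, tgt_text, tgt_lang):
    """Return the (model input, training target) strings for one pair."""
    return wrap_sentence(src_text, src_lang), wrap_sentence(tgt_text, tgt_lang)


source, target = make_pair(
    "Hello, how are you?", "en",
    "Xin chào, bạn có khỏe không?", "vi",
)
print(source)
print(target)
```

The same helper covers both directions: swapping the language codes gives the Vietnamese-to-English pair, and the target start token is what tells the decoder which language to produce.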

- See file config.py and configV1.json

- Because the dataset is large and my compute resources are limited, the data is preprocessed and split into chunks before training.

- Preload parameters:

- seed: seed for the random number generator

- shuffle: if True, the dataset is shuffled before it is split into chunks

- chunk_size: number of examples in each chunk
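How the three preload parameters interact can be illustrated with a short sketch. This is not the code in preload_data.py; the function name `chunk_dataset` and the in-memory list representation are assumptions made for the example.

```python
import random


def chunk_dataset(examples, chunk_size, shuffle=True, seed=0):
    """Optionally shuffle `examples`, then split them into chunks.

    The last chunk may be smaller than `chunk_size`.
    """
    items = list(examples)
    if shuffle:
        # A dedicated generator keeps the shuffle reproducible for a
        # given seed without touching the global random state.
        random.Random(seed).shuffle(items)
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


chunks = chunk_dataset(range(10), chunk_size=4, shuffle=True, seed=42)
print([len(c) for c in chunks])
```

Shuffling before chunking means each chunk is a random sample of the whole dataset, so training on one chunk at a time does not bias the model toward whatever ordering the raw files had.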

- Optimizer: AdamW

- Learning rate scheduler: WarmupLinearLR
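A linear warmup schedule of this kind is commonly written as below. This is a sketch under stated assumptions, not the repository's WarmupLinearLR class: it assumes the warmup length is `warmup_proportion` of the total steps, capped at `max_warmup_steps`, and that the rate then decays linearly to zero. The function name `warmup_linear_lr` is hypothetical.

```python
def warmup_linear_lr(step, total_steps, init_lr=1e-4,
                     warmup_proportion=0.1, max_warmup_steps=10_000):
    """Learning rate at `step` for linear warmup followed by linear decay."""
    # Assumption: warmup length is a proportion of training, with a cap.
    warmup_steps = min(max_warmup_steps, int(warmup_proportion * total_steps))
    warmup_steps = max(warmup_steps, 1)
    if step < warmup_steps:
        # Ramp up from 0 to init_lr.
        return init_lr * step / warmup_steps
    # Decay from init_lr down to 0 at total_steps.
    remaining = max(total_steps - step, 0)
    return init_lr * remaining / max(total_steps - warmup_steps, 1)


for s in (0, 50, 100, 550, 1000):
    print(s, warmup_linear_lr(s, total_steps=1000))
```

The defaults mirror the `init_lr`, `warmup_proportion`, and `max_warmup_steps` values listed in the training arguments.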

Training arguments:

- config: "assets/config/configV1.json"

- load_prestates: True

- epochs: 20

- init_lr: 1e-4

- train_data_dir: /path_to_train_data_dir/

- val_data_dir: /path_to_val_data_dir/

- train_batch_size: 16

- val_batch_size: 32

- print_steps: 500

- validation_steps: 1000

- max_warmup_steps: 10000

- gradient_accumulation_steps: 4

- save_state_steps: 1000

- weight_decay: 0.001

- warmup_proportion: 0.1

- use_gpu: True

- max_grad_norm: 1.0

- save_ckpt: True

- ckpt_loss_path: /path_to_loss_ckpt/

- ckpt_bleu_path: /path_to_bleu_ckpt/

- state_path: /path_to_state/
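Two of the arguments above interact: with gradient accumulation, the optimizer updates once every `gradient_accumulation_steps` batches. The arithmetic below assumes the usual meaning of that argument; the helper names are hypothetical.

```python
def effective_batch_size(train_batch_size, gradient_accumulation_steps):
    """Number of examples contributing to each optimizer update."""
    return train_batch_size * gradient_accumulation_steps


def update_steps(batch_steps, gradient_accumulation_steps):
    """Number of optimizer updates performed after `batch_steps` batches."""
    return batch_steps // gradient_accumulation_steps


print(effective_batch_size(16, 4))
print(update_steps(100_000, 4))
```

With `train_batch_size` 16 and `gradient_accumulation_steps` 4, each update sees 64 examples, and 100k batch steps correspond to 25k update steps.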

$ pip install -r requirements.txt

$ python preload_data.py --config=... --data_dir=... --save_dir=... --chunk_size=... --shuffle=...

$ python train.py [training arguments]

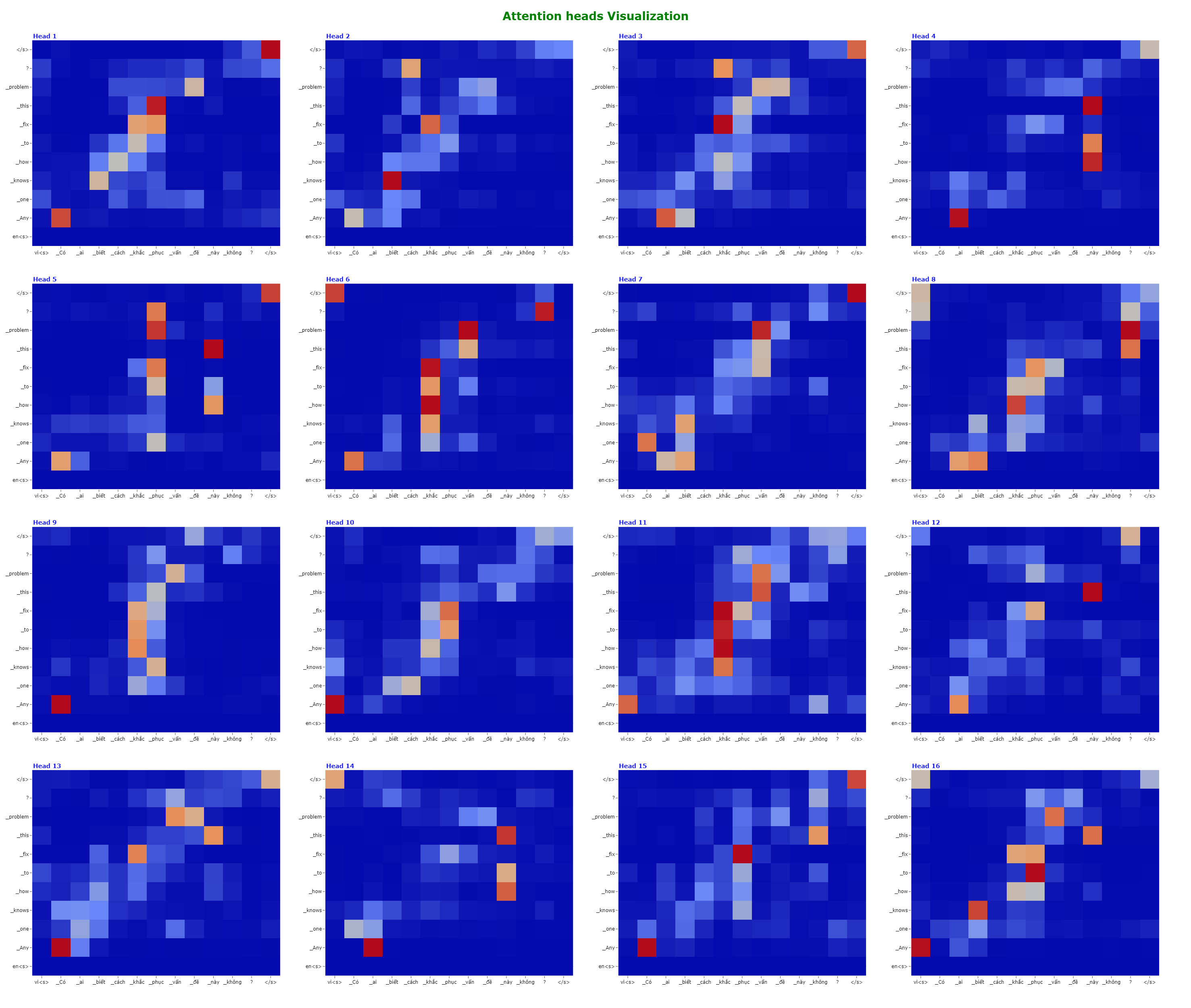

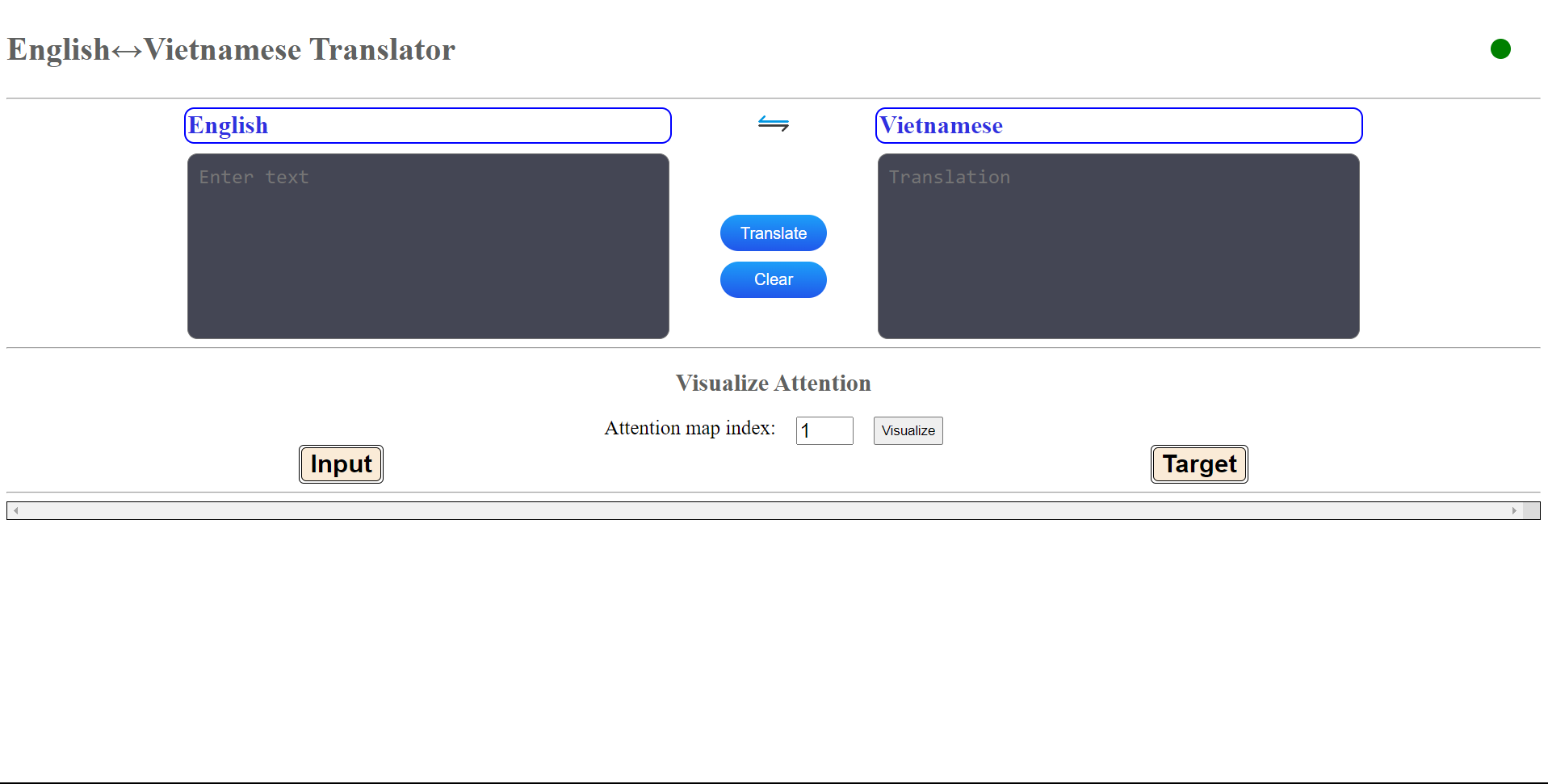

- Use Flask to deploy a simple web server, running on `localhost`, that provides bilingual translation and visualizes attention weights between pairs of sentences

- Use Plotly.js to visualize attention maps.
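One way a server can hand attention weights to Plotly.js is to serialize a heatmap trace as JSON. The sketch below is an illustration only, not the code in this repository: the function names, the axis titles, and the choice of rows for target tokens and columns for source tokens are assumptions.

```python
import json


def unique_labels(tokens):
    # Plotly treats equal category labels as one category, so prefix the
    # position to keep repeated tokens on separate rows and columns.
    return [f"{i}: {tok}" for i, tok in enumerate(tokens)]


def attention_heatmap_figure(weights, source_tokens, target_tokens):
    """Return a JSON string with a Plotly heatmap trace and layout.

    weights[i][j] is the attention from target token i to source token j.
    """
    if len(weights) != len(target_tokens) or any(
        len(row) != len(source_tokens) for row in weights
    ):
        raise ValueError("weights must have shape [len(target), len(source)]")
    trace = {
        "type": "heatmap",
        "z": weights,
        "x": unique_labels(source_tokens),
        "y": unique_labels(target_tokens),
        "colorscale": "Viridis",
    }
    layout = {
        "xaxis": {"title": {"text": "Source tokens"}},
        # Reverse the y axis so the first target token is on top.
        "yaxis": {"title": {"text": "Target tokens"}, "autorange": "reversed"},
    }
    return json.dumps({"data": [trace], "layout": layout}, ensure_ascii=False)


print(attention_heatmap_figure([[0.9, 0.1], [0.2, 0.8]], ["How", "are"], ["Bạn", "có"]))
```

On the page, the parsed object can be passed to `Plotly.newPlot(element, figure.data, figure.layout)`.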

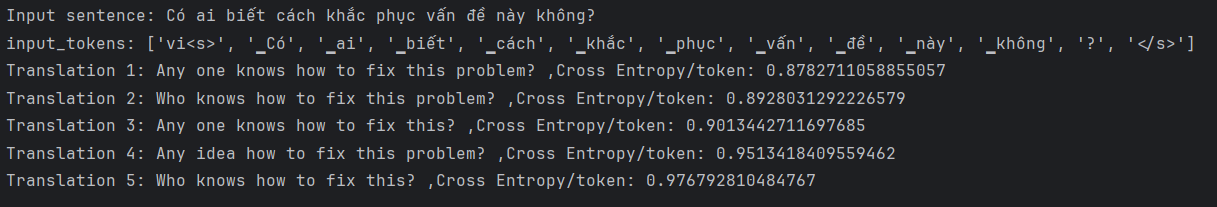

- Use the checkpoint at step 100k (25k update steps); cross-entropy per token: 3.5154.

- Download the checkpoint and set "pretrained_path" in the config file to the path where the checkpoint was saved

- Run:

$ python run_app.py

or

$ python3 run_app.py
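The "pretrained_path" edit can be done by hand or with a few lines of Python. The sketch below assumes the config file is JSON and that "pretrained_path" is a top-level key, neither of which is confirmed here; the function name `set_pretrained_path` is hypothetical.

```python
import json
from pathlib import Path


def set_pretrained_path(config_file, checkpoint_path):
    """Write `checkpoint_path` into the config's "pretrained_path" entry."""
    config_file = Path(config_file)
    config = json.loads(config_file.read_text(encoding="utf-8"))
    # Assumption: "pretrained_path" lives at the top level of the config.
    config["pretrained_path"] = str(checkpoint_path)
    config_file.write_text(
        json.dumps(config, indent=2, ensure_ascii=False), encoding="utf-8"
    )
    return config
```

Call it once with the config file and the downloaded checkpoint location before starting the app.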