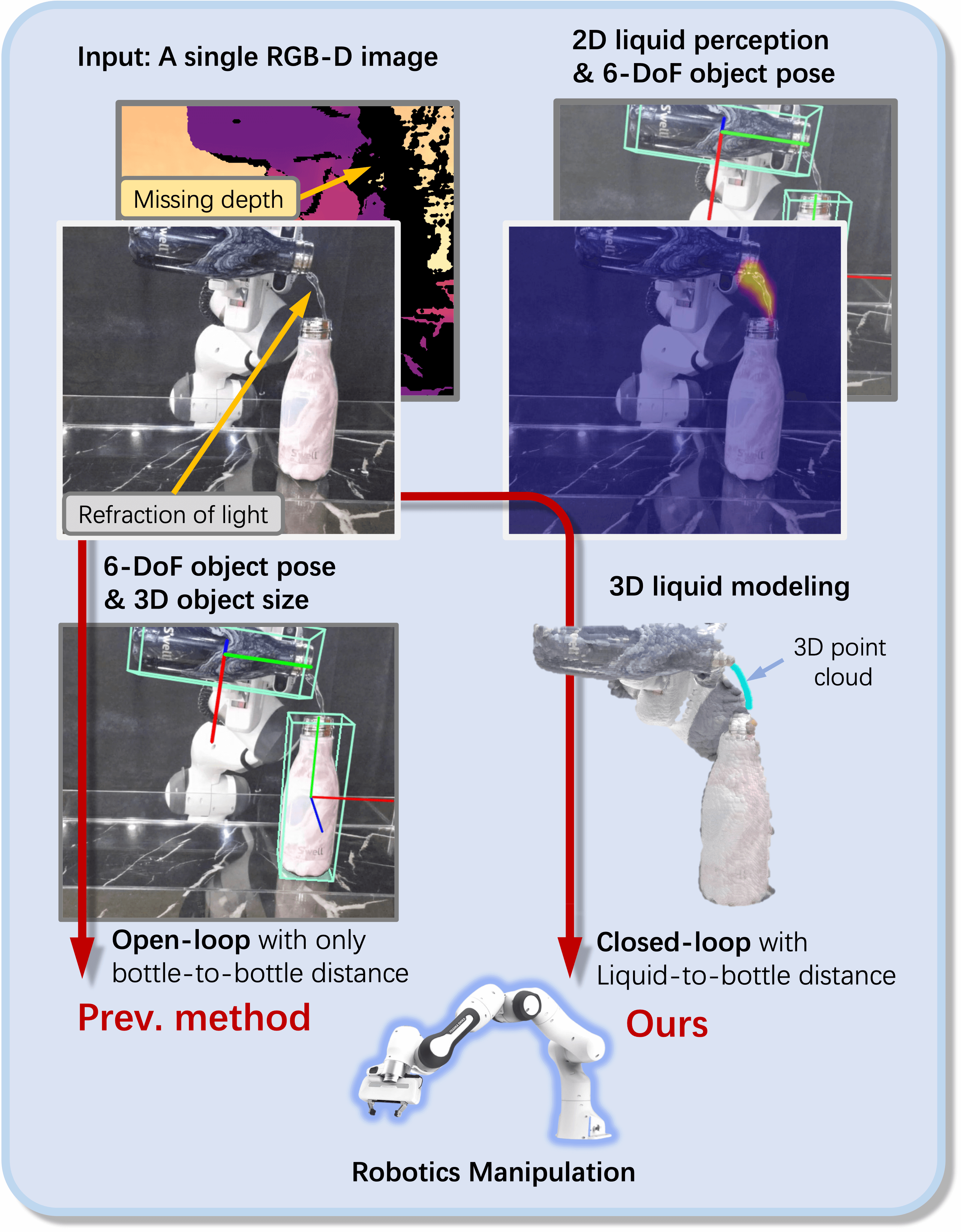

PourIt!: Weakly-Supervised Liquid Perception from a Single Image for Visual Closed-Loop Robotic Pouring

This repository contains the PyTorch implementation of the paper "PourIt!: Weakly-Supervised Liquid Perception from a Single Image for Visual Closed-Loop Robotic Pouring" [PDF] [Supp] [arXiv]. Our approach can recover the 3D shape of the detected liquid.

For more results and robotic demos, please refer to our Webpage.

- Python >= 3.6

- PyTorch >= 1.7.1

- CUDA >= 10.1

conda create -n pourit python=3.6

conda activate pourit

pip install -r requirements.txt
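After installation, it can help to confirm that the environment meets the requirements listed above. The following is a minimal sketch, not part of the repository: the helper names `version_tuple` and `check_environment` are hypothetical, and the check only covers the Python and PyTorch versions plus whether PyTorch can see a CUDA device.

```python
import re
import sys


def version_tuple(version):
    """Turn a string such as '1.7.1+cu101' into (1, 7, 1) for comparison."""
    parts = []
    for piece in version.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def check_environment(min_python=(3, 6), min_torch=(1, 7, 1)):
    """Hypothetical helper: report whether the stated requirements are met."""
    report = {
        "python_ok": tuple(sys.version_info[:2]) >= min_python,
        "torch_ok": False,
        "cuda_available": False,
        "cuda_version": None,
    }
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment.
        return report
    report["torch_ok"] = version_tuple(torch.__version__) >= min_torch
    report["cuda_available"] = torch.cuda.is_available()
    # CUDA version PyTorch was built with (None for CPU-only builds).
    report["cuda_version"] = torch.version.cuda
    return report


if __name__ == "__main__":
    print(check_environment())
```

If `cuda_available` is `False`, the installed PyTorch build or the GPU driver is the first thing to check.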

Download the PourIt! dataset.

Unzip and organize these files in ./data as follows:

    data
    └── PourIt
        ├── seen
        └── unseen

If you want to use your own data, please organize the files as follows:

    data
    └── PourIt_additional
        ├── ori_scene1
        │   ├── water
        │   │   ├── 000000_rgb.png
        │   │   ├── ...
        │   │   └── 000099_rgb.png
        │   │
        │   └── water_no
        │       ├── 000000_rgb.png
        │       ├── ...
        │       └── 000099_rgb.png
        │
        ├── ori_scene2
        ├── ori_scene3
        ├── ...
        └── ori_scene10

Note: The water folder stores the RGB images with flowing liquid, while the water_no folder stores the RGB images without flowing liquid.
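Before running the pre-processing step, the layout described above can be checked with a short script. This is a sketch only and is not part of the repository: the function name `check_scene_layout` is hypothetical, and it assumes that scenes are numbered consecutively from `ori_scene1` and that every image file name ends in `_rgb.png`, as in the tree above.

```python
from pathlib import Path


def check_scene_layout(root, scene_num):
    """Return a list of human-readable problems found under `root`.

    Assumes scenes are named ori_scene1 .. ori_scene<scene_num>, each with a
    `water` and a `water_no` folder that contains *_rgb.png images.
    """
    problems = []
    for idx in range(1, scene_num + 1):
        scene = Path(root) / f"ori_scene{idx}"
        for sub in ("water", "water_no"):
            folder = scene / sub
            if not folder.is_dir():
                problems.append(f"missing folder: {folder}")
            elif not any(folder.glob("*_rgb.png")):
                problems.append(f"no *_rgb.png images in: {folder}")
    return problems


if __name__ == "__main__":
    # 10 matches the number of scenes shown in the example tree.
    for problem in check_scene_layout("data/PourIt_additional", 10):
        print(problem)
```

An empty result means every scene has both folders populated; the scene count passed here should match the number of scenes you actually collected.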

Then run the pre-processing code to process your own data. If you are using the PourIt! dataset, skip this step.

# For example, modify the code

SCENE_NUM = 10

# Then execute

python preprocess/process_origin_data.py

Download the ImageNet-1k pre-trained weights mit_b1.pth from the official SegFormer implementation and move them to ./pretrained.

# train on PourIt! dataset

bash launch/train.sh

# visualize the log

tensorboard --logdir ./logs/pourit_ours/tb_logger --bind_all

# type ${HOST_IP}:6006 into your browser to visualize the training results

# evaluation on PourIt! dataset (seen and unseen scenes)

bash launch/eval.sh

Download the PourIt! pre-trained weights and move them to ./logs/pourit_ours/checkpoints.
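Both the SegFormer backbone weights and the PourIt! checkpoint must sit in the expected folders before training, evaluation, or the demo will work. The sketch below, which is not part of the repository, checks the two locations named in this README. The function name `check_weights` is hypothetical, and because the checkpoint file name is not stated here, the script only lists whatever files it finds in the checkpoint folder.

```python
from pathlib import Path


def check_weights(repo_root="."):
    """Hypothetical helper: report which weight files are in place."""
    root = Path(repo_root)
    backbone = root / "pretrained" / "mit_b1.pth"
    ckpt_dir = root / "logs" / "pourit_ours" / "checkpoints"
    checkpoints = []
    if ckpt_dir.is_dir():
        # The checkpoint file name is not assumed; list every file present.
        checkpoints = sorted(p.name for p in ckpt_dir.iterdir() if p.is_file())
    return {"backbone_found": backbone.is_file(), "checkpoints": checkpoints}


if __name__ == "__main__":
    status = check_weights()
    print("mit_b1.pth found:", status["backbone_found"])
    print("checkpoints found:", status["checkpoints"])
```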

- Set up your camera, e.g. kinect_azure or realsense

# launch ROS node of kinect_azure camera

roslaunch azure_kinect_ros_driver driver.launch

- Run demo.py

python demo.py

# online 2d liquid prediction

predictor.inference(liquid_2d_only=True)

Then you will observe the results of 2D liquid detection.

- Set up your camera, e.g. kinect_azure or realsense

# launch ROS node of kinect_azure camera

roslaunch azure_kinect_ros_driver driver.launch

- Launch your pose estimator, e.g. SAR-Net, to publish the estimated pose transformation of the container '/bottle' versus '/rgb_camera_link' (kinect_azure) or '/camera_color_frame' (realsense)

- Run demo.py

python demo.py
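The pose transformation mentioned above relates the container frame to the camera frame. In ROS, such a transform is commonly delivered as a translation plus a unit quaternion in (x, y, z, w) order. As an illustration only, the sketch below converts that pair into a 4x4 homogeneous matrix in plain Python. The function name `pose_to_matrix` is hypothetical, and whether demo.py performs this conversion in the same way is an assumption, not something stated in this README.

```python
import math


def pose_to_matrix(translation, quaternion):
    """Build a 4x4 homogeneous transform from (tx, ty, tz) and (x, y, z, w)."""
    tx, ty, tz = translation
    x, y, z, w = quaternion
    norm = math.sqrt(x * x + y * y + z * z + w * w)
    if norm == 0.0:
        raise ValueError("quaternion must be non-zero")
    # Normalize so that small numerical drift does not scale the rotation.
    x, y, z, w = x / norm, y / norm, z / norm, w / norm
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w), tx],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w), ty],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y), tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


if __name__ == "__main__":
    # Example values only: a container 0.5 m in front of the camera, unrotated.
    for row in pose_to_matrix((0.0, 0.0, 0.5), (0.0, 0.0, 0.0, 1.0)):
        print(row)
```

A matrix of this form maps points expressed in the container frame into the camera frame by a single matrix-vector product.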

# online 3d liquid prediction

predictor.inference()

# If you don't have a camera available on-the-fly, you can run our example in offline mode.

# offline 3d liquid prediction

predictor.process_metadata_multiple('./examples/src')

The reconstructed 3D point cloud of the liquid will be saved in ./example/dst.

If you find our work helpful, please consider citing:

@InProceedings{Lin_2023_ICCV,

author = {Lin, Haitao and Fu, Yanwei and Xue, Xiangyang},

title = {PourIt!: Weakly-Supervised Liquid Perception from a Single Image for Visual Closed-Loop Robotic Pouring},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2023},

pages = {241-251}

}

Our implementation builds on the code from AFA, and we thank its authors for their work. We also thank Dr. Connor Schenck for providing the UW Liquid Pouring Dataset.