glu-gestures

Note: this repository is kept for historical reference only. Please refer to https://github.com/gsaponaro/tcds-gestures/ for an extended and more complete version of this work.

This repository contains code and material for this paper:

- Giovanni Saponaro, Lorenzo Jamone, Alexandre Bernardino and Giampiero Salvi. Interactive Robot Learning of Gestures, Language and Affordances. International Workshop on Grounding Language Understanding (GLU), INTERSPEECH 2017. [more information on ISCA Archive] [GLU 2017 workshop website]

Description

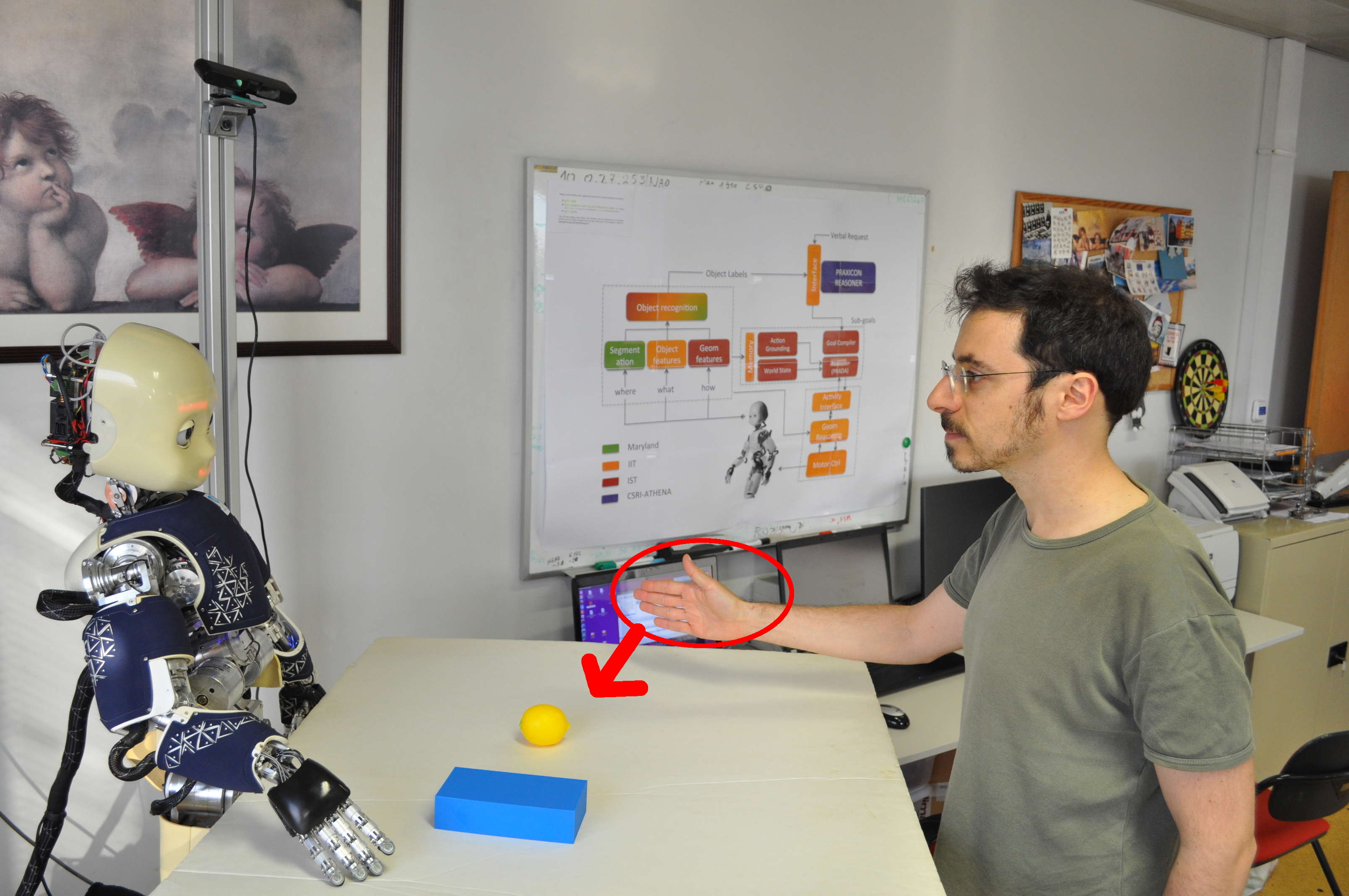

The figure shows the experimental setup: an iCub humanoid robot and a human user performing a manipulation gesture on a shared table with different objects on top. The depth sensor in the top-left corner of the image is used to extract the coordinates of the user's hand for gesture recognition. The resulting effect differs depending on the gesture and on the target object.

License

Released under the terms of the GPL v3.0 or later. See the file LICENSE for details.